OpenShift Applications

- Projects

- Create a project as another user

- Configure project creation

- Operators

- Operator Lifecycle Manager

- ClusterServiceVersions (CSVs)

- Operator Lifecycle Manager architecture

- Operator Lifecycle Manager Operator

- Catalog Operator

- Catalog Registry

- OperatorGroups

- OperatorGroup membership

- Troubleshoot OperatorGroup membership

- Target namespace selection

- OperatorGroup ClusterServiceVersion annotations

- Provided APIs annotation

- Role-based access control

- Copied ClusterServiceVersions

- Static OperatorGroups

- OperatorGroup intersection

- Rules for intersection

- OperatorHub

- Add Operators to a cluster

- Delete Operators from a cluster

- Create applications from installed Operators

- Manage resources from Custom Resource Definitions

- Create applications

- Create an application using the CLI

- Create an application from source code

- Local

- Remote

- Build strategy detection

- Language Detection

- Create an application from an image

- DockerHub MySQL image

- Image in a private registry

- Existing imagestream and optional imagestreamtag

- Create an application from a template

- Template Parameters

- Modify application creation

- Specify environment variables

- Specify build environment variables

- Searching for images, templates, and other inputs

- Install the service catalog

- Provision template applications

- Uninstall the Template Service Broker

- Install the OpenShift Ansible Broker

- Configure the OpenShift Ansible Broker

- Provision service bundles

- Uninstall the OpenShift Ansible Broker

- Understanding Deployments and DeploymentConfigs

- Manage deployment processes

- Manage DeploymentConfigs

- Starting a deployment

- View a deployment

- Retrying a deployment

- Rolling back a deployment

- Execute commands inside a container

- View deployment logs

- Deployment triggers

- Set deployment triggers

- Set deployment resources

- Scaling manually

- Access private repositories from DeploymentConfigs

- Assign pods to specific nodes

- Run a Pod with a different service account

- Use DeploymentConfig strategies

- Use route-based deployment strategies

- Extend the Kubernetes API with Custom Resource Definitions

- Manage resources from Custom Resource Definitions

- Resource quotas per project

- Resource quotas across multiple projects

- Idling applications

- Unidling applications

- Hard pruning the registry

- Operator SDK

- Architecture of the Operator SDK

- Workflow

- Manager file

- Prometheus Operator support

- Install the Operator SDK CLI

- Install from GitHub release

- Install from Homebrew

- Compile and install from source

- Build a Go-based Memcached Operator using the Operator SDK

- Manage a Memcached Operator using the Operator Lifecycle Manager

- Additional resources

- Create Ansible-based Operators

- Ansible support in the Operator SDK

- Custom Resource files

- Watches file

- Advanced options

- Extra variables sent to Ansible

- Ansible Runner directory

- Install the Operator SDK CLI

- Install from GitHub release

- Install from Homebrew

- Compile and install from source

- Build an Ansible-based Operator using the Operator SDK

- Manage application lifecycle using the k8s Ansible module

- Install the k8s Ansible module

- Test the k8s Ansible module locally

- Test the k8s Ansible module inside an Operator

- Testing an Ansible-based Operator locally

- Testing an Ansible-based Operator on a cluster

- Manage Custom Resource status using the k8s_status Ansible module

- Use the k8s_status Ansible module when testing locally

- Additional resources

- Create Helm-based Operators

- Generate a ClusterServiceVersion (CSV)

- How ClusterServiceVersion generation works

- ClusterServiceVersion composition configuration

- Manually-defined ClusterServiceVersion fields

- Generate a CSV

- Custom Resource Definitions (CRDs)

- Owned Custom Resource Definitions

- Required Custom Resource Definitions

- Custom Resource Definition templates

- Understanding your API services

- Owned APIServices

- Required APIServices

- Configure built-in monitoring with Prometheus

- Configure leader election

- Operator SDK CLI reference

- Appendices

Projects

Projects allow user communities to organize and manage content in isolation from other communities.

If allowed by the cluster administrator, we can create a new project.

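For example, to create a project from the CLI, run oc new-project <project_name> --description="<description>" --display-name="<display_name>"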

The number of projects we can create may be limited by the system administrator. After the limit is reached, we might have to delete an existing project before we can create a new one. In the web console, click the Resources button to see workloads in the project, or the Dashboard button to see metrics and details about the project.

When viewing projects, we are restricted to seeing only the projects we have access to view based on the authorization policy.

To view the current project, run oc status.

This command provides a high-level overview of the current project, with its components and their relationships.

When we delete a project, the server changes the project status from Active to Terminating. The server then clears all content from the project while it is in the Terminating state, and finally removes the project itself. While a project is in Terminating status, we cannot add new content to it. Projects can be deleted from the CLI or the web console.

Impersonation allows us to create a project as a different user.

Requests to the OpenShift API can include an Impersonate-User header, which indicates that the requester wants the request handled as though it came from the specified user.

Before User A can impersonate User B, User A must first be authenticated. An authorization check then confirms that User A is allowed to impersonate the user named User B. If User A requests to impersonate a service account, system:serviceaccount:<namespace>:<name>, OpenShift confirms that User A can impersonate the service account named <name> in <namespace>. If the check fails, the request fails with a 403 (Forbidden) error code.
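As a minimal sketch of the name handling described above, the following Python function maps an impersonated username to the object that the authorization check targets. In Kubernetes-style authorization, impersonation is checked as the impersonate verb on users or serviceaccounts. The function name and its (resource, namespace, name) return shape are our own illustrative choices, and the sketch does not perform the check itself.

```python
from typing import Tuple

SA_PREFIX = "system:serviceaccount:"


def impersonation_target(username: str) -> Tuple[str, str, str]:
    """Return (resource, namespace, name) for an impersonation request.

    Service account usernames have the form
    system:serviceaccount:<namespace>:<name>; every other username is
    treated as a cluster-scoped user with an empty namespace.
    """
    if username.startswith(SA_PREFIX):
        namespace, sep, name = username[len(SA_PREFIX):].partition(":")
        if not sep or not namespace or not name:
            raise ValueError(f"malformed service account name: {username!r}")
        return ("serviceaccounts", namespace, name)
    return ("users", "", username)


# User A asking to act as the hypothetical "builder" service account in "myproject":
print(impersonation_target("system:serviceaccount:myproject:builder"))
```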

By default, project administrators and editors can impersonate service accounts in their namespace. The sudoers role allows a user to impersonate system:admin, which in turn has cluster administrator permissions. The ability to impersonate system:admin grants some protection against typos, but not security, for someone administering the cluster. For example, running oc delete nodes --all fails, but running oc delete nodes --all --as=system:admin succeeds.

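We can impersonate a different user when creating a project request. Because system:authenticated:oauth is the only bootstrap group that can create project requests, we must also impersonate that group. For example: oc new-project <project> --as=<user> --as-group=system:authenticated --as-group=system:authenticated:oauth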

In OpenShift, projects are used to group and isolate related objects. When requests are made to create a new project using the web console or oc new-project command, an endpoint in OpenShift is used to provision the project according to a template, which can be customized.

We can allow and configure how developers and service accounts can create, or self-provision, their own projects.

The OpenShift API server automatically provisions new projects based on the project template identified by the projectRequestTemplate parameter in the cluster's project configuration resource. If the parameter is not defined, the API server creates a default template that creates a project with the requested name, and assigns the requesting user to the admin role for that project.

When a project request is submitted, the API substitutes the following parameters into the template:

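Default project template parameters

- PROJECT_NAME: The name of the project. Required.

- PROJECT_DISPLAYNAME: The display name of the project. May be empty.

- PROJECT_DESCRIPTION: The description of the project. May be empty.

- PROJECT_ADMIN_USER: The user name of the administrating user.

- PROJECT_REQUESTING_USER: The user name of the requesting user.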
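OpenShift processes the project template server-side. The substitution step itself can be illustrated with Python's string.Template, which happens to use the same ${NAME} placeholder syntax. The template fragment below is hypothetical and heavily trimmed. It only shows where the request parameters land, for example in the openshift.io/requester annotation.

```python
from string import Template

# Hypothetical, trimmed project template fragment. A real project template is a
# Template object that also contains RoleBindings and other objects.
PROJECT_TEMPLATE = Template("""\
kind: Project
apiVersion: project.openshift.io/v1
metadata:
  name: ${PROJECT_NAME}
  annotations:
    openshift.io/description: "${PROJECT_DESCRIPTION}"
    openshift.io/display-name: "${PROJECT_DISPLAYNAME}"
    openshift.io/requester: ${PROJECT_REQUESTING_USER}
""")


def render_project(params: dict) -> str:
    # substitute() raises KeyError if any placeholder is left without a value.
    return PROJECT_TEMPLATE.substitute(params)


print(render_project({
    "PROJECT_NAME": "demo",
    "PROJECT_DISPLAYNAME": "Demo project",
    "PROJECT_DESCRIPTION": "",
    "PROJECT_REQUESTING_USER": "alice",
}))
```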

Access to the API is granted to developers with the self-provisioner role and the self-provisioners cluster role binding. This role is available to all authenticated developers by default.

We can modify the default project template so that new projects are created using our custom requirements.

To create our own custom project template:

Project configuration resource with custom project template

We can prevent an authenticated user group from self-provisioning new projects.

Review the subjects in the self-provisioners section.

After removing self-provisioning access, consider customizing the project request message to provide more helpful instructions specific to our organization.

When a developer or a service account that is unable to self-provision projects makes a project creation request using the web console or CLI, the following error message is returned by default: You may not request a new project via this API.

Cluster administrators can customize this message. Consider updating it to provide further instructions on how to request a new project specific to our organization. For example:

To customize the project request message:

Project configuration resource with custom project request message

For example:

Operators monitor a Kubernetes environment, such as OpenShift, and use its current state to make decisions in real time, including handling upgrades and reacting to failures. Operators package, deploy, and manage Kubernetes applications.

Operators provide:

Kubernetes (and by extension, OpenShift) contains all of the primitives needed to build complex distributed systems....

An Operator uses the Kubernetes extension mechanism, Custom Resource Definitions (CRDs), so that a custom object, for example MongoDB, looks and acts just like the built-in, native Kubernetes objects.

A Service Broker is a step toward programmatic discovery and deployment of an app. However, because it is not a long-running process, it cannot execute Day 2 operations like upgrade, failover, or scaling. Customizations and parameterization of tunables are provided only at install time, whereas an Operator constantly watches the cluster's current state. Off-cluster services continue to be a good match for a Service Broker, although Operators exist for these as well.

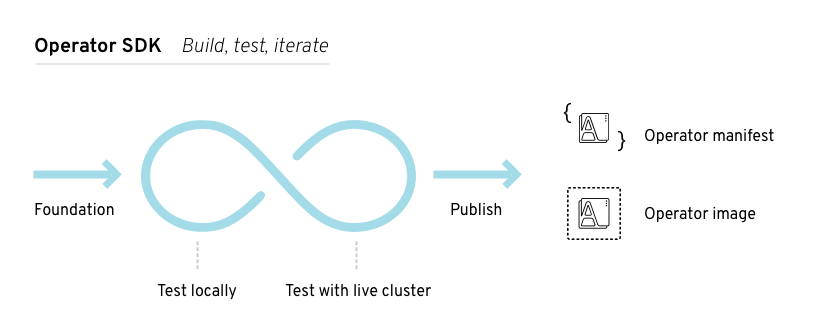

The Operator Framework is a family of open source tools used for testing, delivering, and updating Operators.

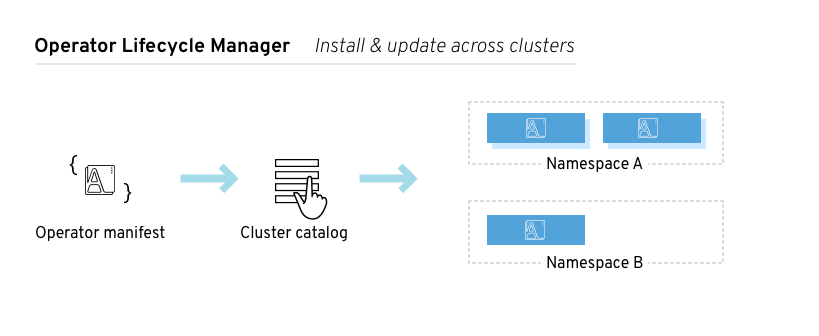

The Operator Lifecycle Manager (OLM) manages the lifecycle of all Operators and their associated services. Operator Lifecycle Manager is part of the Operator Framework used to manage Kubernetes native applications (Operators).

The Operator Lifecycle Manager runs by default in OpenShift 4.1 and is used to install, upgrade, and grant access to Operators running on the cluster. The OpenShift web console provides management screens for cluster administrators to install Operators, as well as to grant specific projects access to the catalog of Operators available on the cluster.

Developers can provision database instances, monitoring, and big data services.

A ClusterServiceVersion (CSV) is a YAML manifest created from Operator metadata that assists the Operator Lifecycle Manager (OLM) in running the Operator in a cluster. ClusterServiceVersions are the metadata that accompany an Operator container image, and are used to populate user interfaces with information like logo, description, and version. ClusterServiceVersions are also a source of technical information needed to run the Operator, like the required RBAC rules and which Custom Resources (CRs) it manages or depends on.

A ClusterServiceVersion is composed of:

The Operator Lifecycle Manager (OLM) is composed of two Operators:

Each of these Operators is responsible for managing the Custom Resource Definitions (CRDs) that are the basis for the Operator Lifecycle Manager framework:

Custom Resource Definitions are managed by Operator Lifecycle Manager and Catalog Operators

Each of these Operators is also responsible for creating resources:

The Operator Lifecycle Manager Operator deploys applications defined by ClusterServiceVersion resources after the required resources specified in the ClusterServiceVersion are present in the cluster.

The Operator Lifecycle Manager Operator is not concerned with the creation of the required resources. Users can create these resources manually using the CLI, or they can create them using the Catalog Operator. This separation of concerns lets users adopt the Operator Lifecycle Manager framework incrementally, choosing how much of it to use for their application.

While the Operator Lifecycle Manager Operator is often configured to watch all namespaces, it can also be operated alongside other Operator Lifecycle Manager Operators so long as they all manage separate namespaces.

OLM Operator workflow

A ClusterServiceVersion must be an active member of an OperatorGroup in order for the install strategy to be run.

The Catalog Operator resolves and installs ClusterServiceVersions and their specified resources. It is also responsible for watching CatalogSources for updates to packages in channels and upgrading them (optionally automatically) to the latest available versions.

To track a package in a channel, create a Subscription resource that configures the desired package, channel, and the CatalogSource from which to pull updates. When updates are found, an appropriate InstallPlan is written into the namespace on behalf of the user.
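A Subscription is an ordinary API object, so it can be generated programmatically. The Python sketch below builds one as a plain dictionary and prints it as JSON, which oc apply -f - also accepts. The field names follow the operators.coreos.com/v1alpha1 Subscription API. The package, channel, catalog, and namespace values in the usage line are hypothetical placeholders.

```python
import json


def subscription(package: str, channel: str, source: str,
                 source_namespace: str, namespace: str,
                 approval: str = "Automatic") -> dict:
    """Build a Subscription that tracks `package` in `channel` of a CatalogSource."""
    if approval not in ("Automatic", "Manual"):
        raise ValueError("installPlanApproval must be 'Automatic' or 'Manual'")
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": package, "namespace": namespace},
        "spec": {
            "name": package,                  # package to track
            "channel": channel,               # channel within the package
            "source": source,                 # CatalogSource to pull updates from
            "sourceNamespace": source_namespace,
            "installPlanApproval": approval,  # Manual: each InstallPlan needs approval
        },
    }


# Hypothetical names; the printed JSON could be piped to `oc apply -f -`.
print(json.dumps(subscription("etcd", "alpha", "my-catalog",
                              "openshift-marketplace", "my-project"), indent=2))
```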

We can also create an InstallPlan resource directly, containing the names of the desired ClusterServiceVersion and an approval strategy, and the Catalog Operator creates an execution plan for the creation of all of the required resources. After approval, the Catalog Operator creates all of the resources in an InstallPlan; this then independently satisfies the Operator Lifecycle Manager Operator, which proceeds to install the ClusterServiceVersions.

Catalog Operator workflow

The Catalog Registry stores Custom Resource Definitions and ClusterServiceVersions for creation in a cluster and stores metadata about packages and channels.

A package manifest is an entry in the Catalog Registry that associates a package identity with sets of ClusterServiceVersions. Within a package, channels point to a particular CSV. Because ClusterServiceVersions explicitly reference the ClusterServiceVersion that they replace, a package manifest provides the Catalog Operator with all of the information required to update a ClusterServiceVersion to the latest version in a channel, stepping through each intermediate version.
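The step-by-step upgrade can be sketched in Python. Start from the CSV at the head of a channel, follow each replaces reference back until the installed CSV is reached, then reverse the walk. The CSV names are hypothetical. The real Catalog Operator carries out updates through InstallPlans, not through a helper like this one.

```python
from typing import Dict, List, Optional


def upgrade_path(installed: str, head: str,
                 replaces: Dict[str, Optional[str]]) -> List[str]:
    """Return the CSVs to install, oldest first, to move from `installed` to `head`.

    `replaces` maps each CSV name to the CSV it replaces (None for the first one).
    """
    path: List[str] = []
    current: Optional[str] = head
    seen = set()
    while current is not None and current != installed:
        if current in seen:
            raise ValueError(f"replaces cycle at {current}")
        seen.add(current)
        path.append(current)
        current = replaces.get(current)
    if current is None:
        raise ValueError(f"{installed} is not in the replaces chain of {head}")
    path.reverse()
    return path


REPLACES = {
    "etcdoperator.v0.9.4": "etcdoperator.v0.9.2",
    "etcdoperator.v0.9.2": "etcdoperator.v0.9.0",
    "etcdoperator.v0.9.0": None,
}
print(upgrade_path("etcdoperator.v0.9.0", "etcdoperator.v0.9.4", REPLACES))
```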

An OperatorGroup is an Operator Lifecycle Manager resource that provides multitenant configuration to Operator Lifecycle Manager-installed Operators. An OperatorGroup selects a set of target namespaces in which to generate required RBAC access for its member Operators. The set of target namespaces is provided by a comma-delimited string stored in the CSV's olm.targetNamespaces annotation. This annotation is applied to member Operators' ClusterServiceVersion instances and is projected into their deployments.
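Because the annotation is projected into the Operator's deployment, an Operator can read it to decide which namespaces to watch. The minimal Python sketch below shows that parsing step. It assumes that an empty string means all namespaces, consistent with the status.namespaces convention for global OperatorGroups. The function name is hypothetical.

```python
from typing import List, Optional


def watched_namespaces(annotation: str) -> Optional[List[str]]:
    """Interpret an olm.targetNamespaces value.

    Returns None for "watch all namespaces" (the empty string), otherwise the
    namespace names from the comma-delimited value.
    """
    names = [ns.strip() for ns in annotation.split(",")]
    if names == [""]:
        return None
    if "" in names:
        raise ValueError(f"empty namespace entry in {annotation!r}")
    return names


print(watched_namespaces("team-a,team-b"))
print(watched_namespaces(""))
```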

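An Operator is considered a member of an OperatorGroup if the following conditions are true:

- The Operator's ClusterServiceVersion exists in the same namespace as the OperatorGroup.

- The InstallModes in the Operator's ClusterServiceVersion support the set of namespaces targeted by the OperatorGroup.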

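An InstallMode consists of an InstallModeType field and a boolean Supported field. A CSV's spec can contain a set of InstallModes of four distinct InstallModeTypes:

- OwnNamespace: The Operator can be a member of an OperatorGroup that selects its own namespace.

- SingleNamespace: The Operator can be a member of an OperatorGroup that selects one namespace.

- MultiNamespace: The Operator can be a member of an OperatorGroup that selects more than one namespace.

- AllNamespaces: The Operator can be a member of an OperatorGroup that selects all namespaces (the target namespace set is the empty string "").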

If a CSV's spec omits an entry of InstallModeType, then that type is considered unsupported unless support can be inferred by an existing entry that implicitly supports it.
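Each InstallModeType corresponds to a shape of an OperatorGroup's target namespace set. The Python sketch below makes that mapping explicit and checks it against a CSV's InstallModes, treating omitted types as unsupported. It deliberately does not model the implicit-support inference mentioned above, and the function names are illustrative.

```python
from typing import Dict, List


def required_install_mode(operator_namespace: str, targets: List[str]) -> str:
    """Return the InstallModeType needed to join a group with these targets."""
    if targets == [""]:
        return "AllNamespaces"            # global OperatorGroup
    if len(targets) > 1:
        return "MultiNamespace"
    if len(targets) == 1:
        if targets[0] == operator_namespace:
            return "OwnNamespace"
        return "SingleNamespace"
    raise ValueError("target namespace set must not be empty")


def supports(install_modes: Dict[str, bool], operator_namespace: str,
             targets: List[str]) -> bool:
    # An InstallModeType missing from the CSV counts as unsupported.
    return install_modes.get(required_install_mode(operator_namespace, targets), False)


# Hypothetical CSV that supports only its own namespace or one other namespace.
modes = {"OwnNamespace": True, "SingleNamespace": True,
         "MultiNamespace": False, "AllNamespaces": False}
print(supports(modes, "operators", ["dev", "qa"]))
```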

Specify the set of namespaces for the OperatorGroup using a label selector with the spec.selector field:

We can also explicitly name the target namespaces using the spec.targetNamespaces field:

If both spec.targetNamespaces and spec.selector are defined, spec.selector is ignored.

Alternatively, we can omit both spec.selector and spec.targetNamespaces to specify a global OperatorGroup, which selects all namespaces:

The resolved set of selected namespaces is shown in an OperatorGroup's status.namespaces field. A global OperatorGroup's status.namespaces field contains the empty string (""), which signals to a consuming Operator that it should watch all namespaces.
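The precedence rules above can be summarized in a short Python sketch that computes the resolved status.namespaces. It is a simplification. Namespaces are passed as a dictionary of names to labels, and only matchLabels-style equality selectors are handled (no matchExpressions).

```python
from typing import Dict, List, Optional


def resolve_targets(namespaces: Dict[str, Dict[str, str]],
                    target_namespaces: Optional[List[str]] = None,
                    match_labels: Optional[Dict[str, str]] = None) -> List[str]:
    """Compute status.namespaces for an OperatorGroup spec.

    `namespaces` maps each namespace name to its labels.
    """
    if target_namespaces:
        # spec.targetNamespaces is set, so spec.selector is ignored.
        return sorted(target_namespaces)
    if match_labels is not None:
        return sorted(
            name for name, labels in namespaces.items()
            if all(labels.get(k) == v for k, v in match_labels.items())
        )
    # Neither field is set: a global OperatorGroup.
    return [""]


cluster = {"dev": {"team": "a"}, "prod": {"team": "b"}, "qa": {"team": "a"}}
print(resolve_targets(cluster, match_labels={"team": "a"}))
```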

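Member ClusterServiceVersions of an OperatorGroup have the following annotations:

- olm.operatorGroup=<group_name>: The name of the OperatorGroup.

- olm.operatorNamespace=<group_namespace>: The namespace of the OperatorGroup.

- olm.targetNamespaces=<target_namespaces>: A comma-delimited string that lists the OperatorGroup's target namespace selection.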

All annotations except olm.targetNamespaces are included with copied ClusterServiceVersions. Omitting the olm.targetNamespaces annotation on copied ClusterServiceVersions prevents the duplication of target namespaces between tenants.

Information about which GroupVersionKinds (GVKs) are provided by an OperatorGroup is shown in an olm.providedAPIs annotation. The annotation's value is a comma-delimited string of <kind>.<version>.<group> entries. It includes the GVKs of the Custom Resource Definitions and APIServices provided by all active member ClusterServiceVersions of the OperatorGroup.
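A group name usually contains dots, while a kind and a version normally do not. Each entry in the annotation can therefore be parsed by splitting it at most twice from the left. A minimal Python sketch follows. The EtcdCluster and EtcdBackup GVKs are only illustrative values.

```python
from typing import List, NamedTuple


class GVK(NamedTuple):
    kind: str
    version: str
    group: str


def parse_provided_apis(value: str) -> List[GVK]:
    """Parse a comma-delimited olm.providedAPIs value into GVKs."""
    if not value:
        return []
    apis = []
    for entry in value.split(","):
        # Split at most twice so dots inside the group name are preserved.
        kind, version, group = entry.split(".", 2)
        apis.append(GVK(kind, version, group))
    return apis


def format_provided_apis(apis: List[GVK]) -> str:
    return ",".join(f"{a.kind}.{a.version}.{a.group}" for a in apis)


value = ("EtcdCluster.v1beta2.etcd.database.coreos.com,"
         "EtcdBackup.v1beta2.etcd.database.coreos.com")
print(parse_provided_apis(value))
```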

Review the following example of an OperatorGroup with a single active member CSV that provides the PackageManifest resource:

When an OperatorGroup is created, three ClusterRoles are generated. Each contains a single AggregationRule with a ClusterRoleSelector set to match a label, as shown below:

The following RBAC resources are generated when a CSV becomes an active member of an OperatorGroup, as long as the ClusterServiceVersion is watching all namespaces with the AllNamespaces InstallMode and is not in a failed state with reason InterOperatorGroupOwnerConflict.

ClusterRoles generated for each API resource from a Custom Resource Definition (four ClusterRoles, each with its own aggregation labels)

ClusterRoles generated for each API resource from an APIService (three ClusterRoles, each with its own aggregation labels)

Additional Roles and RoleBindings

Operator Lifecycle Manager creates copies of all active member ClusterServiceVersions of an OperatorGroup in each of that OperatorGroup's target namespaces. The purpose of a copied ClusterServiceVersion is to tell users of a target namespace that a specific Operator is configured to watch resources created there. Copied ClusterServiceVersions have a status reason Copied and are updated to match the status of their source CSV. The olm.targetNamespaces annotation is stripped from copied ClusterServiceVersions before they are created on the cluster. Omitting the target namespace selection avoids the duplication of target namespaces between tenants. Copied ClusterServiceVersions are deleted when their source ClusterServiceVersion no longer exists or the OperatorGroup that their source ClusterServiceVersion belongs to no longer targets the copied CSV's namespace.
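The copy rules can be sketched in Python, using plain dictionaries to stand in for CSV objects. The sketch makes one copy per target namespace other than the source CSV's own, strips olm.targetNamespaces, and sets the status reason to Copied. It illustrates the documented behavior and is not OLM's implementation. For a global OperatorGroup, the caller would first need to expand the empty-string target into concrete namespace names.

```python
import copy
from typing import Dict, List


def copied_csvs(source: Dict, target_namespaces: List[str]) -> List[Dict]:
    """Return copies of `source` for every target namespace except its own."""
    copies = []
    for ns in target_namespaces:
        if ns == "":
            raise ValueError("expand a global OperatorGroup into namespace names first")
        if ns == source["metadata"]["namespace"]:
            continue  # the source CSV already lives here
        c = copy.deepcopy(source)
        c["metadata"]["namespace"] = ns
        # Copies keep every annotation except olm.targetNamespaces.
        c["metadata"].get("annotations", {}).pop("olm.targetNamespaces", None)
        # Status mirrors the source CSV, but the reason is always Copied.
        c["status"] = {**source.get("status", {}), "reason": "Copied"}
        copies.append(c)
    return copies


SOURCE = {
    "metadata": {
        "name": "etcdoperator.v0.9.4",
        "namespace": "operators",
        "annotations": {"olm.operatorGroup": "my-group",
                        "olm.targetNamespaces": "operators,dev,qa"},
    },
    "status": {"phase": "Succeeded", "reason": "InstallSucceeded"},
}
for c in copied_csvs(SOURCE, ["operators", "dev", "qa"]):
    print(c["metadata"]["namespace"], c["status"]["reason"])
```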

An OperatorGroup is static if its spec.staticProvidedAPIs field is set to true. As a result, Operator Lifecycle Manager does not modify the OperatorGroup's olm.providedAPIs annotation, which means that it can be set in advance. This is useful when a user wants to use an OperatorGroup to prevent resource contention in a set of namespaces but does not have active member ClusterServiceVersions that provide the APIs for those resources.

Below is an example of an OperatorGroup that protects Prometheus resources in all namespaces with the something.cool.io/cluster-monitoring: "true" label:

Two OperatorGroups are said to have intersecting provided APIs if the intersection of their target namespace sets is not an empty set and the intersection of their provided API sets, defined by olm.providedAPIs annotations, is not an empty set.

A potential issue is that OperatorGroups with intersecting provided APIs can compete for the same resources in the set of intersecting namespaces.

When checking intersection rules, an OperatorGroup's namespace is always included as part of its selected target namespaces.

Each time an active member ClusterServiceVersion synchronizes, Operator Lifecycle Manager queries the cluster for the set of intersecting provided APIs between the ClusterServiceVersion's OperatorGroup and all others. Operator Lifecycle Manager then checks if that set is an empty set:
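The intersection test itself is simple set logic. The following Python sketch models an OperatorGroup by its namespace, its resolved target namespaces, and its olm.providedAPIs entries. It always counts the group's own namespace as selected and treats the empty string as all namespaces. The class and function names are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class OperatorGroup:
    namespace: str
    targets: Set[str]                                      # status.namespaces; {""} = all
    provided_apis: Set[str] = field(default_factory=set)   # olm.providedAPIs entries

    def selected(self) -> Set[str]:
        # The group's own namespace always counts as selected.
        return self.targets | {self.namespace}


def namespaces_intersect(a: OperatorGroup, b: OperatorGroup) -> bool:
    if "" in a.targets or "" in b.targets:
        # A global group selects every namespace, including the other group's own.
        return True
    return bool(a.selected() & b.selected())


def provided_apis_intersect(a: OperatorGroup, b: OperatorGroup) -> bool:
    return namespaces_intersect(a, b) and bool(a.provided_apis & b.provided_apis)


ETCD = "EtcdCluster.v1beta2.etcd.database.coreos.com"
team_a = OperatorGroup("team-a", {"team-a", "shared"}, {ETCD})
team_b = OperatorGroup("team-b", {"shared"}, {ETCD})
print(provided_apis_intersect(team_a, team_b))  # True: both select "shared" and provide EtcdCluster
```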

Failure states caused by OperatorGroups are non-terminal.

The following actions are performed each time an OperatorGroup synchronizes:

The Operator Lifecycle Manager exposes certain Operator Lifecycle Manager-specific resources for use by the Prometheus-based OpenShift cluster monitoring stack.

Metrics exposed by Operator Lifecycle Manager

The OperatorHub interface is used to discover and install Operators. With one click, an Operator...

Cluster administrators can choose from OperatorSources grouped into the following categories:

The OperatorHub component is installed and run as an Operator by default on OpenShift in the openshift-marketplace namespace.

The OperatorHub component's Operator manages two Custom Resource Definitions (CRDs): OperatorSource (defined in operatorsource_crd.yaml) and CatalogSourceConfig.

Although some OperatorSource and CatalogSourceConfig information is exposed through the OperatorHub user interface, those files are only used directly by those who are creating their own Operators.

An OperatorSource defines the external data store for Operator bundles. A basic OperatorSource includes:

A CatalogSourceConfig enables Operators that are present in an OperatorSource on the cluster.

A basic CatalogSourceConfig YAML file identifies:

We can install an Operator from the OperatorHub using the OpenShift web console or the CLI. We can then subscribe the Operator to one or more namespaces to make it available for developers on the cluster.

During installation, determine the following initial settings for the Operator:

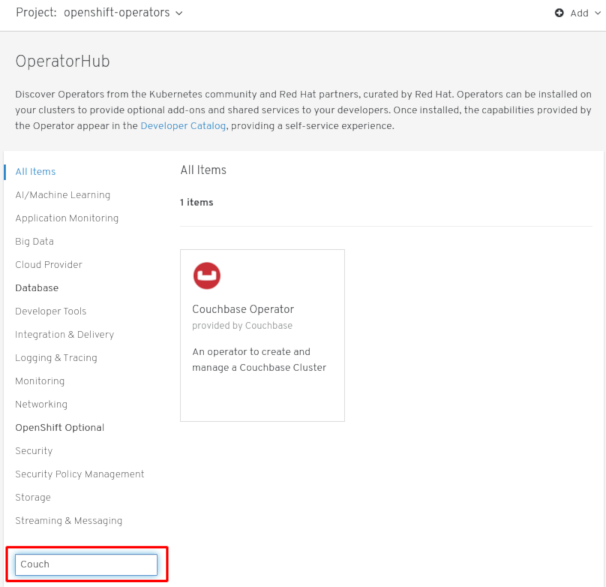

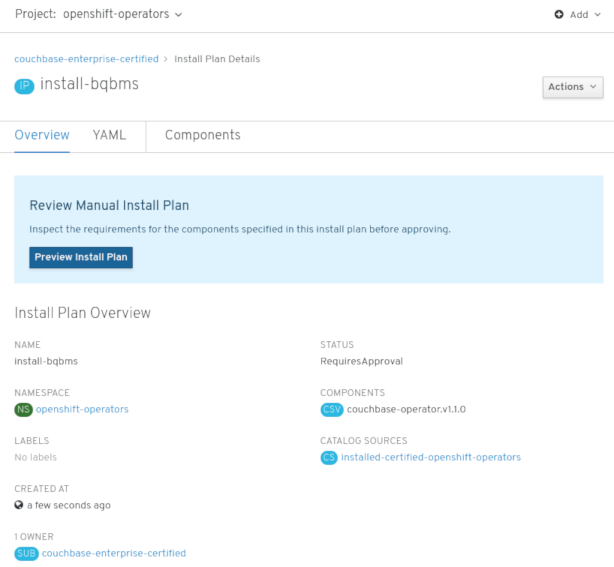

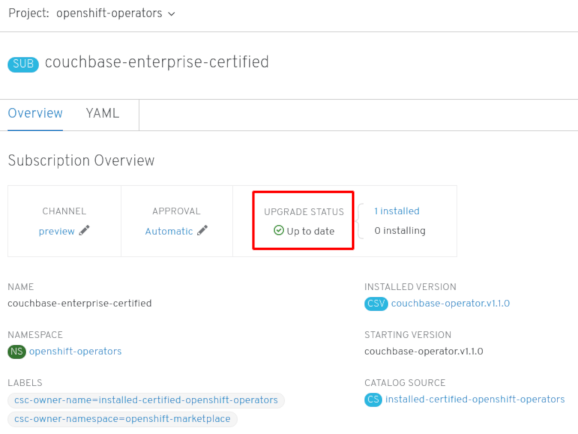

This procedure uses the Couchbase Operator as an example to install and subscribe to an Operator from the OperatorHub using the OpenShift web console.

Prerequisites

Procedure

For a Community Operator, we are warned that Red Hat does not certify those Operators. Acknowledge the warning before continuing. Information about the Operator is displayed.

After approving on the Install Plan page, the Subscription upgrade status moves to Up to date.

For the All namespaces Installation Mode, the status resolves to InstallSucceeded in the openshift-operators namespace, but the status is Copied if we check in other namespaces.

If it does not:

We can install an Operator from the OperatorHub using the CLI with the oc command to create or update a CatalogSourceConfig object, then add a Subscription object.

The web console version of this procedure handles the creation of the CatalogSourceConfig and Subscription objects behind the scenes, so it appears as a single step.

Prerequisites

Procedure

Example CatalogSourceConfig

The Operator generates a CatalogSource from the CatalogSourceConfig in the namespace specified in targetNamespace.

Example Subscription yaml file

At this point, OLM is aware of the selected Operator. A ClusterServiceVersion (CSV) for the Operator should appear in the target namespace, and APIs provided by the Operator should be available for creation.

Example updated CatalogSourceConfig

Additional resources

To delete (uninstall) an Operator from the cluster, we can simply delete the Subscription to remove it from the subscribed namespace. If we want a clean slate, we can also remove the Operator's ClusterServiceVersion and deployment, and then delete the Operator's entry in the CatalogSourceConfig. The following text describes how to delete Operators from a cluster using either the web console or the command line.

To delete an installed Operator from the selected namespace through the web console:

Although the Operator is no longer installed or receiving updates, it still appears in the Operator Catalogs list, ready to be subscribed to again. To remove the Operator from that listing, we can delete the Operator's entry in the CatalogSourceConfig from the command line (as shown in the last step of "Deleting operators from a cluster using the CLI").

Instead of using the OpenShift web console, we can delete an Operator from the cluster with the CLI: delete the Subscription and ClusterServiceVersion from the targetNamespace, then edit the CatalogSourceConfig to remove the Operator's package name.

Prerequisites

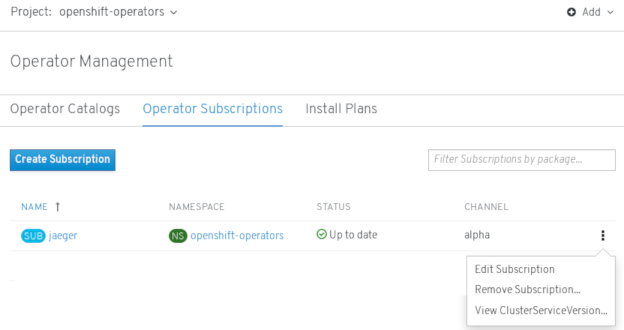

In this example, there are two Operators (Jaeger and Descheduler) installed in the openshift-operators namespace. The goal is to remove Jaeger without removing Descheduler.

Procedure

For example, the spec section might appear as follows:

Example of CatalogSourceConfig

Remove the package from the packages line, as shown:

Save the change and the marketplace-operator will reconcile the CatalogSourceConfig.
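If we script this edit instead of making it by hand, the core operation is removing one name from the packages value. The Python helper below assumes that spec.packages is a comma-separated string of package names. The jaeger and descheduler package names are placeholders for this example.

```python
def remove_package(packages: str, package: str) -> str:
    """Drop `package` from a comma-separated packages value."""
    names = [p.strip() for p in packages.split(",") if p.strip()]
    if package not in names:
        raise KeyError(f"{package!r} is not listed in {packages!r}")
    return ",".join(n for n in names if n != package)


print(remove_package("jaeger,descheduler", "jaeger"))
```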

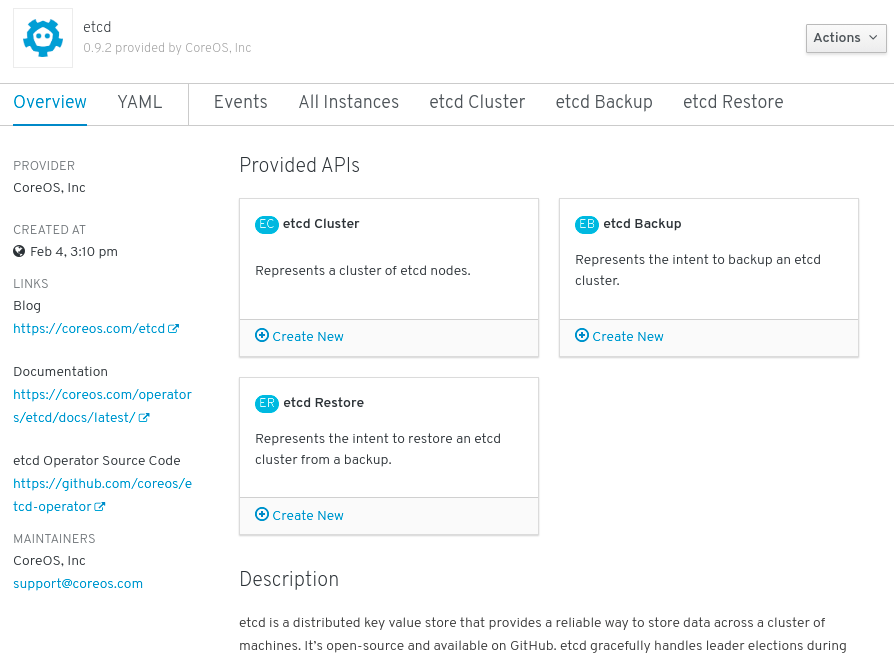

This procedure walks through creating a new etcd cluster using the etcd Operator, managed by the Operator Lifecycle Manager (OLM).

Prerequisites

We can get this list from the CLI using:

As shown under Provided APIs, this Operator makes available three new resource types, including one for an etcd Cluster (the EtcdCluster resource). These objects work similarly to the built-in native Kubernetes objects, such as Deployments or ReplicaSets, but contain logic specific to managing etcd.

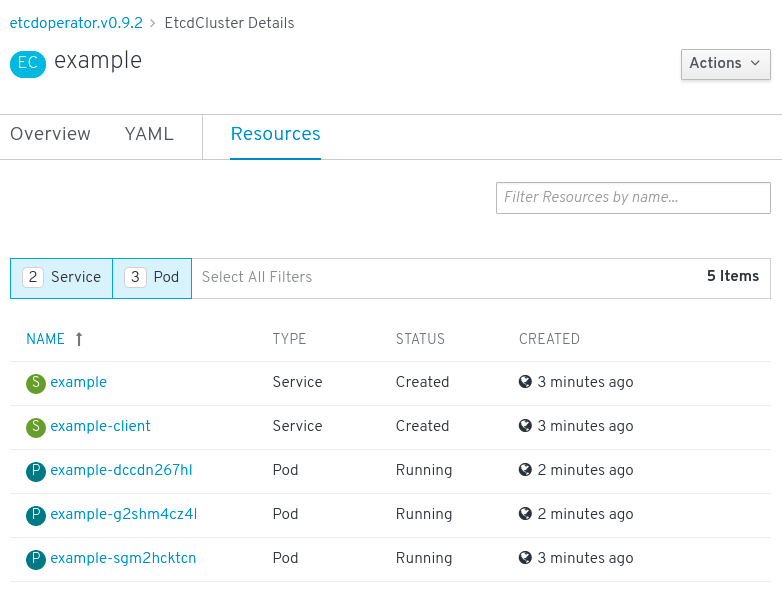

Verify that a Kubernetes service has been created that allows us to access the database from other Pods in our project.

We now have an etcd cluster that reacts to failures and rebalances data as Pods become unhealthy or are migrated between nodes in the cluster. Most importantly, cluster administrators or developers with proper access can now use the database with their applications.

In the Kubernetes API, a resource is an endpoint that stores a collection of API objects of a certain kind. For example, the built-in Pods resource contains a collection of Pod objects.

A Custom Resource Definition (CRD) object defines a new, unique object Kind in the cluster and lets the Kubernetes API server handle its entire lifecycle.

Custom Resource (CR) objects are created from Custom Resource Definitions that have been added to the cluster by a cluster administrator, allowing all cluster users to add the new resource type into projects.

Operators in particular make use of Custom Resource Definitions by packaging them with any required RBAC policy and other software-specific logic. Cluster administrators can also add Custom Resource Definitions manually to the cluster outside of an Operator's lifecycle, making them available to all users.

While only cluster administrators can create Custom Resource Definitions, developers can create the CR from an existing Custom Resource Definition if they have read and write permission to it.

After a Custom Resource Definition (CRD) has been added to the cluster, Custom Resources (CRs) can be created with the CLI from a file using the CR specification.

Prerequisites

Example YAML file for a CR

We can inspect Custom Resource (CR) objects that exist in the cluster using the CLI.

Prerequisites

For example:

Resource names are not case-sensitive, and we can use either the singular or plural forms defined in the Custom Resource Definition, as well as any short name. For example:
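The name matching can be sketched as a case-insensitive lookup against the names declared in a CRD's spec.names (plural, singular, kind, and shortNames). The CronTab definition below is a hypothetical example. Accepting the kind as well is our assumption, based on how kubectl-style clients usually resolve names.

```python
from typing import Dict, List, Optional


def resolve_resource(name: str, crds: List[Dict]) -> Optional[str]:
    """Return the plural resource for `name`, matching case-insensitively."""
    wanted = name.lower()
    for names in crds:  # each entry mirrors a CRD's spec.names
        accepted = {names["plural"], names["singular"], names["kind"],
                    *names.get("shortNames", [])}
        if wanted in {n.lower() for n in accepted}:
            return names["plural"]
    return None


CRONTAB = {"plural": "crontabs", "singular": "crontab",
           "kind": "CronTab", "shortNames": ["ct"]}
for query in ("CronTabs", "crontab", "CT"):
    print(query, "->", resolve_resource(query, [CRONTAB]))
```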

We can create an OpenShift application from components that include source or binary code, images, and templates using the OpenShift CLI. The set of objects created by new-app depends on the artifacts passed as input:

With the new-app command we can create applications from source code in a local or remote Git repository.

The new-app command creates a build configuration, which itself creates a new application image from our source code. The new-app command typically also creates a deployment configuration to deploy the new image, and a service to provide load-balanced access to the deployment running the image.

OpenShift automatically detects whether the Pipeline or Source build strategy should be used, and in the case of Source builds, detects an appropriate language builder image.

To create an application from a Git repository in a local directory:

For a local Git repository, the repository must have a remote named origin that points to a URL that is accessible by the OpenShift cluster. If there is no recognized remote, running the new-app command will create a binary build.

To create an application from a remote Git repository:

To create an application from a private remote Git repository:

For a private remote Git repository, we can use the --source-secret flag to specify an existing source clone secret that will get injected into the BuildConfig to access the repository.

We can use a subdirectory of the source code repository by specifying a --context-dir flag. To create an application from a remote Git repository and a context subdirectory:

Also, when specifying a remote URL, we can specify a Git branch to use by appending #<branch_name> to the end of the URL:
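Splitting such an argument back into repository and branch means cutting it at the first #. The following is a minimal Python sketch with a hypothetical helper name. It uses the public ruby-hello-world sample repository only as an example URL.

```python
from typing import Optional, Tuple


def split_branch(source: str) -> Tuple[str, Optional[str]]:
    """Split '<repository-url>#<branch>' into (repository_url, branch)."""
    repo, _, branch = source.partition("#")
    return repo, (branch or None)


print(split_branch("https://github.com/openshift/ruby-hello-world.git#beta4"))
```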

If a Jenkinsfile exists in the root or specified context directory of the source repository when creating a new application, OpenShift generates a Pipeline build strategy.

Otherwise, it generates a Source build strategy.

Override the build strategy by setting the --strategy flag to either pipeline or source.
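The detection rule above can be sketched in Python over a set of repository-relative file paths, so no clone is needed. The override argument plays the role of --strategy. Only the two strategies named in this section are modeled, and the function name is illustrative.

```python
import posixpath
from typing import Iterable, Optional


def detect_strategy(files: Iterable[str], context_dir: str = "",
                    override: Optional[str] = None) -> str:
    """Pick 'pipeline' or 'source' for a repository.

    `files` holds repository-relative paths; `override` stands in for --strategy.
    """
    if override is not None:
        if override not in ("pipeline", "source"):
            raise ValueError("override must be 'pipeline' or 'source'")
        return override
    # Look for a Jenkinsfile in the context directory, or the root if none is given.
    jenkinsfile = posixpath.normpath(posixpath.join(context_dir, "Jenkinsfile"))
    return "pipeline" if jenkinsfile in set(files) else "source"


repo = {"Jenkinsfile", "app/Gemfile"}
print(detect_strategy(repo), detect_strategy(repo, context_dir="app"))
```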

The oc command requires that files containing build sources are available in a remote Git repository. For all source builds, we can run git remote -v in the local repository to verify that such a remote is configured.

If we use the Source build strategy, new-app attempts to determine the language builder to use by the presence of certain files in the root or specified context directory of the repository:

Languages Detected by new-app

After a language is detected, new-app searches the OpenShift server for imagestreamtags that have a supports annotation matching the detected language, or an imagestream that matches the name of the detected language. If a match is not found, new-app searches the Docker Hub registry for an image that matches the detected language based on name.
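The marker-file step of language detection can be sketched in Python. The mapping below is an illustrative subset of our understanding of the Languages Detected by new-app table; the real table is longer. The first-match ordering is an assumption of this sketch, and the later imagestream and Docker Hub search is not modeled.

```python
import posixpath
from typing import Iterable, Optional

# Illustrative subset of marker files, checked in this order.
LANGUAGE_MARKERS = {
    "jee": ["pom.xml"],
    "nodejs": ["package.json"],
    "php": ["composer.json", "index.php"],
    "python": ["requirements.txt", "setup.py"],
    "ruby": ["Gemfile", "Rakefile", "config.ru"],
}


def detect_language(files: Iterable[str], context_dir: str = "") -> Optional[str]:
    """Return the first language whose marker file is in the root or context dir."""
    present = set(files)
    for language, markers in LANGUAGE_MARKERS.items():
        for marker in markers:
            if posixpath.normpath(posixpath.join(context_dir, marker)) in present:
                return language
    return None


print(detect_language({"requirements.txt", "app.py"}))
```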

We can override the image the builder uses for a particular source repository by specifying the image, either an imagestream or container specification, and the repository with a ~ as a separator. Note that if this is done, build strategy detection and language detection are not carried out.
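The <image>~<repository> form can be split at the first ~, since image references do not contain that character. The Python sketch below, with a hypothetical function name, returns no builder when the ~ is absent. In that case new-app would run its usual detection.

```python
from typing import Optional, Tuple


def split_builder(arg: str) -> Tuple[Optional[str], str]:
    """Split '<image>~<repository>' into (builder_image, repository).

    Without '~' there is no explicit builder image.
    """
    image, sep, repo = arg.partition("~")
    if not sep:
        return None, arg
    if not image or not repo:
        raise ValueError(f"expected <image>~<repository>, got {arg!r}")
    return image, repo


print(split_builder("myproject/my-ruby~https://github.com/openshift/ruby-hello-world.git"))
```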

For example, to use the myproject/my-ruby imagestream with the source in a remote repository:

To use the `openshift/ruby-20-centos7:latest` container imagestream with the source in a local repository:

Language detection requires the Git client to be locally installed so that the repository can be cloned and inspected. If Git is not available, we can avoid the language detection step by specifying the builder image to use with the repository with the <image>~<repository> syntax.

The -i <image> <repository> invocation requires new-app to clone the repository in order to determine what type of artifact it is, so this fails if Git is not available.

The -i <image> --code <repository> invocation requires new-app to clone the repository in order to determine whether the image should be used as a builder for the source code, or deployed separately, as in the case of a database image.

We can deploy an application from an existing image. Images can come from imagestreams in the OpenShift server, images in a specific registry, or images in the local Docker server.

The new-app command attempts to determine the type of image specified in the arguments passed to it. However, we can explicitly tell new-app whether the image is a container image using the --docker-image argument or an imagestream using the -i|--image argument.

If we specify an image from the local Docker repository, we must ensure that the same image is available to the OpenShift cluster nodes.

Create an application from the DockerHub MySQL image, for example:

To create an application using an image in a private registry, specify the full container image specification:

Create an application from an existing imagestream and optional imagestreamtag:

We can create an application from a previously stored template or from a template file, by specifying the name of the template as an argument. For example, we can store a sample application template and use it to create an application.

Create an application from a stored template, for example:

To directly use a template in the local file system, without first storing it in OpenShift, use the -f|--file argument. For example:

When creating an application based on a template, use the -p|--param argument to set parameter values defined by the template:

We can store parameters in a file, then use that file with --param-file when instantiating a template. To read the parameters from standard input, use --param-file=-:
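A parameter file holds one NAME=VALUE pair per line, as in the helloworld.params example. The following is a minimal Python sketch that reads such a file into -p arguments. The function names are hypothetical. It assumes each line splits on the first = sign and that blank lines are ignored; oc's own parser may differ in details such as quoting.

```python
from typing import Dict, Iterable, List


def parse_param_lines(lines: Iterable[str]) -> Dict[str, str]:
    """Parse NAME=VALUE lines (one per line) into parameter values."""
    params: Dict[str, str] = {}
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line.strip():
            continue  # ignore blank lines
        name, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected NAME=VALUE, got {line!r}")
        # Only the first '=' separates name from value; the value may contain '='.
        params[name.strip()] = value
    return params


def to_param_flags(params: Dict[str, str]) -> List[str]:
    """Render parameters as repeated '-p NAME=VALUE' arguments."""
    flags: List[str] = []
    for name, value in params.items():
        flags += ["-p", f"{name}={value}"]
    return flags


if __name__ == "__main__":
    sample = ["ADMIN_USERNAME=admin\n", "ADMIN_PASSWORD=mypassword\n"]
    print(["oc", "new-app", "ruby-helloworld-sample"]
          + to_param_flags(parse_param_lines(sample)))
```

Passing sys.stdin to parse_param_lines mirrors what --param-file=- does with standard input.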

The new-app command generates OpenShift objects that build, deploy, and run the application that is created. Normally, these objects are created in the current project and assigned names that are derived from the input source repositories or the input images. However, with new-app we can modify this behavior.

new-app output objects

When generating applications from a template, source, or an image, we can use the -e|--env argument to pass environment variables to the application container at run time:

The variables can also be read from a file using the --env-file argument:

Additionally, environment variables can be given on standard input by using --env-file=-:

Any BuildConfig objects created as part of new-app processing are not updated with environment variables passed with the -e|--env or --env-file argument.

When generating applications from a template, source, or an image, we can use the --build-env argument to pass environment variables to the build container at run time:

The variables can also be read from a file using the --build-env-file argument:

Additionally, environment variables can be given on standard input by using --build-env-file=-:

When generating applications from source, images, or templates, we can use the -l|--label argument to add labels to the created objects. Labels make it easy to collectively select, configure, and delete objects associated with the application.

To see a dry run of the new-app command, we can use the -o|--output argument with a yaml or json value. We can then use the output to preview the objects that would be created, or redirect it to a file that we can edit. After we are satisfied, we can use oc create to create the OpenShift objects.
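With the json form, the preview can also be inspected programmatically. The sketch below assumes the output is a single JSON document of kind List whose items are the generated objects. summarize_objects is a hypothetical helper, and the sample is a heavily trimmed stand-in, not real new-app output.

```python
import json
from typing import List, Tuple


def summarize_objects(doc: dict) -> List[Tuple[str, str]]:
    """Return (kind, name) for each object in a List document (or a single object)."""
    items = doc.get("items", []) if doc.get("kind") == "List" else [doc]
    return [(obj.get("kind", "?"), obj.get("metadata", {}).get("name", "?"))
            for obj in items]


if __name__ == "__main__":
    # Hypothetical, heavily trimmed output; real output contains full specs.
    sample = json.loads("""
    {"kind": "List", "apiVersion": "v1", "items": [
      {"kind": "ImageStream", "metadata": {"name": "ruby-hello-world"}},
      {"kind": "BuildConfig", "metadata": {"name": "ruby-hello-world"}},
      {"kind": "DeploymentConfig", "metadata": {"name": "ruby-hello-world"}},
      {"kind": "Service", "metadata": {"name": "ruby-hello-world"}}
    ]}""")
    for kind, name in summarize_objects(sample):
        print(f"{kind}/{name}")
```

To use it on real output, save the preview with oc new-app <source> -o json > myapp.json. Then pass json.load(open("myapp.json")) to summarize_objects.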

To output new-app artifacts to a file, edit them, then create them:

Objects created by new-app are normally named after the source repository, or the image used to generate them. We can set the name of the objects produced by adding a --name flag to the command:

Normally, new-app creates objects in the current project. However, we can create objects in a different project using the -n|--namespace argument:

The new-app command can create multiple applications in a single invocation when it is given multiple arguments. Labels specified on the command line apply to all objects created by the single command. Environment variables apply to all components created from source or images.

To create an application from a source repository and a Docker Hub image:

If a source code repository and a builder image are specified as separate arguments, new-app uses the builder image as the builder for the source code repository. If this is not the intent, specify the required builder image for the source using the ~ separator.

The new-app command can deploy multiple images together in a single Pod. To specify which images to group together, use the + separator. The --group command-line argument can also be used to specify the images that should be grouped together. To group the image built from a source repository with other images, specify its builder image in the group:
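The grouping rules can be modeled as a partition of the new-app arguments into Pods. This hypothetical Python sketch makes two assumptions. First, a source argument written as <builder>~<repository> is referred to in a group by its builder image, as described above. Second, every ungrouped argument gets a Pod of its own. It illustrates the rules and is not oc's implementation.

```python
from typing import Dict, List


def component_key(arg: str) -> str:
    """Name used for an argument inside a group: the builder image for
    '<builder>~<repository>' arguments, otherwise the argument itself."""
    return arg.split("~", 1)[0]


def pod_groups(args: List[str], group_specs: List[str]) -> List[List[str]]:
    """Partition new-app arguments into Pods.

    args: positional arguments, e.g. ["ruby+mysql"] or
          ["ruby~https://github.com/openshift/ruby-hello-world", "mysql"].
    group_specs: values passed with --group, e.g. ["ruby+mysql"].
    """
    by_key: Dict[str, str] = {component_key(a): a for a in args if "+" not in a}
    pods: List[List[str]] = []
    placed = set()
    # Inline "a+b" arguments and --group values each describe one Pod.
    for spec in [a for a in args if "+" in a] + list(group_specs):
        members = spec.split("+")
        pods.append([by_key.get(m, m) for m in members])
        placed.update(members)
    # Anything not mentioned in a group is deployed in a Pod of its own.
    for key, arg in by_key.items():
        if key not in placed:
            pods.append([arg])
    return pods
```

For example, pod_groups(["ruby~https://github.com/openshift/ruby-hello-world", "mysql"], ["ruby+mysql"]) places the source build and mysql in one Pod.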

To deploy an image built from source and an external image together:

To search for images, templates, and other inputs for the oc new-app command, add the --search and --list flags. For example, to find all of the images or templates that include PHP:

Create projects using the web console

Create projects using the CLI

$ oc new-project <project_name> --description="<description>" --display-name="<display_name>"

$ oc new-project hello-openshift --description="My example project" --display-name="Hello OpenShift"

View projects using the web console

View projects using the CLI

$ oc get projects

$ oc project <project_name>

Add to a project

Check project status using the web console

Check project status using the CLI

$ oc status

Delete a project using the web console

Delete a project using the CLI

$ oc delete project <project_name>

Create a project as another user

API impersonation

Impersonate a user when creating a project

$ oc new-project <project> --as=<user> --as-group=system:authenticated --as-group=system:authenticated:oauth

Configure project creation

About project creation

Parameter

Description

PROJECT_NAME

The name of the project. Required.

PROJECT_DISPLAYNAME

The display name of the project. May be empty.

PROJECT_DESCRIPTION

The description of the project. May be empty.

PROJECT_ADMIN_USER

The user name of the administrating user.

PROJECT_REQUESTING_USER

The user name of the requesting user.
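Project request templates reference these parameters with the ${NAME} syntax. Python's string.Template understands the same ${NAME} form, so it can roughly illustrate how the values are substituted. The template fragment below is hypothetical and much shorter than the one produced by oc adm create-bootstrap-project-template. Only the two parameters the table marks as "May be empty" get empty defaults.

```python
from string import Template
from typing import Dict

# Hypothetical, trimmed template fragment for illustration only.
PROJECT_FRAGMENT = """\
apiVersion: project.openshift.io/v1
kind: Project
metadata:
  name: ${PROJECT_NAME}
  annotations:
    openshift.io/display-name: ${PROJECT_DISPLAYNAME}
    openshift.io/description: ${PROJECT_DESCRIPTION}
    openshift.io/requester: ${PROJECT_REQUESTING_USER}
"""

# Parameters the table says may be empty default to an empty string.
OPTIONAL_DEFAULTS = {"PROJECT_DISPLAYNAME": "", "PROJECT_DESCRIPTION": ""}


def render_project(params: Dict[str, str]) -> str:
    if not params.get("PROJECT_NAME"):
        raise ValueError("PROJECT_NAME is required")
    values = {**OPTIONAL_DEFAULTS, **params}
    # substitute() raises KeyError if any ${...} placeholder is left unfilled.
    return Template(PROJECT_FRAGMENT).substitute(values)


if __name__ == "__main__":
    print(render_project({"PROJECT_NAME": "hello-openshift",
                          "PROJECT_DISPLAYNAME": "Hello OpenShift",
                          "PROJECT_REQUESTING_USER": "developer"}))
```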

Modify the template for new projects

$ oc adm create-bootstrap-project-template -o yaml > template.yaml

$ oc create -f template.yaml -n openshift-config

$ oc edit project.config.openshift.io/cluster

apiVersion: config.openshift.io/v1
kind: Project
metadata:
  ...
spec:
  projectRequestTemplate:
    name: <template_name>

Disable project self-provisioning

$ oc describe clusterrolebinding.rbac self-provisioners

Name:         self-provisioners
Labels:       <none>
Annotations:  rbac.authorization.kubernetes.io/autoupdate=true
Role:
  Kind:  ClusterRole
  Name:  self-provisioner
Subjects:
  Kind   Name                        Namespace
  ----   ----                        ---------
  Group  system:authenticated:oauth

$ oc patch clusterrolebinding.rbac self-provisioners -p '{"subjects": null}'

$ oc adm policy remove-cluster-role-from-group self-provisioner system:authenticated:oauth

$ oc edit clusterrolebinding.rbac self-provisioners

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "false"
  ...

$ oc patch clusterrolebinding.rbac self-provisioners -p '{ "metadata": { "annotations": { "rbac.authorization.kubernetes.io/autoupdate": "false" } } }'

$ oc new-project test

Error from server (Forbidden): You may not request a new project via this API.

Customize the project request message

You may not request a new project via this API.

$ oc edit project.config.openshift.io/cluster

apiVersion: config.openshift.io/v1
kind: Project
metadata:
  ...
spec:
  projectRequestMessage: <message_string>

apiVersion: config.openshift.io/v1
kind: Project
metadata:
  ...
spec:
  projectRequestMessage: To request a project, contact your system administrator at projectname@example.com.

Operators

Operator Framework

Operator Lifecycle Manager

ClusterServiceVersions (CSVs)

Operator Lifecycle Manager architecture

Resource

Short name

Owner

Description

ClusterServiceVersion

csv

Operator Lifecycle Manager

Application metadata: name, version, icon, required resources, installation, etc.

InstallPlan

ip

Catalog

Calculated list of resources to be created in order to automatically install or upgrade a CSV.

CatalogSource

catsrc

Catalog

A repository of ClusterServiceVersions, Custom Resource Definitions, and packages that define an application.

Subscription

sub

Catalog

Keeps ClusterServiceVersions up to date by tracking a channel in a package.

OperatorGroup

og

Operator Lifecycle Manager

Configures all Operators deployed in the same namespace as the OperatorGroup object to watch for their Custom Resource (CR) in a list of namespaces or cluster-wide.

Resource

Owner

Deployments

Operator Lifecycle Manager

ServiceAccounts

(Cluster)Roles

(Cluster)RoleBindings

Custom Resource Definitions (CRDs)

Catalog

ClusterServiceVersions (CSVs)

Operator Lifecycle Manager Operator

Catalog Operator

Catalog Registry

OperatorGroups

OperatorGroup membership

InstallModeType

Description

OwnNamespace

The Operator can be a member of an OperatorGroup that selects its own namespace.

SingleNamespace

The Operator can be a member of an OperatorGroup that selects one namespace.

MultiNamespace

The Operator can be a member of an OperatorGroup that selects more than one namespace.

AllNamespaces

The Operator can be a member of an OperatorGroup that selects all namespaces (target namespace set is the empty string "").
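The following Python sketch is a simplified model of how the InstallModeTypes above relate to an OperatorGroup's target namespaces. It is not OLM's code. Two behaviors are assumptions made for the sketch: how it handles an empty selection, and how it handles "" combined with other namespaces.

```python
from typing import Iterable, List

ALL_NAMESPACES = ""  # an AllNamespaces selection is represented as [""]


def supports(install_modes: Iterable[str], operator_ns: str,
             targets: List[str]) -> bool:
    """Simplified model: can an Operator whose CSV lists install_modes join an
    OperatorGroup in operator_ns that selects the target namespaces?"""
    modes = set(install_modes)
    if not targets:
        return False  # assumption: an empty selection is never supported
    if len(targets) == 1:
        target = targets[0]
        if target == ALL_NAMESPACES:
            return "AllNamespaces" in modes
        if target == operator_ns:
            return "OwnNamespace" in modes
        return "SingleNamespace" in modes
    if ALL_NAMESPACES in targets:
        return False  # assumption: "" cannot be mixed with named namespaces
    if "MultiNamespace" not in modes:
        return False
    # Selecting its own namespace alongside others also needs OwnNamespace.
    return operator_ns not in targets or "OwnNamespace" in modes


if __name__ == "__main__":
    print(supports(["OwnNamespace", "SingleNamespace"], "my-namespace",
                   ["my-namespace", "my-other-namespace"]))
```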

Troubleshoot OperatorGroup membership

Target namespace selection

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: my-group
  namespace: my-namespace
spec:
  selector:
    matchLabels:
      cool.io/prod: "true"

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: my-group
  namespace: my-namespace
spec:
  targetNamespaces:
  - my-namespace
  - my-other-namespace
  - my-other-other-namespace

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: my-group
  namespace: my-namespace

OperatorGroup ClusterServiceVersion annotations

Annotation

Description

olm.operatorGroup=<group_name>

Contains the name of the OperatorGroup.

olm.operatorGroupNamespace=<group_namespace>

Contains the namespace of the OperatorGroup.

olm.targetNamespaces=<target_namespaces>

Contains a comma-delimited string that lists the OperatorGroup's target namespace selection.
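Because olm.targetNamespaces is a comma-delimited string, a consumer has to split it. This minimal Python sketch uses a hypothetical read_group_annotations helper. It assumes that an AllNamespaces group carries the empty string as its target namespace value, which matches the table's description of the all-namespaces target set.

```python
from typing import Dict, List, NamedTuple


class GroupInfo(NamedTuple):
    group: str
    namespace: str
    targets: List[str]  # [""] stands for all namespaces


def read_group_annotations(annotations: Dict[str, str]) -> GroupInfo:
    """Read the OperatorGroup annotations from a CSV's metadata.annotations."""
    raw = annotations["olm.targetNamespaces"]
    # "".split(",") == [""], so an all-namespaces value maps to [""].
    targets = [ns.strip() for ns in raw.split(",")]
    return GroupInfo(
        group=annotations["olm.operatorGroup"],
        namespace=annotations["olm.operatorGroupNamespace"],
        targets=targets,
    )
```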

Provided APIs annotation

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  annotations:
    olm.providedAPIs: PackageManifest.v1alpha1.packages.apps.redhat.com
  name: olm-operators
  namespace: local
  ...
spec:
  selector: {}
  serviceAccount:
    metadata:
      creationTimestamp: null
  targetNamespaces:
  - local
status:
  lastUpdated: 2019-02-19T16:18:28Z
  namespaces:
  - local
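Judging from the examples in this section, each olm.providedAPIs entry appears to have the form <Kind>.<version>.<group>, with several entries separated by commas. The hypothetical parser below splits each entry on its first two dots only, because the group (for example packages.apps.redhat.com) itself contains dots.

```python
from typing import List, NamedTuple


class ProvidedAPI(NamedTuple):
    kind: str
    version: str
    group: str


def parse_provided_apis(value: str) -> List[ProvidedAPI]:
    """Parse a comma-separated olm.providedAPIs value."""
    apis: List[ProvidedAPI] = []
    for entry in (e.strip() for e in value.split(",")):
        if not entry:
            continue  # tolerate empty values and trailing commas
        # Split on the first two dots only: the group itself contains dots.
        kind, version, group = entry.split(".", 2)
        apis.append(ProvidedAPI(kind, version, group))
    return apis
```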

Role-based access control

ClusterRole

Label to match

<operatorgroup_name>-admin

olm.opgroup.permissions/aggregate-to-admin: <operatorgroup_name>

<operatorgroup_name>-edit

olm.opgroup.permissions/aggregate-to-edit: <operatorgroup_name>

<operatorgroup_name>-view

olm.opgroup.permissions/aggregate-to-view: <operatorgroup_name>

ClusterRole

Sets

<kind>.<group>-<version>-admin

Verbs on <kind>:

<kind>.<group>-<version>-edit

Verbs on <kind>:

<kind>.<group>-<version>-view

Verbs on <kind>:

<kind>.<group>-<version>-view-crdview

Verbs on apiextensions.k8s.io customresourcedefinitions <crd-name>:

ClusterRole

Sets

<kind>.<group>-<version>-admin

Verbs on <kind>:

<kind>.<group>-<version>-edit

Verbs on <kind>:

<kind>.<group>-<version>-view

Verbs on <kind>:
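The ClusterRole names in these tables follow fixed patterns, so they can be generated. This hypothetical Python sketch applies the patterns literally as the tables write them. OLM may normalize the kind, for example to a lowercase plural resource name, so treat the strings as illustrations of the pattern rather than exact names.

```python
from typing import Dict, List


def api_cluster_roles(kind: str, group: str, version: str,
                      include_crdview: bool = True) -> List[str]:
    """ClusterRole names built from the <kind>.<group>-<version>-... pattern.

    The first table also lists a -view-crdview role; the second omits it.
    """
    base = f"{kind}.{group}-{version}"
    suffixes = ["admin", "edit", "view"]
    if include_crdview:
        suffixes.append("view-crdview")
    return [f"{base}-{suffix}" for suffix in suffixes]


def group_aggregation_labels(operatorgroup: str) -> Dict[str, Dict[str, str]]:
    """Map each <operatorgroup_name>-<role> ClusterRole to its label to match."""
    return {
        f"{operatorgroup}-{role}": {
            f"olm.opgroup.permissions/aggregate-to-{role}": operatorgroup
        }
        for role in ("admin", "edit", "view")
    }
```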

Copied ClusterServiceVersions

Static OperatorGroups

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: cluster-monitoring
  namespace: cluster-monitoring
  annotations:
    olm.providedAPIs: Alertmanager.v1.monitoring.coreos.com,Prometheus.v1.monitoring.coreos.com,PrometheusRule.v1.monitoring.coreos.com,ServiceMonitor.v1.monitoring.coreos.com
spec:
  staticProvidedAPIs: true
  selector:
    matchLabels:
      something.cool.io/cluster-monitoring: "true"

OperatorGroup intersection

Rules for intersection

Metrics

Name

Description

csv_count

Number of ClusterServiceVersions successfully registered.

install_plan_count

Number of InstallPlans.

subscription_count

Number of Subscriptions.

csv_upgrade_count

Monotonic count of ClusterServiceVersion upgrades.

OperatorHub

Category

Description

Red Hat Operators

Red Hat products packaged and shipped by Red Hat. Supported by Red Hat.

Certified Operators

Products from leading independent software vendors (ISVs). Red Hat partners with ISVs to package and ship. Supported by the ISV.

Community Operators

Optionally visible software maintained by relevant representatives in the operator-framework/community-operators GitHub repository. No official support.

Custom Operators

Operators we add to the cluster. If we have not added any Custom Operators, the Custom category does not appear on the OperatorHub page in the web console.

OperatorHub architecture

OperatorSource

Field

Description

type

To identify the data store as an application registry, set type to appregistry.

endpoint

Currently, Quay is the external data store used by the OperatorHub, so the endpoint is set to https://quay.io/cnr for the Quay.io appregistry.

registryNamespace

For a Community Operator, this is set to community-operators.

displayName

Optionally set to a name that appears in the OperatorHub user interface for the Operator.

publisher

Optionally set to the person or organization publishing the Operator, so it can be displayed on the OperatorHub.

CatalogSourceConfig

Field

Description

targetNamespace

Location where the Operator will be deployed and updated, such as openshift-operators. This namespace is watched by the Operator Lifecycle Manager.

packages

Comma-separated list of packages in the Operator.

Add Operators to a cluster

Install Operators from the OperatorHub

Install from the OperatorHub using the web console

Install from the OperatorHub using the CLI

$ oc get packagemanifests -n openshift-marketplace

NAME                   AGE
amq-streams            14h
packageserver          15h
couchbase-enterprise   14h
mongodb-enterprise     14h
etcd                   14h
myoperator             14h
...

apiVersion: operators.coreos.com/v1
kind: CatalogSourceConfig
metadata:
  name: example
  namespace: openshift-marketplace
spec:
  targetNamespace: openshift-operators
  packages: myoperator

$ oc apply -f csc.cr.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: myoperator
  namespace: openshift-operators
spec:
  channel: alpha
  name: myoperator
  source: example
  sourceNamespace: openshift-operators

$ oc apply -f myoperator-sub.yaml

apiVersion: operators.coreos.com/v1
kind: CatalogSourceConfig
metadata:
  name: example
  namespace: openshift-marketplace
spec:
  targetNamespace: global
  packages: myoperator,another-operator

$ oc apply -f csc.cr.yaml

Delete Operators from a cluster

Delete Operators from a cluster using the web console

Delete Operators from a cluster using the CLI

$ oc get subscription jaeger -n openshift-operators -o yaml | grep currentCSV

currentCSV: jaeger-operator.v1.8.2
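The grep approach depends on the YAML layout. As an alternative sketch, the same value can be read from JSON output (oc get subscription jaeger -n openshift-operators -o json). This assumes the name sits at status.currentCSV. current_csv is a hypothetical helper.

```python
import json
from typing import Any, Dict


def current_csv(subscription: Dict[str, Any]) -> str:
    """Return status.currentCSV from a Subscription object."""
    try:
        return subscription["status"]["currentCSV"]
    except KeyError:
        raise ValueError("Subscription has no status.currentCSV yet") from None


if __name__ == "__main__":
    # Trimmed, hypothetical Subscription document for illustration.
    sample = json.loads(
        '{"kind": "Subscription", "status": {"currentCSV": "jaeger-operator.v1.8.2"}}')
    print(current_csv(sample))
```

The returned name is the value to pass to oc delete clusterserviceversion.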

$ oc delete subscription jaeger -n openshift-operators

subscription.operators.coreos.com "jaeger" deleted

$ oc delete clusterserviceversion jaeger-operator.v1.8.2 -n openshift-operators

clusterserviceversion.operators.coreos.com "jaeger-operator.v1.8.2" deleted

$ oc get catalogsourceconfig -n openshift-marketplace \
    installed-community-openshift-operators -o yaml

spec:
  csDisplayName: Community Operators
  csPublisher: Community
  packages: jaeger,descheduler
  targetNamespace: openshift-operators

$ oc edit catalogsourceconfig -n openshift-marketplace installed-community-openshift-operators

packages: descheduler

$ oc delete catalogsourceconfig -n openshift-marketplace installed-community-openshift-operators
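Editing the packages field amounts to removing one name from a comma-separated list. The following is a minimal Python sketch with hypothetical helpers. When the result is empty, no packages remain. That case presumably corresponds to deleting the whole CatalogSourceConfig rather than editing it.

```python
from typing import List


def split_packages(value: str) -> List[str]:
    """Split a CatalogSourceConfig packages value into package names."""
    return [p.strip() for p in value.split(",") if p.strip()]


def remove_package(value: str, package: str) -> str:
    """Return the packages value without `package` ("" if none remain)."""
    return ",".join(p for p in split_packages(value) if p != package)


def add_package(value: str, package: str) -> str:
    """Return the packages value with `package` appended if it is missing."""
    packages = split_packages(value)
    if package not in packages:
        packages.append(package)
    return ",".join(packages)
```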

Create applications from installed Operators

Create an etcd cluster using an Operator

$ oc get csv

$ oc policy add-role-to-user edit <user> -n <target_project>

Manage resources from Custom Resource Definitions

Create Custom Resources from a file

apiVersion: "stable.example.com/v1"
kind: CronTab
metadata:
  name: my-new-cron-object
  finalizers:
  - finalizer.stable.example.com
spec:
  cronSpec: "* * * * */5"
  image: my-cron-image

$ oc create -f <file_name>.yaml

Inspect Custom Resources

$ oc get <kind>

$ oc get crontab

NAME                 KIND
my-new-cron-object   CronTab.v1.stable.example.com

$ oc get crontabs

$ oc get crontab

$ oc get ct

$ oc get <kind> -o yaml

$ oc get ct -o yaml

apiVersion: v1
items:
- apiVersion: stable.example.com/v1
  kind: CronTab
  metadata:
    clusterName: ""
    creationTimestamp: 2017-05-31T12:56:35Z
    deletionGracePeriodSeconds: null
    deletionTimestamp: null
    name: my-new-cron-object
    namespace: default
    resourceVersion: "285"
    selfLink: /apis/stable.example.com/v1/namespaces/default/crontabs/my-new-cron-object
    uid: 9423255b-4600-11e7-af6a-28d2447dc82b
  spec:
    cronSpec: '* * * * */5'
    image: my-cron-image

Create applications

Create an application using the CLI

Create an application from source code

Local

$ oc new-app /<path to source code>

Remote

$ oc new-app https://github.com/sclorg/cakephp-ex

$ oc new-app https://github.com/youruser/yourprivaterepo --source-secret=yoursecret

$ oc new-app https://github.com/sclorg/s2i-ruby-container.git \
    --context-dir=2.0/test/puma-test-app

$ oc new-app https://github.com/openshift/ruby-hello-world.git#beta4

Build strategy detection

$ oc new-app /home/user/code/myapp --strategy=docker

Language Detection

Language

Files

dotnet

project.json, *.csproj

jee

pom.xml

nodejs

app.json, package.json

perl

cpanfile, index.pl

php

composer.json, index.php

python

requirements.txt, setup.py

ruby

Gemfile, Rakefile, config.ru

scala

build.sbt

golang

Godeps, main.go
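The table can be read as a mapping from languages to file-name patterns. This Python sketch encodes that mapping directly. As a simplification, it only matches names in the repository's top-level directory and returns every language that matches. How new-app searches the tree and breaks ties is not described here.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List

# File patterns from the language detection table, in table order.
LANGUAGE_FILES = {
    "dotnet": ["project.json", "*.csproj"],
    "jee": ["pom.xml"],
    "nodejs": ["app.json", "package.json"],
    "perl": ["cpanfile", "index.pl"],
    "php": ["composer.json", "index.php"],
    "python": ["requirements.txt", "setup.py"],
    "ruby": ["Gemfile", "Rakefile", "config.ru"],
    "scala": ["build.sbt"],
    "golang": ["Godeps", "main.go"],
}


def detect_languages(filenames: Iterable[str]) -> List[str]:
    """Return every language whose marker files appear among filenames."""
    names = list(filenames)
    return [lang for lang, patterns in LANGUAGE_FILES.items()
            if any(fnmatchcase(n, p) for n in names for p in patterns)]
```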

$ oc new-app myproject/my-ruby~https://github.com/openshift/ruby-hello-world.git

$ oc new-app openshift/ruby-20-centos7:latest~/home/user/code/my-ruby-app

Create an application from an image

DockerHub MySQL image

$ oc new-app mysql

Image in a private registry

$ oc new-app myregistry:5000/example/myimage

Existing imagestream and optional imagestreamtag

$ oc new-app my-stream:v1

Create an application from a template

$ oc create -f examples/sample-app/application-template-stibuild.json

$ oc new-app ruby-helloworld-sample

$ oc new-app -f examples/sample-app/application-template-stibuild.json

Template Parameters

$ oc new-app ruby-helloworld-sample -p ADMIN_USERNAME=admin -p ADMIN_PASSWORD=mypassword

$ cat helloworld.params

ADMIN_USERNAME=admin

ADMIN_PASSWORD=mypassword

$ oc new-app ruby-helloworld-sample --param-file=helloworld.params

$ cat helloworld.params | oc new-app ruby-helloworld-sample --param-file=-

Modify application creation

Object

Description

BuildConfig

A BuildConfig is created for each source repository that is specified in the command line. The BuildConfig specifies the strategy to use, the source location, and the build output location.

ImageStreams

For BuildConfig, two ImageStreams are usually created. One represents the input image. With Source builds, this is the builder image. With Docker builds, this is the FROM image. The second one represents the output image. If a container image was specified as input to new-app, then an imagestream is created for that image as well.

DeploymentConfig

A DeploymentConfig is created either to deploy the output of a build, or a specified image. The new-app command creates emptyDir volumes for all Docker volumes that are specified in containers included in the resulting DeploymentConfig.

Service

The new-app command attempts to detect exposed ports in input images. It uses the lowest numeric exposed port to generate a service that exposes that port. To expose a different port after new-app has completed, use the oc expose command to generate additional services.

Other

Other objects can be generated when instantiating templates, according to the template.
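The Service rule picks the lowest numeric exposed port. The hypothetical helper below illustrates that rule. It assumes exposed ports are given as strings such as 8080/tcp. Comparing numerically matters, because compared as strings, 10000 would sort before 9000.

```python
from typing import Iterable, Optional


def service_port(exposed_ports: Iterable[str]) -> Optional[int]:
    """Pick the lowest numeric port from entries like '8080/tcp' or '443'."""
    numbers = [int(p.split("/", 1)[0]) for p in exposed_ports]
    return min(numbers) if numbers else None
```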

Specify environment variables

$ oc new-app openshift/postgresql-92-centos7 \
    -e POSTGRESQL_USER=user \
    -e POSTGRESQL_DATABASE=db \
    -e POSTGRESQL_PASSWORD=password

$ cat postgresql.env

POSTGRESQL_USER=user

POSTGRESQL_DATABASE=db

POSTGRESQL_PASSWORD=password

$ oc new-app openshift/postgresql-92-centos7 --env-file=postgresql.env

$ cat postgresql.env | oc new-app openshift/postgresql-92-centos7 --env-file=-

Specify build environment variables

$ oc new-app openshift/ruby-23-centos7 --build-env HTTP_PROXY=http://myproxy.net:1337/ --build-env GEM_HOME=~/.gem

$ cat ruby.env

HTTP_PROXY=http://myproxy.net:1337/

GEM_HOME=~/.gem

$ oc new-app openshift/ruby-23-centos7 --build-env-file=ruby.env

$ cat ruby.env | oc new-app openshift/ruby-23-centos7 --build-env-file=-

Specify labels

$ oc new-app https://github.com/openshift/ruby-hello-world -l name=hello-world

View the output without creation

$ oc new-app https://github.com/openshift/ruby-hello-world \
    -o yaml > myapp.yaml

$ vi myapp.yaml

$ oc create -f myapp.yaml

Create objects with different names

$ oc new-app https://github.com/openshift/ruby-hello-world --name=myapp

Create objects in a different project

$ oc new-app https://github.com/openshift/ruby-hello-world -n myproject

Create multiple objects

$ oc new-app https://github.com/openshift/ruby-hello-world mysql

Grouping images and source in a single Pod

$ oc new-app ruby+mysql

$ oc new-app \
    ruby~https://github.com/openshift/ruby-hello-world \
    mysql \
    --group=ruby+mysql

Searching for images, templates, and other inputs

$ oc new-app --search php

Install the service catalog

The service catalog is deprecated in OpenShift 4. Equivalent and better functionality is present in the Operator Framework and Operator Lifecycle Manager (OLM).

When developing microservices-based applications to run on cloud native platforms, there are many ways to provision different resources and share their coordinates, credentials, and configuration, depending on the service provider and the platform.

To give developers a more seamless experience, OpenShift includes a service catalog, an implementation of the Open Service Broker API (OSB API) for Kubernetes. This allows users to connect any of their applications deployed in OpenShift to a wide variety of service brokers.

The service catalog allows cluster administrators to integrate multiple platforms using a single API specification. The OpenShift web console displays the cluster service classes offered by service brokers in the service catalog, allowing users to discover and instantiate those services for use with their applications.

As a result, service users benefit from ease and consistency of use across different types of services from different providers, while service providers benefit from having one integration point that gives them access to multiple platforms.

The service catalog is not installed by default in OpenShift 4.

Install service catalog

If we plan to use any of the services from the OpenShift Ansible Broker or Template Service Broker, we must install the service catalog by completing the following steps.

The custom resources for the service catalog's API server and controller manager are created by default in OpenShift, but initially have a managementState of Removed. To install the service catalog, we must change the managementState for these resources to Managed.

- Enable the service catalog API server.

- Edit the service catalog API server resource.

- $ oc edit servicecatalogapiservers

- Under spec, set the managementState field to Managed:

spec:
  logLevel: Normal
  managementState: Managed

- Save the file to apply the changes.

The Operator installs the service catalog API server component. As of OpenShift 4, this component is installed into the openshift-service-catalog-apiserver namespace.


- Enable the service catalog controller manager.

- Edit the service catalog controller manager resource.

- $ oc edit servicecatalogcontrollermanagers

- Under spec, set the managementState field to Managed:

spec:
  logLevel: Normal
  managementState: Managed

- Save the file to apply the changes.

The Operator installs the service catalog controller manager component. As of OpenShift 4, this component is installed into the openshift-service-catalog-controller-manager namespace.


Install the Template Service Broker

We can install the Template Service Broker to gain access to the template applications that it provides.

The Template Service Broker is deprecated in OpenShift 4. Equivalent and better functionality is present in the Operator Framework and Operator Lifecycle Manager (OLM).

Prerequisites

About the Template Service Broker

The Template Service Broker gives the service catalog visibility into the default Instant App and Quickstart templates that have shipped with OpenShift since its initial release. The Template Service Broker can also make available as a service anything for which an OpenShift template has been written, whether provided by Red Hat, a cluster administrator or user, or a third-party vendor.

By default, the Template Service Broker shows objects that are globally available from the openshift project. It can also be configured to watch any other project that a cluster administrator chooses.

The Template Service Broker is not installed by default in OpenShift 4.

Install the Template Service Broker Operator

Prerequisites

- You have installed the service catalog.

The following procedure installs the Template Service Broker Operator using the web console.

- Create a namespace.

- Navigate in the web console to Administration - Namespaces and click Create Namespace.

- Enter openshift-template-service-broker in the Name field and click Create.

The namespace must start with openshift-.

- From the web console navigate to the Catalog - OperatorHub page. Verify that the openshift-template-service-broker project is selected.

- Select Template Service Broker Operator.

- Read the information about the Operator and click Install.

- Review the default selections and click Subscribe.

Next, we must start the Template Service Broker in order to access the template applications it provides.

Starting the Template Service Broker

After we have installed the Template Service Broker Operator, we can start the Template Service Broker using the following procedure.

Prerequisites

- You have installed the service catalog.

- You have installed the Template Service Broker Operator.

- Navigate in the web console to Catalog - Installed Operators and select the openshift-template-service-broker project.

- Select the Template Service Broker Operator.

- Under Provided APIs, click Create New for Template Service Broker.

- Review the default YAML and click Create.

- Verify that the Template Service Broker has started.

After the Template Service Broker has started, we can view the available template applications by navigating to Catalog - Developer Catalog and selecting the Service Class checkbox. Note that it may take a few minutes for the Template Service Broker to start and the template applications to be available.

If we do not yet see these Service classes, we can check the status of the following items:

- Template Service Broker Pod status

- From the Workloads - Pods page for the openshift-template-service-broker project, verify that the Pod that starts with apiserver- has a status of Running and readiness of Ready.

- Cluster service broker status

- From the Catalog - Broker Management - Service Brokers page, verify that the template-service-broker service broker has a status of Ready.

- Service catalog controller manager Pod logs

- From the Workloads - Pods page for the openshift-service-catalog-controller-manager project, review the logs for each of the Pods and verify that you see a log entry with the message Successfully fetched catalog entries from broker.


Provision template applications


The following procedure provisions an example PostgreSQL template application that was made available by the Template Service Broker.

Prerequisites

- The service catalog is installed.

- The Template Service Broker is installed.

- Create a project.

- Navigate in the web console to Home - Projects and click Create Project.

- Enter test-postgresql in the Name field and click Create.

- Create a service instance.

- From the web console navigate to the Catalog - Developer Catalog page.

- Select the PostgreSQL (Ephemeral) template application and click Create Service Instance.

- Review the default selections and set any other required fields, and click Create.

- Go to Catalog - Provisioned Services and verify that the postgresql-ephemeral service instance is created and has a status of Ready.

We can check the progress on the Home - Events page. After a few moments, you should see an event for postgresql-ephemeral with the message "The instance was provisioned successfully".

- Create a service binding.

- From the Provisioned Services page, click postgresql-ephemeral and click Create Service Binding.

- Review the default service binding name and click Create.

This creates a new secret for binding using the name provided.

- Review the secret that was created.

- From the web console navigate to Workloads - Secrets and verify that a secret named postgresql-ephemeral was created.

- Click postgresql-ephemeral and review the key-value pairs in the Data section, which are used for binding to other apps.

Uninstall the Template Service Broker

We can uninstall the Template Service Broker if we no longer require access to the template applications that it provides.

The Template Service Broker is deprecated in OpenShift 4. Equivalent and better functionality is present in the Operator Framework and Operator Lifecycle Manager (OLM).

The following procedure uninstalls the Template Service Broker and its Operator using the web console.

Do not uninstall the Template Service Broker if there are any provisioned services from it in the cluster; otherwise, we might encounter errors when trying to manage those services.

Prerequisites

This procedure assumes that we installed the Template Service Broker into the openshift-template-service-broker project.

The Template Service Broker is now uninstalled, and template applications will soon be removed from the Developer Catalog.

The Template Service Broker Operator is no longer installed in the cluster.

After the Template Service Broker is uninstalled, users will no longer have access to the template applications provided by the Template Service Broker.


Install the OpenShift Ansible Broker

We can install the OpenShift Ansible Broker to gain access to the service bundles that it provides.

The OpenShift Ansible Broker is deprecated in OpenShift 4. Equivalent and better functionality is present in the Operator Framework and Operator Lifecycle Manager (OLM).

Prerequisites

About the OpenShift Ansible Broker

The OpenShift Ansible Broker is an implementation of the Open Service Broker (OSB) API that manages applications defined by Ansible playbook bundles (APBs). APBs provide a method for defining and distributing container applications in OpenShift, and consist of a bundle of Ansible playbooks built into a container image with an Ansible runtime. APBs leverage Ansible to create a standard mechanism to automate complex deployments.

The OpenShift Ansible Broker follows this basic workflow:

- A user requests the list of available applications from the service catalog using the OpenShift web console.

- The service catalog requests the list of available applications from the OpenShift Ansible Broker.

- The OpenShift Ansible Broker communicates with a defined container image registry to learn which APBs are available.

- The user issues a request to provision a specific APB.

- The OpenShift Ansible Broker fulfills the user's provision request by invoking the provision method on the APB.

The OpenShift Ansible Broker is not installed by default in OpenShift 4.

An Ansible playbook bundle (APB) is a lightweight application definition that allows us to leverage existing investment in Ansible roles and playbooks.

APBs use a simple directory with named playbooks to perform OSB API actions, such as provision and bind. Metadata defined in the apb.yml file contains a list of required and optional parameters for use during deployment.

Additional resources

Ansible playbook bundles

Install the OpenShift Ansible Service Broker Operator

Prerequisites

- You have installed the service catalog.

The following procedure installs the OpenShift Ansible Service Broker Operator using the web console.

- Create a namespace.

  - Navigate in the web console to Administration → Namespaces and click Create Namespace.

  - Enter openshift-ansible-service-broker in the Name field, enter openshift.io/cluster-monitoring=true in the Labels field, and click Create.

  The namespace name must start with openshift-.

- Create a cluster role binding.

  - From the web console navigate to Administration → Role Bindings and click Create Binding.

  - For the Binding Type, select Cluster-wide Role Binding (ClusterRoleBinding).

  - For the Role Binding, enter ansible-service-broker in the Name field.

  - For the Role, select admin.

  - For the Subject, choose the Service Account option, select the openshift-ansible-service-broker namespace, and enter openshift-ansible-service-broker-operator in the Subject Name field.

  - Click Create.

- Create a secret to connect to the Red Hat Container Catalog.

  - From the web console navigate to Workloads → Secrets. Verify that the openshift-ansible-service-broker project is selected.

  - Click Create → Key/Value Secret.

  - Enter asb-registry-auth as the Secret Name.

  - Add a Key of username and a Value of the Red Hat Container Catalog user name.

  - Click Add Key/Value and add a Key of password and a Value of the Red Hat Container Catalog password.

  - Click Create.

- From the web console navigate to the Catalog → OperatorHub page. Verify that the openshift-ansible-service-broker project is selected.

- Select OpenShift Ansible Service Broker Operator.

- Read the information about the Operator and click Install.

- Review the default selections and click Subscribe.
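For administrators who prefer the CLI, the namespace, cluster role binding, and secret from the console steps can be sketched as manifests and applied with `oc apply -f <file>`. The names match the procedure; the credential values are placeholders, and these manifests are an illustration rather than an official alternative to the console procedure. The Operator subscription itself is still done from OperatorHub.

```yaml
# Namespace labelled for cluster monitoring (the name must start with openshift-)
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-ansible-service-broker
  labels:
    openshift.io/cluster-monitoring: "true"   # label values must be strings
---
# Grants the Operator's service account the admin ClusterRole cluster-wide
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ansible-service-broker
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin
subjects:
  - kind: ServiceAccount
    name: openshift-ansible-service-broker-operator
    namespace: openshift-ansible-service-broker
---
# Registry credentials referenced later as auth_name: asb-registry-auth
apiVersion: v1
kind: Secret
metadata:
  name: asb-registry-auth
  namespace: openshift-ansible-service-broker
type: Opaque
stringData:
  username: <rhcc-user-name>   # placeholder
  password: <rhcc-password>    # placeholder
```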

Next, we must start the OpenShift Ansible Broker in order to access the service bundles it provides.

Starting the OpenShift Ansible Broker

After we have installed the OpenShift Ansible Service Broker Operator, we can start the OpenShift Ansible Broker using the following procedure.

Prerequisites

- You have installed the service catalog.

- You have installed the OpenShift Ansible Service Broker Operator.

- Navigate in the web console to Catalog → Installed Operators and select the openshift-ansible-service-broker project.

- Select the OpenShift Ansible Service Broker Operator.

- Under Provided APIs, click Create New for Automation Broker.

- Add the following to the spec field in the default YAML provided:

  ```yaml
  registry:
    - name: rhcc
      type: rhcc
      url: https://registry.redhat.io
      auth_type: secret
      auth_name: asb-registry-auth
  ```

  This references the secret that was created when installing the OpenShift Ansible Service Broker Operator, which allows us to connect to the Red Hat Container Catalog.

- Set any additional OpenShift Ansible Broker configuration options and click Create.

- Verify that the OpenShift Ansible Broker has started.
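Put together, the custom resource created by this procedure looks roughly like the following. This is a sketch: the `apiVersion` (`osb.openshift.io/v1`), the resource name, and the namespace are assumptions, so compare them with the default YAML that the console pre-fills for your Operator version and keep the console's values where they differ.

```yaml
apiVersion: osb.openshift.io/v1        # assumed; use the value the console pre-fills
kind: AutomationBroker
metadata:
  name: ansible-service-broker         # assumed; matches the brokerName default
  namespace: openshift-ansible-service-broker
spec:
  # Registry entry from the procedure; auth_name points at the secret created earlier
  registry:
    - name: rhcc
      type: rhcc
      url: https://registry.redhat.io
      auth_type: secret
      auth_name: asb-registry-auth
```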

After the OpenShift Ansible Broker has started, we can view the available service bundles by navigating to Catalog → Developer Catalog and selecting the Service Class checkbox. It may take a few minutes for the OpenShift Ansible Broker to start and for the service bundles to become available.

If we do not yet see these service classes, we can check the status of the following items:

- OpenShift Ansible Broker Pod status

  - From the Workloads → Pods page for the openshift-ansible-service-broker project, verify that the Pod whose name starts with asb- has a status of Running and a readiness of Ready.

- Cluster service broker status

  - From the Catalog → Broker Management → Service Brokers page, verify that the ansible-service-broker service broker has a status of Ready.

- Service catalog controller manager Pod logs

  - From the Workloads → Pods page for the openshift-service-catalog-controller-manager project, review the logs for each of the Pods and verify that you see a log entry with the message "Successfully fetched catalog entries from broker".

OpenShift Ansible Broker configuration options

We can set the following options for the OpenShift Ansible Broker.

OpenShift Ansible Broker configuration options

| YAML key | Description | Default value |
|---|---|---|
| brokerName | The name used to identify the broker instance. | ansible-service-broker |
| brokerNamespace | The namespace where the broker resides. | openshift-ansible-service-broker |
| brokerImage | The fully qualified image used for the broker. | docker.io/ansibleplaybookbundle/origin-ansible-service-broker:v4.0 |
| brokerImagePullPolicy | The pull policy used for the broker image itself. | IfNotPresent |
| brokerNodeSelector | The node selector string used for the broker's deployment. | '' |
| registries | A YAML list of broker registry configurations that sets which image registries the broker discovers and sources its APBs from. | See the default registries array. |
| logLevel | The log level used for the broker's logs. | info |
| apbPullPolicy | The pull policy used for APB Pods. | IfNotPresent |
| sandboxRole | The role granted to the service account used to execute APBs. | edit |
| keepNamespace | Whether the transient namespace created to run the APB is kept after the APB finishes, regardless of the result. | false |
| keepNamespaceOnError | Whether the transient namespace created to run the APB is kept after the APB finishes, but only when the APB returns an error. | false |
| bootstrapOnStartup | Whether the broker runs its bootstrap routine on startup. | true |
| refreshInterval | The interval between broker bootstraps, which refresh its inventory of APBs. | 600s |
| launchApbOnBind | Experimental: toggles whether the broker executes APBs on bind operations. | false |
| autoEscalate | Whether the broker escalates the permissions of a user while running the APB. This should typically remain false, since the broker performs originating user authorization to ensure that the user has the permissions granted to the APB sandbox. | false |
| outputRequest | Whether to output the low-level HTTP requests that the broker receives. | false |

Default array for registries

```yaml
- type: rhcc
  name: rhcc
  url: https://registry.redhat.io
  white_list:
    - ".*-apb$"
  auth_type: secret
  auth_name: asb-registry-auth
```
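As an illustration of how the options table and the default array fit together, the following spec fragment overrides a few options and narrows the default white_list so that the broker only picks up a PostgreSQL APB. It is a sketch: the values are examples, `debug` is assumed to be an accepted logLevel, and the white_list pattern is hypothetical. The options table names the registry key `registries` while the startup procedure adds it as `registry`, so check which key the YAML generated by your Operator version uses before copying this.

```yaml
spec:
  logLevel: debug              # more verbose broker logs than the default "info"
  refreshInterval: 300s        # refresh the APB inventory every 5 minutes instead of every 600s
  keepNamespaceOnError: true   # keep the transient APB namespace only when the APB fails
  registries:
    - type: rhcc
      name: rhcc
      url: https://registry.redhat.io
      white_list:
        - "postgresql-apb$"    # hypothetical: only image names ending in postgresql-apb
      auth_type: secret
      auth_name: asb-registry-auth
```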

Configure the OpenShift Ansible Broker

The OpenShift Ansible Broker is deprecated in OpenShift 4. Equivalent and better functionality is present in the Operator Framework and Operator Lifecycle Manager (OLM).

The following procedure customizes the settings for the OpenShift Ansible Broker.

Prerequisites

This procedure assumes that you used ansible-service-broker both as the OpenShift Ansible Broker name and the project that it was installed into.

For example:

```yaml
spec:
  keepNamespace: true
  sandboxRole: edit
```

The options that can be set under spec are described in the OpenShift Ansible Broker configuration options table, and the default value of the registries key is shown in the Default array for registries.

Configure monitoring for the OpenShift Ansible Broker

In order for Prometheus to monitor the OpenShift Ansible Broker, create the following resources to grant Prometheus permission to access the namespace where the OpenShift Ansible Broker was installed.

Prerequisites

This procedure assumes that we installed the OpenShift Ansible Broker into the openshift-ansible-service-broker namespace.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: prometheus-k8s
  namespace: openshift-ansible-service-broker
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
```

Once this Role is bound to the Prometheus service account, Prometheus has access to OpenShift Ansible Broker metrics.
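A Role on its own grants nothing; it must be attached to a subject with a RoleBinding. The following is a sketch of that binding, assuming Prometheus runs as the `prometheus-k8s` service account in the `openshift-monitoring` namespace (the OpenShift 4 cluster monitoring default). Confirm the account name in your cluster before applying it with `oc apply -f`.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-k8s
  namespace: openshift-ansible-service-broker   # same namespace as the Role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-k8s                          # the Role defined above
subjects:
  # Assumed Prometheus service account of the cluster monitoring stack
  - kind: ServiceAccount
    name: prometheus-k8s
    namespace: openshift-monitoring
```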

Provision service bundles

The following procedure provisions an example PostgreSQL service bundle (APB) that was made available by the OpenShift Ansible Broker.

Prerequisites

- The service catalog is installed.

- The OpenShift Ansible Broker is installed.

- Create a project.

  - Navigate in the web console to Home → Projects and click Create Project.

  - Enter test-postgresql-apb in the Name field and click Create.

- Create a service instance.

  - From the web console navigate to the Catalog → Developer Catalog page.

  - Select the PostgreSQL (APB) service bundle and click Create Service Instance.

  - Review the default selections, set any other required fields, and click Create.

  - Go to Catalog → Provisioned Services and verify that the dh-postgresql-apb service instance is created and has a status of Ready.

  We can check the progress on the Home → Events page. After a few moments, an event for dh-postgresql-apb with the message "The instance was provisioned successfully" should appear.

- Create a service binding.

  - From the Provisioned Services page, click dh-postgresql-apb and click Create Service Binding.

  - Review the default service binding name and click Create.

  This creates a new secret for the binding, using the name provided.
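The same instance and binding can also be described as Service Catalog resources. This is a sketch assuming the Service Catalog `servicecatalog.k8s.io/v1beta1` API; the class external name, the `dev` plan, and the binding and secret names are assumptions, so look up the real class and plan external names in your cluster before applying it with `oc apply -f`. Any parameters the APB marks as required without a default would also need to go under `spec.parameters`.

```yaml
apiVersion: servicecatalog.k8s.io/v1beta1
kind: ServiceInstance
metadata:
  name: dh-postgresql-apb
  namespace: test-postgresql-apb
spec:
  clusterServiceClassExternalName: dh-postgresql-apb   # assumed class name
  clusterServicePlanExternalName: dev                  # assumed plan name
---
apiVersion: servicecatalog.k8s.io/v1beta1
kind: ServiceBinding
metadata:
  name: dh-postgresql-apb-binding                      # hypothetical binding name
  namespace: test-postgresql-apb
spec:
  instanceRef:
    name: dh-postgresql-apb                            # the instance above
  secretName: dh-postgresql-apb-credentials            # hypothetical secret to hold the credentials
```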