Containers - OpenShift Container Platform

OpenShift Container Platform runs containerized applications. Containers use small, dedicated Linux operating systems without a kernel. Their file system, networking, cgroups, process tables, and namespaces are separate from the host Linux system.

Kubernetes is used to manage applications. OpenShift provides enhancements...

- Hybrid cloud deployments.

- Integrated Red Hat technology.

- Open source development model.

Red Hat Enterprise Linux CoreOS (RHCOS)

OpenShift uses the Red Hat Enterprise Linux CoreOS (RHCOS) operating system, which combines features from the CoreOS and Red Hat Atomic Host operating systems...

| Ignition | Firstboot system configuration for bringing up nodes. |

| cri-o | Container engine developed in tandem with Kubernetes releases. Provides facilities for running, stopping, and restarting containers. Fully replaces the Docker Container Engine. |

| Kubelet | Primary node agent for Kubernetes. Responsible for launching and monitoring containers. |

Use RHCOS for all control plane machines. We can use RHEL as the OS for compute, or worker, machines.

Installation and update process

We can deploy a production cluster in supported clouds by running a single command and providing a few values. We can also customize the cloud installation or install the cluster in the data center.

For clusters that use RHCOS for all machines, updating, or upgrading, OpenShift is a highly-automated process that completely controls the systems and services running on each machine, including the operating system itself, from a central control plane, upgrades are designed to become automatic events. If the cluster contains RHEL worker machines, the control plane benefits from the streamlined update process, but perform more tasks to upgrade the RHEL machines.

Other features

Operators deploy applications and software components for applications to use, replacing manual upgrades of operating systems and control plane applications. Operators such as the Cluster Version Operator and Machine Config Operator allow cluster-wide management of those components. Operator Lifecycle Manager (OLM) and the OperatorHub provide facilities for storing and distributing Operators to people developing and deploying applications.

The Red Hat Quay Container Registry is a Quay.io container registry that serves most of the container images and Operators to OpenShift clusters. Quay.io is a public registry version of Red Hat Quay that stores millions of images and tags.

OpenShift lifecycle

- Create an OpenShift cluster

- Manage the cluster

- Deploy applications

- Scale up applications

Internet and Telemetry access

The Telemetry component provides...

- metrics about cluster health

- success of updates

- subscription management (including legally entitling purchase from Red Hat)

There is no disconnected subscription management. Machines must have direct internet access to install the cluster.

Internet access is required to:

- Access the OpenShift Infrastructure Providers page to download the installation program

- Access quay.io to obtain the packages required to install the cluster

- Obtain the packages required to perform cluster updates

- Access Red Hat's software as a service page to perform subscription management

Installation and update

| Install type | Description | Platforms |

|---|---|---|

| installer-provisioned | Use the installation program to provision infrastructure and deploy a cluster. | Amazon Web Services (AWS) |

| user-provisioned | Deploy a cluster on previously provisioned infrastructure | Amazon Web Services (AWS)

VMware vSphere Bare metal |

The OpenShift 4.x Tested Integrations page contains details about integration testing for different platforms.

To pull container images and to provide telemetry data to Red Hat, all machines, including the computer running the installation process, need direct internet access. We cannot specify a proxy server for OpenShift.

The main assets generated by the installation program are the Ignition config files for the bootstrap, master, and worker machines.

The installation program uses a set of targets and dependencies. Because each target is only concerned with its own dependencies, the installation program can act to achieve multiple targets in parallel. The ultimate target is a running cluster. By meeting dependencies instead of running commands, the installation program is able to recognize and use existing components instead of running the commands to create them again.

After installation, each cluster machine uses Red Hat Enterprise Linux CoreOS (RHCOS) as the operating system. RHCOS is the immutable container host version of Red Hat Enterprise Linux (RHEL) and features a RHEL kernel with SELinux enabled by default.

RHCOS includes the kubelet, which is the Kubernetes node agent, and the CRI-O container runtime, which is optimized for Kubernetes.

Every control plane machine in a cluster must use RHCOS, which includes a critical first-boot provisioning tool called Ignition. This tool enables the cluster to configure the machines. Operating system updates are delivered as an Atomic OSTree repository embedded in a container image rolled out across the cluster by an Operator. Actual operating system changes are made in-place on each machine as an atomic operation rpm-ostree.

RHCOS is used as the operating system for all cluster machines, the cluster manages all aspects of its components and machines, including the operating system. Because of this, only the installation program and the Machine Config Operator can change machines. The installation program uses Ignition config files to set the exact state of each machine, and the Machine Config Operator completes more changes to the machines, such as the application of new certificates or keys, after installation.

Download the installation program from the OpenShift Infrastructure Providers page. This site manages:

The installation program is a Go binary file that performs a series of file transformations on a set of assets. The way we interact with the installation program differs depending on the installation type.

We use three sets of files during installation:

It is possible to modify Kubernetes and the Ignition config files that control the underlying RHCOS operating system during installation. However, no validation is available to confirm the suitability of any modifications made to these objects. If we modify these objects, we might render the cluster non-functional. Because of this risk, modifying Kubernetes and Ignition config files is not supported unless we are following documented procedures or are instructed to do so by Red Hat support.

The installation configuration file is transformed into Kubernetes manifests, and then the manifests are wrapped into Ignition config files. The installation program uses these Ignition config files to create the cluster.

The installation configuration files are all pruned when we run the installation program, so be sure to back up all configuration files that we want to use again.

We cannot modify the parameters set during installation, but we can modify many cluster attributes after installation.

We can install a cluster that uses installer-provisioned infrastructure on only Amazon Web Services (AWS).

The default installation type uses installer-provisioned infrastructure on AWS. By default, the installation program acts as an installation wizard, prompting you for values that it cannot determine on its own and providing reasonable default values for the remaining parameters. We can also customize the installation process to support advanced infrastructure scenarios. The installation program provisions the underlying infrastructure for the cluster.

We can install either a standard cluster or a customized cluster. With a standard cluster, we provide minimum details required to install the cluster. With a customized cluster, we can specify more details about the platform, such as the number of machines that the control plane uses, the type of virtual machine that the cluster deploys, or the CIDR range for the Kubernetes service network.

If possible, use this feature to avoid having to provision and maintain the cluster infrastructure. In all other environments, we use the installation program to generate the assets required to provision the cluster infrastructure.

With installer-provisioned infrastructure clusters, OpenShift manages all aspects of the cluster, including the operating system itself. Each machine boots with a configuration that references resources hosted in the cluster that it joins. This configuration allows the cluster to manage itself as updates are applied.

Use the installation program to generate the assets required to provision the cluster infrastructure, create the cluster infrastructure, and then deploy the cluster to the infrastructure that we provided.

If we do not use infrastructure that the installation program provisioned, manage and maintain the cluster resources ourselves, including:

If the cluster uses user-provisioned infrastructure, we have the option of adding RHEL worker machines to the cluster.

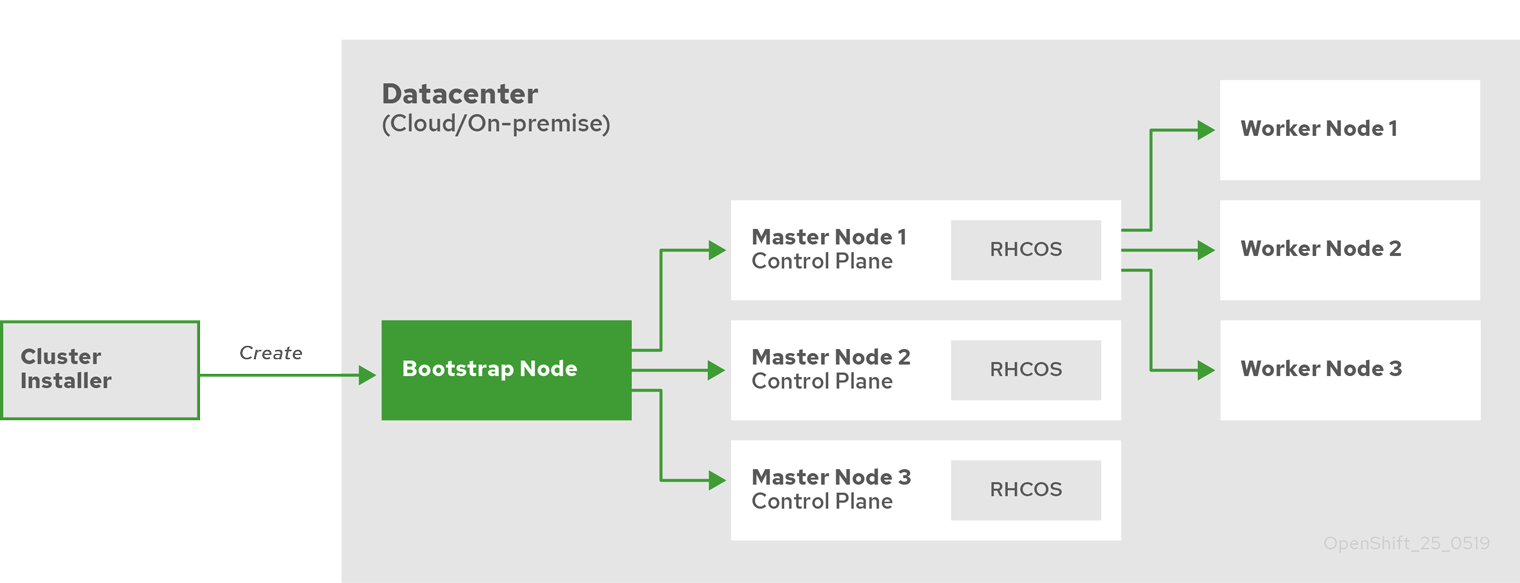

Because each machine in the cluster requires information about the cluster when it is provisioned, OpenShift uses a temporary bootstrap machine during initial configuration to provide the required information to the permanent control plane. It boots by using an Ignition config file that describes how to create the cluster. The boostrap machine creates the master machines that make up the control plane. The control plane machines then create the compute, or worker, machines.

After the cluster machines initialize, the bootstrap machine is destroyed. All clusters use the bootstrap process to initialize the cluster, but if we provision the infrastructure for the cluster, we must complete many of the steps manually.

The Ignition config files that the installation program generates contain certificates that expire after 24 hours. Complete the cluster installation and keep the cluster running for 24 hours in a non-degraded state to ensure that the first certificate rotation has finished.

Bootstrapping a cluster...

The result of this bootstrapping process is a fully running OpenShift cluster. The cluster then downloads and configures remaining components needed for the day-to-day operation, including the creation of worker machines in supported environments.

Installation

The installation process with installer-provisioned infrastructure

The installation process with user-provisioned infrastructure

Installation process details

Control plane

The control plane, which is composed of master machines, manages the OpenShift cluster. The control plane machines manage workloads on the compute, or worker, machines. The cluster itself manages all upgrades to the machines by the actions of the Cluster Version Operator, the Machine Config Operator, and set of individual Operators.

OpenShift assigns hosts different roles. These roles define the function of the machine within the cluster. The cluster contains definitions for the standard master and worker role types.

The cluster also contains the definition for the bootstrap role. Because the bootstrap machine is used only during cluster installation, its function is explained in the cluster installation documentation.

In a Kubernetes cluster, the worker nodes are where the actual workloads requested by Kubernetes users run and are managed. The worker nodes advertise their capacity and the scheduler, which is part of the master services, determines on which nodes to start containers and Pods. Important services run on each worker node, including...

MachineSets control the worker machines. Machines with the worker role drive compute workloads governed by a specific machine pool that autoscales them. Because OpenShift has the capacity to support multiple machine types, the worker machines are classed as compute machines.

In this release, the terms "worker machine" and "compute machine" are used interchangeably because the only default type of compute machine is the worker machine. In future versions of OpenShift, different types of compute machines, such as infrastructure machines, might be used by default.

In a Kubernetes cluster, the master nodes run services required to control the Kubernetes cluster. In OpenShift, the master machines are the control plane and contain more than just the Kubernetes services. All of the machines with the control plane role are master machines. The terms "master" and "control plane" are used interchangeably to describe them. Instead of being grouped into a MachineSet, master machines are defined by a series of standalone machine API resources. Extra controls apply to master machines to prevent you from deleting all master machines and breaking the cluster.

Kubernetes services that run on the control plane...

Some of these services on the master machines run as systemd services, while others run as static Pods. Systemd services are appropriate for services we need to always come up on that particular system shortly after restart. For master machines it includes services such as:

Because CRI-O and Kubelet need to be running before we can run other containers, we run them on the host as systemd services

Operators manage services on the control plane, integrating with Kubernetes APIs and CLI tools such as the kubectl and oc commands. Operators perform health checks, manage over-the-air updates, and ensure that the applications remain in a specified state.

Because CRI-O and the Kubelet run on every node, almost every other cluster function can be managed on the control plane by using Operators. Components added to the control plane by using Operators include critical networking and credential services.

OpenShift 4.1 uses different classes of Operators to perform cluster operations and run services on the cluster for applications to use.

The Cluster Version Operator manages the other Operators in a cluster.

Cluster functions are divided into a series of Platform Operators, which manage a particular area of cluster functionality, such as cluster-wide application logging, management of the Kubernetes control plane, or the machine provisioning system.

Each Operator provides an API for determining cluster functionality. The Operator hides the details of managing the lifecycle of that component. Operators can manage a single component or tens of components, but the end goal is always to reduce operational burden by automating common actions. Operators also offer a more granular configuration experience. We configure each component by modifying the API that the Operator exposes instead of modifying a global configuration file.

The Cluster Operator Lifecycle Management (OLM) component manages Operators available for use in applications. OLM does not manage the Operators that comprise OpenShift. OLM is a framework that manages Kubernetes-native applications as Operators. Instead of managing Kubernetes manifests, it manages Kubernetes Operators. OLM manages two classes of Operators, Red Hat Operators and certified Operators.

Some Red Hat Operators drive the cluster functions, like the scheduler and problem detectors. Others are provided to manage ourselves and use in applications, like etcd OpenShift also offers certified Operators, which the community built and maintains. These certified Operators provide an API layer to traditional applications so we can manage the application through Kubernetes constructs.

The update service provides over-the-air updates to both OpenShift and Red Hat Enterprise Linux CoreOS (RHCOS). It provides a graph, or diagram that contain vertices and the edges that connect them, of component Operators. The edges in the graph show which versions we can safely update to, and the vertices are update payloads that specify the intended state of the managed cluster components.

The Cluster Version Operator (CVO) in the cluster checks with the update service to see the valid updates and update paths based on current component versions and information in the graph. When requesting an update, the CVO uses the release image for that update to upgrade the cluster. The release artifacts are hosted in Quay as container images.

To provide only compatible updates, a release verification pipeline exists. Each release artifact is verified for compatibility with supported cloud platforms and system architectures as well as other component packages. After the pipeline confirms the suitability of a release, the update service notifies status on availability.

During continuous update mode, two controllers run. One continuously updates the payload manifests, applies them to the cluster, and outputs the status of the controlled rollout of the Operators, whether they are available, upgrading, or failed. The second controller polls the update service to determine if updates are available.

Reverting the cluster to a previous version, or a rollback, is not supported. Only upgrading to a newer version is supported.

OpenShift integrates operating system and cluster management. Clusters manage their own updates, including updates to RHCOS on cluster nodes.

Three DaemonSets and controllers use Kubernetes-style constructs to manage nodes, orchestrating OS updates and configuration changes to the hosts.

The machine configuration is a subset of the Ignition configuration. The machine-config-daemon reads the machine configuration to see if it needs to do an OSTree update or if it must apply a series of systemd kubelet file changes, configuration changes, or other changes to the operating system or OpenShift configuration.

When performing node management operations, we create KubeletConfig Custom Resource (CR).

Machine roles

Cluster workers

Service

Description

CRI-O

Container runtime. Optimized for Kubernetes.

Kubelet

Kubernetes node agent. Accepts and fulfills requests for running and stopping container workloads.

Service proxy

Manages communication for pods across workers.

Cluster masters

Component

Description

Kubernetes API Server

Configures data for pods, services, and replication controllers. Provides a focal point for cluster's shared state.

etcd

etcd stores the persistent master state while other components watch etcd for changes to bring themselves into the specified state.

Controller Manager Server

Watches etcd for changes to objects such as replication, namespace, and serviceaccount controller objects, and then uses the API to enforce the specified state. Several such processes create a cluster with one active leader at a time.

HAProxy services

sshd

Allows remote login

CRI-O container engine (crio)

Runs the containers. Used instead of the Docker Container Engine.

Kubelet (kubelet)

Accepts requests for managing containers on the machine from master services.

Operators

Platform Operators

Operators managed by Cluster Operator Lifecycle Management (OLM)

OpenShift update service

Machine Config Operator

machine-config-controller

Coordinates machine upgrades from the control plane. Monitors all of the cluster nodes and orchestrates their configuration updates.

machine-config-daemon

Runs on each node in the cluster. Updates machine to a configuration defined by MachineConfig as instructed by the MachineConfigController. When the node sees a change, it drains off its pods, applies the update, and reboots. These changes come in the form of Ignition configuration files that apply the specified machine configuration and control kubelet configuration. The update itself is delivered in a container.

machine-config-server

Provides the Ignition config files to master nodes as they join the cluster.

Develop containerized applications

- Build a container and store it in a registry

- Create a Kubernetes manifest and save it to a Git repository

- Make an Operator to share application with others

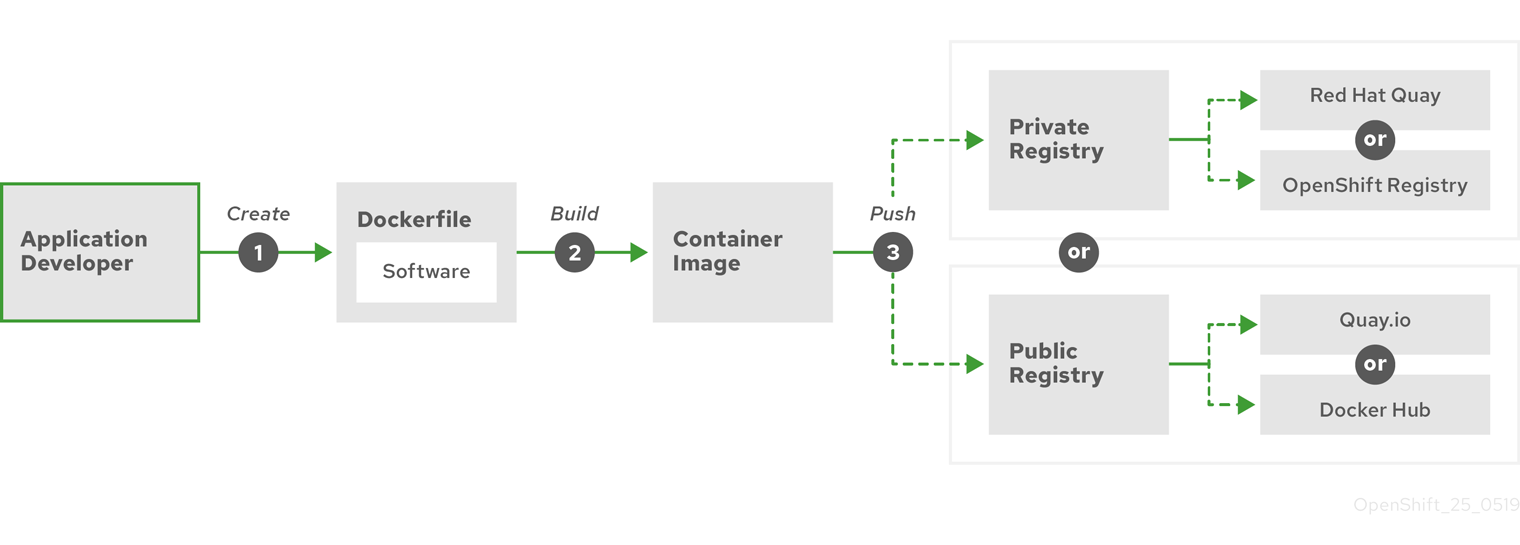

Build a container

- Select an application to containerize.

- Acquire a tool for building a container, like buildah or docker.

- Create a file that describes what goes in the container. Typically a Dockerfile.

- Acquire a location to push the resulting container image (container registry).

- Create a containerized application and push it to the registry...

- Pull the application to run anywhere we want it to run.

For computers running Red Hat Enterprise Linux (RHEL), to create containerized application...

- Install container build tools

RHEL contains a set of tools that includes podman, buildah, and skopeo used to build and manage containers.

- Create a Dockerfile to combine base image and software

Information about building the container goes into a file named Dockerfile. In that file, we identify the base image we build from, the software packages we install, and the software we copy into the container. We also identify parameter values like network ports that we expose outside the container and volumes that we mount inside the container. Put the Dockerfile and the software we want to containerized in a directory on the RHEL system.

- Run buildah or docker build

To pull your chosen base image to the local system and create a container image stored locally

-

buildah build-using-dockerfile

...or...

-

docker build

We can also build container without a Dockerfile by using buildah.

- Tag and push to a registry

Add a tag to the new container image that identifies the location of the registry in which we want to store and share the container. Then push that image to the registry by running the podman push or docker push command.

- Pull and run the image

From any system that has a container client tool, such as podman or docker, run a command that identifies the new image. For example, run...

-

podman run <image_name>

...or...

-

docker run <image_name>

Here <image_name> is the name of the new container image, which resembles...

-

quay.io/myrepo/myapp:latest

The registry might require credentials to push and pull images.

Container build tool options

When running containers in OpenShift, we use the CRI-O container engine. CRI-O runs on every worker and master machine in an OpenShift cluster. CRI-O is not yet supported as a standalone runtime outside of OpenShift.

Alternatives to the Docker Container Engine and docker command are available. Other container tools include podman, skopeo, and buildah. The buildah, podman, and skopeo commands are daemonless, and can be run without root privileges.

Base image options

The base image contains software that resembles a Linux system to applications. When building an image, software is placed into that file system.

Red Hat provides Red Hat Universal Base Images (UBI). These images are based on RHEL and are freely redistributable without a Red Hat subscription.

UBI images have standard, init, and minimal versions. We can also use the Red Hat Software Collections images as a foundation for applications that rely on specific runtime environments such as Node.js, Perl, or Python. Special versions of some of these runtime base images are referred to as Source-to-image (S2I) images.

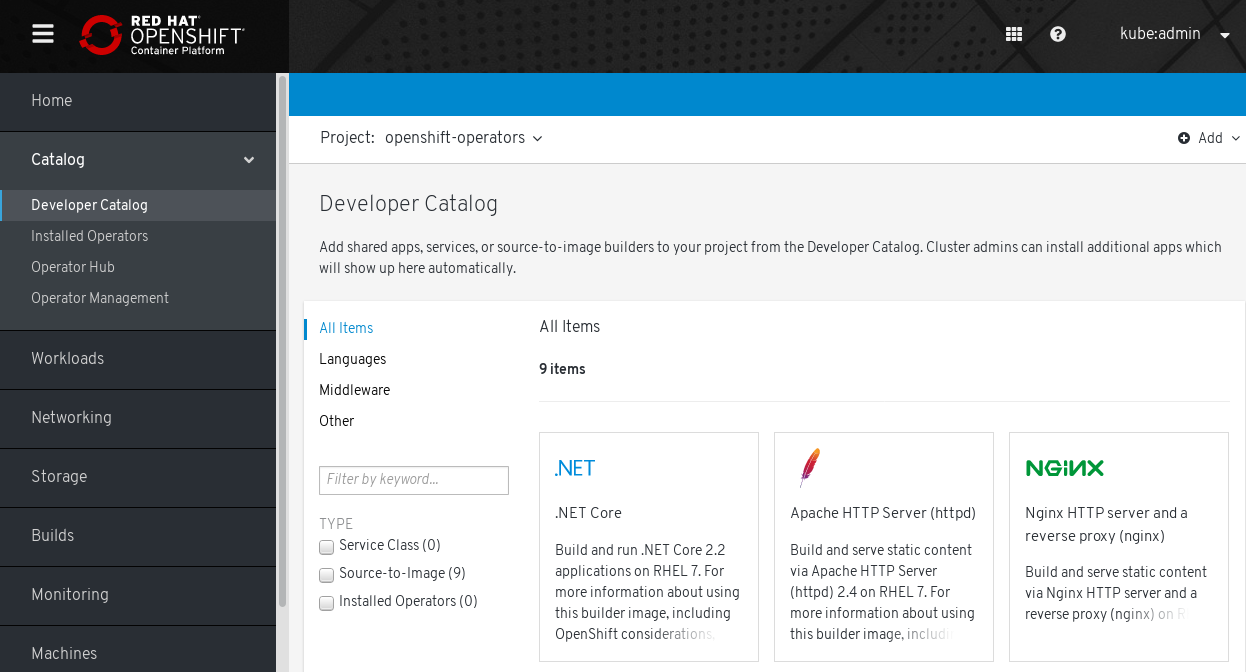

S2I images are available to use directly from the OpenShift web UI by selecting...

-

Catalog | Developer Catalog

Registry options

Container Registries store container images for sharing with others. Public container registries offer free accounts or a premium version that offer more storage and special features. We can install our own registry that can be exclusive to our organization or selectively shared with others.

To get Red Hat and certified partner images, draw from the Red Hat Registry...

| registry.access.redhat.com | unauthenticated and deprecated |

| registry.redhat.io | requires authentication |

The Red Hat Container Catalog lists Red Hat container images along with information about the contents and quality of those images, including health scores based on applied security updates.

Public registries include Docker Hub and Quay.io. The Quay.io registry is owned and managed by Red Hat. Many of the components used in OpenShift are stored in Quay.io, including container images and the Operators used to deploy OpenShift itself. Quay.io can store other types of content, including Helm Charts.

A private container registry is installed with OpenShift and runs on its cluster. Red Hat also offers a private version of the Quay.io registry called Red Hat Quay. Red Hat Quay includes geo replication, Git build triggers, and Clair image scanning.

Create a Kubernetes Manifest

Kubernetes Pods and services

Pods can contain one or more than one container.

We can deploy a container in a Pod, and include logging and monitoring in the Pod. When we run the Pod and need to scale up an additional instance, those other containers are scaled up with it. For namespaces, containers in a Pod share the same network interfaces, shared storage volumes, and resource limitations, such as memory and CPU, which makes it easier to manage the contents of the Pod as a single unit. Containers in a Pod can also communicate with each other by using standard inter-process communications, such as System V semaphores or POSIX shared memory.

While individual Pods represent a scalable unit in Kubernetes, a service provides a means of grouping together a set of Pods to create a complete, stable application that can complete tasks such as load balancing. A service is also more permanent than a Pod because the service remains available from the same IP address until you delete it. When the service is in use, it is requested by name and the OpenShift cluster resolves that name into the IP addresses and ports where we can reach the Pods that compose the service.

By their nature, containerized applications are separated from the operating systems where they run and, by extension, their users. Part of the Kubernetes manifest describes how to expose the application to internal and external networks by defining network policies that allow fine-grained control over communication with the containerized applications. To connect incoming requests for HTTP, HTTPS, and other services from outside the cluster to services inside the cluster, we can use an Ingress resource.

If the container requires on-disk storage instead of database storage, which might be provided through a service, we can add volumes to the manifests to make that storage available to the Pods. We can configure the manifests to create persistent volumes (PVs) or dynamically create volumes that are added to the Pod definitions.

After defining a group of Pods that compose our application, we can define those Pods in deployments and deploymentconfigs.

Application types

To determine the appropriate workload for our application, consider if the application is:

- Meant to run to completion and be done.

An example an application that starts up to produce a report and exits when the report is complete. The application might not run again then for a month. Suitable OpenShift objects for these types of applications include Jobs and CronJob objects.

- Expected to run continuously.

For long-running applications, we can write a Deployment or a DeploymentConfig.

- Required to be highly available.

If our application requires high availability, then we want to size our deployment to have more than one instance. A Deployment or DeploymentConfig can incorporate a ReplicaSet for that type of application. With ReplicaSets, Pods run across multiple nodes to make sure the application is always available, even if a worker goes down.

- Need to run on every node.

Some types of Kubernetes applications are intended to run in the cluster itself on every master or worker node. DNS and monitoring applications are examples of applications that need to run continuously on every node. We can run this type of application as a DaemonSet. We can also run a DaemonSet on a subset of nodes, based on node labels.

- Require life-cycle management.

To hand off our application so that others can use it, consider creating an Operator. Operators let you build in intelligence, so it can handle things like backups and upgrades automatically. Coupled with the Operator Lifecycle Manager (OLM), cluster managers can expose Operators to selected namespaces so that users in the cluster can run them.

- Have identity or numbering requirements.

A requirement to run exactly three instances of the application and to name the instances 0, 1, and 2. A StatefulSet is suitable for this application. StatefulSets are most useful for applications that require independent storage, such as databases and zookeeper clusters.

Available supporting components

Supporting components can include a database or logging. The required component may be available from the following Catalogs available via the web console:

| OperatorHub | Makes Operators available from Red Hat, certified Red Hat partners, and community members to the cluster operator. The cluster operator can make those Operators available in all or selected namespaces in the cluster, so developers can launch them and configure them with their applications. |

| Service Catalog | Alternatives to Operators. Use to get supporting applications if we are an existing OpenShift 3 customer and are invested in Service Catalog applications, or if we have a Cloud Foundry environment from which we are interested in consuming brokers from other ecosystems. |

| Templates | Useful for a one-off type of application, where the lifecycle of a component is not important after it is installed. A template provides a way to get started developing a Kubernetes application with minimal overhead. A template can be a list of resource definitions, which could be deployments, services, routes, or other objects. If to change names or resources, we can set these values as parameters in the template. The Template Service Broker Operator is a service broker that we can use to instantiate our own templates. We can also install templates directly from the command line. |

We can configure the supporting Operators, Service Catalog applications, and templates to the specific needs of the development team and then make them available in the namespaces in which the developers work. Many people add shared templates to the openshift namespace because it is accessible from all other namespaces.

Apply the manifest

We write manifests as YAML files and deploy them by applying them to the cluster, for example, by running the oc apply command.

Automation

To automate the container development process we can create a CI pipeline that builds the images and pushes them to a registry. For example a GitOps pipeline to integrate the container development with the Git repositories used to store the software required to build our applications.

- Write some YAML then run the oc apply command to apply that YAML to the cluster and test that it works.

- Put the YAML container configuration file into the Git repository. From there, people who want to install or improve the app can pull down the YAML and apply it to the cluster.

- Consider writing an Operator for our application.

Develop for Operators

Packaging and deploying applications as an Operator might be preferred if we make our application available for others to run. Operators add a lifecycle component to our application that acknowledges that the job of running an application is not complete as soon as it is installed.

When creating an application as an Operator, we can build in features for upgrading the application, backing it up, scaling it, or keeping track of its state. Maintenance tasks, like updating the Operator, can happen automatically and invisibly to the Operator's users. For example create an Operator to automatically back up data at particular times.

Any application maintenance that has traditionally been completed manually, like backing up data or rotating certificates, can be completed automatically with an Operator.

Red Hat Enterprise Linux CoreOS (RHCOS)

Red Hat Enterprise Linux CoreOS (RHCOS) is the only supported operating system for OpenShift control plane (master), machines. Compute (worker) machines can use RHEL as their operating system.

RHCOS images are downloaded to the target platform during installation. Ignition config files are used to deploy the machines.

RHCOS software is in RPM packages, and each RHCOS system starts up with a RHEL kernel and a set of services managed by the systemd init system.

Management is performed remotely from the cluster. When setting up the RHCOS machines, we can modify only a few system settings. This controlled immutability allows OpenShift to store the latest state of RHCOS systems in the cluster so it is always able to create additional machines and perform updates based on the latest RHCOS configurations.

RHCOS incorporates the CRI-O container engine instead of the Docker container engine. CRI-O offers a smaller footprint and reduced attack surface than is possible with container engines that offer a larger feature set. At the moment, CRI-O is only available as a container engine within OpenShift clusters.

RHCOS replaces the Docker CLI tool with a compatible set of container tools. The podman CLI tool supports container runtime features, such as running, starting, stopping, listing, and removing containers and container images. The skopeo CLI tool can copy, authenticate, and sign images. Use the crictl CLI tool to work with containers and pods from the CRI-O container engine. While direct use of these tools in RHCOS is discouraged, we can use them for debugging purposes.

RHCOS features transactional upgrades and rollbacks using the rpm-ostree upgrade system. Updates are delivered via container images and are part of the OpenShift update process. When deployed, the container image is pulled, extracted, and written to disk, then the bootloader is modified to boot into the new version. The machine will reboot into the update in a rolling manner to ensure cluster capacity is minimally impacted.

For RHCOS systems, the layout of the rpm-ostree file system has the following characteristics:

- /usr is where the operating system binaries and libraries are stored and is read-only. We do not support altering this.

- /etc, /boot, /var are writable on the system but only intended to be altered by the Machine Config Operator.

- /var/lib/containers is the graph storage location for storing container images.

The Machine Config Operator handles operating system upgrades. Instead of upgrading individual packages, as is done with yum upgrades, rpm-ostree delivers upgrades as an atomic unit. The downloaded tree goes into effect on the next reboot. If something goes wrong with the upgrade, a single rollback and reboot returns the system to the previous state. RHCOS upgrades in OpenShift are performed during cluster updates.

Configure RHCOS

RHCOS images are set up initially with a feature called Ignition, which runs only on the system's first boot. After first boot, RHCOS systems are managed by the Machine Config Operator (MCO) that runs in the OpenShift cluster.

Because RHCOS systems in OpenShift are designed to be fully managed from the cluster, directly logging into a RHCOS machine is discouraged. Limited direct access to RHCOS machines in a cluster can be completed for debugging purposes.

Ignition utility

Ignition is the utility used by RHCOS to complete common disk tasks, including partitioning, formatting, writing files, and configuring users. On first boot, Ignition reads configuration from the installation media, or the location specified, and applies the configuration to the machines.

Ignition performs the initial configuration of the cluster machines. For each machine, Ignition takes the RHCOS image and boots the RHCOS kernel. Options on the kernel command line identify the type of deployment and the location of the Ignition-enabled initial Ram Disk (initramfs).

OpenShift uses Ignition version 2 and Ignition config version 2.3

The OpenShift installation program creates the Ignition config files needed to deploy the cluster. These files are based on the information provided to the installation program directly or through an install-config.yaml file.

The way that Ignition configures machines is similar to how tools like cloud-init or Linux Anaconda kickstart configure systems, but with some important differences:

Because of that, Ignition can repartition disks, set up file systems, and perform other changes to the machine's permanent file system. In contrast, cloud-init runs as part of a machine's init system when the system boots, so making foundational changes to things like disk partitions cannot be done as easily. With cloud-init, it is also difficult to reconfigure the boot process while we are in the middle of the node's boot process.

After a machine initializes and the kernel is running from the installed system, the Machine Config Operator from the OpenShift cluster completes all future machine configuration.

If a machine's setup fails, the initialization process does not finish, and Ignition does not start the new machine. The cluster will never contain partially-configured machines. If Ignition cannot complete, the machine is not added to the cluster. We must add a new machine instead. This behavior prevents the difficult case of debugging a machine when the results of a failed configuration task are not known until something that depended on it fails at a later date.

The Ignition process for an RHCOS machine in a OpenShift cluster involves the following steps:

The machine is then ready to join the cluster and does not require a reboot.

Ignition config files

The Ignition sequence

View Ignition configuration files

To see the Ignition config file used to deploy the bootstrap machine, run:

- $ openshift-install create ignition-configs --dir $HOME/testconfig

After answering a few questions, the bootstrap.ign, master.ign, and worker.ign files appear in the directory entered.

To see the contents of the bootstrap.ign file, pipe it through the jq filter. Here's a snippet from that file:

$ cat $HOME/testconfig/bootstrap.ign | jq

\\{

"ignition": \\{

"config": \\{},

…

"storage": \\{

"files": [

\\{

"filesystem": "root",

"path": "/etc/motd",

"user": \\{

"name": "root"

},

"append": true,

"contents": \\{

"source": "data:text/plain;charset=utf-8;base64,VGhpcy...NlcnZpY2UK",

To decode the contents of a file listed in the bootstrap.ign file, pipe the base64-encoded data string representing the contents of that file to the base64 -d command. Here's an example using the contents of the /etc/motd file added to the bootstrap machine from the output shown above:

- $ echo VGhpcy...C1iIC1mIC11IGJvb3RrdWJlLnNlcnZpY2UK | base64 -d

This is the bootstrap machine; it will be destroyed when the master is fully up.

The primary service is "bootkube.service". To watch its status, run, e.g.:

- journalctl -b -f -u bootkube.service

Repeat those commands on the master.ign and worker.ign files to see the source of Ignition config files for each of those machine types. You should see a line like the following for the worker.ign, identifying how it gets its Ignition config from the bootstrap machine:

- "source": "https://api.myign.develcluster.example.com:22623/config/worker",

Here are a few things we can learn from the bootstrap.ign file:

- Format

The format of the file is defined in the Ignition config spec. Files of the same format are used later by the MCO to merge changes into a machine's configuration.

- Contents

Because the bootstrap machine serves the Ignition configs for other machines, both master and worker machine Ignition config information is stored in the bootstrap.ign, along with the bootstrap machine's configuration.

- Size

The file is more than 1300 lines long, with path to various types of resources.

- The content of each file that will be copied to the machine is actually encoded into data URLs, which tends to make the content a bit clumsy to read. (Use the jq and base64 commands shown previously to make the content more readable.)

- Configuration

The different sections of the Ignition config file are generally meant to contain files that are just dropped into a machine's file system, rather than commands to modify existing files. For example, instead of having a section on NFS that configures that service, you would just add an NFS configuration file, which would then be started by the init process when the system comes up.

- users

A user named core is created, with your ssh key assigned to that user. This will allow you to log into the cluster with that user name and your credentials.

- storage

The storage section identifies files that are added to each machine. A few notable files include /root/.docker/config.json (which provides credentials the cluster needs to pull from container image registries) and a bunch of manifest files in /opt/openshift/manifests that are used to configure the cluster.

- systemd

The systemd section holds content used to create systemd unit files. Those files are used to start up services at boot time, as well as manage those services on running systems.

- Primitives

Ignition also exposes low-level primitives that other tools can build on.

Change Ignition Configs after installation

Machine Config Pools manage a cluster of nodes and their corresponding Machine Configs. Machine Configs contain configuration information for a cluster. To list all known Machine Config Pools:

$ oc get machineconfigpools NAME CONFIG UPDATED UPDATING DEGRADED master master-1638c1aea398413bb918e76632f20799 False False False worker worker-2feef4f8288936489a5a832ca8efe953 False False False

To list all Machine Configs:

$ oc get machineconfig NAME GENERATEDBYCONTROLLER IGNITIONVERSION CREATED OSIMAGEURL 00-master 4.0.0-0.150.0.0-dirty 2.2.0 16m 00-master-ssh 4.0.0-0.150.0.0-dirty 16m 00-worker 4.0.0-0.150.0.0-dirty 2.2.0 16m 00-worker-ssh 4.0.0-0.150.0.0-dirty 16m 01-master-kubelet 4.0.0-0.150.0.0-dirty 2.2.0 16m 01-worker-kubelet 4.0.0-0.150.0.0-dirty 2.2.0 16m master-1638c1aea398413bb918e76632f20799 4.0.0-0.150.0.0-dirty 2.2.0 16m worker-2feef4f8288936489a5a832ca8efe953 4.0.0-0.150.0.0-dirty 2.2.0 16m

The Machine Config Operator acts somewhat differently than Ignition when it comes to applying these machineconfigs. The machineconfigs are read in order (from 00* to 99*). Labels inside the machineconfigs identify the type of node each is for (master or worker). If the same file appears in multiple machineconfig files, the last one wins. So, for example, any file that appears in a 99* file would replace the same file that appeared in a 00* file. The input machineconfig objects are unioned into a "rendered" machineconfig object, which will be used as a target by the operator and is the value we can see in the machineconfigpool.

To see what files are being managed from a machineconfig, look for "Path:" inside a particular machineconfig. For example:

$ oc describe machineconfigs 01-worker-container-runtime | grep Path: Path: /etc/containers/registries.conf Path: /etc/containers/storage.conf Path: /etc/crio/crio.conf

To change a setting in one of those files, for example to change pids_limit to 1500 (pids_limit = 1500) inside the crio.conf file, create a new machineconfig containing only the file to change.

Give the machineconfig a later name (such as 10-worker-container-runtime). The content of each file is in URL-style data. Then apply the new machineconfig to the cluster.

Quick Links

Help

Site Info

Related Sites