Administer - Business Process Management

- Administer deployment environments

- Modify the deployment topology

- Modify deployment environment definition parameters

- Manage deployment environment resources

- Stop and restart the deployment manager

- Stop and restart a cluster member

- Administer the BPM document store

- Overview

- Get document store status

- Migrate document attachments to the BPM document store

- Manage tracing for the BPM document store

- Update the BPM document store application

- Modify configuration parameters

- Limitations in administering the BPM document store

- Administer Process Portal

- Process Portal dashboards: Authorization overview

- Enable Process Portal to run in an HTML frame

- Enable email for Process Portal notifications

- Configure IBM Connections integration for task notifications

- Configure Sametime Connect integration

- Create and maintain saved searches for Process Portal

- Reset the Process Portal start page for a user

- Set the Process Portal tab order for a user group

- Configure the My Team Performance dashboard (deprecated)

- Administer the Process Portal index

- Update the Process Portal index

- Configure the Process Portal index

- Administer Process Portal spaces

- Enable tracing for widgets in Process Portal spaces

- Review logs for messages

- Disable automatic wiring in Process Portal spaces

- Work with templates in Process Portal spaces

- Remove widgets from Process Portal spaces

- Cleanup procedures for Business Process Choreographer

- How time zones are handled in Business Process Choreographer

- Enable logging for Business Process Choreographer

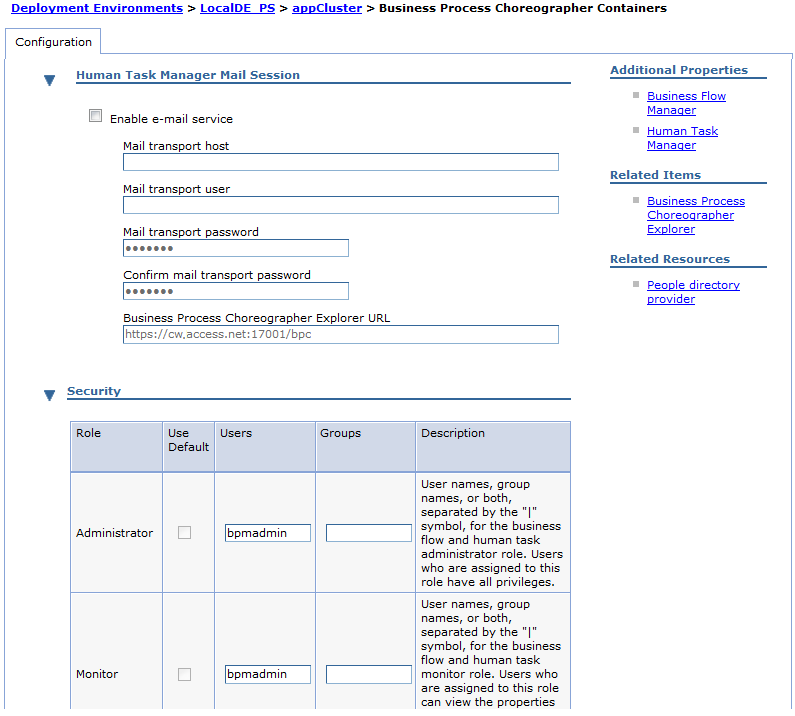

- Use the administrative console to administer Business Process Choreographer

- Enable Common Base Events, the audit trail, and the task history using the administrative console

- Query and replay failed messages, using the administrative console

- Refresh people query results, using the administrative console

- Refresh people query results, using the refresh daemon

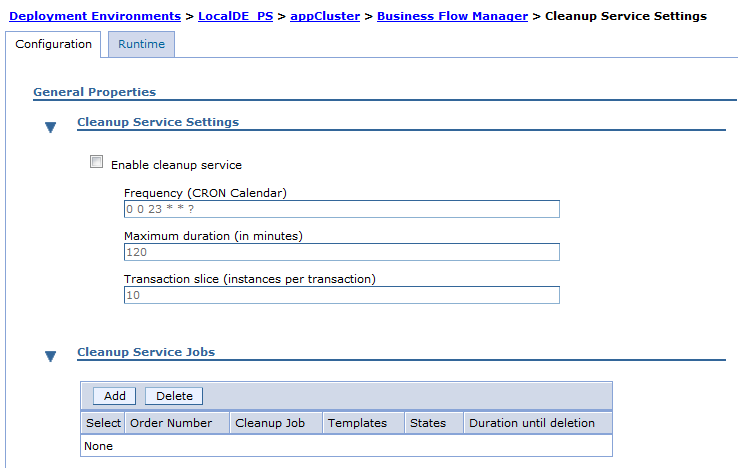

- Configure the cleanup service and cleanup jobs

- Administer the compensation service

- Use scripts to administer Business Process Choreographer

- Archive completed BPEL process and task instances

- Query and replay failed messages, using administrative scripts

- Refresh people query results, using administrative scripts

- Delete Business Process Choreographer objects

- Administer query tables

- Add support for shared work items

- Business Process Choreographer data migration script for shared work items

- Remove redundant indexes

- Administer the Process Center index

Administer deployment environments

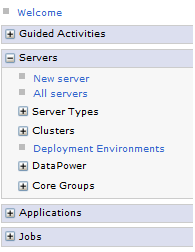

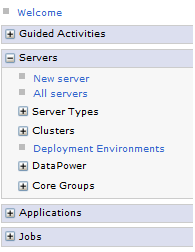

- In the administrative console, click Servers > Deployment Environments.

- To display the components of a deployment environment, click its name.

- For existing environments, select the check box next to the deployment environments to manage and click one of the following buttons:

| Function | Task |
|---|---|
| Start or Stop | Start and stop deployment environments |

- To add new deployment environments to the deployment manager, click New.

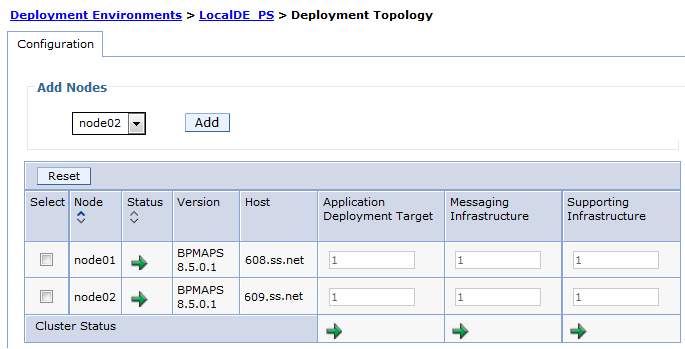

Modify the deployment topology

By adding nodes you can increase the overall work capacity of the system.

- Servers | Deployment Environments | env_name | Additional Properties | Deployment Topology

Modify deployment environment definition parameters

- Identify the configuration type and the corresponding attributes.

AdminConfig selects default parameters based on the common database (CommonDB).

- Query an existing configuration object to obtain a configuration ID to use.

- Modify the existing configuration object or create a new one.

- Save the configuration.

- $AdminConfig save
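The four steps above can be sketched as a small Python helper. This is a minimal sketch under stated assumptions, not a definitive procedure: `modify_first` and `FakeAdminConfig` are hypothetical names, and the stub only stands in for the wsadmin `AdminConfig` object so that the flow can be dry-run outside wsadmin. It assumes that `AdminConfig.list` returns one configuration ID per line.

```python
def modify_first(admin_config, type_name, attributes):
    """Query a configuration ID, modify that object, and save.

    admin_config is the wsadmin AdminConfig object (or a stand-in);
    attributes is a list of [name, value] pairs.
    """
    # Query existing objects of this type; one configuration ID per line.
    ids = [line for line in admin_config.list(type_name).splitlines() if line.strip()]
    if not ids:
        raise ValueError("no configuration object of type %s" % type_name)
    config_id = ids[0]
    # Modify the existing object, then save the configuration.
    admin_config.modify(config_id, attributes)
    admin_config.save()
    return config_id


class FakeAdminConfig:
    """Hypothetical stand-in for AdminConfig, for dry runs only."""

    def __init__(self, ids):
        self.ids = ids
        self.calls = []

    def list(self, type_name):
        self.calls.append(("list", type_name))
        return "\n".join(self.ids)

    def modify(self, config_id, attributes):
        self.calls.append(("modify", config_id, attributes))

    def save(self):
        self.calls.append(("save",))
```

Inside wsadmin, the real `AdminConfig` object would be passed in place of the stub. The helper changes only the first object it finds, so the caller should confirm that this is the intended one.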

Manage deployment environment resources

From the dmgr console, click...

- Servers | Deployment Environments

Start or stop nodes...

The Deployment Environment Configuration page lists:

- Name

- Pattern

- Description

- Status

- Functions

- Links

| Link | Description |
|---|---|
| Deployment Topology | Change the configuration of a deployment environment based on IBM-supplied patterns. |
| Deferred Configuration | Determine any manual steps needed to complete the configuration of this deployment environment. |
| Related Items | Authentication Aliases |

Stop and restart the deployment manager

cd DMGR_PROFILE/bin

./stopManager.sh -username wasadmin -password password

./startManager.sh

Stop and restart a cluster member

Before stopping, prevent new work from entering the cluster member. For example...

- Remove the cluster member from plugin-cfg.xml

- For IIOP traffic, set the runtime weight to zero for the cluster member.

Stop cluster member...

- Servers | Server Types | WebSphere application servers | server | Stop

Stop cluster...

- Servers | Clusters | WebSphere application server clusters | cluster | Stop

Start cluster member...

- Servers | Server Types | WebSphere application servers | server | Start

Start cluster...

- Servers | Clusters | WebSphere application server clusters | cluster | Start

Administer the BPM document store

BPM document store technical user

A run-as technical user is required for creating default configurations for the domain, object store, and document class definition. A technical user is also required when BPM connects to the BPM document store using CMIS. Credentials are saved in an authentication alias mapped to the BPM role type...

- EmbeddedECMTechnicalUser

The default authentication alias is DeAdminAlias. The technical user must have the WAS administrator role.

Change the password of the technical user

The credentials of the technical user are saved in an authentication alias. The password of the technical user in the authentication alias must be changed together with the password in the user repository where the technical user is defined (such as FileRegistry or LDAP).

The BPM document store may still use the old credentials for a short period of time (less than a minute). Access to the BPM document store may fail in this short timeframe.

Change the technical user

To change the password or change the technical user, it is not sufficient to simply change the authentication alias. The BPM document store is protected against access from unknown users. New technical users must first be authorized.

- AdminTask.maintainDocumentStoreAuthorization('[-deName myDEname -add cn=newTechnicalUser,o=defaultWIMFileBasedRealm]')

To list currently authorized principals...

- AdminTask.maintainDocumentStoreAuthorization('[-deName myDEname -list]')

After the new technical user is authorized, you can modify the authentication alias with the new principal name and password.

To remove access for old user...

- AdminTask.maintainDocumentStoreAuthorization('[-deName myDEname -remove cn=oldTechnicalUser,o=defaultWIMFileBasedRealm]')
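The order of operations for switching the technical user can be sketched as follows. This is an illustration only: `authorization_args` and `switch_technical_user_steps` are hypothetical helper names that build the argument strings shown above; they do not call wsadmin themselves.

```python
def authorization_args(de_name, action, principal=None):
    """Build the argument string for AdminTask.maintainDocumentStoreAuthorization."""
    if action == "list":
        return "[-deName %s -list]" % de_name
    if action in ("add", "remove"):
        if not principal:
            raise ValueError("a principal is required for -%s" % action)
        return "[-deName %s -%s %s]" % (de_name, action, principal)
    raise ValueError("unknown action: %s" % action)


def switch_technical_user_steps(de_name, new_principal, old_principal):
    """Return the argument strings in the order the text describes."""
    return [
        # 1. Authorize the new technical user first.
        authorization_args(de_name, "add", new_principal),
        # 2. Confirm that the new principal is listed.
        authorization_args(de_name, "list"),
        # 3. After the authentication alias is updated, remove the old user.
        authorization_args(de_name, "remove", old_principal),
    ]
```

In a wsadmin Jython session, each returned string would be passed to `AdminTask.maintainDocumentStoreAuthorization(...)`. The authentication alias is updated between the second and third calls.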

Change the authentication alias

To change the authentication alias mapped to the EmbeddedECMTechnicalUser role:

- AdminTask.updateDocumentStoreApplication('[-deName myDEname]')

If your new authentication alias uses a different user than the original user, also follow the instructions in the section "Change the technical user" above.

Reconfigure the user registry

Authorization to the BPM document store is based on unique IDs. If the BPM document store was initialized during initial server startup, only the same user (with the same unique ID) can manage the BPM document store and access its documents. If you change your user registry configuration (for example, by removing the file-based repository in order to use only an LDAP server in federated repositories), a user with the same user ID and password in LDAP will not have access to the BPM document store. This is also true if you simply delete a user and recreate one with the same user ID. In this situation, you lose access to the BPM document store, and you need to roll back the configuration change.

Duplicate users are not permitted in federated repositories, which means that you cannot connect to an LDAP server that contains the same users that you have in your file-based repository. You need to remove the file-based repository and add the LDAP repository. A user in LDAP with the same user ID does not have access to the BPM document store. As a result, you may choose to authorize all authenticated users to work with the BPM document store for the duration of the reconfiguration (while access has been shut down through the HTTP server).

You can use the special key word #AUTHENTICATED-USERS to authorize all users to the BPM document store who successfully authenticate:

- AdminTask.maintainDocumentStoreAuthorization('[-deName De1 -add #AUTHENTICATED-USERS]')

After this configuration has been completed, you can safely re-configure your user registry without losing access to the BPM document store. After the configuration change is complete and the cell is restarted, you can authorize a new user and remove the old user as well as the #AUTHENTICATED-USERS entry.

Get BPM document store status

The getDocumentStoreStatus command is used to obtain:

- The availability of the BPM document store.

- The status of document migration to the BPM document store.

- The status of the IBM_BPM_DocumentStore application and whether it is up-to-date in comparison to the authentication alias and the EAR file version.

To get status...

- AdminTask.getDocumentStoreStatus('[-deName myDeName]')

CWTDS2018I: The BPM document migration has not yet started. '{0}' documents need to be migrated.
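To run the status check non-interactively, the call can be wrapped in a wsadmin command line that uses `-lang jython -c`. The sketch below only builds the argument list. `wsadmin_status_command` is a hypothetical helper name, and the `wsadmin.sh` suffix assumes a UNIX-like installation.

```python
def wsadmin_status_command(profile_root, user, password, de_name):
    """Build the argv list for a one-shot getDocumentStoreStatus call."""
    # The Jython statement that wsadmin executes through -c.
    jython = "print AdminTask.getDocumentStoreStatus('[-deName %s]')" % de_name
    return [
        profile_root + "/bin/wsadmin.sh",
        "-user", user,
        "-password", password,
        "-lang", "jython",
        "-c", jython,
    ]
```

The list can be handed to `subprocess.run` without a shell, which avoids quoting problems with the nested quotes. Note that a password passed on the command line may be visible to other local users in the process list.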

Migrate document attachments to the BPM document store

The startDocumentStoreMigration command is used to migrate document attachments from the BPM database to the BPM document store. The migration of document attachments to the BPM document store is considered to be a required BPM post-migration task, and it should be done after the database migration has been completed.

The original versions of the document attachments will continue to reside in the BPM database until all documents have been migrated.

After the migration is complete, use either coaches or heritage coaches to work with BPM documents in the BPM document store.

When document attachments are being migrated to the BPM document store, they are temporarily stored twice in the BPM database. Ensure the database has sufficient storage to accommodate the twice-stored documents before starting the document migration.

The BPM document store must be used with a Federated Repositories user registry. If you migrate your documents to the BPM document store, and you later change to another form of user registry, such as a stand-alone LDAP registry or a custom registry, you may lose access to your documents.

The BPM document store restricts document size to 1 gigabyte or less. If the content of any document attachment in the BPM database exceeds 1 gigabyte, you cannot migrate the document attachment to the BPM document store. The document attachment will remain in the BPM database, but a reference to the document attachment will be created in the BPM document store. You can access the content of the document attachment through APIs and CMIS operations as if the document had been completely migrated.

To migrate document attachments to the BPM document store:

- Run the getDocumentStoreStatus command.

The following shows the command syntax and the status message that is returned before any migration has started:

AdminTask.getDocumentStoreStatus('[-deName myDeName]')

CWTDS2018I: The BPM document migration has not yet started. '{0}' documents need to be migrated.

To pass more parameters for the command:

cd profile_root/bin/

./wsadmin.sh -user my_user_name -password my_password -lang jython -c "print AdminTask.getDocumentStoreStatus('[-deName myDeName]')"

For example:

cd DMGR_PROFILE/bin

./wsadmin.sh -user tw_admin -password tw_admin -lang jython -c "print AdminTask.getDocumentStoreStatus('[-deName De1]')"

- Run the startDocumentStoreMigration command.

The following shows the command syntax:

AdminTask.startDocumentStoreMigration('[-deName myDeName]')

To specify other parameters for the command:

cd profile_root/bin/

./wsadmin.sh -user my_user_name -password my_password -lang jython -c "print AdminTask.startDocumentStoreMigration('[-deName myDeName]')"

For example:

cd DMGR_PROFILE/bin

./wsadmin.sh -user tw_admin -password tw_admin -lang jython -c "print AdminTask.startDocumentStoreMigration('[-deName De1]')"

- Run the getDocumentStoreStatus command again to check the status of the document migration. If the migration is proceeding successfully or has completed successfully, the command will return one of the following messages:

CWTDS2019I: The BPM document migration is running. '{0}' of '{1}' documents are already migrated.

CWTDS2020I: The BPM document migration is running. '{0}' of '{1}' documents are already migrated. A cleanup is currently in progress.

CWTDS2021I: The BPM document migration has finished. '{0}' documents were migrated.

If one or more documents fail to migrate successfully, the getDocumentStoreStatus command may return one of the following messages:

CWTDS2022I: The BPM document migration has stopped with an error. '{0}' of '{1}' documents are already migrated. For '{2}' documents, the migration failed.

CWTDS2023I: The migration failed for document '{0}'. Details: '{1}'.

- If a message indicates that one or more of the documents has failed to migrate successfully, complete one of the following steps:

- If all of the documents failed to migrate successfully, check the migration configuration and the logs for a general problem, such as a problem with the database connection.

- If the logs indicate an OutOfMemoryError condition, try increasing the heap size of the JVM for the period of time in which the migration will take place. Alternatively, try reducing the maximum number of documents that can be migrated in parallel to the BPM document store.

- If the logs indicate transaction timeouts, there may be very large documents that failed to migrate within one transaction. Try raising the transaction timeout temporarily by following the instructions in the topic "Transaction service settings." Alternatively, you can run the startDocumentStoreMigration command with the -keepFailedDocuments option.

- If some of the documents failed to migrate successfully, you can choose to retain the content of these documents in the BPM database, and only create references for the documents in the BPM document store. The legacy document APIs and ECM operations will continue to work with the documents in the BPM database. To retain the content of the documents in the BPM database, and only create references for the documents in the BPM document store, run the startDocumentStoreMigration command with the -keepFailedDocuments option:

AdminTask.startDocumentStoreMigration('[-deName myDeName -keepFailedDocuments]')

After the document migration has completed, the database tables LSW_BPD_INSTANCE_DOCUMENTS and LSW_BPD_INSTANCE_DOC_PROPS should be empty in the Process Server database. However, if the database contained documents larger than 1 gigabyte or if the -keepFailedDocuments option was used, the database may contain a few remaining rows. If you want, you can optionally reorganize the tables to release the disk space that was used by the deleted table rows. For example, for DB2 databases, you can reorganize the tables using the REORG and RUNSTATS commands.
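When the status is polled from a script, the CWTDS message codes listed above can be mapped to a migration state. The following is a minimal sketch: `parse_status` and `MIGRATION_STATES` are hypothetical names, and it assumes that the `'{0}'`-style placeholders are replaced by quoted integers in real output.

```python
import re

# Codes and meanings are taken from the status messages listed above.
MIGRATION_STATES = {
    "CWTDS2018I": "not started",
    "CWTDS2019I": "running",
    "CWTDS2020I": "running (cleanup in progress)",
    "CWTDS2021I": "finished",
    "CWTDS2022I": "stopped with error",
    "CWTDS2023I": "document failed",
}


def parse_status(message):
    """Return (code, state, counts) for one status message line."""
    match = re.match(r"\s*(CWTDS\d{4}[A-Z]):", message)
    if not match:
        raise ValueError("not a CWTDS message: %r" % message)
    code = match.group(1)
    state = MIGRATION_STATES.get(code, "unknown")
    # Assumption: numeric placeholders appear as quoted integers, e.g. '5'.
    counts = [int(n) for n in re.findall(r"'(\d+)'", message)]
    return code, state, counts
```

A polling loop could stop when the state is "finished" or "stopped with error" and report the counts in between.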

Manage tracing for the BPM document store

The maintainDocumentStoreTrace command is used to enable or disable tracing for an individual component or all components of the BPM document store.

Update the BPM document store application

The updateDocumentStoreApplication command is used to update the installed application IBM_BPM_DocumentStore in a deployment target. An update of the application is generally required if an iFix has been installed for the BPM document store or if the role type mapping has changed for the EmbeddedECMTechnicalUser role.

Modify configuration parameters

The BPM document store has some configuration parameters that can be read and modified using wsadmin scripting.

These configuration parameters are:

- cmisUrl

- numberOfParallelDocumentMigrationWorker

The cmisUrl parameter is used to customize the CMIS URL used by the BPM server. By default, the value of the parameter is not set and a local HTTPS connection is used with /fncmis as the context root. For performance reasons, you could replace the cmisUrl with an unencrypted local URL:

http://local_Http_Proxy_Server_In_Secure_Network_Zone/fncmis

However, the credentials of the technical user will be sent in an HTTP basic authentication header and WS-Security Username Token over this connection. As a result, using an unencrypted URL is discouraged from a security perspective.
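Before a custom cmisUrl value is applied, a quick check can flag URLs that would send the technical user's credentials unencrypted. This is a small sketch in plain Python 3, run outside wsadmin; `sends_credentials_in_clear` is a hypothetical helper name.

```python
from urllib.parse import urlparse


def sends_credentials_in_clear(cmis_url):
    """Return True if the CMIS URL uses plain HTTP rather than HTTPS."""
    scheme = urlparse(cmis_url).scheme.lower()
    if scheme not in ("http", "https"):
        raise ValueError("unexpected scheme in CMIS URL: %r" % cmis_url)
    return scheme == "http"
```

A deployment script could refuse such a value, or require an explicit override when the URL points to a proxy inside a secure network zone.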

The numberOfParallelDocumentMigrationWorker parameter specifies the maximum number of documents that can be migrated in parallel to the BPM document store. By default, the value of this parameter is set to 10.

The following wsadmin (Jython) examples show how to read and modify the configuration properties for the BPM document store:

# List all BPM document stores. If there is only one deployment environment, then there is only one document store.

docStores = AdminUtilities.convertToList(AdminConfig.list("BPMDocumentStore"))

# Read the first BPM document store

docStore = docStores[0]

# Show one specific attribute of the BPM document store

AdminConfig.showAttribute(docStore, "cmisUrl")

# Update one specific attribute of the BPM document store

AdminConfig.modify(docStore, [ ["cmisUrl", "new value"] ])

AdminConfig.modify(docStore, [ ["numberOfParallelDocumentMigrationWorker", 5] ])

# Save the configuration

AdminConfig.save()

Limitations in administering the BPM document store

The document store is only available when Federated Repositories is used as the user registry

An exception may be thrown during document store logging and tracing operations

Although the BPM document store is not available in these situations, you can continue to work with your document attachments in the BPM database or you can configure an external ECM system for storing your documents. In either situation, the legacy document APIs will continue to be used. Any ECM operations that specify the BPM document store as a server will fail.

The document store is only available when Federated Repositories is used as the user registry

The BPM document store is only available when Business Process Manager is configured to use Federated Repositories as the user registry. If you are using a different user registry configuration, you should disable the autostart mechanism of the IBM_BPM_DocumentStore application.

To use the BPM document store with your LDAP users and groups, configure LDAP as a repository in Federated Repositories instead of using stand-alone LDAP as the user registry.

An exception may be thrown during document store logging and tracing operations

When the server is started or the BPM document store commands maintainDocumentStoreTrace or updateDocumentStoreApplication are run, the following SystemErr exception may be logged:

[3/19/13 23:31:12:548 PDT] 00000084 SystemErr R log4j:WARN No appenders could be found for logger (filenet_error.api.com.filenet.apiimpl.util.ConfigValueLookup).

[3/19/13 23:31:12:548 PDT] 00000084 SystemErr R log4j:WARN Please initialize the log4j system properly.

[3/19/13 23:31:14:482 PDT] 00000084 SystemErr R log4j:WARN Configuring default logging to the file E:\IBM\WebSphere\AppServer\profiles\Custom01\FileNet\testDE1.AppCluster.WIN-E6GDL89KDJDNode01.0\p8_server_error.log

[3/19/13 23:31:49:536 PDT] 00000084 SystemErr R log4j:WARN No appenders could be found for logger (filenet_error.api.com.filenet.apiimpl.util.ConfigValueLookup).

[3/19/13 23:31:49:536 PDT] 00000084 SystemErr R log4j:WARN Please initialize the log4j system properly.

[3/19/13 23:33:25:867 PDT] 00000108 SystemErr R SLF4J: Class path contains multiple SLF4J bindings.

The exception is caused by the tracing and logging mechanism used with the BPM document store. The exception does not result in any operational problems and can be safely ignored.

Administer Process Portal

You can configure various aspects of the Process Portal environment, such as setting up access to various functions, and creating saved searches.

Process Portal dashboards: Authorization overview

Process Portal includes a Process Performance and a Team Performance dashboard. Users must be authorized to access the dashboards and for the management actions that are available in each of the dashboards.

Access to Process Portal dashboards

Access to the Team Performance and Process Performance dashboards is determined by the teams who are assigned to the dashboards in the Process Portal application. These teams and the default security groups that are assigned to them are defined in the System toolkit. You can change the default security groups or members of the team in the Process Admin Console.

Default authorization for each of the dashboards.

| Dashboard | Team | Security groups in the team |
|---|---|---|
| Process Performance | Process Owner | tw_process_owners, tw_allusers. All users typically need access to the dashboard so they can navigate to the details for a process instance. To restrict access to process owners only, use the Process Admin Console to remove the tw_allusers group from the Process Portal snapshot (Installed Apps > Team Bindings). |
| Team Performance | Managers | tw_managers. The tw_managers group includes the tw_allusers group by default. To restrict access to a set of manager users only, use the Process Admin Console to remove the tw_allusers group and include a set of managers... |

Actions in the Process Performance dashboard

To manage a process and its instances, users require authorization to access the individual process and they must be authorized for actions on the process and its instances.

Required authorization...

| Action | Authorization |
|---|---|
| Access the dashboard for a specific process and its instances | A member of the team that is assigned to the Expose Performance Metrics setting for the business process in Process Designer. |
| Act on a process instance, for example, change the projected path or the instance due date | A member of the security group that is assigned to the relevant Process Portal action policies. See Configuration properties for Process Portal action policies. |

In addition, some features in the dashboards are available only when certain settings are applied to the business process in Process Designer. See Enable process instance management.

Actions in the Team Performance dashboard

To manage the work for a team, users must be a member of a team of managers and they must be authorized for some actions on tasks.

Required authorization...

| Action | Authorization |
|---|---|
| Access the dashboard for a specific team and its members | A member of a team of managers defined in Process Designer. See Defining team managers. |
| Change the due date or the priority of a task | A member of the security group that is assigned to the relevant Process Portal action policies. See Configuration properties for Process Portal action policies. |

The System Data toolkit also contains an All Users team. The Managers of All Users team is the manager team for the All Users team and the teams in the sample that is delivered with BPM.

The tw_allusers_managers group is the security group for the Managers of All Users team. This security group includes the tw_admins group by default. Members of the tw_admins group can therefore see the All Users team and the sample teams in the Team Performance dashboard. To remove the tw_admins group or add members to the tw_allusers_managers group, use the Process Admin Console.

Enable Process Portal to run in an HTML frame

By default, login pages and index pages cannot be displayed inside an HTML frame. Enable Process Portal to run inside an HTML frame by changing the variable...

- com.ibm.bpm.social.enableRunInFrame

When someone tries to display a Process Portal page inside an HTML frame, login pages and index pages are configured by default to redirect the browser so that it displays the page itself instead of the frame. This default alleviates security concerns. However, your configuration might have legitimate requirements to display Process Portal pages inside HTML frames.

For example, in v8.5.0.1 a team might want to view Process Portal data inside a Microsoft SharePoint site.

- Open the administrative console and click Resources > Resource Environment > Resource Environment Provider > Mashups_ConfigService > Additional Properties > Custom properties.

- Change the variable...

- com.ibm.bpm.social.enableRunInFrame = true

- Click OK and then save your changes to the master configuration.

- Restart the application server instance.

Process Portal can run inside an HTML frame.

For v8.5.0.1 you can view data from Process Portal inside a Microsoft SharePoint site.

Enable email for Process Portal notifications

Process Portal users can set their preferences to receive an email notification when a new task is assigned to them. The configuration works for all types of email. To use this capability, enable the email environment to send notifications.

Verify the following components are stopped:

- Process Center server

- Process Server

If Process Portal users are using email with an IBM Lotus Domino V9 server, they can complete Process Portal tasks directly from their email notifications. To make sure the integration is set up correctly, complete the following prerequisite tasks:

- Configure single sign-on with an LTPA token on IBM WAS and IBM Lotus Domino.

- Verify the Domino server is set up properly by following the steps in the IBM Lotus Domino documentation:

- Set up Domino Web SSO authentication between the iNotes server and IM server (part of the overall topic for setting up iNotes and IM)

- Configure the component

- Complete the configuration

- Set up SSO in IBM Lotus Notes.

- Create an XML file that you import into IBM Lotus Domino. Customize the following example:

<?xml version="1.0" encoding="UTF-8"?>
<webcontextConfiguration version="1.1">
  <palleteItem contributeTabOnStartup="false" contributeToSideshelfOnStartup="false" description="Embedded Experience OpenSocial gadget used to display Coach" hideThumbnail="false" imageUrl="" providerId="com.ibm.rcp.toolbox.opensocial.provider.internal.OpenSocialPalleteProvider" singletonSidebar="false" url="http://bpm80.swg.usma.ibm.com:9080/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml" viewImageUrl="">
    <preferences/>
    <data>
      <object-capabilities url="http://[hostname]:[WC_defaulthost port]/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml">
        <grant-feature id="opensocial-data"/>
        <grant-feature id="opensocial-templates"/>
        <grant-feature id="opensocial-1.0"/>
        <grant-feature id="dynamic-height"/>
        <grant-feature id="embedded-experiences"/>
        <grant-feature id="open-views"/>
        <grant-feature id="settitle"/>
        <grant-feature id="osapi"/>
        <grant-feature id="content-rewrite"/>
        <grant-feature id="embedded-experiences-render"/>
        <grant-feature id="core"/>
      </object-capabilities>
    </data>
  </palleteItem>
</webcontextConfiguration>

- Add the email server to the trusted servers list to prevent problems with sizing in Process Portal.

- The email facility (used for task notification, task assignment, and so on) must be configured to use a local SMTP server that is listening on the default port (25) and does not require authentication. This local SMTP server can then be used to forward emails to any other external SMTP that requires authentication.

- If you are using BPM V8.5.0.0, complete the following additional tasks:

- Make sure that SSO is configured with the same domain in both BPM and IBM Lotus Domino. The BPM domain specified in 99Local.xml must match your Domino server. If the domain does not match, edit 100Custom.xml.

Update the domain in the <gadget-link> tag that is in the <email> element; for example, <gadget-link>http://bpm80.swg.usma.ibm.com:9080/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml</gadget-link>.

- Use the same security protocol for both the BPM server and the email server. This prevents an issue where Process Portal users see a blank task completion view in email. For example, use HTTPS for both the BPM server and the email server, or use HTTP for both. If the environment uses HTTPS for one of the servers and HTTP for the other, copy the BPM server URLs from 99Local.xml under the <email> section and paste them into 100Custom.xml. Then edit the URLs so that the protocol matches your email server.

For editing 100Custom.xml, follow the instructions in The 99Local.xml and 100Custom.xml.

Edit the files according to the following procedure.

The entries in the email properties section in the 99Local.xml configuration file define the properties for your email environment. Make all required modifications to 100Custom.xml.

Do not edit 99Local.xml.

If you are using BPM V8.5.0.1...

- Open 99Local.xml and locate the email properties section.

- Open 100Custom.xml and copy and paste the email properties section into 100Custom.xml.

- In the <email> element, insert values appropriate for the environment.

- <smtp-server> element - Valid SMTP server.

- <default-from-address> element - Valid email address.

Values set for the <email> element in 100Custom.xml.

<server merge="mergeChildren">
  <email merge="mergeChildren">
    <!-- SMTP server that mail should be sent to -->
    <smtp-server merge="replace">smtp.example.com</smtp-server>
    <valid-from-required merge="replace">true</valid-from-required>
    <default-from-address merge="replace">username@example.com</default-from-address>
    <send-external-email merge="replace">true</send-external-email>
  </email>
</server>

Save changes.

- To force URLs included in emails to go through a network router, see the following scenario keys...

- SERVER_EMAIL_GADGET_LINK

- SERVER_EMAIL_PORTAL_LINK

- SERVER_EMAIL_PORTAL_PROCESS_INFO_LINK

- SERVER_EMAIL_PORTAL_RUN_TASK_LINK

- SERVER_EMAIL_TEMPLATE_CLIENT_LINK

- Restart the cluster.
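Before the cluster is restarted, the `<email>` override for 100Custom.xml can be checked for the two values that the steps above require. This is a minimal sketch in plain Python 3: `missing_email_settings` is a hypothetical helper name, and it assumes that the fragment is rooted at `<server>` as in the example above.

```python
import xml.etree.ElementTree as ET

# Elements that the procedure says must hold valid values.
REQUIRED = ("smtp-server", "default-from-address")


def missing_email_settings(custom_xml):
    """Return the required <email> child elements that are absent or empty."""
    root = ET.fromstring(custom_xml)
    # Accept a fragment rooted at <email> or one that contains it.
    email = root if root.tag == "email" else root.find(".//email")
    if email is None:
        return list(REQUIRED)
    missing = []
    for name in REQUIRED:
        node = email.find(name)
        if node is None or not (node.text or "").strip():
            missing.append(name)
    return missing
```

An empty list means that both elements are present and non-empty. The check does not verify that the SMTP server is reachable or that the address is deliverable.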

If you are using BPM V8.5.0.0...

- Open 99Local.xml and locate the email properties section.

- Open 100Custom.xml and copy and paste the email properties section into 100Custom.xml.

- In the <email> element, insert values appropriate for the environment.

- <smtp-server> element - Valid SMTP server.

- <default-from-address> element - Valid email address.

- To change the URL for the Process Portal Server, modify the BPMVirtualHostInfo configuration object

You might change the BPMVirtualHostInfo configuration object to be sure a fully qualified host name is used for the BPM server, to change the default transport protocol setting to use HTTPS instead of HTTP, or if your Domino server is on a different domain than the Process Portal server.

For example:

cd INSTALL_HOME/bin

./wsadmin.sh -conntype NONE -lang jython

wsadmin>print AdminConfig.showall(AdminConfig.list('BPMVirtualHostInfo'))

[port -1]

[transportProtocol https]

wsadmin>AdminConfig.modify(AdminConfig.list('BPMVirtualHostInfo'),[['port','9443']])

wsadmin>AdminConfig.modify(AdminConfig.list('BPMVirtualHostInfo'),[['hostname','myhostname.ibm.com']])

wsadmin>AdminConfig.modify(AdminConfig.list('BPMVirtualHostInfo'),[['transportProtocol','https']])

wsadmin>print AdminConfig.showall(AdminConfig.list('BPMVirtualHostInfo'))

[hostname myhostname.ibm.com]

[port 9443]

[transportProtocol https]

wsadmin>AdminConfig.save()

- Restart the cluster.

If the email gadget fails to render in the Notes Client or iNotes, verify the following items:

- SSO has been configured properly between the Domino server and the BPM server.

- Check the Domino server error logs.

The Domino server logs can be found in...

-

Domino_root/data/domino/workspace/logs

If you see an error similar to the following error, import the BPM certificate into the Domino server:

-

Certificate, OU=Cellname, OU=Dmgr, O=IBM, C=US, is not trusted. Validation failed with error 3659.

To import the certificate into the Domino server:

- Import an Internet certifier into the Domino Directory.

- Create an Internet cross-certificate in the Domino Directory from a certifier document.

To have process participants receive email notifications, ask them to update their user preferences.

Configure IBM Connections integration for task notifications

Process Portal users can set their preferences to receive a notification in IBM Connections when a new task is assigned to them. To use this capability, configure the integration with IBM Connections.

For Process Portal users to receive notifications about tasks in IBM Connections, you must have IBM Connections V4 or later. You can use only business cards in IBM Connections.

BPM must be configured to use the same user repository the Connections server uses.

When configuring IBM Connections, consider the following guidance:

- Verify the Connections user ID specified in the Connections Server profile in Process Designer has authority to post to the Connections stream, which means the user is a member of the trustedExternalApplication security role in the WidgetContainer application running on Connections.

- Verify the Connections access role is configured properly on the Connections server. Follow the steps in Configure widgets and select the option...

- Use SSO token

This ensures that users can open tasks from links in the task notifications visible in the Connections stream.

- Verify the correct port is specified in the Process Designer IBM Connections server definition.

If no port is specified, the default port 443 is used.

- Verify the Connections HTTP server is running.

- Verify the BPM domain specified in 99Local.xml matches the Connections server.

- Verify the same realm name is set for the BPM server and the Connections server.

- Add the Connections server to the trusted servers list to prevent problems with sizing in Process Portal.

- If you are using BPM V8.5.0.0, using the same security protocol for both the BPM server and the Connections server prevents an issue where Process Portal users see a blank task completion view in IBM Connections server.

For example, use HTTPS for both the BPM server and the Connections server, or use HTTP for both the BPM server and the Connections server. If the environment uses HTTPS for one of the servers, and HTTP for the other server, copy the relevant BPM server URLs from 99Local.xml under the <connections-task-notification> section and paste them into 100Custom.xml. Then edit the URLs so the SSL protocol matches the Connections server.
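The protocol-matching edit described above can be sketched as a small URL rewrite. This is an illustration under stated assumptions, not a BPM utility: align_scheme is a hypothetical helper, the host names are placeholders, and the optional port argument is included because an HTTPS endpoint usually listens on a different port than its HTTP counterpart.

```python
from urllib.parse import urlsplit, urlunsplit


def align_scheme(bpm_url, connections_url, port=None):
    """Return bpm_url rewritten to use the scheme of connections_url."""
    target_scheme = urlsplit(connections_url).scheme
    parts = urlsplit(bpm_url)
    netloc = parts.netloc
    if port is not None:
        # Replace the port when the target protocol uses a different one.
        netloc = "%s:%d" % (parts.hostname, port)
    return urlunsplit((target_scheme, netloc, parts.path, parts.query, parts.fragment))


# Placeholder hosts and ports, for illustration only.
rewritten = align_scheme(
    "http://bpm.example.com:9081/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml",
    "https://connections.example.com/",
    port=9444,
)
```

The rewritten URLs would then be pasted into the <connections-task-notification> section of 100Custom.xml.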

To configure the integration for task notifications in IBM Connections:

- Configure the BPM server to use the same LDAP server the Connections server uses.

- Enable SSO for the BPM server.

- In the administrative console, select...

-

Security | Global Security | Authentication cache settings | Web and SIP security | Single sign-on (SSO) | Enabled | Interoperability Mode | Web inbound security attribute

Make sure to include the correct domain name and change the cookie names to match the environment.

Add a period before the domain name, for example .ibm.com.

- Configure cross-cell security for the BPM server and the Connections server.

- Extract the root SSL certificate from the Connections server.

- Select...

-

Security | SSL certificate and key management | Key stores and certificates | DefaultTrustStore | Signer certificates | Retrieve from port

- Set the host name and SSL port (the admin host secure port) of the remote Process Center server.

- Specify an alias to use for the root signer.

- Click Retrieve signer information and verify the retrieved signer information is correct.

Save the root signer in the local truststore.

To retrieve the root signer of the Process Portal server, repeat the previous steps on the Connections server.

- Export the LTPA key from the Connections server, and import it into the keystore of the Process Portal server.

Sharing LTPA keys is required for configuring cross-cell security for the BPM server and the Connections server.

When multiple cells are involved, one set of LTPA keys is shared among them. Therefore, administrators must plan which set of LTPA keys to use in the organization and ensure the automatic LTPA key generation is turned off. Otherwise, if a new set is generated, the cells can become unsynchronized.

- In the administrative console of the remote IBM Connections server, select...

-

Security | Global Security | Authentication | LTPA

- In the Cross-cell single sign-on section, type a new password and a fully qualified key file name.

- Click Export keys.

- Transfer the exported key file in binary mode to the file system of the local Process Portal server. Then import the keys by repeating the previous steps on the administrative console of the Process Portal server and clicking Import keys instead of Export keys.

- Verify that cross-cell security is configured correctly.

- Log in to the Connections server.

- In the same browser session, launch the URL for the BPM server.

If security is configured correctly, you are not prompted to log in to the BPM server.

- If you are using BPM V8.5.0.1 and you want to customize the URLs of links to gadgets, configure the optional scenario keys SERVER_ACTIVITY_STREAM_IMAGE_LINK and SERVER_TASK_NOTIFICATION_GADGET_LINK.

By default, links to widgets are generated using the EXTERNAL_CLIENT scenario key, which points to the BPM server or, if you have one, the web server.

- Enable task notifications in IBM Connections on the server by editing 100Custom.xml.

- Insert the following code in the <server> section of the file:

<connections-task-notification merge="mergeChildren">
  <!-- Change the value to true in order to enable connections task notification -->
  <enable-connections-task-notification merge="replace">true</enable-connections-task-notification>
</connections-task-notification>

- If you are using BPM V8.5.0.0 and if the Connections server and the BPM server are on different domains, edit the <connections-task-notification> section of 100Custom.xml to use the Connections server instead of the BPM server:

change

- <gadget-link>http://bpm_host.ibm.com:9081/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml</gadget-link>

to

- <gadget-link>http://bpm_host.other_domain.com:9081/ProcessPortal/gadgets/OpenSocial/BPMOpenSocialGadget.xml</gadget-link>

- Restart the deployment manager, nodes, and clusters.

In Process Designer, enable the Connections integration.

For all Process Portal users, make sure the email addresses in their BPM user profiles match the email addresses in their IBM Connections user profiles.

So that process participants receive notifications in IBM Connections, ask them to update their user preferences.

Configure Sametime Connect integration

Before you begin, start Process Portal.

Requirements...

- The two environments must share a common user registry, and it must be available as a base entry in the BPM federated repositories.

- To support the use of short names and email addresses for system login, the federated repository entry must contain login properties of either uid, mail, or uid;mail

- The two environments must share the same federated repositories realm name.

- The two environments must share an LtpaToken for single sign-on functionality. This requires that common domain names and interoperability mode are enabled globally.

- You must import LTPA keys from one environment to the other.

- If you use SSL communication, it must be enabled on all the servers in the configuration, including the Process Center server, Process Server, and Sametime Connect server. Normal SSL configuration is required, including certificate exchange across all servers.

If you are using BPM V8.5.0.1 to configure Sametime Connect integration:

- Configure BPM and Sametime Connect to share the same user registry and the same federated repositories realm name.

- Configure BPM and Sametime Connect to share an LtpaToken for SSO functionality. This requires common domain names and interoperability mode to be enabled in...

-

Global security | Single sign-on (SSO)

- Import LTPA keys from one environment to the other.

For example, either import the Sametime Connect LTPA keys into BPM or import the BPM LTPA keys into Sametime Connect.

- Configure the Sametime Proxy Server so the domain specified in the SSO settings is in the allowed list of domains.

All subsequent access to Process Portal and Sametime Connect must be done through a host name that ends in this domain name.

- Log in to the Sametime System Console as the Sametime administrator and select...

-

Sametime Servers | Sametime Proxy Servers | server_name

- In the Domain list of Sametime Proxy Server section, enter the same domain name used in the WAS SSO settings, for example, ibm.com.

- Click OK and restart all the WAS-based Sametime servers.

- Configure the Process Portal endpoint.

Configure the PROCESS_PORTAL_JS scenario key to use the strategy...

...and point to a virtual host information object that identifies the Process Portal server.

See the entry for PROCESS_PORTAL_JS.

- Restart the BPM servers.
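The requirement that Process Portal and Sametime Connect are accessed through a host name ending in the SSO domain can be checked before users are given a URL. The following is a minimal sketch; host_in_sso_domain is a hypothetical helper, and it accepts the domain with or without the leading period that the SSO settings use.

```python
def host_in_sso_domain(host, sso_domain):
    """Return True if host falls under the SSO domain (for example .ibm.com)."""
    domain = sso_domain.lower().lstrip(".")
    host = host.lower().rstrip(".")
    # Match the domain itself or any host name below it, never a mere suffix
    # such as notibm.com.
    return host == domain or host.endswith("." + domain)


portal_ok = host_in_sso_domain("portal.ibm.com", ".ibm.com")
other_ok = host_in_sso_domain("portal.example.org", ".ibm.com")
```

Comparing on a label boundary avoids accepting a host whose name merely ends with the same characters as the domain.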

If you are using BPM V8.5.0.0 to configure Sametime Connect integration:

- Configure BPM and Sametime Connect to share the same user registry and the same federated repositories realm name.

- Configure BPM and Sametime Connect to share an LtpaToken for SSO functionality. This requires common domain names and interoperability mode to be enabled in...

-

Global security | Single sign-on (SSO)

- Import LTPA keys from one environment to the other.

For example, either import the Sametime Connect LTPA keys into BPM or import the BPM LTPA keys into Sametime Connect.

- Configure the Sametime Proxy Server so the domain specified in the SSO settings is in the allowed list of domains. All subsequent access to Process Portal and Sametime Connect must be done through a host name that ends in this domain name.

- Log in to the Sametime System Console as the Sametime administrator.

- Click...

-

Sametime Servers | Sametime Proxy Servers | server_name

- In the Domain list of Sametime Proxy Server section, enter the same domain name used in the WAS SSO settings, for example, ibm.com.

- Click OK and restart all the WAS-based Sametime servers.

Process Portal is now configured for Sametime Connect. When you use Process Portal, you see the standard Sametime Connect icons and team member information integrated within the expert and participant information.

In Process Designer, enable the process applications to expose in Process Portal for Sametime Connect integration.

Create and maintain saved searches for Process Portal

By saving searches, you can provide Process Portal users with customized views of their tasks, for example, to include specific business data. Saved searches are displayed in the Saved Searches tab of the Process Portal interface. In addition, the Tasks and Processes widgets in Business Space use saved searches to list process instances and tasks.

The Saved Search Admin BPM system application (SSA) contains the human service that allows users to save searches. The human service is exposed as an administrative service so that it appears within the Process Admin console.

To enable the globalization of saved search names, use localization keys for the Process Portal application. Define the keys in Process Designer by expanding Process Portal, clicking Setup, and opening the resource bundle group called ProcessPortal. Consider the following guidance:

- Do not use special characters (for example: * or !) other than a period.

- Start the saved search name with a letter.

- Do not use more than 30 characters.

- After you add a key to a resource bundle group to use in a saved search, restart Process Center and restart the server.

- For Process Server, use a resource bundle group that is in the default Process Portal version. Restart Process Server after the snapshot is activated.

- If you define a value for a localization key, define a default value. Defining a default value prevents the problem of the Organize tabs list displaying blank items for the saved search names when Process Portal users sign in with a language that is not part of the Process Portal globalization plan.

- Use localization keys in the following format:

- savedsearch.label.name_of_saved_search
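The naming guidance above can be checked before a key is added to the resource bundle group. This is a sketch under assumptions: the helper names are hypothetical, the 30-character limit is assumed to apply to the saved search name rather than to the full key, and underscores are assumed to be acceptable because the format placeholder itself contains them.

```python
import re

KEY_PREFIX = "savedsearch.label."


def check_saved_search_name(name):
    """Return a list of guideline violations for a saved search name."""
    problems = []
    if not name[:1].isalpha():
        problems.append("must start with a letter")
    if len(name) > 30:
        problems.append("must not exceed 30 characters")
    # Assumption: letters, digits, underscores, and periods are allowed.
    if re.search(r"[^A-Za-z0-9_.]", name):
        problems.append("contains a special character other than a period")
    return problems


def localization_key(name):
    """Build the localization key, refusing names that break the guidance."""
    problems = check_saved_search_name(name)
    if problems:
        raise ValueError("%s: %s" % (name, "; ".join(problems)))
    return KEY_PREFIX + name


key = localization_key("Open.Claims")
```

Returning every violation at once, rather than stopping at the first, makes it easier to correct a name in one pass.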

- In the Server Admin area of the Process Admin Console, click Saved Search Admin.

Save a search.

- In the Select Search section, select Define New Search, click Select, and name the saved search.

- Choose the columns that are displayed in the search results by clicking Add in the Columns section.

- Set the search conditions by clicking Add in the Conditions section. The search conditions determine which tasks are shown on the Saved Searches tab in Process Portal.

- Select the columns to sort the results by, and then select the sort order.

- Go to the Search Organized By list and select Task.

If you select ProcessInstance from the list, only the first task of the process instance that matches the search criteria is returned.

- Test the search to make sure that it returns the results that you were expecting by clicking Search.

- To make the search available in Process Portal and Business Space, click...

-

Save New Search

- Update an existing saved search. Select a search from the list in the Select Search section and click Select. Change the search criteria, and save the search.

Process Portal users see the new or updated saved search the next time their Saved Searches content is refreshed.

Reset the Process Portal start page for a user

The My Tasks > Open Tasks view is the Process Portal default start page. Users can bookmark a different view or page as their start page, and then return to using the default start page as needed.

Although users can reset their own start page in Process Portal, sometimes, it might be necessary for someone with administrative privileges to reset the start page on a user's behalf.

- In the Server Admin area of the Process Admin Console, click...

-

User Management | Bulk User Attribute Assignment | View by Attribute | Portal Default Page attribute

- Enter the user ID for the user in the User field, and click Search. The current value for the user's Process Portal start page is shown, for example:

-

/tasks/queries?query=name_of_saved_search

- Reset the start page to the Process Portal default start page.

In the Specify a Value and Assign it to the Selected Users section, delete the entry in the Value field, and click Assign.

The next time the user logs on to Process Portal, the My Tasks > Open Tasks view is displayed as the user's start page.

Set the Process Portal tab order for a user group

To modify the Process Portal tab order for users, use Bulk User Attribute Assignment. Users can reorder tabs in Process Portal, and the order is saved when they log out. However, someone with administrative privileges might need to apply a tab order for all users in a group so that all users see the same dashboards and saved searches in the same order. To apply the tab order from one user to all users in a selected user group, copy the attribute value from the user and paste it in as the value for a user group.

- In the Server Admin area of the Process Admin Console, click...

-

User Management | Bulk User Attribute Assignment | View by User

- To copy the tab order from a user, select the user ID, and copy the value for the user's Portal Dashboard Display Order attribute.

For example, if you decide the order of the tabs that you saved when you logged in to Process Portal should be the order that all users in a group see by default, select the user ID and copy the attribute value.

- Click View by Attribute, and then select the attribute...

-

Portal Dashboard Display Order

- Select the name of the user group.

- In the section...

-

Specify a Value and Assign it to the Selected Users

...delete any existing entry, and paste the entry that you copied from the user into the attribute value for the tab order.

- Click Assign.

The next time that users in the user group log in to Process Portal, the tabs are displayed in the new order. Users in the group still can reorder tabs as they like, but the default order is what you specified.

Configure the My Team Performance dashboard (deprecated)

You can configure certain aspects of the My Team Performance dashboard, for example, which tasks are visible to team managers and the maximum number of tasks that is displayed in the task list.

These configuration settings apply only to the deprecated My Team Performance dashboard (known as the My Team Performance scoreboard in releases earlier than BPM V8.0). This dashboard is deprecated in BPM V8.5 and not enabled by default.

Verify the following components are stopped:

- Process Center server

- Process Server

To configure the dashboard settings, update...

-

PROFILE_HOME/config/cells/cell/nodes/node/servers/server/process-server/config/100Custom.xml

Dashboard settings.

- <my-team-performance-task-visibility-for-user-assigned-tasks>

- <my-team-performance-task-visibility-for-related-groups>

- These elements control who can see tasks in the dashboard. The default value for both elements is false.

When the default is set for both elements, the manager can see tasks that are assigned to the manager's groups, regardless of whether the tasks are claimed.

To include tasks that are assigned directly to users in the manager's groups, even if the tasks were not initially assigned to the group, set the value of the element for user-assigned tasks to true.

To include tasks that are assigned to related groups, set the value of the element for related groups to true. A related group is a group that any user in a manager's group also belongs to.

If you change the default value of the element for related groups, the performance of the scoreboard might be affected because more groups must be queried for the list of assigned tasks.

- <my-team-performance-max-task-list-size>

- This element controls the maximum number of tasks that is displayed in the task list. The default value is 1000. You can change this value. However, the larger the value, the longer it takes to populate the list.

- Open the 99Local.xml and 100Custom.xml files in a text editor.

Do not edit 99Local.xml. Change only 100Custom.xml.

- Copy the appropriate section from 99Local.xml to 100Custom.xml.

<properties>
  <server merge="mergeChildren">
    <portal merge="mergeChildren">
      <my-team-performance-task-visibility-for-user-assigned-tasks merge="replace">false</my-team-performance-task-visibility-for-user-assigned-tasks>
      <my-team-performance-task-visibility-for-related-groups merge="replace">false</my-team-performance-task-visibility-for-related-groups>
      <my-team-performance-max-task-list-size merge="replace">1000</my-team-performance-max-task-list-size>
    </portal>
  </server>
</properties>

Save changes.

- Start Process Center server or Process Server.

Administer the Process Portal index

The Process Portal index allows process participants who are working in Process Portal to search business processes for instance data. The index is also used to provide data for the charts in the Process Performance and Team Performance dashboards.

Indexing is enabled by default. Process instances and tasks are indexed according to a time interval that you can specify. To change the indexing behavior, edit 100Custom.xml. If a problem occurs with the index, commands are available for updating and rebuilding it.

Tasks and process instances are indexed in the following situations:

- Tasks

- A task is assigned.

- A task is completed and the business data is updated.

- The due date or at-risk date of a task is changed.

- The priority of a task is changed.

- Process instances

- An instance is started, completed, suspended, resumed, terminated, or restarted.

- An instance failed.

- The due date or at-risk date of an instance is changed.

For example, business data for a process instance that exists when a task and its corresponding process instance activity are completed is indexed with both the task and the instance. Process participants can find the task or instance by searching the instance business data. If a task form consists of several Coaches but only one Coach is complete, the updates from this Coach are not searchable until all the Coaches in the task form are complete.

By default, the previous tasks in a process instance are not re-indexed when later tasks are completed and the business data for the process instance is updated. To make the updated business data searchable from previously completed tasks, change the value of the <task-index-update-completed-tasks> configuration setting to true in 100Custom.xml. If the process instance has many previous tasks, re-indexing these tasks might degrade system performance.

To make particular business data searchable in Process Portal, use Process Designer to make the appropriate process instance variable available in the search and to set the search alias name used to search for the business data.

Update the Process Portal index

If a problem occurs with the Process Portal index, you might need to run a command to rebuild it. You can also update the index for an instance or task, or remove an instance or task from the index.

Index administration

In a network deployment environment, all cluster members on the same node share the index by default. Run the commands for updating the index on the deployment manager. To specify where the command runs, include the -host host_name parameter of the node and the -port SOAP_port parameter of an application cluster member. Repeat the command for each node in the cluster. The default values for these parameters are -host localhost -port 8880.

Rebuilding the index

If there are problems with the index or searches in Process Portal, you might need to rebuild the index. To rebuild the index...

- processIndexFullReIndex.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port

The command deletes the existing index and creates a new index. While the index is being built, the search facility in Process Portal is unavailable.

For compatibility with previous releases of Process Portal, the taskIndexFullReIndex command is still available. However, this command produces the same result as the processIndexFullReIndex command.
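Because the index commands must be repeated for each node in the cluster, it can help to generate the command lines from a list of nodes. The following sketch only builds the argument lists and does not run anything; reindex_commands and the host names are hypothetical, while the script name and the -user, -password, -host, and -port parameters are the ones described above.

```python
def reindex_commands(user, password, nodes, script="processIndexFullReIndex.sh"):
    """Build one argument list per (host, SOAP port) pair."""
    commands = []
    for host, soap_port in nodes:
        commands.append([
            script,
            "-user", user,
            "-password", password,
            "-host", host,
            "-port", str(soap_port),
        ])
    return commands


# Placeholder node list; 8880 is the default SOAP port mentioned above.
planned = reindex_commands(
    "DeAdmin_user", "DeAdmin_password",
    [("node1.example.com", 8880), ("node2.example.com", 8880)],
)
```

Each list could then be passed to subprocess.run on the deployment manager. Keeping the commands as argument lists avoids shell quoting problems with passwords that contain special characters.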

Freeing up space in the index by removing deleted tasks and instances

When tasks and instances are deleted from the database, they are also automatically deleted from the index. You can, however, manually delete tasks and instances from the index that were previously deleted from the database.

To remove the deleted tasks and instances from the index:

- processIndexRemoveDeleted.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port

For compatibility with previous releases of Process Portal, the taskIndexRemoveDeletedTasks command is still available. However, this command produces the same result as the processIndexRemoveDeleted command.

Update the index for a specific instance or task

If you do not change the default configuration settings in 100Custom.xml, the index is updated every 5 seconds. However, you can also trigger an update to the index for a specific instance or task, for example, if you doubt the correctness of the search index record for that instance or task.

To update the index...

- For instances

- processIndexUpdateInstance.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port instanceID

- For tasks

- taskIndexUpdate.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port taskID

The index is updated for the specified instance or task, regardless of its state. For example, if the specified task is in the completed state and the value for the <task-index-update-completed-tasks> element in 100Custom.xml is set to false, the index is still updated for the task.

Delete a specific instance or task

To delete a specific instance or task from the index:

- For instances

- processIndexDeleteInstance.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port instanceID

- For tasks

- taskIndexDeleteTask.sh -user DeAdmin_user -password DeAdmin_password -host host_name -port SOAP_port taskID

Configure the Process Portal index

You can change where the index is stored by modifying an environment variable. To change the index behavior, such as the length of the update interval or including completed tasks in the index, edit 100Custom.xml.

The index allows process participants in IBM Process Portal to search for tasks or process instances that contain particular metadata or instance data. The index is also used for historic data in the Process Performance and Team Performance dashboards.

Set the location of the index

The location of the index is determined by the value of the BPM_SEARCH_TASK_INDEX environment variable. This variable has cell scope on a stand-alone server and cluster scope on a cluster.

- On a cluster

The default location is...

-

$BPM_SEARCH_TASK_INDEX_ROOT/cluster

So that an index does not have to be maintained for each cluster member, one index can be shared across nodes. The index can be maintained in only one cluster member at a time, which is enforced by a locking strategy on the index. To set up the index, use a shared network storage solution for your index and change the value of the cell scoped BPM_SEARCH_TASK_INDEX_ROOT variable to point to the common location.

For a cluster member to have a separate index, you can change the value of the cell scoped BPM_SEARCH_TASK_INDEX_ROOT variable to point to a different location.

To locate the environment variables, in the administrative console click...

-

Environment | WebSphere Variables

- On a stand-alone server

The default location of the task index is...

-

BPM_SEARCH_TASK_INDEX_ROOT/WAS_SERVER_NAME

If the BPM_SEARCH_TASK_INDEX variable is not set, the location defaults to...

-

USER_INSTALL_ROOT/searchIndex/task/WAS_SERVER_NAME

which results in one index per server.

To change the index location, in the administrative console click...

-

Environment | WebSphere Variables

If the BPM_SEARCH_TASK_INDEX variable does not exist, define it with cell scope then set the value to the new location.

Change the index behavior

The index configuration includes the following default settings:

- Indexing is enabled.

- The index is updated every 5 seconds.

- For tasks, the index is updated only for open tasks; it is not updated for completed tasks.

To change the index behavior, perform the following actions:

- Edit 100Custom.xml for the appropriate server:

-

<process_center_profile>/config/cells/<Cell>/nodes/<node>/servers/<server>/process-server/config/system/100Custom.xml

- Add or edit the following code snippet as required:

<search-index>
  <task-index-enabled>true</task-index-enabled>
  <task-index-update-interval>5</task-index-update-interval>
  <task-index-update-completed-tasks>false</task-index-update-completed-tasks>
  <task-index-store-fields>false</task-index-store-fields>
  <task-index-work-manager>wm/default</task-index-work-manager>
  <task-index-include-system-tasks>true</task-index-include-system-tasks>
  <process-index-instance-completion-best-effort>false</process-index-instance-completion-best-effort>
</search-index>

- In the <search-index> section, modify the appropriate task index tags for the configuration settings to change.

XML tag: Configuration setting description

- <task-index-enabled>

Whether indexing is enabled. Default is true. If the index does not exist, it is created. To turn off indexing, change the value to false. If the index does not exist, it is not created. If indexing is turned off, the search field in the Process Portal user interface is hidden.

- <task-index-update-interval>

Time between index updates in seconds. The specified interval determines when the state of the instance variables is captured for tasks that completed since the last index update. Only those tasks that are completed during the current interval are searchable with the latest instance data. The default value for the update interval is 5 seconds. The minimum value is one second.

- <task-index-update-completed-tasks>

Whether the index is updated for completed tasks. Default is false, which means that only information about open tasks is updated. If set to true, instance-level updates, such as business data that is updated later in the process, are propagated to completed tasks.

- <task-index-store-fields>

Whether the actual field values are stored as separate fields. Default is false, which means the actual field values are not stored as separate fields. Change to true for debugging purposes, because it improves readability for people and allows queries by other search tools.

- <task-index-work-manager>

JNDI name of the work manager used by the indexing process to manage the search index. The default value is wm/default, which is the default work manager for WebSphere Application Server. To improve the performance of the index creation, you can create a dedicated work manager with a greater number of available threads in the administrative console. You can then use this tag to switch to the new work manager.

- <task-index-include-system-tasks>

Whether system tasks are indexed. To enable system tasks to be displayed in Gantt charts in Process Portal, ensure the value of this tag is set to true. If the value of this tag is set to false, system tasks are not displayed in Gantt charts.

- <process-index-instance-completion-best-effort>

Whether completion dates are created when instances that are migrated from previous versions of BPM are indexed. The default setting is false. If set to true, the last completion date of the associated tasks is used for the instance completion date. If no associated tasks exist, the last modified time stamp of the instance is used as the completion date.

Save changes.

- Restart the server to activate the changes.
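The documented constraints on the <search-index> settings, such as the one-second minimum for the update interval, can be checked before the file is edited. The following is a minimal sketch; build_search_index is a hypothetical helper that knows only the tags described above, and it does not decide where the fragment belongs inside 100Custom.xml.

```python
import xml.etree.ElementTree as ET

BOOLEAN_TAGS = {
    "task-index-enabled",
    "task-index-update-completed-tasks",
    "task-index-store-fields",
    "task-index-include-system-tasks",
    "process-index-instance-completion-best-effort",
}


def build_search_index(settings):
    """Validate settings and return a <search-index> fragment as a string."""
    root = ET.Element("search-index")
    for tag, value in settings.items():
        if tag in BOOLEAN_TAGS:
            if not isinstance(value, bool):
                raise ValueError("%s must be True or False" % tag)
            text = "true" if value else "false"
        elif tag == "task-index-update-interval":
            if int(value) < 1:
                raise ValueError("update interval must be at least 1 second")
            text = str(int(value))
        elif tag == "task-index-work-manager":
            text = str(value)  # JNDI name, for example wm/default
        else:
            raise ValueError("unknown search-index tag: %s" % tag)
        ET.SubElement(root, tag).text = text
    return ET.tostring(root, encoding="unicode")


fragment = build_search_index({"task-index-update-interval": 10})
```

Rejecting unknown tags guards against misspelled element names, which would otherwise be written to the file without taking effect.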

Administer Process Portal spaces

Administering spaces involves enabling tracing, working with templates, and removing widgets.

Enable tracing for widgets in Process Portal spaces

Enable trace on the application server instance where Business Space is installed.

- Open the administrative console and click...

-

Resources | Resource Environment | Resource Environment Provider | Mashups_ConfigService | Additional Properties | Custom properties | isDebug

- Change the Value field to true, and then click OK.

- Click traceConfig.

In the Value field, add the components to trace, separated by commas with no spaces in between.

For Business Space

-

com.ibm.mm.iwidget.*,com.ibm.mashups.*,com.ibm.bspace.*

For IBM Business Monitor.

-

com.ibm.wbimonitor.*

Browser performance degradation can occur when too many components are listed.

- Click OK and then save your changes to the master configuration.

- Restart the application server instance.
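The traceConfig value in the steps above must be a comma-separated list with no spaces. A short sketch of building that value from a list of components follows; trace_config_value is a hypothetical helper, and the component names are the ones listed for Business Space.

```python
def trace_config_value(components):
    """Join trace components with commas and no surrounding spaces."""
    cleaned = [component.strip() for component in components if component.strip()]
    for component in cleaned:
        # A comma or space inside a name would corrupt the list.
        if "," in component or " " in component:
            raise ValueError("invalid component name: %r" % component)
    return ",".join(cleaned)


value = trace_config_value([
    "com.ibm.mm.iwidget.*",
    " com.ibm.mashups.*",
    "com.ibm.bspace.*",
])
```

Keeping the list short is still advisable, because tracing too many components can degrade browser performance.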

A debugging console is displayed at the bottom of the page the next time you log in to Process Portal spaces.

When tracing is enabled, Firebug Lite is loaded in addition to the debugging console. Because Firebug Lite opens as a pop-up window, you might receive an error message if your browser is configured to block pop-ups. To resolve this, disable the pop-up blocker for your space URL and then restart the browser.

To save a copy of the trace file, click Save in the debugging console.
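The traceConfig value must be a comma-separated list with no spaces. As a small sketch of that rule, the following stand-alone Python 3 helper assembles the value from a list of component patterns. The function name and the duplicate-removal behavior are assumptions of this example; the component patterns are the ones listed above.

```python
def build_trace_config(components):
    """Join trace component patterns with commas and no spaces in between."""
    cleaned = []
    for component in components:
        pattern = component.strip()
        if not pattern:
            continue  # ignore empty items
        if " " in pattern or "," in pattern:
            raise ValueError("invalid component pattern: %r" % component)
        if pattern not in cleaned:
            cleaned.append(pattern)  # keep first occurrence, drop repeats
    return ",".join(cleaned)


# Component patterns taken from the procedure above.
BUSINESS_SPACE = ["com.ibm.mm.iwidget.*", "com.ibm.mashups.*", "com.ibm.bspace.*"]
BUSINESS_MONITOR = ["com.ibm.wbimonitor.*"]

if __name__ == "__main__":
    print(build_trace_config(BUSINESS_SPACE))
```

Listing only the components you need keeps the value short, which matters because too many components can degrade browser performance.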

Review logs for messages

You can review logs for information or error messages to see what is happening in Process Portal spaces. When an important event or error occurs, an information or error marker is displayed. When you click the marker, the System Message window is opened to display the message for the event or error.

The event or error is also recorded using the logging capabilities of WebSphere Application Server.

You can review these messages in log files in the following locations.

- Check the log files in profile_root/logs/ffdc.

- Check the log files in profile_root/logs/server.
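To find the most recent files in these two locations quickly, a stand-alone Python 3 sketch such as the following can help. It assumes that server in the second path is a placeholder for the server name; the function name and the limit parameter are inventions of this example.

```python
import os


def recent_log_files(profile_root, server, limit=5):
    """Map each log directory to its most recently modified files, newest first."""
    directories = [
        os.path.join(profile_root, "logs", "ffdc"),
        # Assumption: "server" in profile_root/logs/server stands for the server name.
        os.path.join(profile_root, "logs", server),
    ]
    result = {}
    for directory in directories:
        files = []
        if os.path.isdir(directory):
            for name in os.listdir(directory):
                path = os.path.join(directory, name)
                if os.path.isfile(path):
                    files.append(path)
        files.sort(key=os.path.getmtime, reverse=True)
        result[directory] = files[:limit]
    return result
```

A directory that does not exist maps to an empty list, so the helper can be run against a profile before any FFDC files have been written.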

Disable automatic wiring in Process Portal spaces

Widgets in a space communicate with each other using wires. When you add widgets to a page in a space, they are automatically wired to each other in certain situations. If you prefer to determine how widgets interact with one another, you can disable automatic wiring. When you add widgets to a page, they are automatically wired to one another when the following conditions apply:

- Automatic wiring is enabled. This setting is the default wiring configuration.

- The definitions for the two widgets allow them to be automatically wired.

- Event names sent by one widget match event names received by the other widget.

- One of the two widgets is already on the page and the user adds the other widget to the page.
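The conditions above can be expressed as a small model. The following stand-alone Python 3 sketch is only an illustration of the rules as listed, not the product's implementation; the Widget class, its fields, and the widget and event names in the usage note are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Widget:
    name: str
    allows_auto_wiring: bool = True              # from the widget definition
    sends: set = field(default_factory=set)      # event names the widget sends
    receives: set = field(default_factory=set)   # event names the widget receives


def auto_wires(existing, added, auto_wiring_enabled=True):
    """Return (source, target, event) tuples for wires created when `added`
    is placed on a page that already contains `existing`."""
    if not auto_wiring_enabled:
        return []
    if not (existing.allows_auto_wiring and added.allows_auto_wiring):
        return []
    wires = []
    # Check both directions: either widget can be the sender.
    for source, target in ((existing, added), (added, existing)):
        for event in sorted(source.sends & target.receives):
            wires.append((source.name, target.name, event))
    return wires
```

For example, with a hypothetical widget that sends taskSelected already on the page, adding a widget that receives taskSelected yields one wire; with auto_wiring_enabled=False the result is an empty list.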

You can disable this automatic wiring by changing a setting in a configuration file.

- Change the autoWiringDefaultEnabled setting to false in the configuration file.

- For a stand-alone server:

- profile_root\BusinessSpace\node\server\mm.runtime.prof\config\ConfigService.properties

- For a cluster:

- deployment_manager_profile_root\BusinessSpace\cluster\mm.runtime.prof\config\ConfigService.properties


- Run the updatePropertyConfig command in the wsadmin environment of the profile.

On Windows, the value for the propertyFileName parameter must be the full path to the file, and all backslashes must be doubled, for example:

- AdminTask.updatePropertyConfig('[-serverName server -nodeName node -propertyFileName "profile_root\\BusinessSpace\\node\\server\\mm.runtime.prof\\config\\ConfigService.properties" -prefix "Mashups_"]')

- For a stand-alone server:

Jython:

AdminTask.updatePropertyConfig('[-serverName server -nodeName node -propertyFileName "profile_root\BusinessSpace\node\server\mm.runtime.prof\config\ConfigService.properties" -prefix "Mashups_"]')

AdminConfig.save()

Jacl:

$AdminTask updatePropertyConfig {-serverName server -nodeName node -propertyFileName "profile_root\BusinessSpace\node\server\mm.runtime.prof\config\ConfigService.properties" -prefix "Mashups_"}

$AdminConfig save

- For a cluster:

Jython:

AdminTask.updatePropertyConfig('[-clusterName cluster -propertyFileName "deployment_manager_profile_root\BusinessSpace\cluster\mm.runtime.prof\config\ConfigService.properties" -prefix "Mashups_"]')

AdminConfig.save()

Jacl:

$AdminTask updatePropertyConfig {-clusterName cluster -propertyFileName "deployment_manager_profile_root\BusinessSpace\cluster\mm.runtime.prof\config\ConfigService.properties" -prefix "Mashups_"}

$AdminConfig save


- Run $AdminConfig save.
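The file edit and the command line from this procedure can be prepared with a short script. The following stand-alone Python 3 sketch (it is not meant to run inside wsadmin) sets autoWiringDefaultEnabled in the text of a properties file and prints the Jython command line to paste into wsadmin, with backslashes doubled as required on Windows. The helper names are hypothetical, and the sketch assumes that the property is written as a key=value line.

```python
def set_property(text, key, value):
    """Return properties text with `key` set to `value` (appended if missing).
    Assumption: properties are written as key=value lines."""
    lines = text.splitlines()
    replaced = False
    for index, line in enumerate(lines):
        name, separator, _ = line.partition("=")
        if separator and name.strip() == key:
            lines[index] = "%s=%s" % (key, value)
            replaced = True
    if not replaced:
        lines.append("%s=%s" % (key, value))
    return "\n".join(lines) + "\n"


def jython_update_command(property_file, server=None, node=None, cluster=None):
    """Build the wsadmin Jython command line as text, doubling every backslash."""
    doubled = property_file.replace("\\", "\\\\")
    if cluster:
        target = "-clusterName %s" % cluster
    else:
        target = "-serverName %s -nodeName %s" % (server, node)
    return "AdminTask.updatePropertyConfig('[%s -propertyFileName \"%s\" -prefix \"Mashups_\"]')" % (
        target,
        doubled,
    )


if __name__ == "__main__":
    print(set_property("autoWiringDefaultEnabled=true\n", "autoWiringDefaultEnabled", "false"))
    # The path, server, and node names below are made up for the example.
    print(jython_update_command(r"C:\profiles\p1\ConfigService.properties", "server1", "node1"))
```

Generating the command as text keeps the script independent of the wsadmin environment; the printed line still has to be run in wsadmin, followed by saving the configuration.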

Work with templates in Process Portal spaces

If you log in to a space using a superuser ID, you can manage the templates that are available to users.

Create templates in Process Portal spaces

If you have the appropriate role, you can create templates that other users can use to create their spaces.

The ID that you use to log in to a space must belong to the superuser role.

- Create a space and its pages.

- Apply a layout style to each page and add widgets to the pages. Configure the widgets where necessary or appropriate.

- When the space is ready, click Manage Spaces. The Space Manager opens.

- For the space, click Actions > Save as Template.

When a user creates a space based on a template, the list of templates includes the template that you created. The name of the template is the same as the name of the space used to create it.

Update templates for Process Portal spaces

If you have the appropriate role, you can update an existing template.

The ID that you use to log in to a space must belong to the superuser role.

When you update a template, you are creating another version of the template. The original template and the updated version coexist. When you open the Template Manager or when you create a space, you see both versions. However, both versions will have the same name. You can resolve this problem by performing one or more of the following actions before you make your update:

- Delete the original template. To preserve a copy of the old version, export the template first. You can then import the old version when you need it.

- Rename the space used to create the template. For example, if you have a space named Shipping and you rename it to something like Shipping 2.0, when you update the template, there will be a template named Shipping and one named Shipping 2.0.

- Update the description of the space to provide a version and perhaps describe what has changed.

- Update the space that was used to create the template. If you no longer have that space, you can create a space based on the template and update it.

- When the space is ready, click Manage Spaces. The Space Manager opens.

- For the space, click Actions > Save as Template.

Delete templates in Process Portal spaces

If you have the appropriate role, you can delete templates so they are no longer available.

The ID that you use to log in to a space must belong to the superuser role.

To preserve a copy of the template, export it before you delete it. You can then import the backup template when you need it.

- In the banner, click Actions > Manage Templates. The Template Manager opens.

- For the existing template, click Actions > Delete.

- In the confirmation window, click Yes.

Export templates from Process Portal spaces

If you have the appropriate role, you can export templates so they are available for importing at a later time.

The ID that you use to log in to a space must belong to the superuser role.

- In the banner, click Actions > Manage Templates. The Template Manager opens.

- For the existing template, click Actions > Export.

- In the confirmation window, click Yes. The file name for the exported template reflects the name of the template itself.

Import templates into Process Portal spaces

If you have the appropriate role, you can import templates that have been previously exported.

The ID that you use to log in to a space must belong to the superuser role.

When you import a template file that you created in a space in BPM Advanced, version 7, the import process adds it to the list of templates in the Template Manager. If you import a version 6 template file, it is added as a space instead of as a template. To re-create the template, an administrator can use the Actions menu in the Space Manager to save the space as a template.

- In the banner, click Actions > Manage Templates. The Template Manager opens.

- Click Import Template.

- In the window that opens, select the space and import it.

Remove widgets from Process Portal spaces

You can remove widgets from a space by uninstalling them or by disabling them.

Disable widgets in Process Portal spaces

You can disable a widget by unregistering it. An unregistered widget is no longer available to users. Related widgets are grouped into a catalog. To disable a widget, you edit its definition in the catalog XML file, and then update the space. Disabling the widget prevents it from displaying on the widget palette and on pages while maintaining the code.

Disabling the widget instead of uninstalling it allows the product to update the widget with enhancements and fixes, so that it is at the correct level if you enable it again. If the product changes the catalog containing the widget, you might have to disable the widget again.

- Navigate to the profile_root/BusinessSpace/node/server/widgets/installs.timestamp directory and open the catalog file containing the widget definition.

Save a copy of the file as a backup.

- Comment out the catalog entry (from <entry> to </entry>) for the widget you want to disable. Ensure the file is still a valid XML file after the change.

Save the edited catalog file to an empty directory. The name of the file must be catalog_name.xml where name can be any name.

- At a command prompt, change directories to the profile_root/bin or cluster_root/bin directory.

- Enter wsadmin.bat -conntype NONE and then enter the appropriate command:

- To disable a widget in a stand-alone server:

- $AdminTask updateBusinessSpaceWidgets {-nodeName node -serverName server -catalog fullpath}

- To disable a widget in a cluster:

- $AdminTask updateBusinessSpaceWidgets {-clusterName cluster -catalog fullpath}

fullpath is the path to the directory containing the edited catalog file.

For information on updateBusinessSpaceWidgets, see updateBusinessSpaceWidgets command.


- Enter Exit.

- To see the changes in the browser, log out of the space, clear the cache in the browser, and then log in again.
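The step that comments out a catalog entry can be scripted. The following stand-alone Python 3 sketch wraps one entry, from <entry> to </entry>, in an XML comment and then parses the result to confirm that the file is still well-formed. It assumes that entries are identified by an id attribute and are not self-closing; the function name and the element and attribute names in the sample catalog are hypothetical, so check them against your own catalog file before relying on this.

```python
import re
import xml.etree.ElementTree as ET


def comment_out_entry(catalog_xml, entry_id):
    """Return the catalog text with the matching <entry>...</entry> commented out.
    Assumption: the entry is identified by an id attribute."""
    pattern = re.compile(
        r'<entry\b[^>]*\sid="' + re.escape(entry_id) + r'"[^>]*>.*?</entry>',
        re.DOTALL,
    )
    match = pattern.search(catalog_xml)
    if match is None:
        raise ValueError("no entry with id %r found" % entry_id)
    block = match.group(0)
    if "--" in block:
        # "--" is not allowed inside an XML comment.
        raise ValueError("entry contains '--' and cannot be wrapped in a comment")
    edited = (
        catalog_xml[: match.start()]
        + "<!-- " + block + " -->"
        + catalog_xml[match.end():]
    )
    ET.fromstring(edited)  # raises ParseError if the result is not well-formed
    return edited


# Hypothetical catalog used only to demonstrate the helper.
SAMPLE = (
    '<catalog id="demo"><category name="c">'
    '<entry id="w1"><title>One</title></entry>'
    '<entry id="w2"><title>Two</title></entry>'
    "</category></catalog>"
)

if __name__ == "__main__":
    print(comment_out_entry(SAMPLE, "w1"))
```

Working on a copy of the catalog file, as the procedure recommends, keeps the original available if the edited file turns out to be wrong.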

Uninstall custom widgets individually from Process Portal spaces

Use this procedure to uninstall a custom widget that is not the only widget defined in its catalog. You can remove a widget from a space in one of the following ways:

- Disable the widget, which deregisters the widget so that it is no longer available to users but keeps the widget code on the server. Use this method to remove a widget that is provided by BPM Advanced. For information, see Disable widgets in Process Portal spaces.

- Uninstall the custom widget and the catalog that contains it, which completely removes the widget (along with all of the other widgets in the catalog) and the catalog. For information, see Uninstall custom widgets and catalogs.

- Uninstall the custom widget individually, which removes the widget from its catalog but keeps the catalog. Do not choose this method if you are uninstalling the last widget defined in the catalog. Instead, uninstall the widget and its catalog using the procedure in Uninstall custom widgets and catalogs.

When the custom widget is installed, the following actions occur:

- The EAR for the widget is installed in the profile_root/installedApps/node directory.

- The catalog file for the widget is added to, or updated in, the profile_root/BusinessSpace/node/server/mm.runtime.prof/config directory. This action registers the widget. The catalog in your file is also added to the default catalog using an include tag.

- The endpoints used by the widget (if it uses them and needs custom endpoints) are added or updated in the profile_root/BusinessSpace/node/server/mm.runtime.prof/endpoints directory.

- The help files for the widget (if it uses the information center for its help) are added to the profile_root/config/BusinessSpace/help/eclipse/plugins directory.
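When you plan an individual uninstallation, it can help to list the directories that the installation touched. The following stand-alone Python 3 sketch builds the four locations named above from a profile root, node name, and server name. It assumes that node and server in the paths are placeholders for the actual names; the function name and dictionary keys are inventions of this example.

```python
import posixpath


def widget_install_locations(profile_root, node, server):
    """Return the directories that a custom widget installation adds to or updates.
    Assumption: "node" and "server" in the documented paths are placeholders."""
    runtime = posixpath.join(profile_root, "BusinessSpace", node, server, "mm.runtime.prof")
    return {
        "ear": posixpath.join(profile_root, "installedApps", node),
        "catalog": posixpath.join(runtime, "config"),
        "endpoints": posixpath.join(runtime, "endpoints"),
        "help": posixpath.join(
            profile_root, "config", "BusinessSpace", "help", "eclipse", "plugins"
        ),
    }


if __name__ == "__main__":
    # The profile root, node, and server names are made up for the example.
    for kind, path in widget_install_locations("/opt/profiles/p1", "node1", "server1").items():
        print(kind, path)
```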

When you individually uninstall a custom widget, you are deleting the widget files and removing the definition for that widget from its catalog file. You are also updating the endpoints and widget help if you include them in the uninstallation.

- Uninstall the WAR containing the widget.

- Edit the catalog XML (widget registration) file containing the widget and remove its entries. Copy the edited file into a catalog directory.