Plan the cluster network setup

Design a network setup for the Red Hat OpenShift on IBM Cloud cluster that meets the needs of the workloads and environment.

Get started by planning your setup for a VPC or a classic cluster.

With Red Hat OpenShift on IBM Cloud clusters in VPC, you can create your cluster in the next generation of the IBM Cloud platform, in Virtual Private Cloud for Generation 2 compute resources. VPC gives you the security of a private cloud environment with the dynamic scalability of a public cloud.

With Red Hat OpenShift on IBM Cloud classic clusters, you can create your cluster on classic infrastructure. Classic clusters include all of the mature and robust Red Hat OpenShift on IBM Cloud features for compute, networking, and storage.

First time creating a cluster? Try out the tutorial for creating OpenShift clusters first. Then, come back here when you're ready to plan your production-ready clusters.

Understand network basics of VPC clusters

- Worker-to-worker communication: All worker nodes must be able to communicate with each other on the private network through VPC subnets.

- Worker-to-master and user-to-master communication: Your worker nodes and your authorized cluster users can communicate with the Kubernetes master securely over the private network through a private service endpoint, or the public network with TLS through a public service endpoint.

- Worker communication to other services or networks: Allow your worker nodes to securely communicate with other IBM Cloud services, such as IBM Cloud Container Registry, to on-premises networks, to other VPCs, or to classic infrastructure resources.

- External communication to apps that run on worker nodes: Allow public or private requests into the cluster as well as requests out of the cluster to a public endpoint.

Worker-to-worker communication: VPC subnets

When you create a cluster, you specify an existing VPC subnet for each zone. Each worker node that you add in a cluster is deployed with a private IP address from the VPC subnet in that zone. After the worker node is provisioned, the worker node IP address persists after a reboot operation, but the worker node IP address changes after replace and update operations.

Subnets provide a channel for connectivity among the worker nodes within the cluster. Additionally, any system that is connected to any of the private subnets in the same VPC can communicate with workers, because all subnets in one VPC can communicate through private layer 3 routing with a built-in VPC router. If you have multiple clusters that must communicate with each other, you can create the clusters in the same VPC. However, if the clusters do not need to communicate, you can achieve better network segmentation by creating the clusters in separate VPCs. You can also create access control lists (ACLs) for your VPC subnets to mediate traffic on the private network. ACLs consist of inbound and outbound rules that define which ingress and egress traffic is permitted for each VPC subnet.
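The following Python sketch shows how an ordered list of inbound ACL rules can decide whether a request reaches a subnet. It makes three assumptions: rules are evaluated in order, the first matching rule decides, and traffic that matches no rule is denied. Confirm these semantics in the VPC network ACL documentation. The `AclRule` type, the `evaluate_inbound` helper, and all CIDRs are hypothetical and do not mirror the VPC API.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class AclRule:
    """A hypothetical inbound ACL rule; not the VPC API schema."""
    action: str          # "allow" or "deny"
    source: str          # source CIDR that the rule matches
    port: Optional[int]  # destination TCP port, or None for any port


def evaluate_inbound(rules: Sequence[AclRule], src_ip: str, port: int) -> str:
    """Return the action of the first matching rule (assumed first-match semantics)."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_range = addr in ipaddress.ip_network(rule.source)
        port_ok = rule.port is None or rule.port == port
        if in_range and port_ok:
            return rule.action
    # Assumption: traffic that matches no rule is denied.
    return "deny"


# Hypothetical rule set: allow HTTPS from one on-premises range, deny the rest.
rules = [
    AclRule("allow", "192.168.10.0/24", 443),
    AclRule("deny", "0.0.0.0/0", None),
]

print(evaluate_inbound(rules, "192.168.10.7", 443))  # allow
print(evaluate_inbound(rules, "192.168.10.7", 22))   # deny
print(evaluate_inbound(rules, "203.0.113.5", 443))   # deny
```

ACLs are stateless, so a real rule set also needs matching outbound rules for response traffic. The sketch models only the inbound direction.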

To run default OpenShift components such as the web console or OperatorHub, you must attach a public gateway to one or more subnets that the worker nodes are deployed to.

The default IP address range for VPC subnets is 10.0.0.0 – 10.255.255.255. For a list of IP address ranges per VPC zone, see the VPC default address prefixes. If you enable your VPC with classic access, or access to classic infrastructure resources, the default IP ranges per VPC zone are different. For more information, see Classic access VPC default address prefixes.

Need to create the cluster by using custom-range subnets? Check out this guidance on custom address prefixes. If you use custom-range subnets for the worker nodes, you must ensure that your worker node subnets do not overlap with the cluster's pod subnet.

Do not delete the subnets that you attach to the cluster during cluster creation or when you add worker nodes in a zone. If you delete a VPC subnet that the cluster used, any load balancers that use IP addresses from the subnet might experience issues, and you might be unable to create new load balancers.

When you create VPC subnets for the clusters, keep in mind the following features and limitations. For more information about VPC subnets, see Characteristics of subnets in the VPC.

- The default CIDR size of each VPC subnet is /24, which can support up to 253 worker nodes. If you plan to deploy more than 250 worker nodes per zone in one cluster, consider creating a larger subnet.

- After you create a VPC subnet, you cannot resize it or change its IP range.

- Multiple clusters in the same VPC can share subnets.

- VPC subnets are bound to a single zone and cannot span multiple zones or regions.

- After you create a subnet, you cannot move it to a different zone, region, or VPC.

- If you have worker nodes that are attached to an existing subnet in a zone, you cannot change the subnet for that zone in the cluster.

- The 172.16.0.0/16, 172.18.0.0/16, 172.19.0.0/16, and 172.20.0.0/16 ranges are prohibited.
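You cannot resize a VPC subnet after you create it, so a quick check before you create worker subnets can catch range and sizing problems early. The hypothetical `check_worker_subnet` helper below is not part of any IBM Cloud tool. It flags overlaps with the prohibited ranges listed above and compares the subnet's usable addresses with the number of workers you expect. The number of addresses that the platform reserves in each subnet is passed in as a parameter, because the exact value should be confirmed in Characteristics of subnets in the VPC.

```python
import ipaddress
from typing import List

# Ranges that the list above says are prohibited for VPC subnets.
PROHIBITED = [
    ipaddress.ip_network(cidr)
    for cidr in ("172.16.0.0/16", "172.18.0.0/16", "172.19.0.0/16", "172.20.0.0/16")
]


def check_worker_subnet(cidr: str, reserved: int, expected_workers: int) -> List[str]:
    """Return a list of problems with a proposed worker subnet (empty if none found).

    `reserved` is the number of addresses the platform keeps for itself in each
    subnet; its value is an assumption that you should verify.
    """
    net = ipaddress.ip_network(cidr)
    problems = []
    for bad in PROHIBITED:
        if net.overlaps(bad):
            problems.append(f"{net} overlaps prohibited range {bad}")
    usable = net.num_addresses - reserved
    if expected_workers > usable:
        problems.append(
            f"{net} has about {usable} usable addresses, fewer than {expected_workers} workers"
        )
    return problems


# reserved=5 is an assumed value for illustration.
print(check_worker_subnet("10.240.0.0/24", reserved=5, expected_workers=100))  # []
print(check_worker_subnet("172.18.4.0/24", reserved=5, expected_workers=10))
```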

Worker-to-master and user-to-master communication: Service endpoints

To secure communication over public and private service endpoints, Red Hat OpenShift on IBM Cloud automatically sets up an OpenVPN connection between the Kubernetes master and the worker nodes when the cluster is created. Workers securely talk to the master through TLS certificates, and the master talks to workers through the OpenVPN connection. Note that you must enable your account to use service endpoints. To enable service endpoints, run ibmcloud account update --service-endpoint-enable true.

In VPC clusters in Red Hat OpenShift on IBM Cloud, you cannot disable the private service endpoint or set up a cluster with the public service endpoint only.

Public and private service endpoints

- Communication between worker nodes and master is established over the private network through the private service endpoint only.

- By default, all calls to the master that are initiated by authorized cluster users are routed through the public service endpoint. If authorized cluster users are in your VPC network or are connected through a VPC VPN connection, the master is privately accessible through the private service endpoint.

Private service endpoint only

- Communication between worker nodes and master is established over the private network through the private service endpoint.

- To access the master through the private service endpoint, authorized cluster users must either be in your VPC network or be connected through a VPC VPN connection.

Your VPC cluster is created with both a public and a private service endpoint by default. To create a VPC cluster with only a private service endpoint, create the cluster in the CLI and include the --disable-public-service-endpoint flag. If you include this flag, the cluster is created with routers and Ingress controllers that expose your apps on the private network only by default. If you later want to expose apps to a public network, you must manually create public routers and Ingress controllers.
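If you script cluster creation, you can assemble the CLI arguments as a list rather than a shell string. The following Python sketch is a hypothetical helper that appends --disable-public-service-endpoint for a private-only cluster. It also appends the --pod-subnet and --service-subnet flags that this topic describes for avoiding subnet conflicts. The `vpc-gen2` subcommand and the remaining required flags (passed in as `base_args`) are assumptions to check against the CLI reference.

```python
import shlex
from typing import List, Optional, Sequence


def build_vpc_create_command(
    base_args: Sequence[str],
    private_only: bool,
    pod_subnet: Optional[str] = None,
    service_subnet: Optional[str] = None,
) -> List[str]:
    """Assemble an argument list for `ibmcloud oc cluster create vpc-gen2`.

    `base_args` holds the flags every cluster needs (name, zone, VPC, subnet,
    flavor, and so on); the caller supplies them and this sketch does not check them.
    """
    cmd = ["ibmcloud", "oc", "cluster", "create", "vpc-gen2", *base_args]
    if private_only:
        # Create the cluster with the private service endpoint only.
        cmd.append("--disable-public-service-endpoint")
    if pod_subnet:
        # Custom pod CIDR, for example to avoid conflicts with on-premises networks.
        cmd += ["--pod-subnet", pod_subnet]
    if service_subnet:
        cmd += ["--service-subnet", service_subnet]
    return cmd


# Hypothetical values; a real create command needs more flags than --name.
cmd = build_vpc_create_command(["--name", "my-cluster"], private_only=True, pod_subnet="172.17.0.0/18")
print(shlex.join(cmd))
```

You can pass the list directly to `subprocess.run(cmd, check=True)`, which avoids shell quoting problems.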

Worker communication to other services or networks

Communication with other IBM Cloud services over the private or public network

Your worker nodes can automatically and securely communicate with other IBM Cloud services that support private service endpoints, such as IBM Cloud Container Registry, over the private network. If an IBM Cloud service does not support private service endpoints, worker nodes can securely communicate with the services over the public network through the subnet's public gateway.

Note that if you use access control lists (ACLs) for the VPC subnets, you must create inbound or outbound rules to allow your worker nodes to communicate with these services.

Communication with resources in on-premises data centers

To connect the cluster with your on-premises data center, you can use the IBM Cloud Virtual Private Cloud VPN or IBM Cloud™ Direct Link.

- To get started with the Virtual Private Cloud VPN, see Configure an on-prem VPN gateway and Create a VPN gateway in your VPC, and create the connection between the VPC VPN gateway and the local VPN gateway. If you have a multizone cluster, you must create a VPC gateway on a subnet in each zone where you have worker nodes.

- To get started with Direct Link, see Ordering IBM Cloud Direct Link Dedicated. In step 8, you can create a network connection to your VPC to be attached to the Direct Link gateway.

If you plan to connect the cluster to on-premises networks, check out the following helpful features:

- You might have subnet conflicts with the IBM-provided default 172.30.0.0/16 range for pods and 172.21.0.0/16 range for services. You can avoid subnet conflicts when you create a cluster from the CLI by specifying a custom subnet CIDR for pods in the --pod-subnet flag and a custom subnet CIDR for services in the --service-subnet flag.

- If your VPN solution preserves the source IP addresses of requests, you can create custom static routes to ensure that your worker nodes can route responses from the cluster back to your on-premises network.
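Before you choose values for --pod-subnet and --service-subnet, it can help to list which cluster ranges collide with your on-premises networks. The Python sketch below uses the standard `ipaddress` module and starts from the default pod and service ranges named above. The `find_conflicts` helper, the worker subnet, and the on-premises CIDRs are hypothetical examples.

```python
import ipaddress
from typing import Dict, Iterable, List, Tuple

# IBM-provided default ranges named in the text above.
DEFAULT_POD_SUBNET = "172.30.0.0/16"
DEFAULT_SERVICE_SUBNET = "172.21.0.0/16"


def find_conflicts(cluster_ranges: Dict[str, str], onprem: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (cluster range name, on-premises CIDR) pairs that overlap."""
    onprem_nets = [ipaddress.ip_network(c) for c in onprem]
    conflicts = []
    for name, cidr in cluster_ranges.items():
        net = ipaddress.ip_network(cidr)
        for other in onprem_nets:
            if net.overlaps(other):
                conflicts.append((name, str(other)))
    return conflicts


cluster = {
    "pods": DEFAULT_POD_SUBNET,
    "services": DEFAULT_SERVICE_SUBNET,
    "workers-zone1": "10.240.0.0/24",  # hypothetical worker subnet
}
onprem = ["172.30.64.0/20", "192.168.0.0/16"]  # hypothetical on-premises networks
print(find_conflicts(cluster, onprem))  # [('pods', '172.30.64.0/20')]
```

In this example only the default pod range collides, so a custom --pod-subnet value would be enough.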

Communication with resources in other VPCs

To connect an entire VPC to another VPC in your account, you can use the IBM Cloud VPC VPN or IBM Cloud Transit Gateway.

- To get started with the IBM Cloud VPC VPN, follow the steps in Connecting two VPCs using VPN to create a VPC gateway on a subnet in each VPC and create a VPN connection between the two VPC gateways. Note that if you use access control lists (ACLs) for the VPC subnets, you must create inbound or outbound rules to allow your worker nodes to communicate with the subnets in other VPCs.

- To get started with IBM Cloud Transit Gateway, see the Transit Gateway documentation. Transit Gateway instances can be configured to route between VPCs that are in the same region (local routing) or VPCs that are in different regions (global routing).

Communication with IBM Cloud classic resources

To connect the cluster to resources in your IBM Cloud classic infrastructure, you can set up a VPC with classic access or use IBM Cloud Transit Gateway.

- To get started with a VPC with classic access, see Set up access to classic infrastructure. Note that you must enable classic access when you create the VPC; you cannot convert an existing VPC to use classic access. Additionally, you can set up classic infrastructure access for only one VPC per region.

- To get started with IBM Cloud Transit Gateway, see the Transit Gateway documentation. With Transit Gateway, you can connect multiple VPCs to classic infrastructure, for example to manage access from your VPCs in multiple regions to resources in your IBM Cloud classic infrastructure.

External communication to apps that run on worker nodes

Private traffic to cluster apps

When you deploy an app in the cluster, you might want to make the app accessible only to users and services that are on the same private network as the cluster. Private load balancing is ideal for making your app available to requests from outside the cluster without exposing the app to the general public. You can also use private load balancing to test access, request routing, and other configurations for your app before you later expose the app to the public with public network services.

To allow private network traffic requests from outside the cluster to your apps, you can use private Kubernetes networking services, such as LoadBalancer services. For example, when you create a Kubernetes LoadBalancer service in the cluster, a load balancer for VPC is automatically created in your VPC outside of the cluster. The VPC load balancer is multizonal and routes requests for the app through the private NodePorts that are automatically opened on the worker nodes. You can then modify the default VPC security group for the worker nodes to allow inbound network traffic requests from specified sources.

For more information, see Plan private external load balancing.
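For illustration, the following Python sketch builds a Kubernetes LoadBalancer Service manifest that asks for a private VPC load balancer and prints it as JSON. `oc apply -f -` can read this output from standard input. The `service.kubernetes.io/ibm-load-balancer-cloud-provider-ip-type` annotation is taken from IBM's VPC load balancer documentation; confirm the name there before use. The Service name, app label, and ports are hypothetical.

```python
import json


def private_lb_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a LoadBalancer Service that requests a private VPC load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # Requests a private rather than public load balancer (verify the annotation name).
                "service.kubernetes.io/ibm-load-balancer-cloud-provider-ip-type": "private",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},  # pods that receive the traffic
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


manifest = private_lb_service("myapp-private-lb", "myapp", 80, 8080)
print(json.dumps(manifest, indent=2))  # for example: python make_lb.py | oc apply -f -
```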

Public traffic to cluster apps

To make your apps accessible from the public internet, you can use public networking services. Even though your worker nodes are connected to private VPC subnets only, the VPC load balancer that is created for public networking services can route public requests to your app on the private network by providing the app a public URL. When an app is publicly exposed, anyone that has the public URL can send a request to the app.

You can use public Kubernetes networking services, such as LoadBalancer services. For example, when you create a Kubernetes LoadBalancer service in the cluster, a load balancer for VPC is automatically created in your VPC outside of the cluster. The VPC load balancer is multizonal and routes requests for the app through the private NodePorts that are automatically opened on your worker nodes. You can then modify the default security group for the cluster to allow inbound network traffic requests from specified sources to your worker nodes.

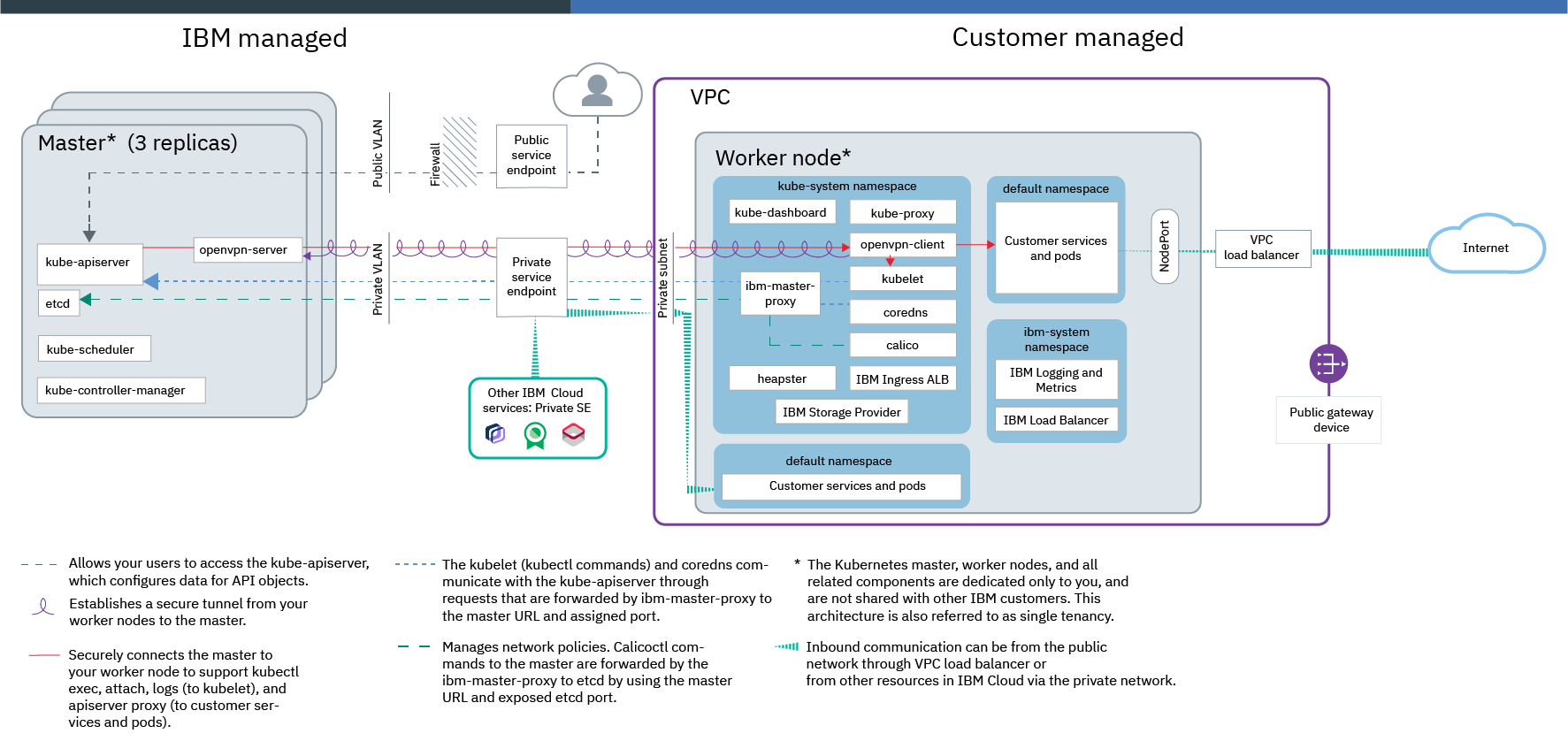

Example scenarios for VPC cluster network setups

Scenario: Run internet-facing app workloads in a VPC cluster

Worker-to-worker communication

To achieve this setup, you create VPC subnets in each zone where you want to deploy worker nodes. To run default OpenShift components such as the web console or OperatorHub, public gateways are required for these subnets. Then, you create a VPC cluster that uses these VPC subnets.

Worker-to-master and user-to-master communication

You can choose to allow worker-to-master and user-to-master communication over the public and private networks, or over the private network only.

- Public and private service endpoints: Communication between worker nodes and master is established over the private network through the private service endpoint. By default, all calls to the master that are initiated by authorized cluster users are routed through the public service endpoint.

- Private service endpoint only: Communication to master from both worker nodes and cluster users is established over the private network through the private service endpoint. Cluster users must either be in your VPC network or connect through a VPC VPN connection.

Worker communication to other services or networks

If the app workload requires other IBM Cloud services, your worker nodes can automatically, securely communicate with IBM Cloud services that support private service endpoints over the private VPC network.

External communication to apps that run on worker nodes

After you test the app, you can expose it to the internet by creating a public Kubernetes LoadBalancer service or by using the default public Ingress application load balancers (ALBs). When you use one of these services, a VPC load balancer is automatically created in your VPC outside of the cluster and routes traffic to the app. You can improve the security of the cluster and control public network traffic to your apps by modifying the default VPC security group for the cluster. Security groups consist of rules that define which inbound traffic is permitted for the worker nodes.
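Unlike the ordered ACL rules described earlier, security group rules only allow traffic. The following Python sketch models that behavior: a request passes if any rule matches and is dropped otherwise, so rule order does not matter. The `SgRule` type, the `inbound_allowed` helper, and the source network are hypothetical. The port range is the default Kubernetes NodePort range (30000-32767) that the VPC load balancer forwards to.

```python
import ipaddress
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class SgRule:
    """A hypothetical inbound rule: allow TCP from `source` to ports port_min..port_max."""
    source: str
    port_min: int
    port_max: int


def inbound_allowed(rules: Sequence[SgRule], src_ip: str, port: int) -> bool:
    """Allow-only semantics: any matching rule admits the request; no match drops it."""
    addr = ipaddress.ip_address(src_ip)
    return any(
        addr in ipaddress.ip_network(r.source) and r.port_min <= port <= r.port_max
        for r in rules
    )


# Hypothetical rule: allow the NodePort range from one network only.
rules = [SgRule("198.51.100.0/24", 30000, 32767)]
print(inbound_allowed(rules, "198.51.100.20", 31380))  # True
print(inbound_allowed(rules, "203.0.113.9", 31380))    # False
print(inbound_allowed(rules, "198.51.100.20", 22))     # False
```

Security groups are stateful, so response traffic for an allowed request does not need its own rule. The sketch does not model that part.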

Ready to get started with a cluster for this scenario? After planning your high availability and worker node setups, see Creating VPC Gen 2 compute clusters.

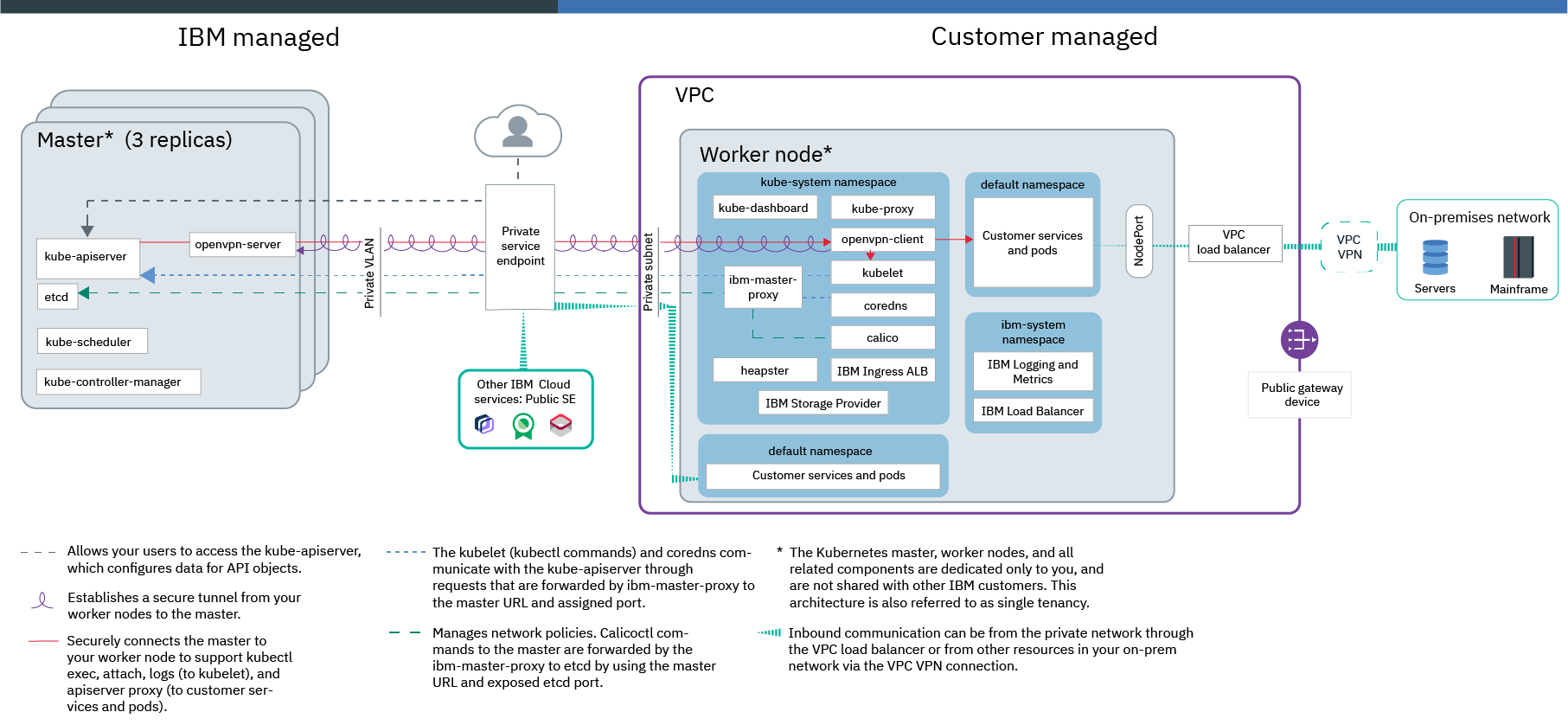

Scenario: Extend your on-premises data center to a VPC cluster

Worker-to-worker communication

To achieve this setup, you create VPC subnets in each zone where you want to deploy worker nodes. To run default OpenShift components such as the web console or OperatorHub, public gateways are required for these subnets. Then, you create a VPC cluster that uses these VPC subnets.

Note that you might have subnet conflicts between the default ranges for worker nodes, pods, and services and the subnets in your on-premises networks. When you create your VPC subnets, you can choose custom address prefixes and then create the cluster by using these subnets. Additionally, you can specify a custom subnet CIDR for pods and services by using the --pod-subnet and --service-subnet flags in the ibmcloud oc cluster create command when you create the cluster.

Worker-to-master and user-to-master communication

When you create the cluster, you enable private service endpoint only to allow worker-to-master and user-to-master communication over the private network. Cluster users must either be in your VPC network or connect through a VPC VPN connection.

Worker communication to other services or networks

To connect the cluster with your on-premises data center, you can set up the VPC VPN service. The IBM Cloud VPC VPN connects your entire VPC to an on-premises data center. If the app workload requires other IBM Cloud services that support private service endpoints, your worker nodes can automatically, securely communicate with these services over the private VPC network.

External communication to apps that run on worker nodes

After you test the app, you can expose it to the private network by creating a private Kubernetes LoadBalancer service or by using the default private Ingress application load balancers (ALBs). When you use one of these services, a VPC load balancer is automatically created in your VPC outside of the cluster and routes traffic to the app. Note that the VPC load balancer exposes the app to the private network only, so any on-premises system with a connection to the VPC subnet can access the app. You can improve the security of the cluster and control traffic to your apps by modifying the default VPC security group for the cluster. Security groups consist of rules that define which inbound traffic is permitted for the worker nodes.

Ready to get started with a cluster for this scenario? After planning your high availability and worker node setups, see Creating VPC Gen 2 compute clusters.

Understand network basics of classic clusters

- Worker-to-worker communication: All worker nodes must be able to communicate with each other on the private network. In many cases, communication must be permitted across multiple private VLANs to allow workers on different VLANs and in different zones to connect with each other.

- Worker-to-master and user-to-master communication: Your worker nodes and your authorized cluster users can communicate with the Kubernetes master securely over the public network with TLS or over the private network through private service endpoints.

- Worker communication to other IBM Cloud services or on-premises networks: Allow your worker nodes to securely communicate with other IBM Cloud services, such as IBM Cloud Container Registry, and to an on-premises network.

- External communication to apps that run on worker nodes: Allow public or private requests into the cluster as well as requests out of the cluster to a public endpoint.

Worker-to-worker communication: classic VLANs and subnets

You cannot create classic Red Hat OpenShift on IBM Cloud clusters that are connected to a private VLAN only. Your worker nodes must be connected to both public and private VLANs.

VLAN connections for worker nodes

All worker nodes must be connected to a private VLAN so that each worker node can send information to and receive information from other worker nodes. The private VLAN provides private subnets that are used to assign private IP addresses to your worker nodes and private app services. Your worker nodes also must be connected to a public VLAN. The public VLAN provides public subnets that are used to assign public IP addresses to your worker nodes and public app services. However, if you need to secure your apps from the public network interface, several options are available, such as using Calico network policies or isolating external network workloads to edge worker nodes.

In standard clusters, the first time that you create a cluster in a zone, a public VLAN and a private VLAN in that zone are automatically provisioned for you in your IBM Cloud infrastructure account. For every subsequent cluster that you create in that zone, you can specify the VLAN pair that you want to use. You can reuse the same public and private VLANs that were created for you because multiple clusters can share VLANs.

For more information about VLANs, subnets, and IP addresses, see Overview of networking in Red Hat OpenShift on IBM Cloud.

Need to create the cluster by using custom subnets? Check out Using existing subnets to create a cluster.

Worker node communication across subnets and VLANs

In several situations, components in the cluster must be permitted to communicate across multiple private VLANs. For example, if you want to create a multizone cluster, if you have multiple VLANs for a cluster, or if you have multiple subnets on the same VLAN, the worker nodes on different subnets in the same VLAN or in different VLANs cannot automatically communicate with each other. You must enable either Virtual Routing and Forwarding (VRF) or VLAN spanning for the IBM Cloud infrastructure account.

- Virtual Routing and Forwarding (VRF): VRF enables all the private VLANs and subnets in your infrastructure account to communicate with each other.

Additionally, VRF is required to allow your workers and master to communicate over the private service endpoint, and to communicate with other IBM Cloud instances that support private service endpoints. To check whether VRF is already enabled, use the ibmcloud account show command. To enable VRF, run ibmcloud account update --service-endpoint-enable true. This command output prompts you to open a support case to enable your account to use VRF and service endpoints. VRF eliminates the VLAN spanning option for the account because all VLANs are able to communicate.

When VRF is enabled, any system that is connected to any of the private VLANs in the same IBM Cloud account can communicate with the cluster worker nodes. You can isolate the cluster from other systems on the private network by applying Calico private network policies.

- VLAN spanning: If you cannot or do not want to enable VRF, such as if you do not need the master to be accessible on the private network or if you use a gateway appliance to access the master over the public VLAN, enable VLAN spanning. For example, if you have an existing gateway appliance and then add a cluster, the new portable subnets that are ordered for the cluster aren't configured on the gateway appliance, but VLAN spanning enables routing between the subnets. To enable VLAN spanning, you need the Network > Manage Network VLAN Spanning infrastructure permission, or you can ask the account owner to enable it. To check whether VLAN spanning is already enabled, use the ibmcloud oc vlan spanning get command. You cannot enable the private service endpoint if you choose to enable VLAN spanning instead of VRF.

Worker-to-master and user-to-master communication: Service endpoints

To secure communication over public and private service endpoints, Red Hat OpenShift on IBM Cloud automatically sets up an OpenVPN connection between the Kubernetes master and the worker node when the cluster is created. Workers securely talk to the master through TLS certificates, and the master talks to workers through the OpenVPN connection.

Public service endpoint only

By default, your worker nodes can automatically connect to the Kubernetes master over the public VLAN through the public service endpoint.

- Communication between worker nodes and master is established securely over the public network through the public service endpoint.

- The master is publicly accessible to authorized cluster users only through the public service endpoint. Your cluster users can securely access your Kubernetes master over the internet to run oc commands, for example.

Version 3.11 clusters only: Public and private service endpoints

To make your master publicly or privately accessible to cluster users, you can enable the public and private service endpoints. You can enable the private service endpoint only by using the CLI to create the cluster. Before you can create a version 3.11 cluster with public and private service endpoints, VRF must be enabled in your IBM Cloud account, and you must enable your account to use service endpoints. To enable VRF and service endpoints, run ibmcloud account update --service-endpoint-enable true.

- Communication between worker nodes and master is established over both the private network through the private service endpoint and the public network through the public service endpoint. Because half of the worker-to-master traffic is routed over the public endpoint and half over the private endpoint, communication between the master and worker nodes is protected from potential outages of the public or private network.

- The master is publicly accessible to authorized cluster users through the public service endpoint. The master is privately accessible through the private service endpoint if authorized cluster users are in your IBM Cloud private network or are connected to the private network through a VPN connection or IBM Cloud Direct Link. Note that you must expose the master endpoint through a private load balancer so that users can access the master through a VPN or IBM Cloud Direct Link connection.

- To create a cluster with the public and private service endpoints enabled, use the ibmcloud oc cluster create classic CLI command and include the --public-service-endpoint and --private-service-endpoint flags.

Worker communication to other IBM Cloud services or on-premises networks

Communication with other IBM Cloud services over the private or public network

Your worker nodes can automatically and securely communicate with other IBM Cloud services that support private service endpoints, such as IBM Cloud Container Registry, over your IBM Cloud infrastructure private network. If an IBM Cloud service does not support private service endpoints, your worker nodes must be connected to a public VLAN so that they can securely communicate with the services over the public network.

If you use Calico policies or a gateway appliance to control the public or private networks of your worker nodes, you must allow access to the public IP addresses of the services that support public service endpoints, and optionally to the private IP addresses of the services that support private service endpoints.

- Allow access to services' public IP addresses in Calico policies

- Allow access to the private IP addresses of services that support private service endpoints in Calico policies

- Allow access to services' public IP addresses and to the private IP addresses of services that support private service endpoints in a gateway appliance firewall

IBM Cloud Direct Link for communication over the private network with resources in on-premises data centers

To connect the cluster with your on-premises data center, such as with IBM Cloud Private, you can set up IBM Cloud Direct Link. With IBM Cloud Direct Link, you create a direct, private connection between your remote network environments and Red Hat OpenShift on IBM Cloud without routing over the public internet.

strongSwan IPSec VPN connection for communication over the public network with resources in on-premises data centers

Set up a strongSwan IPSec VPN service directly in the cluster. The strongSwan IPSec VPN service provides a secure end-to-end communication channel over the internet that is based on the industry-standard Internet Protocol Security (IPSec) protocol suite. To set up a secure connection between the cluster and an on-premises network, configure and deploy the strongSwan IPSec VPN service directly in a pod in the cluster.

If you plan to use a gateway appliance, set up an IPSec VPN endpoint on a gateway appliance, such as a Virtual Router Appliance (Vyatta). Then, configure the strongSwan IPSec VPN service in the cluster to use the VPN endpoint on your gateway. If you do not want to use strongSwan, you can set up VPN connectivity directly with the VRA.

If you plan to connect the cluster to on-premises networks, check out the following helpful features:

- You might have subnet conflicts with the IBM-provided default 172.30.0.0/16 range for pods and 172.21.0.0/16 range for services. You can avoid subnet conflicts when you create a cluster from the CLI by specifying a custom subnet CIDR for pods in the --pod-subnet flag and a custom subnet CIDR for services in the --service-subnet flag.

- If your VPN solution preserves the source IP addresses of requests, you can create custom static routes to ensure that your worker nodes can route responses from the cluster back to your on-premises network.

External communication to apps that run on worker nodes

Private traffic to cluster apps

When you deploy an app in the cluster, you might want to make the app accessible only to users and services that are on the same private network as the cluster. Private load balancing is ideal for making your app available to requests from outside the cluster without exposing the app to the general public. You can also use private load balancing to test access, request routing, and other configurations for your app before you later expose the app to the public with public network services. To allow private traffic requests from outside the cluster to your apps, you can create private Kubernetes networking services, such as private NodePorts, NLBs, and Ingress ALBs. You can then use Calico pre-DNAT policies to block traffic to public NodePorts of private networking services. For more information, see Plan private external load balancing.

Public traffic to cluster apps

To make your apps externally accessible from the public internet, you can create public NodePorts, network load balancers (NLBs), and Ingress application load balancers (ALBs). Public networking services connect to the public network interface by providing the app with a public IP address and, depending on the service, a public URL. When an app is publicly exposed, anyone that has the public service IP address or the URL that you set up for the app can send a request to the app. You can then use Calico pre-DNAT policies to control traffic to public networking services, such as allowing traffic from only certain source IP addresses or CIDRs and blocking all other traffic. For more information, see Plan public external load balancing.
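As a sketch of the allowlist pattern described above, the following Python code builds a Calico GlobalNetworkPolicy and prints it as JSON, which calicoctl can read. The policy admits only the listed source CIDRs to a set of ports and then denies all other TCP traffic to those ports. In Calico, `preDNAT` policies must also set `applyOnForward` and can contain only ingress rules. Several values here are assumptions: the `ibm.role == 'worker_public'` selector for public worker interfaces, the policy name, the `order` value, the CIDR, and the port.

```python
import json
from typing import Sequence


def prednat_allowlist_policy(name: str, allowed_cidrs: Sequence[str], ports: Sequence[int]) -> dict:
    """Build a Calico GlobalNetworkPolicy that lets only `allowed_cidrs` reach `ports`
    on public worker interfaces and denies all other TCP traffic to those ports."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "GlobalNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # Assumed selector for the host endpoints of public worker interfaces.
            "selector": "ibm.role == 'worker_public'",
            "order": 800,            # hypothetical priority; lower orders are evaluated first
            "preDNAT": True,         # match before DNAT rewrites the NodePort/NLB destination
            "applyOnForward": True,  # Calico requires this whenever preDNAT is true
            "types": ["Ingress"],    # pre-DNAT policies may contain ingress rules only
            "ingress": [
                {
                    "action": "Allow",
                    "protocol": "TCP",
                    "source": {"nets": list(allowed_cidrs)},
                    "destination": {"ports": list(ports)},
                },
                # Rules are evaluated in order, so all other sources fall through to Deny.
                {"action": "Deny", "protocol": "TCP", "destination": {"ports": list(ports)}},
            ],
        },
    }


policy = prednat_allowlist_policy("allowlist-public-nlb", ["198.51.100.0/24"], [30080])
print(json.dumps(policy, indent=2))
```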

Edge worker nodes can improve the security of the cluster by allowing fewer worker nodes that are connected to public VLANs to be accessed externally and by isolating the networking workload. When you label worker nodes as edge nodes, NLB and ALB pods are deployed to only those specified worker nodes. Router pods remain deployed to the non-edge worker nodes. To also prevent other workloads from running on edge nodes, you can taint the edge nodes. Then, you can deploy both public and private NLBs and ALBs to edge nodes.
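The combination of an edge label and a taint determines where pods can land. The following Python sketch simplifies the Kubernetes rules. A pod that selects the edge label and tolerates the taint can run on edge nodes. An ordinary app pod is kept off them. The `dedicated=edge` label and taint are assumptions based on common IBM edge-node examples. IBM's actual placement of NLB and ALB pods is reduced here to a plain nodeSelector, and toleration matching is reduced to exact key, value, and effect equality.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Taint = Tuple[str, str, str]  # (key, value, effect)


@dataclass
class Node:
    name: str
    labels: Dict[str, str]
    taints: List[Taint] = field(default_factory=list)


@dataclass
class Pod:
    name: str
    node_selector: Dict[str, str] = field(default_factory=dict)
    tolerations: List[Taint] = field(default_factory=list)


def can_schedule(pod: Pod, node: Node) -> bool:
    """Simplified check: the node must carry every nodeSelector label, and the pod
    must tolerate every NoSchedule or NoExecute taint on the node."""
    if any(node.labels.get(k) != v for k, v in pod.node_selector.items()):
        return False
    return all(
        taint in pod.tolerations
        for taint in node.taints
        if taint[2] in ("NoSchedule", "NoExecute")
    )


# Hypothetical edge setup; the dedicated=edge label and taint are assumptions.
edge = Node("edge-1", {"dedicated": "edge"}, [("dedicated", "edge", "NoExecute")])
worker = Node("worker-1", {})
alb = Pod("alb", node_selector={"dedicated": "edge"}, tolerations=[("dedicated", "edge", "NoExecute")])
app = Pod("app")

print(can_schedule(alb, edge), can_schedule(app, edge))      # True False
print(can_schedule(alb, worker), can_schedule(app, worker))  # False True
```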

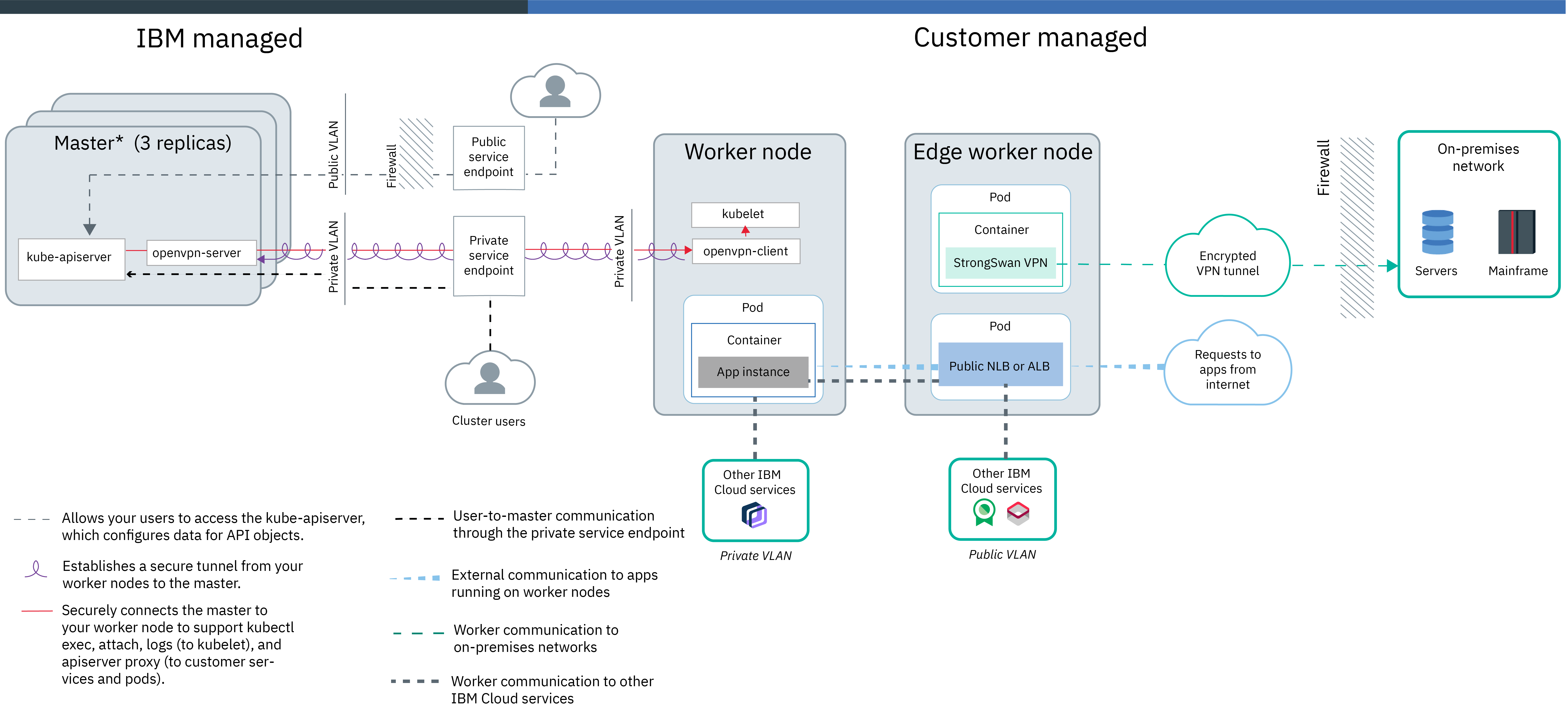

Example scenarios for classic cluster network setups

Scenario: Run internet-facing app workloads in a classic cluster

Worker-to-worker communication

To achieve this setup, you create a cluster by connecting worker nodes to public and private VLANs.

If you create the cluster with both public and private VLANs, you cannot later remove all public VLANs from that cluster. Removing all public VLANs from a cluster causes several cluster components to stop working. Instead, create a new worker pool that is connected to a private VLAN only.

Worker-to-master and user-to-master communication

We can choose to allow worker-to-master and user-to-master communication over the public and private networks, or over the public network only.

- Public and private service endpoints: Your account must have VRF enabled and must be enabled to use service endpoints. Communication between worker nodes and the master is established over both the private network, through the private service endpoint, and the public network, through the public service endpoint. The master is publicly accessible to authorized cluster users through the public service endpoint.

- Public service endpoint: If you don’t want to or cannot enable VRF for the account, your worker nodes and authorized cluster users can automatically connect to the Kubernetes master over the public network through the public service endpoint.
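The two endpoint options reduce to a simple decision. This hypothetical Python helper encodes the communication paths described in the two options above; the function and key names are illustrative only and do not correspond to any IBM Cloud CLI command or API.

```python
def master_endpoints(vrf_enabled: bool, service_endpoints_enabled: bool) -> dict:
    """Map each communication path to the service endpoints it uses
    (hypothetical helper, simplified model of the two options)."""
    if vrf_enabled and service_endpoints_enabled:
        # Workers reach the master over both networks; users use the public endpoint.
        return {
            "worker_to_master": ("private", "public"),
            "user_to_master": ("public",),
        }
    # Without VRF and service endpoints, all traffic uses the public endpoint.
    return {
        "worker_to_master": ("public",),
        "user_to_master": ("public",),
    }


print(master_endpoints(vrf_enabled=True, service_endpoints_enabled=True))
print(master_endpoints(vrf_enabled=False, service_endpoints_enabled=False))
```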

Worker communication to other services or networks

Your worker nodes can automatically and securely communicate with other IBM Cloud services that support private service endpoints over your IBM Cloud infrastructure private network. If an IBM Cloud service does not support private service endpoints, worker nodes can securely communicate with that service over the public network. We can lock down the public or private interfaces of worker nodes by using Calico network policies for public network or private network isolation. In these Calico isolation policies, we might need to allow access to the public and private IP addresses of the services that we want to use.

If your worker nodes need to access services in private networks outside of your IBM Cloud account, we can configure and deploy the strongSwan IPSec VPN service in the cluster or use IBM Cloud Direct Link to connect to these networks.

External communication to apps that run on worker nodes

To expose an app in the cluster to the internet, we can create a public network load balancer (NLB) or Ingress application load balancer (ALB) service. We can improve the security of the cluster by creating a pool of worker nodes that are labeled as edge nodes. The pods for public network services are deployed to the edge nodes so that external traffic workloads are isolated to only a few workers in the cluster. We can further control public traffic to the network services that expose our apps by creating Calico pre-DNAT policies, such as allowlist and blocklist policies.
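A blocklist inverts the allowlist logic: only the listed sources are denied, and all other sources pass. This Python sketch models that decision only; it is not Calico policy syntax, and the blocked ranges are hypothetical RFC 5737 documentation addresses.

```python
import ipaddress
from typing import Iterable

# Hypothetical blocklist of source ranges (documentation addresses only).
BLOCKED_SOURCES = ["192.0.2.0/24", "198.51.100.66/32"]


def is_blocked(source_ip: str, blocked_cidrs: Iterable[str] = BLOCKED_SOURCES) -> bool:
    """Blocklist semantics: deny only sources inside a blocked CIDR;
    every other source is allowed through to the NLB or ALB."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in blocked_cidrs)


for ip in ["192.0.2.9", "198.51.100.66", "203.0.113.5"]:
    print(ip, "deny" if is_blocked(ip) else "allow")
```

An allowlist is the safer default when the set of legitimate clients is known; a blocklist fits when the app is meant to be broadly public and only specific sources must be kept out.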

Ready to get started with a cluster for this scenario? After planning your high availability and worker node setups, see Creating clusters.

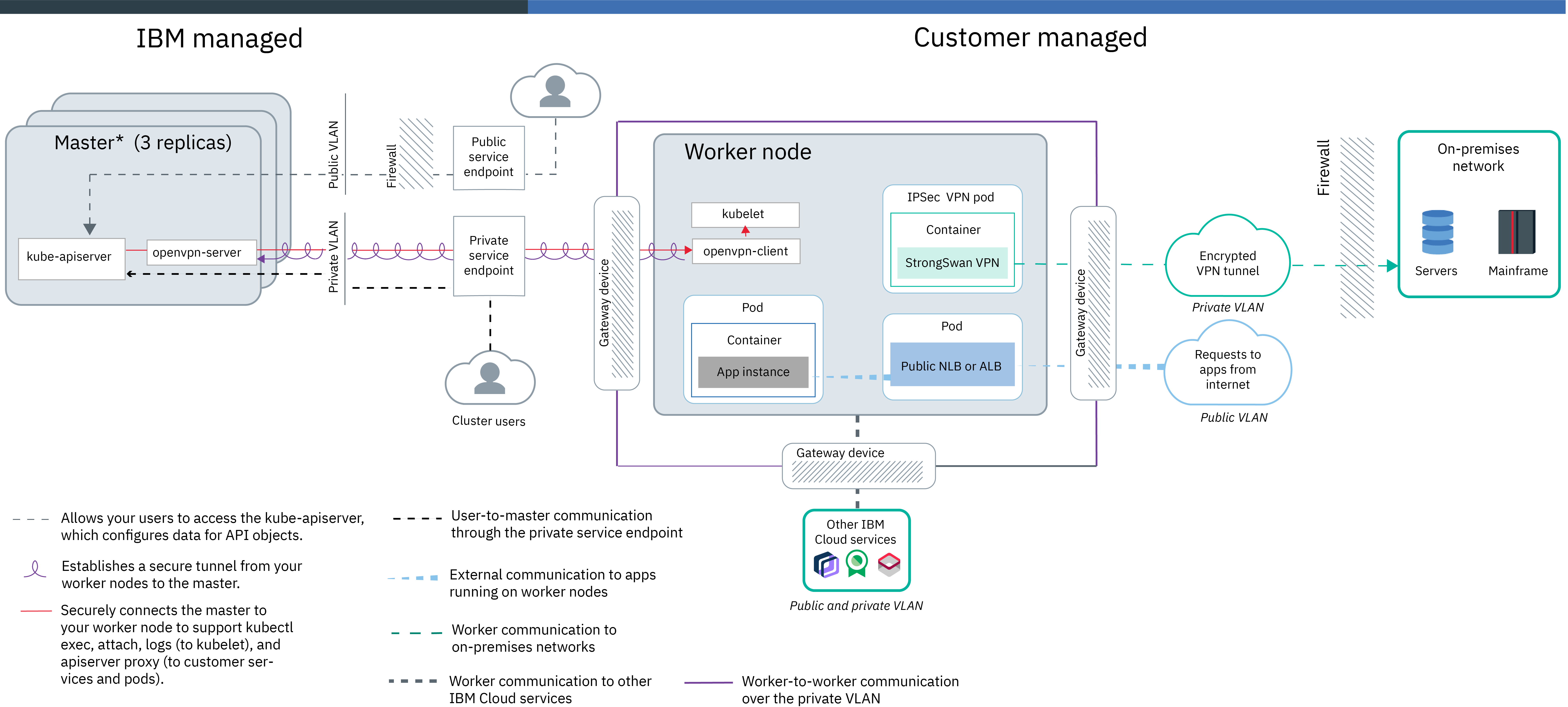

Scenario: Extend your on-premises data center to a classic cluster and add limited public access

To achieve this cluster setup, we can create a firewall by using a gateway appliance.

Using a gateway appliance

Worker-to-worker communication, worker-to-master and user-to-master communication

Configure a gateway appliance to provide network connectivity between your worker nodes and the master over the public network. For example, we might choose to set up a Virtual Router Appliance or a FortiGate Security Appliance.

You can set up your gateway appliance with custom network policies to provide dedicated network security for the cluster and to detect and remediate network intrusion. When you set up a firewall on the public network, you must open up the required ports and public IP addresses for each region so that the master and the worker nodes can communicate. If you also configure this firewall for the private network, you must also open up the required ports and private IP addresses to allow communication between worker nodes and to let the cluster access infrastructure resources over the private network. You must also enable VLAN spanning for the account so that subnets can route on the same VLAN and across VLANs.
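A gateway firewall is typically configured as an ordered rule list that is evaluated against each connection. The Python sketch below models first-match evaluation with a default deny. The rule fields, port numbers, and address ranges are hypothetical placeholders, not the actual required ports or regional IP addresses, which you must take from the documentation for your region.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str    # "allow" or "deny"
    protocol: str  # "tcp" or "udp"
    port_min: int
    port_max: int
    cidr: str      # remote address range


def evaluate(rules, protocol: str, port: int, remote_ip: str) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied."""
    addr = ipaddress.ip_address(remote_ip)
    for r in rules:
        if (r.protocol == protocol
                and r.port_min <= port <= r.port_max
                and addr in ipaddress.ip_network(r.cidr)):
            return r.action
    return "deny"


# Hypothetical public-network rules; placeholders only.
PUBLIC_RULES = [
    Rule("allow", "tcp", 443, 443, "192.0.2.0/24"),          # placeholder master range
    Rule("allow", "tcp", 30000, 32767, "198.51.100.0/24"),   # placeholder range
]

print(evaluate(PUBLIC_RULES, "tcp", 443, "192.0.2.10"))  # allow
print(evaluate(PUBLIC_RULES, "tcp", 22, "192.0.2.10"))   # deny
```

Because unmatched traffic is denied, a required port or regional range that is missing from the rule list shows up as a blocked connection between the workers and the master.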

Worker communication to other services or networks

To securely connect your worker nodes and apps to an on-premises network or to services outside of IBM Cloud, set up an IPSec VPN endpoint on your gateway appliance, and configure the strongSwan IPSec VPN service in the cluster to use the gateway VPN endpoint. If you do not want to use strongSwan, we can set up VPN connectivity directly with the VRA.

Your worker nodes can securely communicate with other IBM Cloud services and public services outside of IBM Cloud through your gateway appliance. We can configure the firewall to allow access to the public and private IP addresses of only the services that we want to use.

External communication to apps that run on worker nodes

To provide private access to an app in the cluster, we can create a private network load balancer (NLB) or Ingress application load balancer (ALB) to expose the app to the private network only. To provide limited public access to an app in the cluster, we can create a public NLB or ALB to expose the app. Because all traffic goes through your gateway appliance firewall, we can control public and private traffic to the network services that expose our apps by opening up each service's ports and IP addresses in the firewall to permit inbound traffic to these services.

Ready to get started with a cluster for this scenario? After planning your high availability and worker node setups, see Creating clusters.