Configure the WebSphere eXtreme Scale dynamic cache grid

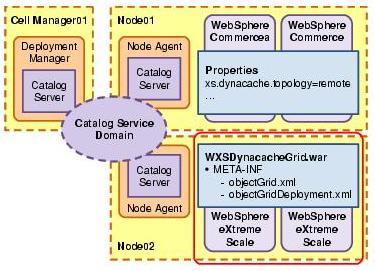

Overview

The next step is to configure the dynamic cache grid.

Create the application server cluster

Create a WebSphere Application Server cluster of two application servers to host the dynamic cache data in an eXtreme Scale grid. We have chosen to create two application server members to keep the description of the grid configuration steps simple. In a production environment, use at least three cluster members to ensure an even spread of data in the grid. The number of application servers you need for the grid is determined by the sizing considerations outlined in 6.5, Sizing guidance.

- To create a new WebSphere Application Server cluster, in the administrative console, click Servers → Clusters → WebSphere Application Server clusters and click New.

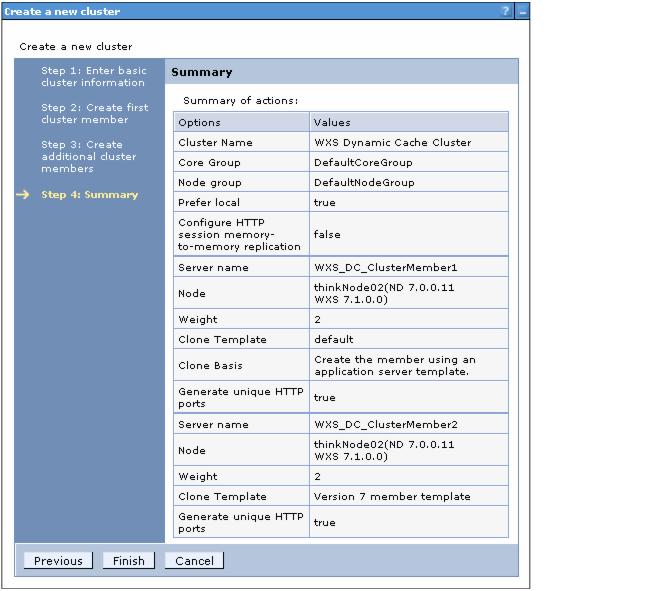

- Enter the cluster name, for example WXS Dynamic Cache Cluster, and click Next.

- Add the first application server cluster member with the appropriate details:

- Enter the member name: WXS_DC_ClusterMember1.

- Select the node that the application server is going to run on.

- Click Next.

- Add additional application server cluster members. In this scenario, we created one additional application server, to have a total of two cluster members.

- Enter the member name: WXS_DC_ClusterMember2.

- Click Add Member.

- Click Next.

- Review the summary (as shown in Figure 6-10) and click Finish.

- Save the changes.

The application servers can be further tuned after the grid has been configured. Areas of configuration include the following parameters:

The Java virtual machine heap size is the primary tuning parameter. Set it to 1500 MB as a starting point and tune it as needed.


Environment optimizations leave more memory for eXtreme Scale. For example, switching on the WebSphere Application Server V7 feature "Start components as needed" (configured on the main application server configuration page in the administrative console) stops the server from starting internal components that it does not use.


Deploy the dynamic cache grid

The next step is to deploy the eXtreme Scale dynamic cache grid configuration. When you deploy the configuration for an eXtreme Scale grid to run on WebSphere Application Server, you need to package the eXtreme Scale configuration files into a web archive (WAR file) to deploy them. WebSphere eXtreme Scale provides a default configuration for the dynamic cache grid, which can be found in:

WAS_HOME/optionalLibraries/ObjectGrid/dynacache/etc

ObjectGrid is the name of the underlying technology in WebSphere eXtreme Scale and was formerly the name of the product, which is why the term appears so often in the configuration. The term "grid" used throughout this book refers to an ObjectGrid.

Two files cover the definition of the grid and the nature of its deployment:

- dynacache-remote-objectgrid.xml

Contains the definition of the grid required by eXtreme Scale to host the dynamic cache data. This file should not need editing.

- dynacache-remote-deployment.xml

Contains deployment information for the grid. Review and edit this file.

To deploy the dynamic cache grid, perform the following steps:

- Create a temporary folder with a META-INF folder under it, and copy the sample XML files into META-INF before editing them. Rename the files as shown in Figure 6-11 (the names are case sensitive). eXtreme Scale recognizes the grid configuration in a WAR file only when the files follow this naming convention.

- Rename dynacache-remote-objectgrid.xml to objectGrid.xml

- Rename dynacache-remote-deployment.xml to objectGridDeployment.xml
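If you prepare this configuration on more than one environment, the copy-and-rename step can be scripted. The following Python sketch is one way to do it and is not an IBM-supplied tool: it copies the two sample files from the dynacache/etc directory into a META-INF folder under a staging directory, using the case-sensitive names listed above. The helper name stage_grid_config and its directory arguments are hypothetical.

```python
import shutil
from pathlib import Path
from typing import List

# Sample descriptor name -> name eXtreme Scale looks for in the WAR (case sensitive).
RENAMES = {
    "dynacache-remote-objectgrid.xml": "objectGrid.xml",
    "dynacache-remote-deployment.xml": "objectGridDeployment.xml",
}


def stage_grid_config(sample_dir: Path, staging_dir: Path) -> List[Path]:
    """Copy the sample descriptors into staging_dir/META-INF under their required names.

    sample_dir is expected to be WAS_HOME/optionalLibraries/ObjectGrid/dynacache/etc;
    staging_dir is any temporary folder (hypothetical helper, not a product command).
    """
    meta_inf = staging_dir / "META-INF"
    meta_inf.mkdir(parents=True, exist_ok=True)
    staged = []
    for source_name, target_name in RENAMES.items():
        source = sample_dir / source_name
        if not source.is_file():
            raise FileNotFoundError(f"sample descriptor not found: {source}")
        target = meta_inf / target_name
        shutil.copyfile(source, target)  # copy, so the product samples stay untouched
        staged.append(target)
    return staged
```

Copying rather than moving keeps the original samples in place, which is convenient if you later create a second grid from the same files.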

- Optional: Review the contents of objectGrid.xml. This is not provided here because it should not need editing. It contains the definition of the eXtreme Scale grid required to host the dynamic cache information.

It is possible to configure eXtreme Scale to run more than one dynamic cache grid, for example to host grids for more than one WebSphere Commerce environment or other application server environment.

To do this:

- Create a separate set of objectGrid.xml and objectGridDeployment.xml files, and rename the objectGrid definition in objectGrid.xml (for example, to DYNACACHE_REMOTE_2).

- Edit objectGridDeployment.xml (shown in Example 6-3) so that objectgridName matches the new grid name, and update the mapSet name to match.

- Add an additional JVM property (as added in step 3) to the WebSphere Commerce JVMs to tell them to use the separate grid:

com.ibm.websphere.xs.dynacache.grid_name

- Deploy the grid settings alongside other grids and register them with the same catalog server(s).
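Because the second grid differs from the first only in its name, its deployment descriptor can be derived from the existing one. The following Python sketch uses the standard library's xml.etree.ElementTree to rewrite objectgridName and every mapSet name in a copy of objectGridDeployment.xml. It assumes the namespace shown in Example 6-3; DYNACACHE_REMOTE_2 is the example name from the step above, and rename_grid is a hypothetical helper. The objectGrid definition in objectGrid.xml still has to be renamed separately, because that file's layout is not reproduced here.

```python
import xml.etree.ElementTree as ET

# Namespaces used by objectGridDeployment.xml (see Example 6-3).
DP_NS = "http://ibm.com/ws/objectgrid/deploymentPolicy"
XSI_NS = "http://www.w3.org/2001/XMLSchema-instance"

# Keep the original prefixes when the document is serialized again.
ET.register_namespace("", DP_NS)
ET.register_namespace("xsi", XSI_NS)


def rename_grid(deployment_xml: str, new_name: str) -> str:
    """Return a copy of the deployment policy with the grid and its map sets renamed."""
    root = ET.fromstring(deployment_xml)
    for grid in root.iter(f"{{{DP_NS}}}objectgridDeployment"):
        grid.set("objectgridName", new_name)
        for map_set in grid.iter(f"{{{DP_NS}}}mapSet"):
            map_set.set("name", new_name)
    # The <map ref="IBM_DC_PARTITIONED_.*"/> template entries are left unchanged.
    return ET.tostring(root, encoding="unicode")
```

For example, rename_grid(Path("META-INF/objectGridDeployment.xml").read_text(encoding="utf-8"), "DYNACACHE_REMOTE_2") returns the new descriptor text, which you would save in the second grid's own META-INF folder. ET.tostring does not write an XML declaration, so add one back if your tooling expects it.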

- Review the contents of objectGridDeployment.xml as shown in Example 6-3.

- numInitialContainers=2

This value must be changed to equal the number of application servers in the eXtreme Scale grid cluster, two in our example. This prevents the grid from starting until all application servers in the grid have started, so that eXtreme Scale can balance the partitions evenly. Without this setting, partitions are skewed toward the first application server to start, because eXtreme Scale does not move or rebalance partitions unless strictly necessary.

- numberOfPartitions=47

The default number of partitions is a good starting place. This number can be changed in line with sizing and capacity planning considerations (as discussed in more detail in 6.5, Sizing guidance).

- maxAsyncReplicas=1

WebSphere eXtreme Scale provides two modes of replication: synchronous and asynchronous. For the quality of service that the dynamic cache service requires, asynchronous replication is sufficient, and it performs better than synchronous replication.

Set maxAsyncReplicas to the maximum number of asynchronous replicas you want. The default of 1 is probably sufficient and means that each partition has one replica in addition to its primary.

Zero is a viable option if you do not want to replicate the cache partitions. In this case, any cached data held on a stopped or failed JVM is lost and must be recreated. That might be acceptable for your cache availability requirements, and it saves memory because no data is duplicated.

- developmentMode=false

This setting should not need changing but is described here for convenience. If you test the grid on a single node, eXtreme Scale by default does not start replicas, because it places replicas on a different node from their primaries for availability. For testing purposes, you can set this to true to observe the impact of replication in a single-node environment.
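The interaction between numberOfPartitions, maxAsyncReplicas, and the number of cluster members comes down to simple arithmetic: each partition has one primary shard plus its replicas, and a replica is never placed on the same cluster member as its primary. The following Python sketch computes the total number of shards and how evenly they can be spread, assuming one container per cluster member and an ideal balanced placement (the situation numInitialContainers is meant to make possible). It ignores zones and the developmentMode node rule, and the function names are illustrative only.

```python
from typing import Dict


def placed_replicas(max_async_replicas: int, containers: int) -> int:
    """Replicas per partition that can actually be placed.

    A replica never shares a container with its primary, so at most
    containers - 1 replicas of each partition fit in the grid.
    """
    return min(max_async_replicas, max(containers - 1, 0))


def shard_spread(partitions: int, max_async_replicas: int, containers: int) -> Dict[str, int]:
    """Total shards and the fewest/most any one container holds, assuming an even spread."""
    replicas = placed_replicas(max_async_replicas, containers)
    total = partitions * (1 + replicas)  # one primary plus its replicas per partition
    base, extra = divmod(total, containers)
    return {
        "total_shards": total,
        "min_per_container": base,
        "max_per_container": base + (1 if extra else 0),
        "copies_of_each_entry": 1 + replicas,  # drives memory use per cached entry
    }


# The configuration used in this chapter: 47 partitions, 1 async replica, 2 members.
print(shard_spread(47, 1, 2))
# {'total_shards': 94, 'min_per_container': 47, 'max_per_container': 47, 'copies_of_each_entry': 2}
```

With three cluster members the same settings give 94 shards split 31 or 32 per member. With maxAsyncReplicas=0 the grid holds 47 shards and a single copy of each cache entry, which is the memory saving described above.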

- Create a web archive file (WAR) to deploy the eXtreme Scale configuration.

From the temporary directory, create a WAR file by running the jar command found in Example 6-4. If the jar command is not found, then provide a full path to the executable found in WAS_HOME\java\bin.

jar -cvf WXSDynacacheGrid.war *

added manifest

ignoring entry META-INF/

adding: META-INF/objectGridDeployment.xml(in = 668) (out= 354)(deflated 47%)

adding: META-INF/objectGrid.xml(in = 2132) (out= 722)(deflated 66%)
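A WAR file is a ZIP archive, so when the JDK jar command is not convenient (for example, in a build script on a machine without WebSphere installed), an equivalent archive can be produced with Python's standard zipfile module. This is a sketch, not the documented procedure: it assumes the staging layout described above (META-INF/objectGrid.xml and META-INF/objectGridDeployment.xml), writes a minimal manifest similar to the one jar adds, and build_grid_war is a hypothetical helper name.

```python
import zipfile
from pathlib import Path
from typing import List

# Descriptors eXtreme Scale looks for inside the WAR (names are case sensitive).
REQUIRED = ("META-INF/objectGrid.xml", "META-INF/objectGridDeployment.xml")
MANIFEST_NAME = "META-INF/MANIFEST.MF"
MANIFEST = "Manifest-Version: 1.0\r\n\r\n"  # minimal manifest, as jar -c adds one


def build_grid_war(staging_dir: Path, war_path: Path) -> List[str]:
    """Zip the files under staging_dir into war_path and return the entry names."""
    missing = [name for name in REQUIRED if not (staging_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"missing grid descriptors: {missing}")

    entries = [MANIFEST_NAME]
    with zipfile.ZipFile(war_path, "w", compression=zipfile.ZIP_DEFLATED) as war:
        war.writestr(MANIFEST_NAME, MANIFEST)
        for path in sorted(staging_dir.rglob("*")):
            name = path.relative_to(staging_dir).as_posix()
            # Skip directories, a hand-made manifest, and the WAR itself if it
            # is being written inside the staging directory.
            if not path.is_file() or name == MANIFEST_NAME or path.resolve() == war_path.resolve():
                continue
            war.write(path, name)
            entries.append(name)
    return entries
```

Checking for both descriptors before zipping catches a misnamed file early; the deployed WAR would otherwise start without any grid configuration being recognized.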

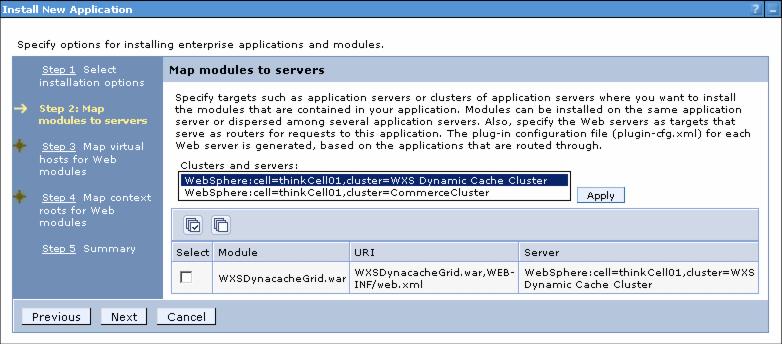

- Deploy the WAR file to the WXS Dynamic Cache Cluster created earlier, in the same way as any other application:

- From the administrative console, select Applications → New Application → New Enterprise Application.

- Click Browse for the Local file system option and select the WXSDynacacheGrid.war file that you just created. Click Next.

- On the Preparing for the application installation page, keep the default of Fast Path and click Next.

- In the next five steps of the deployment, leave the defaults except for Step 2: Map modules to servers. There, select the WXSDynacacheGrid.war module, select the WXS Dynamic Cache Cluster, and click Apply, as shown in Figure 6-12.

- On the summary page, click Finish and save the changes.

- To start the eXtreme Scale grid, in the administrative console, click Servers → Clusters → WebSphere Application Server clusters → WXS Dynamic Cache Cluster and click Start.

- When the cluster has started, check the cluster members' SystemOut.log files for messages that eXtreme Scale has started successfully.

When the application server itself is starting, we will see a message similar to that shown in Example 6-5. This shows that the product is correctly installed and being used by the cluster, and provides the exact product version.

[10/22/10 13:19:41:468 CDT] 00000000 RuntimeInfo I CWOBJ0903I: The internal version of WebSphere eXtreme Scale is v4.0 (7.1.0.0) WXS7.1.0.XS [a1025.57350].

Second, toward the end of the application server startup, you can see the web module being loaded. Example 6-6 shows that the eXtreme Scale configuration has been detected in the web module and that the ObjectGrid server has started. The log also references the template map configuration from the objectGrid.xml file. A template is the name prefix for the dynamic cache maps that will be created.

Example 6-6 Log messages to demonstrate grid start

[10/22/10 13:20:04:515 CDT] 00000019 ApplicationMg A WSVR0200I: Starting application: WXSDynacacheGrid_war

[10/22/10 13:20:07:781 CDT] 00000019 ServerImpl I CWOBJ1001I: ObjectGrid Server thinkCell01\thinkNode02\WXS_DC_ClusterMember1 is ready to process requests.

[10/22/10 13:20:08:140 CDT] 00000019 XmlObjectGrid I CWOBJ4701I: Template map info_server.* is configured in ObjectGrid IBM_SYSTEM_xsastats.server.

[10/22/10 13:20:08:250 CDT] 00000019 XmlObjectGrid I CWOBJ4701I: Template map IBM_DC_PARTITIONED_.* is configured in ObjectGrid DYNACACHE_REMOTE.

Finally, eXtreme Scale will wait until both application servers are fully started before actually starting the grid itself.

This is why the numInitialContainers value in the objectGridDeployment.xml file matters: it was set to 2 so that eXtreme Scale waits for both servers to start.

You will see a message as each partition and its replica start, as illustrated in Example 6-7. A primary partition must always be on a different cluster member from its replica.

Example 6-7 Log messages to show the eXtreme Scale partitions and replicas activating

...

[10/29/10 4:24:19:896 CDT] 00000021 AsynchronousR I CWOBJ1511I: DYNACACHE_REMOTE:DYNACACHE_REMOTE:10 (asynchronous replica) is open for business.

[10/29/10 4:24:19:896 CDT] 00000021 AsynchronousR I CWOBJ1543I: The asynchronous replica DYNACACHE_REMOTE:DYNACACHE_REMOTE:10 started or continued replicating from the primary. Replicating for maps: [xsastats_DYNACACHE_REMOTE_grid, xsastats_DYNACACHE_REMOTE_map]

[10/29/10 4:24:22:802 CDT] 00000038 ReplicatedPar I CWOBJ1511I: DYNACACHE_REMOTE:DYNACACHE_REMOTE:15 (primary) is open for business.

...
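Checking each cluster member's log by eye works for two servers but becomes tedious as the grid grows. The following Python sketch scans SystemOut.log text for the two messages shown in Examples 6-6 and 6-7: CWOBJ1001I (the ObjectGrid server is ready) and CWOBJ1511I (a primary or asynchronous replica shard is open for business). The regular expressions match only the message formats reproduced here; other message variants, and where each member's SystemOut.log lives, are outside what this sketch assumes. summarize_log is a hypothetical helper.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Patterns based on the CWOBJ1001I and CWOBJ1511I lines in Examples 6-6 and 6-7.
READY = re.compile(r"CWOBJ1001I: ObjectGrid Server (\S+) is ready to process requests")
OPEN = re.compile(
    r"CWOBJ1511I: ([^:\s]+):([^:\s]+):(\d+) \((primary|asynchronous replica)\) is open for business"
)


def summarize_log(lines: Iterable[str], grid_name: str = "DYNACACHE_REMOTE") -> Dict[str, object]:
    """Report which servers are ready and how many shards of grid_name opened."""
    servers = []
    shard_types = Counter()
    partitions = set()
    for line in lines:
        # finditer also copes with several messages run together on one line.
        for match in READY.finditer(line):
            servers.append(match.group(1))
        for match in OPEN.finditer(line):
            grid, map_set, partition, kind = match.groups()
            if grid != grid_name:
                continue  # ignore system grids such as IBM_SYSTEM_xsastats.server
            shard_types[kind] += 1
            partitions.add((map_set, int(partition)))
    return {
        "servers_ready": servers,
        "shards_open": dict(shard_types),
        "distinct_partitions": len(partitions),
    }
```

Run against every member's log (for example, summarize_log(open(path, encoding="utf-8", errors="replace")) for each member), the combined results for this chapter's configuration would be expected to cover all 47 partitions, each with a primary and an asynchronous replica, although restarts and shard moves can add extra messages.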

- Verify the grid deployment and grid access.

WebSphere eXtreme Scale provides a command line tool, called xsadmin, for querying the eXtreme Scale runtime configuration. Here are simple commands for validating and reviewing the configuration.

At the time of writing, there is an issue running xsadmin from an installation of eXtreme Scale V7.1 on WebSphere Application Server V7.0, where xsadmin does not connect properly to the catalog server. The workaround is to use xsadmin from a separate, stand-alone installation of WebSphere eXtreme Scale.

The problem is resolved in WebSphere eXtreme Scale V7.1.0.1 and WebSphere eXtreme Scale V6.1.0.5. The resolution is also available as a patch at:

http://www-01.ibm.com/support/docview.wss?uid=swg1PM20613
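To tell whether a server's product level already includes the xsadmin fix, the version in the CWOBJ0903I startup message (Example 6-5) can be compared with V7.1.0.1. The Python sketch below does that for the 7.1 line only, assuming the message format shown in Example 6-5. It cannot detect the patch from the link above applied to an earlier level, so treat a positive result as a prompt to check further rather than a definite answer. The function names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Matches e.g. "CWOBJ0903I: The internal version of WebSphere eXtreme Scale is v4.0 (7.1.0.0) ..."
VERSION = re.compile(
    r"CWOBJ0903I: The internal version of WebSphere eXtreme Scale is \S+ \((\d+(?:\.\d+)+)\)"
)
FIXED_IN_7_1 = (7, 1, 0, 1)  # first 7.1 level with the xsadmin fix, per the text above


def xs_version(log_text: str) -> Optional[Tuple[int, ...]]:
    """Extract the product version from the first CWOBJ0903I message, if any."""
    match = VERSION.search(log_text)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def may_need_xsadmin_fix(version: Tuple[int, ...]) -> bool:
    """True for 7.1 levels below 7.1.0.1; other release lines are not judged here."""
    return version[:2] == (7, 1) and version < FIXED_IN_7_1
```

Applied to the message in Example 6-5, xs_version returns (7, 1, 0, 0), so that server is at a level that predates the fix unless the patch has been applied.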

From the deployment manager profile bin directory, run the xsadmin commands with a -dmgr flag, telling xsadmin that it is connecting to a catalog server in a deployment manager and to use the default deployment manager ports to connect. If the deployment manager is running on a non-default bootstrap port, then specify that with the -p option.

Example 6-8 shows a list of all running grids and maps. You will see that it correctly lists our DYNACACHE_REMOTE grid.

Example 6-8 Output from xsadmin utility to list grids and maps

This administrative utility is provided as a sample only and is not to be considered a fully supported component of the WebSphere eXtreme Scale product

Connect to Catalog service at localhost:9809

Warning: Detected there is more than one Placement Service MBean

*** Show all 'objectGrid:mapset' names

Grid Name MapSet Name

DYNACACHE_REMOTE DYNACACHE_REMOTE

IBM_SYSTEM_xsastats.server stats

Example 6-9 shows a list of all containers (JVMs) and their partitions. It lists all 47 partitions and their replicas, spread evenly between the two cluster members.

Example 6-9 Output from xsadmin utility to list the containers

C:\<WAS_HOME>\profiles\Dmgr01\bin>xsadmin.bat -dmgr -containers

This administrative utility is provided as a sample only and is not to be considered a fully supported component of the WebSphere eXtreme Scale product

Connect to Catalog service at localhost:9809

Warning: Detected there is more than one Placement Service MBean

*** Show all online containers for grid - DYNACACHE_REMOTE & mapset - DYNACACHE_REMOTE

Host: think.was7.ibm.com

Container: thinkCell01\thinkNode02\WXS_DC_ClusterMember1_C-1, Server:thinkCell01\thinkNode02\WXS_DC_ClusterMember1, Zone:DefaultZone

Partition Shard Type

0 AsynchronousReplica

1 AsynchronousReplica

2 AsynchronousReplica

8 AsynchronousReplica

...

40 Primary

42 Primary

43 Primary

Container: thinkCell01\thinkNode02\WXS_DC_ClusterMember2_C-1, Server:thinkCell01\thinkNode02\WXS_DC_ClusterMember2, Zone:DefaultZone

Partition Shard Type

3 AsynchronousReplica

4 AsynchronousReplica

5 AsynchronousReplica

...

44 Primary

45 Primary

46 Primary

Num containers matching = 2

Total known containers = 2

Total known hosts = 1
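With 47 partitions, confirming the balance in Example 6-9 by eye is error-prone. The following Python sketch counts shard types per container in saved xsadmin -containers output and checks that the number of primaries differs by at most one between containers. It assumes the output lists one "partition shard-type" pair per line under each "Container:" line; that layout is an assumption of this sketch, so adjust the patterns if your xsadmin level formats it differently. Saving the output to a file and the function names are likewise assumptions.

```python
import re
from collections import defaultdict
from typing import Dict

# "Container: <name>, Server:..., Zone:..." starts a new container section.
CONTAINER = re.compile(r"^\s*Container:\s*([^,]+),")
# "<partition> <shard type>", for example "40 Primary" or "3 AsynchronousReplica".
SHARD = re.compile(r"^\s*(\d+)\s+(Primary|AsynchronousReplica)\s*$")


def shard_counts(output: str) -> Dict[str, Dict[str, int]]:
    """Count shard types per container in saved `xsadmin -containers` output."""
    counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    container = None
    for line in output.splitlines():
        header = CONTAINER.match(line)
        if header:
            container = header.group(1).strip()
            continue
        shard = SHARD.match(line)
        if shard and container is not None:
            counts[container][shard.group(2)] += 1
    return {name: dict(types) for name, types in counts.items()}


def primaries_balanced(counts: Dict[str, Dict[str, int]]) -> bool:
    """True if primary counts across containers differ by at most one."""
    primaries = [types.get("Primary", 0) for types in counts.values()]
    return bool(primaries) and max(primaries) - min(primaries) <= 1
```

With 47 partitions on two containers an exact split is impossible, which is why the check allows a difference of one primary.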

Example 6-3 default objectGridDeployment.xml file

<?xml version="1.0" encoding="UTF-8"?>

<deploymentPolicy xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://ibm.com/ws/objectgrid/deploymentPolicy/deploymentPolicy.xsd"

xmlns="http://ibm.com/ws/objectgrid/deploymentPolicy">

<objectgridDeployment objectgridName="DYNACACHE_REMOTE">

<mapSet name="DYNACACHE_REMOTE"

numberOfPartitions="47"

minSyncReplicas="0"

maxSyncReplicas="0"

maxAsyncReplicas="1"

numInitialContainers="1"

developmentMode="false"

replicaReadEnabled="false">

<map ref="IBM_DC_PARTITIONED_.*" />

</mapSet>

</objectgridDeployment>

</deploymentPolicy>
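Before packaging, the settings discussed in the deployment steps can be checked mechanically. This Python sketch uses only xml.etree.ElementTree and the namespace from Example 6-3. It warns when numInitialContainers does not equal the number of cluster members, when developmentMode is not false, or when maxAsyncReplicas asks for more replicas than the other cluster members can hold. check_deployment and its cluster_members argument are hypothetical, and attributes missing from the file are reported rather than assumed to have a product default.

```python
import xml.etree.ElementTree as ET
from typing import List

NS = {"dp": "http://ibm.com/ws/objectgrid/deploymentPolicy"}


def check_deployment(deployment_xml: str, cluster_members: int) -> List[str]:
    """Return human-readable warnings for each mapSet in objectGridDeployment.xml."""
    root = ET.fromstring(deployment_xml)
    warnings = []
    for map_set in root.iterfind(".//dp:mapSet", NS):
        name = map_set.get("name", "?")

        initial = map_set.get("numInitialContainers")
        if initial is None or int(initial) != cluster_members:
            # Placement waits for this many containers, so it should equal the cluster size.
            warnings.append(f"{name}: numInitialContainers={initial}, expected {cluster_members}")

        if map_set.get("developmentMode") != "false":
            warnings.append(f"{name}: developmentMode={map_set.get('developmentMode')}, expected false")

        replicas = map_set.get("maxAsyncReplicas")
        if replicas is None:
            warnings.append(f"{name}: maxAsyncReplicas not set")
        elif int(replicas) > cluster_members - 1:
            # A replica cannot share a cluster member with its primary.
            warnings.append(f"{name}: maxAsyncReplicas={replicas} exceeds {cluster_members - 1}")
    return warnings
```

Run against the default file in Example 6-3 with cluster_members=2, this sketch reports only numInitialContainers=1, the value the deployment steps tell you to change to 2.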

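The key parameters in this file to review and change where appropriate are numInitialContainers, numberOfPartitions, maxAsyncReplicas, and developmentMode, as described in the deployment steps earlier in this section.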

We have now created an application server cluster that is hosting an eXtreme Scale grid that we can use to store dynamic cache data from WebSphere Commerce. We have verified that the grid has been deployed and started successfully.

All of these steps host the eXtreme Scale grid within WebSphere Application Server. It is also possible to run the eXtreme Scale grid outside WebSphere on stand-alone JVMs. A stand-alone grid functions in exactly the same way and uses the same grid configuration. The difference is that a stand-alone deployment does not have the WebSphere management tooling for grid deployment and operational control. For a stand-alone grid, the XML descriptors do not need to be packaged; they can be referenced directly from the command line.
