Intelligent Management: application placement frequently asked questions

Where is the application placement controller running?

To find where the application placement controller is running, we can use the administrative console or scripting. To check the location in the administrative console, click...

Runtime Operations > Component stability > Core components

We can also run the checkPlacementLocation.jacl script to display the server on which the application placement controller is running.

When does the application placement controller start a server?

The application placement controller starts servers for the following reasons:

- To meet the minimum number of application instances defined for the dynamic cluster.

- When a request is routed through the on demand router to a deactivated dynamic cluster.

- When a dynamic cluster could benefit from additional capacity. The autonomic request flow manager sends a signal that indicates how beneficial more capacity would be for a dynamic cluster, and additional instances of the dynamic cluster start.

To see which servers the application placement controller considers to be running, check the messages in the SystemOut.log file.

When does the application placement controller stop a server?

The application placement controller stops a server for the following reasons:

- A memory constraint exists on a node. The application placement controller takes into account the minimum number of instances for the dynamic cluster, the capacity that the dynamic cluster needs, and the processor and memory constraints of the system. If available memory becomes low on a node, the application placement controller attempts to stop instances to prevent the node from swapping.

- The dynamic cluster is configured for application lazy start and proactive idle stop, and no demand exists for the dynamic cluster. If the dynamic cluster has no demand, the application placement controller attempts to stop instances of that cluster to eliminate the resource consumption of an inactive dynamic cluster.

Why did the application placement controller not start a server?

The application placement controller might not start a server for one of the following reasons:

- The configuration did not enable dynamic application placement:

- Verify that the placement controller is enabled. In the administrative console, click...

Operational policies > Autonomic managers > Application placement controller

- Verify that the subject cluster or clusters are dynamic clusters. The application placement controller acts upon dynamic clusters only. In the administrative console, click...

Servers > Dynamic clusters

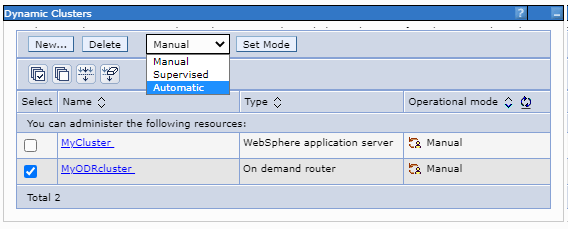

Check that the Operational mode field for each subject cluster is set to Automatic. If it is not, select the dynamic clusters, click Automatic, and then click Set mode.

- Verify that the Minimum time between placement changes value is not set too high. In the administrative console, click...

Operational policies > Autonomic managers > Application placement controller

Set the value in the Minimum time between placement changes field to a suitable value. Acceptable values range from 1 minute to 24 hours.

- The server operation timeout value is set too low:

Sometimes the application placement controller does not start a server because the server operation times out. We can configure the amount of time before a timeout occurs in the administrative console. Click...

Operational policies > Autonomic managers > Application placement controller

Edit the Server operation timeout field. If our cell is large, the system is slow, or the system is under a high workload, set this field to a higher value. The value represents the time allowed for each server to start, but the total timeout scales with the number of servers in the cell. For example, if we have five servers and set the value to 10 minutes, a timeout occurs after 50 minutes.
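To make the arithmetic in the example above explicit, the following Python sketch multiplies the per-server value by the number of servers. The function name is hypothetical, and the sketch assumes that the timeout scales linearly with the server count, as the five-server example describes.

```python
def effective_timeout_minutes(per_server_timeout_minutes: int, server_count: int) -> int:
    """Return the total time before a server operation times out.

    Hypothetical helper: assumes the Server operation timeout value applies
    per server and scales linearly with the number of servers in the cell.
    """
    if per_server_timeout_minutes <= 0 or server_count < 0:
        raise ValueError("timeout must be positive and server count non-negative")
    return per_server_timeout_minutes * server_count


# Five servers with a 10-minute setting time out after 50 minutes.
print(effective_timeout_minutes(10, 5))  # 50
```

Under this assumption, raising the per-server value in a large cell lengthens the total window proportionally, so small increases can have a large effect.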

- Not enough memory available:

- We can diagnose when not enough memory is available by looking at the failed starts in the SystemOut.log file.

- The application placement controller uses the following formulas to calculate the memory consumption of a dynamic cluster member:

- If no other dynamic cluster instances are running (cold start):

Server memory consumption = 1.2*maxHeapSize + 64 MB

- If other dynamic cluster instances are running, the application placement controller memory profiler uses the following formula:

Server memory consumption = 0.667*resident memory size + 0.333*virtual memory size

- Memory profiles are not persisted when the application placement controller restarts.

To debug, we can disable the application placement controller memory profiler by setting the memoryProfile.isDisabled custom property to true.
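The two formulas above can be compared side by side with a short Python sketch. It assumes all sizes are in MB, and it models "other instances are running" as having an observed (resident, virtual) pair from a running member; the function names and that pair are hypothetical, not part of the product.

```python
from typing import Optional, Tuple

# Coefficients taken from the formulas above; units are assumed to be MB.
COLD_START_HEAP_FACTOR = 1.2
COLD_START_OVERHEAD_MB = 64.0
RESIDENT_WEIGHT = 0.667
VIRTUAL_WEIGHT = 0.333


def cold_start_memory_mb(max_heap_size_mb: float) -> float:
    """Estimate used when no other dynamic cluster instances are running."""
    return COLD_START_HEAP_FACTOR * max_heap_size_mb + COLD_START_OVERHEAD_MB


def profiled_memory_mb(resident_mb: float, virtual_mb: float) -> float:
    """Estimate used when the memory profiler can observe a running instance."""
    return RESIDENT_WEIGHT * resident_mb + VIRTUAL_WEIGHT * virtual_mb


def estimate_member_memory_mb(
    max_heap_size_mb: float,
    observed: Optional[Tuple[float, float]] = None,
) -> float:
    """Pick the cold-start formula when no running instance was observed.

    `observed` is a hypothetical (resident_mb, virtual_mb) pair measured on a
    running instance of the same dynamic cluster.
    """
    if observed is None:
        return cold_start_memory_mb(max_heap_size_mb)
    resident_mb, virtual_mb = observed
    return profiled_memory_mb(resident_mb, virtual_mb)


print(round(estimate_member_memory_mb(512), 1))              # 678.4
print(round(estimate_member_memory_mb(512, (300, 600)), 1))  # 399.9
```

With a 512 MB maximum heap, the cold-start estimate (678.4 MB) can be noticeably larger than a profiled estimate, which is one reason a node can appear to lack memory for a first instance.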

View failed start information

Remember: The failed start list is not persisted when the application placement controller restarts or moves between nodes.

We can view failed start information with one of the following options:

- Use the PlacementControllerProcs.jacl script to query failed server operations.

Run the following command:

./wsadmin.sh -profile PlacementControllerProcs.jacl -c "anyFailedServerOperations"

- Use commands in wsadmin.sh to display failed starts.

For example, we might run the following commands:

wsadmin>apc = AdminControl.queryNames('WebSphere:type=PlacementControllerMBean,process=dmgr,*')
wsadmin>print AdminControl.invoke(apc,'anyFailedServerOperations')

When the server becomes available, the failed to start flag is removed. Use the following wsadmin tool command to list the servers that have the failed to start flag enabled:

wsadmin>print AdminControl.invoke(apc,'anyFailedServerOperations')

Sample output:

OpsManTestCell/xdblade09b09/DC1_xdblade09b09

- View the failed starts in the SystemOut.log file.
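If we capture the output of anyFailedServerOperations (for example, by redirecting wsadmin output to a file), a short script can split each entry into its parts. The following sketch runs in a standard Python 3 interpreter, not inside wsadmin. It assumes the cell/node/server format shown in the sample output and that multiple entries are separated by whitespace; the function and type names are hypothetical.

```python
from typing import List, NamedTuple


class FailedServer(NamedTuple):
    cell: str
    node: str
    server: str


def parse_failed_server_operations(output: str) -> List[FailedServer]:
    """Split anyFailedServerOperations output into cell, node, and server names.

    Assumes one cell/node/server path per whitespace-separated token, as in the
    sample output; tokens without exactly three parts are skipped.
    """
    failed = []
    for token in output.split():
        parts = token.split("/")
        if len(parts) == 3:
            failed.append(FailedServer(*parts))
    return failed


sample = "OpsManTestCell/xdblade09b09/DC1_xdblade09b09"
for entry in parse_failed_server_operations(sample):
    print(entry.node, entry.server)  # xdblade09b09 DC1_xdblade09b09
```

Grouping the parsed entries by node can then show whether failed starts cluster on one node, which often points to a memory constraint on that node.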

Why did the application placement controller start more servers than I expected?

More servers can start than expected when network or communication issues prevent the application placement controller from receiving confirmation that a server started. When the application placement controller does not receive confirmation, it might start an additional server.

Why did the application placement controller submit multiple tasks for starting the same server?

This behavior can occur when the application placement controller runs on more than one server. This scenario often occurs in mixed topologies, where a WebSphere Application Server Version 8.5 cell also contains a WebSphere Virtual Enterprise Version 6.1.x node. The application placement controller then runs on both nodes: the WebSphere Application Server Version 8.5 node and the WebSphere Virtual Enterprise Version 6.1.x node. Because these versions use different high availability solutions by default, multiple application placement controllers run at the same time. To correct the issue, run the useBBSON.py script on the deployment manager and restart the cell. The script sets cell custom properties so that the same high availability solution is used throughout the cell and only one application placement controller starts.

How do I know when the application placement controller has completed or will complete an action?

We can check the actions of the application placement controller with runtime tasks. To view the runtime tasks, click...

System administration > Task management > Runtime tasks

The list of runtime tasks includes tasks that the application placement controller is completing, and confirmation that changes were made. Each runtime task has a status of succeeded, failed, or unknown. An unknown status means that no confirmation was received about whether the task succeeded or failed.

How does the application placement controller work with VMware? Which hardware virtualization environments are supported?

For more information about how the application placement controller works with VMware and other hardware virtualization environments, read about virtualization and Intelligent Management and supported server virtualization environments.

How can I start or stop a server without interfering with the application placement controller?

If we start or stop a server while the dynamic cluster is in automatic mode, the application placement controller might override that action. To avoid interfering with the application placement controller, put the dynamic cluster into manual mode before starting or stopping a server.

In a heterogeneous system (mixed hardware or operating systems), how does the application placement controller pick where to start a server?

The membership policy for a dynamic cluster defines the eligible nodes on which servers can start. From this set of nodes, the application placement controller selects a node on which to start a server by considering system constraints such as available processor and memory capacity. The application placement controller does not determine the server placement based on operating systems.

When my dynamic cluster is under load, when does the application placement controller start another server?

The application placement controller works with the autonomic request flow manager (ARFM) and defined service policies to determine when to start servers. Service policies set the performance and priority maximums for applications and guide the autonomic controllers in traffic shaping and capacity provisioning decisions. Service policy goals indirectly influence the actions that are taken by the application placement controller. The application placement controller provisions more servers based on information from the ARFM about how much capacity is required for the number of concurrent requests that are being serviced by the ARFM queues. This number is determined based on how much capacity each request uses when it is serviced and how many concurrent requests ARFM determines is appropriate. The number of concurrent requests is based on application priority, goal, and so on.

The performance goals defined by service policies are not guarantees. Intelligent Management cannot make the application respond faster than its limit. In addition, more capacity is not provisioned if enough capacity is already provisioned to meet the demand, even if the service policy goal is being breached. Intelligent Management can prevent unrealistic service policy goals from introducing instability into the environment.
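The relationship described above can be illustrated with a deliberately simplified model: required capacity is the capacity each request uses multiplied by the number of concurrent requests that ARFM considers appropriate, and servers are added only when that demand exceeds what is already running. This is not the actual ARFM or placement algorithm; the per-server capacity figure, the units, and the function name are assumptions for illustration only.

```python
import math


def additional_servers_needed(
    capacity_per_request: float,
    target_concurrency: int,
    capacity_per_server: float,
    running_servers: int,
) -> int:
    """Return how many more servers this toy model would start.

    Hypothetical model: demand = capacity_per_request * target_concurrency,
    expressed in the same (assumed) units as capacity_per_server.
    """
    required = capacity_per_request * target_concurrency
    servers_for_demand = math.ceil(required / capacity_per_server)
    # No extra capacity when what is already running covers the demand,
    # even if a service policy goal is currently being breached.
    return max(0, servers_for_demand - running_servers)


print(additional_servers_needed(50.0, 40, 1000.0, 1))  # 1
print(additional_servers_needed(50.0, 40, 1000.0, 3))  # 0
```

The second call shows the behavior described above: three running servers already cover the modeled demand, so no server is added regardless of how the service policy goal is performing.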

How does the application placement controller determine the maximum heap size of my server?

We can change the heap size of the server in the dynamic cluster template. See modifying the JVM heap size.

Why are the dynamic cluster members not inheriting properties from the template?

We must save dynamic clusters to the master repository before changing the server template. If dynamic cluster members do not inherit the properties from the template, the server template was probably changed in an unsaved workspace. To fix this issue, delete the dynamic cluster, then recreate it.

Save our changes to the master repository. We can ensure that our changes are saved to the master repository after clicking Finish by clicking Save in the message window. Click Save again in the Save to master configuration window. Click Synchronize changes with nodes.

Why does my dynamic cluster have too few active servers?

If we encounter problems where not enough servers are running in the dynamic cluster, try the following actions:

- When the nodes in the node group are not highly utilized, verify that the service policy is met. At times, the policy might not be defined clearly, so the system meets it, but not in the way that we expect. To check or change a service policy in the administrative console, click...

Operational policies > Service policies > existing policy

Check the goal type, goal value, and importance of the policy, and make any necessary changes.

- When the nodes in the node group are highly utilized, compare the service policy goals of this cluster to the service policy goals of other active clusters. If the traffic that belongs to this cluster has lower importance or looser target service goals than the other clusters, the system is more likely to start fewer servers for this cluster. To check or change a service policy in the administrative console, click...

Operational policies > Service policies > existing policy

- When the node group seems to have some extra capacity, but your service policies are not met, check the configuration settings on the dynamic cluster. There might be too few instances of the dynamic cluster created as a result of the maxInstances policy setting.

In a dynamic cluster environment, why does the application placement controller fail to distribute available servers across nodes?

Dynamic application placement is based on load distribution, service policy, and available resources. When we reduce the maximum number of application instances in a dynamic cluster, the application placement controller stops servers on the nodes with the highest workload until the number of servers is reduced to the new maximum. If all the nodes are equally available, the application placement controller selects the first node in the list and continues with the next node in the list until the maximum number is met.

When reducing the maximum number of application instances in multiple dynamic clusters running on the same nodes, the same process applies: the application placement controller stops servers in each dynamic cluster until the number of servers meets the set maximum for each dynamic cluster. Because all the servers in each dynamic cluster are running on the same nodes, the node selection order for stopping servers is the same for each dynamic cluster.

If at least one node is under load, the application placement controller initiates a more distributed placement solution.
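The stop order described above for a single dynamic cluster can be sketched as follows: nodes are ranked by workload, highest first, equally loaded nodes keep their list order, and servers are stopped until the count reaches the new maximum. This is a hypothetical simplification that assumes one running member per node; the function name, node names, and load values are made up and do not come from the product.

```python
from typing import Dict, List


def nodes_to_stop(node_loads: Dict[str, float], max_instances: int) -> List[str]:
    """Choose the nodes whose cluster member would be stopped.

    Assumes one running member per node, with nodes given in node-list order
    (dicts keep insertion order). Busiest nodes come first; sorted() is stable
    even with reverse=True, so equally loaded nodes keep their list order.
    """
    excess = len(node_loads) - max_instances
    if excess <= 0:
        return []
    ranked = sorted(node_loads, key=lambda node: node_loads[node], reverse=True)
    return ranked[:excess]


print(nodes_to_stop({"nodeA": 0.2, "nodeB": 0.2, "nodeC": 0.2}, 1))  # ['nodeA', 'nodeB']
print(nodes_to_stop({"nodeA": 0.2, "nodeB": 0.9, "nodeC": 0.5}, 2))  # ['nodeB']
```

Because the ranking is identical for every dynamic cluster on the same equally loaded nodes, each cluster stops servers on the same nodes, so the remaining servers of all clusters end up on the same node. Once load differs between nodes, the ranking changes and the result becomes more distributed.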
