Testing

- Create a test project

- Performance testing tips

- Record HTTP tests

- Remove temporary files

- Allow recorded pages to load completely

- Record with IP addresses

- Configure Internet Explorer for recording from a secure web site

- Record an HTTP test

- Split an HTTP test during recording

- Returning a browser to its initial state if recording is interrupted

- Create HTTP tests manually

- Record Adobe Flex content

- Record Microsoft Silverlight applications

- Change HTTP test generation preferences

- Configure the workbench for NTLMv2 authentication

- Record SAP tests

- Record Citrix tests

- Record service tests

- Record socket and TN3270 tests

- Digital certificates overview

- Kerberos overview

- Annotating a test during recording

- Record sensitive session data

- Split a test during recording

- Generating a new test from a recorded session

- Putting test assets under source control

- Organize test assets by type

- Copy test assets with dependencies

- Copy projects

- Edit tests

- Edit HTTP tests

- Redirection support for HTTP tests

- HTTP test editor overview

- Specify the number of allowable URL redirects during test runs

- Cutting and pasting in tests

- Defining performance requirements in tests

- Add an authentication folder

- Verify expected behavior

- Specify the expected page title

- Specify the expected response code

- Specify the expected response size

- Specify the expected content

- Specify the expected content for multiple requests

- Specify error-handling behavior

- How loops affect the state of virtual users

- Split a test

- Split a test page

- Merging test pages

- Disable and enable secondary HTTP requests

- Reusing tests on different hosts: Server connection variables

- Converting tests to use SSL connections

- View a test in the Protocol Data view

- Test Siebel applications

- Prerequisites for Siebel testing

- Differences between Siebel tests and HTTP tests

- Correlating a request value with a built-in Siebel variable

- Edit SAP tests

- SAP test editor overview

- Verify expected behavior

- Add an SAP verification point

- Specify an expected screen title

- Verify response times

- Add elements to an SAP test

- Add an SAP set, SAP get, or SAP call element

- Add an SAP sequence element

- Add a batch input transaction

- Split an SAP test

- View GUI data in the SAP Protocol Data view

- Edit Citrix tests

- Citrix test editor overview

- Citrix synchronization overview

- Verify application behavior

- Enable Citrix window verification points

- Enable Citrix image synchronization verification points

- Specify window verification point criteria

- Measuring response times

- Add elements to a Citrix test

- Edit a mouse action

- View a mouse sequence

- Edit a keyboard action

- Edit a text input

- Synchronize tests with screen areas

- Manually adding an image synchronization

- Add values to an image synchronization

- Use custom code with a Citrix test

- Edit service tests

- Web service test editor overview

- Verify application behavior

- Add equal verification points

- Add contain verification points

- Add Xpath query verification points

- Add attachment verification points

- Add XSD verification points

- Add elements to a service test

- Add a service request

- Update a service response from the service

- Manually adding a response element

- Edit WSDL security profiles

- WSDL security editor overview

- Create security profiles for WSDL files

- Use a security policy

- Implementing a custom security algorithm

- Add WS-Addressing to a security configuration

- Test asynchronous services

- Asynchronous service testing overview

- Create an asynchronous request structure

- Add an asynchronous callback to a service request

- Create a reliable messaging call structure

- Edit socket tests

- Socket API test editor overview

- Add elements to a socket performance test

- Add a socket close

- Add a socket send

- Add a socket receive element

- Verify application behavior

- Verify received content

- Verify received message size

- Verify received data with custom Java code

- Merging socket send and receive elements

- Change multiple socket send and receive elements

- Split a socket test

- Use custom code to specify an end policy

- Use custom code to manipulate data

- Edit Kerberos tests

- Add test elements

- Add a comment

- Add a transaction to a test

- Add conditional logic

- Synchronize users in tests

- Add a loop to a test

- Search within tests

- Locating specific types of test elements

- Searching and replacing text in tests

- Disable portions of a test

- Specify error-handling behavior

- Running test elements in random order

- Rename test assets

- Delete test assets

- Debugging custom code for tests and compound tests

- Provide tests with variable data (datapools)

- Datapool overview

- Create a datapool associated with a test

- Create a datapool in the workspace

- How datapool options affect values that a virtual user retrieves

- Import a CSV file into a datapool

- Enable a test to use a datapool

- Associate a test value with a datapool column

- View datapool candidates when you open a test

- Edit a datapool

- Encrypted datapools overview

- Encrypting datapools

- Remove encryption from datapools

- Change passwords on encrypted datapools

- Import to encrypted datapools

- Export from encrypted datapools

- Use a digital certificate store with a datapool

- Navigating between a datapool and a test

- About test variables

- Declaring and assigning test variables

- Initializing variables from the command line

- Initializing variables from Rational Quality Manager

- Correlating response and request data

- Data correlation overview

- View data correlation

- Data correlation rules overview

- Create data correlation rule sets

- Create a reference using data correlation rules

- Create a substitution with data correlation rules

- Assigning sequential numbers to users

- Example: Linking references to substitutions with rules

- Example: Linking substitutions to references with rules

- Example: Linking substitutions to built-in data sources with rules

- Recorrelating data with rules

- Record tests with data correlation rules

- Generate data correlation rules in the test editor

- Generating rules while you edit

- Saving manual data correlation in a rule set file

- View data correlation rule usage

- Test data sources overview

- Correlating a request value with test data

- Substituting request content with file contents

- View references

- Create a reference or field reference

- Select a reference in a response

- Correlating multiple fields in a test

- Guidelines for adjusting data correlation

- Find data correlation errors

- Disable data correlation

- Recorrelating test data

- Data transformation

- View binary data

- Transforming binary data in tests

- Transforming binary data in specific requests

- Transforming binary data automatically

- Viewing or transforming GWT encoded data

- Transforming GraniteDS or BlazeDS data

- Transforming SAP Web DynPro XML to ASCII

- Create custom data transformations

- Use custom data transformations

- Compound tests

- Emulating workloads while performance testing

- Schedule overview

- Create a schedule

- Set the user load

- Think time overview

- Set think time behavior in schedules

- Limiting think times in schedules

- Limiting think times in tests

- User group overview

- Add a user group

- Adjusting user groups

- Emulating slower network traffic (HTTP)

- Running long duration tests (SAP and Citrix)

- Running a user group at a remote location

- Work with agents

- Checking status of agents

- Configure ports for agents

- Manage logs in deployment directory

- Configure the high resolution timer

- Add a test

- Add must run tests

- Assigning variables

- Defining performance requirements in schedules

- Repeating tests

- Delaying virtual users or actions

- Running tests at a set rate

- Running tests in random order

- Add a transaction to a schedule

- Synchronize users

- Emulating network traffic from multiple hosts

- Configure IP aliases for a remote Windows location

- Configure IP aliases for a remote Linux location

- Configure IP aliases for a remote AIX location

- Enable virtual users to use IP aliases

- Monitoring resource data while running a performance test schedule

- Enable resource monitoring

- Enable resource monitoring on Windows Vista, Windows 7, and Windows Server 2008

- Add sources for resource monitoring data

- Add Apache HTTP Server sources

- Add Apache Tomcat sources

- Add IBM DB2 sources

- Add IBM Tivoli Monitoring sources

- Add IBM WebSphere Performance Monitoring Infrastructure sources

- Add Java Virtual Machine sources

- Add JBoss Application Server sources

- Add Microsoft Windows Performance Monitor sources

- Add Oracle Database sources

- Add Oracle WebLogic Server sources

- Add SAP NetWeaver sources

- Add Simple Network Management Protocol sources

- Add UNIX rstatd sources

- Monitoring response time breakdown

- Enable response time breakdown collection

- Enable response time breakdown collection on Windows Vista, Windows 7, and Windows Server 2008

- Set logging levels

- Enable response time breakdown collection for specific page elements

- Filtering POJO packages, methods, and classes

- Set log and statistic levels

- Set the statistics displayed during a run

- Set the data that the test log collects

- Set the problem determination level

- Running schedules with performance testing

- Running a schedule or test

- Adjusting delays in HTTP tests

- Configure HTTP client delays

- Overriding the HTTP connection timeout value

- Playing back HTTP tests faster than the recorded rate

- Set a launch configuration

- Running a configured schedule

- Configure multiple host names for a location

- Automating tests

- Running a test from the command line

- Export report counters automatically

- Controlling caches size

- Increasing memory allocation

- Controlling execution of reports from Eclipse viewer

- Change the number of virtual users during a run

- Releasing virtual users from synchronization points

- Change the stage duration during a run

- Change the problem determination level during a run

- Interrupting a run

- Controlling execution of reports from web browser

- Change number of virtual users during a run

- Change the stage duration during a run

- Releasing virtual users from synchronization points

- Change log level during a run

- Stopping test runs

- Debugging HTTP tests

- HTTP debugging overview

- Watching a virtual user during a test run

- Watching a virtual user during a schedule run

- Replaying a virtual user after a run completes

- View the playback summary of a virtual user

- Inspecting HTTP test logs in the Protocol Data view

- Manage HTTP information in the Protocol Data view

- Debugging Citrix tests

- Enable the Citrix monitoring panel

- Debugging tests with the Citrix monitoring panel

- Set Citrix breakpoints

- Evaluating results

- RPT logs

- View reports after a run

- Access reports remotely

- Switching between reports

- Evaluating results in the Eclipse viewer

- Comparing results within and among runs

- Comparing schedule stages

- View stage results in real time

- Generating functional test reports

- Customize reports

- Set custom and conditional report colors

- Change the default appearance of reports

- Customize the appearance of report graphs

- Change the report displayed during a run

- Change information in a report

- Filtering results

- Evaluating results for a specific time range

- Create a custom report

- Correcting time offset

- View resource monitoring data

- Add resource counters to reports

- Navigating to resource monitoring in reports

- Filtering resource counters

- Import resource monitoring data

- View response time breakdown

- Choose page elements to view

- Interpreting response time breakdown statistics

- View page element responses

- View page response time contributions

- View different types of statistics

- Navigating to resource monitoring data from response time breakdown

- Import response time breakdown data

- Imposing constraints while importing response time breakdown data

- Export data from runs

- Create executive summaries

- Export reports to HTML format

- Export results to a CSV file

- Export report counters to a CSV file

- Export report metadata to a .view file

- Evaluating results in the web browser

- Comparing run results

- Comparing schedule stages

- Customize reports

- Set custom and conditional report colors

- Manage counters in a graph

- Filtering report results

- Evaluating results for a specific time range

- Create custom web reports

- Export test results

- Create executive summaries of reports

- Export report counters to a CSV file

- Export reports to HTML format

- Export charts as images

- Test log overview

- View test logs

- View errors while running tests

- Export test logs

- Export event log

- Export event console output

- View adjustments to page response times

- Disable adjustments to page response times

- Simulating services with stubs

- Send service requests with the generic service client

- Generic service client overview

- Create transport protocol configurations

- Create an HTTP transport configuration

- Configure the workbench for NTLMv2 authentication

- Create a JMS transport configuration

- Create a WebSphere MQ transport configuration

- Create Microsoft .NET transport configurations

- Create SSL configurations

- Edit WSDL security profiles

- WSDL security editor overview

- Create security profiles for WSDL files

- Use a security policy

- Implementing a custom security algorithm

- Add WS-Addressing to a security configuration

- Send service requests with WSDL files

- Send HTTP endpoint requests

- Send a JMS endpoint request

- Send a WebSphere MQ endpoint request

- Test all operations in a WSDL file

- View message content

- Synchronize a remote WSDL file

- Add static XML headers to a service request

- Open file attachments

Create a test project

Tests that you create, and the assets associated with them, reside in a test project. You can create the project separately, or you can simply record a test, which automatically creates a project named testproj.

- Select File | New | Project | project_type

- Type a name for the project.

Do not use a project name containing spaces. The only allowed characters in the Project Name field are [a-z], [A-Z], [0-9], "-", "_", and "."

- Select: Use default location.

- Optional: Click Next and select the folders to create in the new project.

These folders organize your files by asset (Tests, Schedules, Results, and so on).

- Click Finish.
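The naming rule in the steps above can be checked before you type a name into the wizard. The following is a minimal sketch, not part of the product: the function name `is_valid_project_name` is hypothetical, and the pattern simply encodes the allowed characters listed above.

```python
import re

# Allowed characters per the naming rule above: letters, digits, "-", "_", and ".".
# Spaces are not in the set, so names containing spaces are rejected.
_PROJECT_NAME = re.compile(r"[A-Za-z0-9._-]+")


def is_valid_project_name(name):
    """Return True if name is non-empty and uses only the allowed characters."""
    return _PROJECT_NAME.fullmatch(name) is not None
```

For example, `is_valid_project_name("my-project_1.0")` is accepted, while `is_valid_project_name("my project")` is rejected because of the space.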

Performance testing tips

| Number of computers | Have at least two computers. Play back schedules on an agent computer separate from the workbench computer. |

| Number of virtual users (vusers) at remote locations | Do not overload the remote agent computer. If you exceed the number of vusers that the remote computer can run, the performance measurements of the server will be skewed. For a computer with a 1 GHz processor and 1 GB of RAM, do not exceed 1000 concurrent vusers. |

| TCP/IP ports | On computers with Microsoft Windows, the typical limit for TCP/IP ports is 5000. To observe port use, run netstat -a. If the largest port number you see is 5000, you need to raise the limit. To do so, open the registry, go to HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters, create a new DWORD value named MaxUserPort, and set its value to a number up to 65000. Restart the computer. |

| Open file limit for Linux | Computers that are running Linux need a per-process open file limit higher than 1024. As root, enter ulimit -n 30000 (or higher) before starting Agent Controller. |

| Looping within tests | If you are stress testing a server, your test typically contains a loop. Your connection behavior differs depending upon whether the loop is set at the schedule level or at the test level. Setting a loop at the test, rather than the schedule, level gives you a performance advantage, because the connections are reused during the looping process. |

| Logging levels | After the test is stable, for maximum performance, reduce the test log level and problem determination log level and sample a small number of users. Increase the statistics sample interval to 30 or 60 seconds for long-running tests. |

| Workbench heap size | The JVM heap size on the workbench is based on the available physical memory. Do not run the workbench on a computer with less than 768 MB of physical memory. The maximum workbench heap size depends on your JVM. To increase the workbench heap size, set -Xmx in the RPT_HOME/eclipse.ini file. For Windows, if the physical memory is 3 GB or more, the maximum heap size must not exceed 1200 MB. For Linux, the maximum heap size is approximately 3000 MB. If the workbench is sluggish or fails to start after you increase the heap size, reset the heap size to the default by removing the VMARGS=-Xmx line from the eclipse.ini file. |

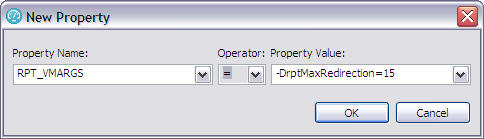

| Location (agent) heap size | To access maximum heap, after one successful test of any size, search for a location (agent) attribute called RPT_DEFAULT_MEMORY_SIZE. If you cannot find this attribute, you can specify a maximum heap by creating a new attribute: RPT_VMARGS=-Xmx1500m (for example, max heap 1.5 GB). |

| Disk space | Verify that there is sufficient free disk space on the workbench and agent computers. Also, verify that there is sufficient free disk space on the drive containing the system temporary directory. |

| Record length | If you record for a relatively long time, test generation also takes a long time. If test generation is taking a relatively long time, try shorter recording scenarios. |
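The TCP/IP ports tip above suggests scanning netstat output for the largest port in use. A minimal sketch of that check follows. It assumes numeric output (as produced by adding the -n flag, for example netstat -an) with the local address in the second column; the function name and the sample text are illustrative only and use documentation-reserved addresses.

```python
import re

# Matches a trailing ":port" in an address token such as "192.0.2.10:4998".
_PORT = re.compile(r":(\d+)$")


def highest_local_port(netstat_output):
    """Return the largest local port found in netstat-style text, or None."""
    highest = None
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: protocol, local address, foreign address, [state].
        if len(fields) < 3 or fields[0].upper() not in ("TCP", "UDP"):
            continue  # skip headers and blank lines
        match = _PORT.search(fields[1])
        if match:
            port = int(match.group(1))
            if highest is None or port > highest:
                highest = port
    return highest


# Illustrative sample, not real output from any computer.
SAMPLE = """
  Proto  Local Address          Foreign Address        State
  TCP    192.0.2.10:4998        198.51.100.7:80        ESTABLISHED
  TCP    192.0.2.10:5000        198.51.100.7:80        TIME_WAIT
  TCP    0.0.0.0:135            0.0.0.0:0              LISTENING
"""
```

If `highest_local_port(SAMPLE)` reports 5000, as it does for this sample, the tip above applies and the MaxUserPort value is worth raising.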

Record HTTP tests

When you record a test, the test creation wizard...

- records your interactions with a web-based application

- generates a test from the recording

- opens the test for editing

I have found that Firefox is a reliable browser for recording tests, but Internet Explorer and other browsers should also work.

Remove temporary files

To ensure the recording accurately captures HTTP traffic, remove temporary files from the web browser's cache before you record a test.

From Internet Explorer, select Tools | Options | General | Delete | Delete Browsing History | Delete | OK

From Mozilla Firefox, select Tools | Options | Advanced tab | Network tab | Clear Now | OK

Allow recorded pages to load completely

When recording, wait for each page to load completely. This wait does not affect performance results, because you can remove extra think time when you play back the test.

Record with IP addresses

When recording, you can enter the IP address of the website to connect to instead of entering the host name. When you play back the test, however, make sure that the IP address can resolve to a host name. Typically, this resolution occurs via a reverse DNS lookup.

While recording a test in Internet Explorer, if you use localhost in the URL, Internet Explorer does not route the traffic through Rational Performance Tester Agent, and the test will not contain any recorded data. You must use a host name or IP address to record the traffic.

To resolve the IP address, map each IP address to a host name in C:\windows\system32\drivers\etc\hosts

If an address cannot be resolved, your test shows a slower connection time. This time might be delayed by as much as 8 seconds, depending on the network configuration of the computer.
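As a rough illustration of the mapping described above, the sketch below formats hosts-file lines and checks whether an address resolves to a host name. The helper names are hypothetical, and the address and host name in the usage note are placeholders; `socket.gethostbyaddr` is the standard-library reverse lookup.

```python
import socket


def hosts_entries(mapping):
    """Format {ip_address: host_name} pairs as hosts-file lines (IP, tab, name)."""
    return "\n".join(f"{ip}\t{name}" for ip, name in mapping.items())


def reverse_lookup(ip):
    """Return the host name that ip resolves to, or None if resolution fails."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # covers socket.herror and socket.gaierror
        return None
```

For example, `hosts_entries({"192.0.2.10": "appserver.example.com"})` produces one line that could be appended to the hosts file, and `reverse_lookup("192.0.2.10")` returning None suggests that playback may show the slower connection time described above.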

Configure Internet Explorer for recording from a secure web site

You can suppress the security warning that Internet Explorer displays when you are recording from a secure web site.

Performance Tester uses a proxy recording program that intercepts all traffic between the browser and the web server. During recording at a secure web site (with a web address that starts with https://), by default you see a security warning before every action and must confirm your acceptance of a security risk to continue.

If you do nothing, this warning is displayed with every browser action, and you must repeatedly click Yes to continue. Performing the following procedure installs the recorder certificate on the local host as a trusted authority and thereby disables warnings from Internet Explorer during recording at secure web sites. This procedure does not affect other browsers that record from secure web sites; they will still display warnings before every action.

To disable security warnings when using Internet Explorer to record from a secure web site:

- During test recording, the first time the warning is displayed, click View Certificate.

- In the Certificate window, click Install Certificate.

- In the Certificate Import Wizard window, click Next.

- On the second page of the wizard, click Next.

- On the last page of the wizard, click Finish.

- In the Root Certificate Store confirmation window, click Yes.

- In the window that reports that the import was successful, click OK.

- In the Certificate window, click OK.

- In the Security Alert window, click OK.

Record an HTTP test

The following recorders are available for recording HTTP traffic from a browser:

| SOCKS proxy recorder | Use when no proxy connections are required. |

| HTTP proxy recorder | Use when proxy connections are required to connect to the network or when the client program does not support SOCKS. |

| Socket recorder | Use for low-level network traffic when the client does not support proxies. |

Because the proxy recorder relays SSL traffic between the client and the server, the client might require the proxy recorder to authenticate itself as the server, and the server might require the proxy recorder to authenticate as the client. If the client program requires an authenticated server, you must either...

- Have access to the server certificate keystore and provide it to the proxy recorder

- Configure the client to accept the default certificate from the proxy recorder instead of the certificate from the actual server

To record an HTTP performance test with a browser:

- In the Performance Test perspective, on the toolbar, click the New Test From Recording icon, or click File | New | Test From Recording

- In the New Test From Recording wizard, click Create a test from a new recording, select HTTP Test, and click Next.

If you are recording sensitive data, click Recording encryption level and select the encryption level to record.

- On the Select Location page, select the project and folder to create the test in, type a name for the test, and click Next.

If necessary, create a performance test project or folder by clicking Create the parent folder.

- On the Select Client Application page, select the web browser to use.

The type of application defines the recorder that can be used. The following client application types are supported for recording an HTTP test:

| Microsoft Internet Explorer | Records traffic sent and received with Internet Explorer. |

| Mozilla Firefox | Records traffic sent and received with Firefox. |

| Google Chrome | Records traffic sent and received with Chrome. |

| Apple Safari | Records traffic sent and received with Safari. |

| Opera | Records traffic sent and received with Opera. |

| Managed Application | Starts a browser not in the list. |

| Unmanaged Application | Records HTTP traffic from one or multiple client programs that use a proxy. Manually start the client programs, and the proxy recorder records all traffic that is sent and received through the specified network port. |

- Depending on your selection in step 4, take one of the following necessary steps. On the Recorder Settings page, depending on the browser that you selected, specify these details:

- If you selected a browser, specify the recording method.

- To record HTTP or SOCKS traffic through a proxy, click Record traffic with the proxy recorder.

To record low-level network traffic for applications where a proxy cannot be used, click Record traffic with the socket recorder.

Use this option primarily when you are not using Mozilla Firefox or Microsoft Internet Explorer.

When using proxy recording, you can filter out HTTP or HTTPS requests to specific endpoints so that any requests to those endpoints are not recorded.

- If the server requires client SSL authentication, provide the client certificate keystore for the proxy recorder to be authenticated by the server as though the proxy recorder were the client. Select the check box The server requires a specific client certificate. Specify the file name and password of the server certificate keystore. If multiple certificates are required, click Multiple certificates, and click Add to specify a certificate keystore file name and password for each host name and port.

- If you selected Mozilla Firefox, you can choose to use a temporary Firefox profile.

This starts Firefox without any bookmarks, plug-ins, or toolbars that might be associated with your usual profile. Select the Use an alternate Firefox profile check box, and then select Use a temporary Firefox profile. This option is also useful if you need a prompt to download a server certificate interactively when recording.

- If you clicked Record traffic with the proxy recorder, click Advanced to specify whether to use an HTTP or SOCKS proxy recorder, to review and edit network connection settings that the browser uses, or to specify advanced SSL authentication settings. If you clicked Record traffic with the socket recorder, specify advanced SSL authentication settings.

- If you selected Managed Application...

- On the Recording Method page, click a recorder to record HTTP traffic and click Next.

- On the Managed Application Options page, for Program path, click Browse to select the program. If necessary, specify the Working directory, and in the Arguments field, type the command-line arguments that the program requires. Click Next.

- If the program requires user input from a command line interface, select the Open console for user input check box. Click Next.

- Depending on the recording method that you selected, complete one of the following steps:

- On the Proxy Recorder Settings page, specify whether to use an HTTP or SOCKS proxy recorder, review and edit network connection settings that the browser uses, or specify advanced SSL authentication settings.

- On the Socket I/O Recorder Secured Settings page, specify advanced SSL authentication settings.

- If you selected Unmanaged Application, on the Proxy Recorder Settings page, specify whether to use an HTTP or SOCKS proxy recorder, review and edit network connection settings that the browser uses, or specify advanced SSL authentication settings.

- Click Finish. A progress window opens while your browser starts.

- In the browser address field, type the address of the web-based application to test, and activate the link.

If you enter the address of a secure website (one that starts with https:), your browser might display a security alert. Depending on the security certificate for the site, you might be required to accept a security risk to proceed with the recording. If you are not prompted to accept a certificate, and instead just get an SSL error, you may need to use a temporary profile.

- Complete the user tasks to test. While you are recording, follow these guidelines:

- Wait for each page to load completely. This wait does not affect performance results, because you can remove extra waiting time (think time) when you play back the test.

- Do not change any browser preferences.

You can use the Recorder Test Annotations toolbar to add comments, record synchronizations, or take screen captures during the recording.

- To change the page name, click the Change page name icon.

In the resulting test, the page element in the test editor uses the new name; however, the original name is preserved in the Page Title Verification Point area so that page title verification points still work correctly.

- To add a comment to the recorded test, click the Insert comment icon.

You are prompted for a comment.

- To add a screen capture to the recorded test, click the Capture screen icon.

Screen and window captures make your tests easier to read and help you visualize the recorded test. You can change the settings for screen captures and add a comment to the image.

- To manually add a synchronization point to the recording, click the Insert synchronization icon.

- To manually add a transaction folder to the recording, click the Start Transaction and Stop Transaction icons to start and stop the transaction.

Transactions can be nested.

- To insert a split point into the recorded test, click the Split point icon.

With split points, you can generate multiple tests from a single recording, which you can replay in a different order with a schedule.

- To filter packets that display during recording sessions, click the Filter packets icon.

You can specify the filter criteria for the following elements:

- SAP packet type

- SAP packet attribute

- Socket packet type

- Socket packet attribute

- Packet type

- Proxy connection IDs

- Citrix events

- A group of conditions

- Remote host

You can add and remove packet filters as needed.

- After finishing the user tasks in the browser, stop the recorder. You can stop the recorder by closing the client program or by clicking the Stop icon in the Recording Control view.

A progress window opens while the test is generated. After the test is complete, the Recording Control view displays the Test generation completed message, the test navigator lists your test, and the test opens in the test editor.

Split an HTTP test during recording

You can insert split points when you record a test. With split points, you can generate multiple tests from a single recording that you can replay in a different order with a schedule. You can also create a schedule containing all of the tests that are generated from the split points.

During the recording process, you can select the option to create a schedule for the tests that are generated from the split points. The schedule will contain these attributes:

- One user

- One user group for the local computer

- All of the tests from the recording, in serial order

- One stage: Run until finished

- Recorded think times, with the maximum think time set to 2 seconds

- Statistics:

- Statistics log level: All

- Statistics sample interval: 5 Seconds

- Only store All Hosts statistics

- Test Log:

- Show errors and failures: All

- Also show warnings: All

- And also show all other types: All

- Problem Determination log level: Warning
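The effect of a maximum think time, such as the 2-second limit in the generated schedule above, can be pictured as a simple cap on each recorded delay. This is only an illustration of the idea; the source does not describe how the product applies the limit internally, and the function name is hypothetical.

```python
def cap_think_times(recorded_seconds, max_seconds=2.0):
    """Return the recorded think times, each limited to max_seconds."""
    return [min(t, max_seconds) for t in recorded_seconds]
```

With recorded think times of 0.5, 2.0, and 7.3 seconds, the capped values are 0.5, 2.0, and 2.0 seconds: short pauses are kept as recorded, and only the long pause is shortened.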

To insert split points when you record a test:

- Start recording the test. The Recorder Test Annotations toolbar opens near the top of the screen.

- To insert a split point into the recorded test, click the Split point icon.

The Insert Split Point window is displayed.

- Click Test name, and then type a name for this section of the test.

You are naming the previous section of the test, not the upcoming section of the test.

Repeat this step between recorded user actions as needed to split tests.

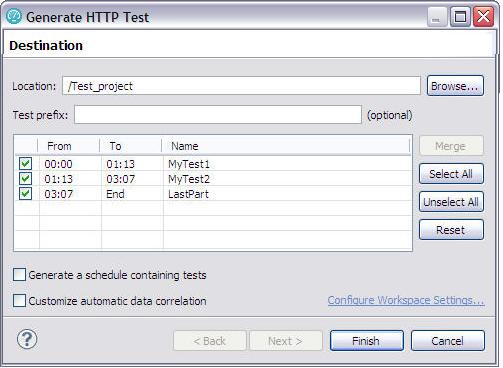

- Close the client program to stop the recording. The Generate HTTP Test window is displayed.

- Type a Test prefix for all the tests in the schedule.

- To create a schedule for the tests, select Generate a schedule containing tests.

When you select this option, you can modify the name of the schedule.

- To choose automatic data correlation or rule-based data correlation, select Customize automatic data correlation. If you select this option and choose rule-based data correlation, you can specify which data correlation rule sets to use.

- Click Finish.

The Test Generation window displays the status of generating the tests and schedule and the data correlation. You can view the test generation log from this window.

- When test generation is complete, you can select the test to open and then click Open Selected Tests, or you can click Close to finish this process.

The schedule and tests are generated using the names that you specified in the wizard.

Returning a browser to its initial state if recording is interrupted

Browser options are changed during recording and are reset after recording is complete. If you interrupt recording, the browser stays in its changed state, and you may receive The page cannot be displayed messages. To fix this, reset the browser to its initial state.

- Right-click the Internet Explorer icon, and select Properties.

- On the Connections page, click LAN Settings.

- If you do not use a proxy, in the Local Area Network (LAN) Settings window, clear Use a proxy server for your LAN.

- If you use a proxy:

  - In the Local Area Network (LAN) Settings window, select Use a proxy server for your LAN, and then click Advanced.

  - In the Proxy Settings window, add the proxy address and port number to the HTTP and the Secure fields.

  - Remove the proxy address and port number from the Socks field.

  - In the Proxy Settings window, click OK.

- In the Local Area Network (LAN) Settings window, click OK.

- In the Internet Properties window, click OK.

Create HTTP tests manually

Create an empty test

You can create an empty template for a test that you plan to write manually.

- In the Test Navigator, right-click a project and click New | Other | New | Test | Test Assets | New Test | Next

- In the Enter, create, or select the parent folder field, click the test project in which to store the test.

- In the Name field, type a name for the test, and then click Next.

- Optionally, in the Test Attributes window, type a description for the test, and then click Next.

- In the Protocol and Features window, select HTTP Protocol, and then click Next.

- In the HTTP Extension window, enter the name of the host, and set the options for the test.

- In the New test summary window, inspect your selections, and then click Finish.

- Confirm to open the editor.

A test template opens for you to edit. With the default options, the HTTP test template contains one page, one request per page, and a response for the request.

Add templates for new elements

You can add a template for a new test element that you plan to write by hand.

To add a template for a page, page request, or basic authentication block to a test:

- Open the test. If you are writing a test completely by hand, you can create an empty test.

- Click the parent or sibling element.

- Click Add or Insert. The editor enforces the test hierarchy: tests contain pages, pages contain requests, and requests contain authentication blocks.

- Add adds the template to the bottom of the selected element: a page is added to the bottom of the test, a request is added to the bottom of the selected page, and an authentication block is added to the end of the selected page request.

- Insert inserts the template at the point of the cursor: a page is inserted before the selected page, a page request is inserted before the selected request, and an authentication block is inserted at the top of the selected page request.

- Select one of the following options. The options displayed depend on the element that you select.

| Option | Description |
|---|---|
| HTTP Page | Adds a page to the test. |
| HTTP Request | Adds a request to the test. |
| Basic Authentication | Adds an authentication block to a request. A folder named Authentication is added, and the Test Element Details area displays the User id, Password, and Realm fields. |
| Custom Code | Adds a block of custom code to the test. |
| Delay | Adds a delay to the test. |
| Loop | Runs part of the test a specific number of times. In addition to setting the number of iterations, you can control the rate of iterations and randomly vary the delay between iterations. |
| Condition | Adds a conditional block to the test. In most cases, a conditional block issues HTTP requests depending on the value of a reference or field reference. The reference or field reference must exist in the test and precede the conditional block. |
| Transaction | Enables you to view performance data about the transactions that you have added. In the Test Element Details area, give the transaction a meaningful name. This is useful in the Transactions report, which lists transactions by name. |
| Random Selector | Enables you to run test elements in random order. |
| Comment | Adds a comment that appears in the Test Element Details area and in the actual test. |

Add a header

You can add a standard header or a custom header to a request or response.

- Open the test.

- In the test hierarchy, click a request (to add a request header) or the Response Data folder for a request (to add a response header).

- In the Test Element Details area, locate the Request Headers table, and then click Add. The Add/Edit Headers window opens.

- To add a standard header:

- In the Available Headers list, locate the header to add and click it. Use the Quick search field (start typing the name of a header) and Header types list (select the type of header you are looking for) to quickly locate a header in the Available Headers list.

- Click the right angle bracket (>). The selected header moves into the Selected headers list and your cursor is placed in the value column.

- Type the value for the header.

- To add a custom header:

- In the Header types list, select Custom.

- At the bottom of the window, in the New custom header area, type the header information in the Name field and the Value field, and then click Insert. The custom header is added to the Selected headers list.

- When you have finished adding headers, click OK.

Edit header contents

You can modify the contents of the headers that are contained in requests and responses.

- Open the test.

- In the test hierarchy, click a request (to edit a request header) or the Response Data folder for a request (to edit a response header).

- In the Test Element Details area, locate the Request Headers table, and double-click the cell containing a value to edit. An ellipsis button is displayed in the Value column.

- Click Modify.

- In the Edit Header window, edit the header value as desired. You can create a reference or field reference in the header value.

- Click Table View to return to the Request Headers table.

Record Adobe Flex content

You can record an HTTP session containing traffic to and from a .swf Adobe Flex application.

The Adobe Flash plugin must be installed in your web browser to run Flex applications (.swf files) and to record the HTTP session.

Adobe Flex technology allows communication through XML, SOAP, or AMF. AMF is compressed binary data that must be transformed into XML to be used in an HTTP performance test.

- Record the HTTP session. By default, Rational Performance Tester transforms the AMF format into the XML format.

After the transformation, the XML data is generated in the test with "amf" prefixes in the names, for example:

    <amf3Property amfName="zipcode" amfType="amf3String" zipcode="94103"/>

The generated XML can be used for data correlation. Only the amfValue attributes or attributes without the amf prefix can be used for data correlation.

Do not modify or use data correlation on any XML attributes that start with the amf prefix except for amfValue. If these attributes are modified, the replay of the test will cause errors.

Record Microsoft Silverlight applications

To test the performance of an application that was developed by using Microsoft Silverlight, you can record the application.

Rational Performance Tester supports Microsoft Silverlight 5. Microsoft Silverlight communicates with the server in the Windows Communication Foundation (WCF) Binary format. After recording the Microsoft Silverlight application, you can transform the format to XML for better readability and to apply data correlation.

- Record the HTTP application

Change HTTP test generation preferences

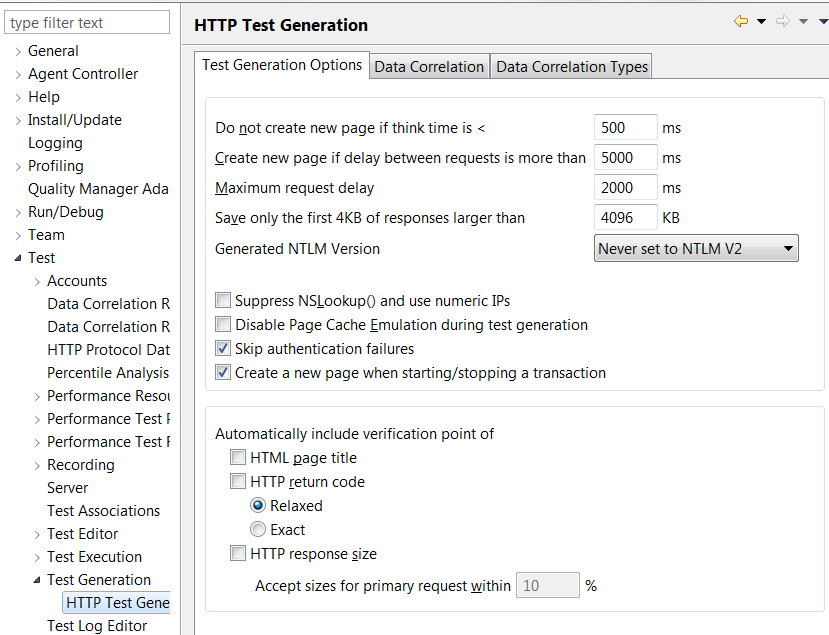

- Click Window | Preferences | Test | Test Generation | HTTP Test Generation

- Select the preference to change.

- Do not generate a new page if think time is less than

- Enter the shortest time, in milliseconds, that the generator uses as a delay to emulate user think time for an HTTP page. If your tests contain fewer pages than expected, try a shorter interval.

- Generate a new page if delay between requests is greater than

- Enter the longest delay, in milliseconds, that the generator allows between page requests. If this time is exceeded, a new page is generated. If your tests contain more pages than expected, try a longer interval.

- Maximum request delay

- Enter the longest delay, in milliseconds, that the generator allows before truncating HTTP requests. The requests are truncated on the generated test. The recorded test still contains the original values, and you can get them back by generating a new test.

- Save only the first 4KB of responses larger than

- Enter the limit of response data, in KB, that the generator saves. If a response is larger than the specified limit, only the first 4 KB of data is saved.

- Suppress NSLookup() and use numeric IPs

- Select this option to shorten test generation time. The disadvantage is that IP addresses in a test are less user-friendly than web page format (www.example.com).

- Disable Page Cache Emulation during test generation

- Disable page cache emulation. When page cache emulation is enabled, caching information in server response headers is honored. Additionally, requests are not submitted to the server for content that is confirmed by the client as fresh in the local cache. Page cache emulation is enabled by default.

- Use Legacy Test Generator

- Select this option if you have been instructed to use the legacy HTTP test generator.

- Automatically include verification point of

- Click to specify the types of verification points to be automatically included. If a check box for a verification point is selected, the code and edit controls for this type of verification point are generated in all tests. Verification points can also be enabled or disabled within specific tests.

- Relaxed

- Response codes that are in the same category (for example, 200, 201, 203, 209) are considered equivalent. An error is reported if the response code is not in the same category.

- Exact

- An error is reported if the response code does not match the recorded value exactly.

- Accept sizes for primary request within

- If you are automatically generating response size verification points, click to specify the acceptable size range for primary requests. No error is reported if a response is within the specified percentage above or below the expected size. By default, for primary requests, HTTP response size verification points use range matching.
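
The Relaxed and Exact response code rules and the percentage-based size range can be illustrated with a few shell functions. This is only a sketch of the comparisons described above, not the product's implementation. The function names are hypothetical, and reading "category" as the hundreds digit of the status code (2xx, 3xx, and so on) is an assumption.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative and not part of the product.

# Relaxed match: two codes are equivalent when they share the same hundreds
# digit (assumed meaning of "category", for example 200 and 201 are both 2xx).
code_matches_relaxed() {
    [ $(( $1 / 100 )) -eq $(( $2 / 100 )) ]
}

# Exact match: the returned code must equal the recorded code.
code_matches_exact() {
    [ "$1" -eq "$2" ]
}

# Size match: the actual size must lie within +/- percent of the expected size.
# Integer arithmetic only: compare 100*|actual-expected| with percent*expected.
size_within_percent() {
    expected=$1 actual=$2 percent=$3
    diff=$(( actual - expected ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))
    fi
    [ $(( diff * 100 )) -le $(( expected * percent )) ]
}
```

For example, `code_matches_relaxed 200 201` succeeds while `code_matches_exact 200 201` fails, and `size_within_percent 1000 1040 5` succeeds because 1040 is within 5 percent of 1000.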

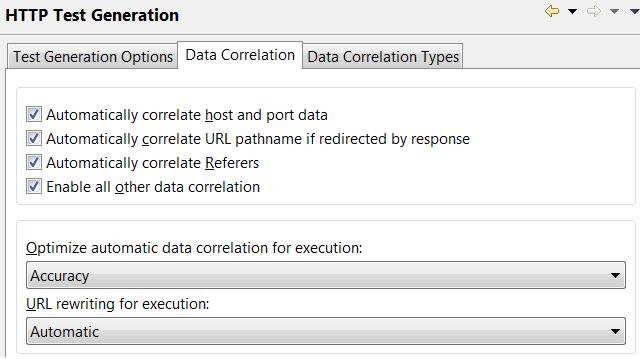

The data correlation preferences are as follows:

- Automatically correlate host and port data

- By default, host and port data is correlated automatically. If tests in a previous release have significant manual correlations, or you are using proxies, the migration of the replace-host functionality feature is likely to fail during playback. In this situation, clear the check box. When you reopen your tests, they will not have the automatic correlation feature in them.

- Automatically correlate URL pathname if redirected by response

- Specifies whether URL path names are correlated if they are redirected by a selected response code. If a check box for a response code is selected, the test generator performs correlations for that response code. This option applies only to responses that are redirects, with a status code between 300 and 399.

- Automatically correlate Referers

- By default, the Referer field in an HTTP request header is correlated automatically. Clear the check box if you plan to correlate Referers manually. If you run tests against servers that do not require a Referer field, clearing this check box reduces the number of correlations performed when the test runs, and can increase user throughput.

- Enable all other data correlation

- By default, request and response data is correlated automatically. Clear the check box to disable automatic data correlation of request and response data. Consider clearing the check box if you create your own data correlation rules in the rules editor.

- Optimize automatic data correlation for execution

- Specifies the characteristic that tests are optimized for.

- With the Accuracy setting (the default), many references with an identical session ID value are created and the value of each session ID is substituted from the nearest previous reference.

- To make a test run faster by reducing the number of references that are created during automatic data correlation, change the optimization to Efficiency. For example, consider a test where a session ID, which is assigned when a user logs in, is included in every subsequent request in the test. With the Efficiency setting, all session IDs are substituted from a single previous reference. The downside of this setting is that it can result in incorrect correlations. For example, a request containing the Joe Smith string might be incorrectly correlated with a request containing the Joe Brown string.

- URL rewriting for execution

- Specifies how web addresses (URLs) are rewritten during test execution.

When correlating data, the test generator replaces part of a URL request string with a value that the server returned in response to a previous request.

- Automatic (default): The test generator automatically determines when rewriting the entire URL during substitution will facilitate test execution.

- On: Select to rewrite URLs in every instance of data correlation. This produces larger tests that take longer to run. Try this setting if your tests fail unexpectedly.

- Off: Select to manually correlate the instances where URL rewriting is needed. This setting might cause execution errors.

To turn data correlation off entirely or to set whether names are automatically generated for data correlation references, click Window | Preferences | Test | Test Generation | HTTP Test Generation | Data Correlation tab

The data correlation type preferences are as follows:

- Data Correlation Types

- Specify when to generate data correlation constructs. With the Automatic setting, the test generator creates the required constructs where needed. If the test does not contain the required constructs, change the setting to On, which will always perform data correlation. If tests do not require a specific construct, select Off, which has the additional benefit of improving performance on subsequent test generation.

- For Jazz Foundation Services, On and Automatic enable data correlation for Jazz applications that use REST storage or query APIs from Jazz Foundation Services. An example of such an application is Rational Requirements Composer. Although data correlation does not typically apply to browser-based Jazz web clients, it may be useful for other HTTP client-server applications that use REST services and the Atom Publishing Protocol for updating web resources.

- For Jazz Web Applications, On and Automatic enable data correlation for Jazz web applications that use the Jazz Foundation web UI framework. Examples of these web applications are the web interfaces for Rational Quality Manager and Rational Team Concert. Data correlation can also be useful for other web applications that contain JavaScript that employs JSON for client-server data exchange. This is a common practice with DOJO- and AJAX-based applications.

- After changing a setting, click Apply.

Configure the workbench for NTLMv2 authentication

NTLMv2 authentication requires access to a set of third-party libraries. To use NTLMv2 authentication, you must prepare an environment with these libraries, set the class path of the Java JRE that Eclipse uses, and set the class path of the virtual machine that the Agent Controller uses.

Before testing SOAP-based services that use security algorithms, obtain and install a third-party library file. By default, the HTTP test generation does not enable NTLMv2 authentication, even if it was part of the recording. To automatically enable the correct NTLM version from the recording, set the NTLM V2 setting to Automatic in the HTTP Test Generation preferences.

To configure the workbench to enable NTLMv2 authentication

- Download the archive from http://jcifs.samba.org/src/jcifs-1.3.17.zip

- Extract the ZIP file and copy the JAR file to:

  C:\\IBM\IBMIMShared\plugins\com.ibm.rational.test.lt.provider_<version>

- To automatically enable the correct NTLM version from the recording, in the workbench, click Window | Preferences | Test | HTTP Test Generation | NTLM v2 | Automatic

When a test was recorded with NTLMv2, the NTLM V2 setting is selected in the test editor, under NTLM Authentication.

Digital certificates overview

The digital certificates feature enables you to run tests against servers that use SSL for applications that require client-side digital certificates to authenticate users.

A digital certificate is a file that binds a public cryptographic key with an identity (a user or an organization). Trusted certificate authorities issue digital certificates, which are then used to authenticate users and organizations for access to websites, email servers, and other secure systems. A certificate store is an archive file containing almost any number of digital certificates, possibly certificates that are issued from different certificate authorities.

To use digital certificates in tests:

- Create a digital certificate store.

- Record a test that requires a digital certificate.

- Associate a digital certificate with a test for playback.

- Optionally, you can associate the digital certificates in one or more digital certificate stores with a datapool.

Create a certificate store by running the supplied KeyTool command-line program. The program creates a certificate store containing digital certificates.

Record a test that requires using a digital certificate. Specify the certificate and password to use, and then begin recording the test. Browse the website as you typically would to record a test.

After you have finished recording, open the test for editing. On the Common Options page, under Digital Certificates, click Add. Type the name of the certificate store that you created previously; then select the certificate to use. Save the test. When you run this test, the digital certificate from the certificate store is submitted to the server.

To use a certificate store with a datapool, open the test for editing. On the Common Options page, click Add Datapool. Create a datapool with two columns containing a list of the certificates in the certificate store and a list of passphrases for the certificates. Select Fetch only once per user. Save the datapool. On the Common Options page, under Digital Certificates, click Add. Select the certificate store that you created previously from the Certificate Store column. Insert a Certificate Name for the digital certificate. Highlight this name, and then select Substitute from datapool. Choose the datapool added previously, and then choose the column with the certificate name. Repeat this process to substitute passphrases from the datapool column containing passphrases. Save the test. Add the test to a schedule. When you run this schedule, the certificates from the certificate store are submitted to the server.

Digital certificate creation overview

To use digital certificates to run tests against applications that require client-side digital certificates to authenticate users, work with the appropriate server administrators to determine the types of certificates that you need to create.

In cryptography, a public key certificate is a document that uses a digital signature to bind a public key with a physical identity. These certificates are often referred to generically as digital certificates or client digital certificates. The most common standard for digital certificates is the X.509 standard.

In public key cryptography, each certificate has two associated keys: a public key and a private key. The public key is incorporated into the X.509 certificate and is always available with the certificate itself. The private key is always kept private (meaning, it is never transmitted). For ease of portability, the two keys (and the certificate) can be included in one, encrypted and passphrase-protected, format known as PKCS#12.

In order to verify the authenticity of a certificate, it is digitally signed by another certificate, known as a Certificate Authority (CA). This CA certificate may be one created (and kept secure) by a company hosting a secure application, or it could be created by a company such as Verisign.

When a web application requires digital certificates, an administrator typically creates digital certificates for each authorized user. The administrator digitally signs each certificate using the system CA certificate. These certificates, along with the public and private keys, are distributed to users. Often these keys will be distributed in the PKCS#12 format. Users then import these certificates into their web browsers. When the browser is challenged by the server, it will produce its certificate.

When importing certificates for web applications, select the check box that marks the keys as exportable. With this setting, the certificate can be exported to a PKCS#12 formatted file for later use by other programs.

Do not use certificates that are assigned to actual users for performance testing purposes. Use test certificates that do not correspond to actual users.

There are four types of certificates that can be used in testing:

- Self-signed certificates

- Signed certificates

- Certificate authority (CA) certificates

- Unsigned certificates (rarely used)

Self-signed certificates are used when no entity needs to vouch for the authenticity of the certificate. These are the simplest certificates to create and use. Typically, however, a signed certificate is used to represent a particular user.

Signed certificates are used when a certificate needs to be created for and issued to one, and only one, user. Signed certificates are signed by a certificate authority (CA).

Certificate authority (CA) certificates are self-signed certificates used to sign (certify) certificates.

Unsigned certificates are certificates that are neither signed by a CA nor self-signed. Most web applications do not use unsigned certificates.

When you create a self-signed or signed certificate (including CA certificates) you can specify a subject. The subject of a certificate is the set of attributes of an X.500 Distinguished Name that is encoded in the certificate. The subject enables the recipient of a certificate to see information about the owner of the certificate. The subject describes the certificate owner, but is not necessarily unique. Think of subjects as entries in a telephone book; there can be multiple entries for Patel Agrawal, but each entry refers to a different person.

The subject can contain many different types of identifying data. Typically, the subject includes the following:

| Attribute | Example |
|---|---|
| COMMON NAME (CN) | CN=Patel Agrawal |
| ORGANIZATION (O) | O=IBM Corporation |
| ORGANIZATIONAL UNIT (OU) | OU=IBM Software Group |
| COUNTRY (C) | C=IN |
| LOCALITY (L) | L=Bangalore |
| STATE or PROVINCE (ST) | ST=Karnataka |
| E-MAIL ADDRESS (emailAddress) | emailAddress=agrawal@abc.ibm.com |

This information can be typed as one string, using forward slashes to separate the data.

For example, the above subject would be typed as follows:

/CN=Patel Agrawal/O=IBM Corporation/OU=IBM Software Group/C=IN/L=Bangalore/ST=Karnataka/emailAddress=agrawal@abc.ibm.com
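
Typing the string by hand becomes error-prone when many test certificates are needed. As a minimal sketch, a small shell function can join the attribute pairs with forward slashes. The `build_subject` name is a hypothetical helper for illustration and is not part of OpenSSL or the product.

```shell
#!/bin/sh
# Hypothetical helper: joins ATTRIBUTE=value pairs into one subject string,
# placing a forward slash in front of each pair.
build_subject() {
    subject=""
    for pair in "$@"; do
        subject="$subject/$pair"
    done
    printf '%s\n' "$subject"
}

# Reproduces the example subject shown above.
build_subject "CN=Patel Agrawal" "O=IBM Corporation" "OU=IBM Software Group" \
    "C=IN" "L=Bangalore" "ST=Karnataka" "emailAddress=agrawal@abc.ibm.com"
```

Quote each pair so that values containing spaces, such as the common name, stay together as one argument.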

Create a digital certificate with OpenSSL

You can use the OpenSSL program to create digital certificates for use with tests.

OpenSSL is available from the OpenSSL Project at http://www.openssl.org/.

- Create a certificate authority (CA).

  For the purposes of testing, this CA takes the place of a recognized CA on the Internet, such as VeriSign. Use this CA to digitally sign each certificate that you plan to use for testing.

  - Create a certificate request (CSR) file.

    The "subject" (-subj) describes the user of the certificate. Enter dummy values as shown. The following command line sets the password for the certificate to abcdefg.

        openssl req \
          -passout pass:abcdefg \
          -subj "/C=US/ST=IL/L=Chicago/O=IBM Corporation/OU=IBM Software Group/CN=Rational Performance Tester CA/emailAddress=rpt@abc.ibm.com" \
          -new > waipio.ca.cert.csr

  - Create a key file, waipio.ca.key, to store the private key.

    This removes the password protection from the private key so that you do not have to type the password every time you sign a certificate. Because the password protection has been removed, use the key file for testing purposes only.

        openssl rsa \
          -passin pass:abcdefg \
          -in privkey.pem \
          -out waipio.ca.key

  - Create an X.509 digital certificate from the certificate request.

    The following command line creates a certificate signed with the CA private key. The certificate is valid for 365 days.

        openssl x509 \
          -in waipio.ca.cert.csr \
          -out waipio.ca.cert \
          -req \
          -signkey waipio.ca.key \
          -days 365

  - Create a PKCS#12-encoded file containing the certificate and private key.

    The following command line sets the password on the P12 file to default. By default, Rational Performance Tester uses a password of default for all PKCS#12 files.

        openssl pkcs12 \
          -passout pass:default \
          -export \
          -nokeys \
          -cacerts \
          -in waipio.ca.cert \
          -out waipio.ca.cert.p12 \
          -inkey waipio.ca.key

  You now have a CA certificate (waipio.ca.cert), which can be installed into the web server under test, and a private key file (waipio.ca.key) that you can use to sign user certificates.

- Create a digital certificate for a user.

  - Create a CSR file for the user. Set the initial password to abc. Optionally, provide an appropriate subject.

        openssl req \
          -passout pass:abc \
          -subj "/C=US/ST=IL/L=Chicago/O=IBM Corporation/OU=IBM Software Group/CN=John Smith/emailAddress=smith@abc.ibm.com" \
          -new > johnsmith.cert.csr

  - Create a private key file without a password.

        openssl rsa \
          -passin pass:abc \
          -in privkey.pem \
          -out johnsmith.key

  - Create a new X.509 certificate for the new user, digitally sign it using the user's private key, and certify it using the CA private key.

    The following command line creates a certificate that is valid for 365 days.

        openssl x509 \
          -req \
          -in johnsmith.cert.csr \
          -out johnsmith.cert \
          -signkey johnsmith.key \
          -CA waipio.ca.cert \
          -CAkey waipio.ca.key \
          -CAcreateserial \
          -days 365

  - Optional: Create a DER-encoded version of the public key. This file contains only the public key, not the private key. Because it does not contain the private key, it can be shared, and does not need to be password protected.

        openssl x509 \
          -in johnsmith.cert \
          -out johnsmith.cert.der \
          -outform DER

  - Create a PKCS#12-encoded file.

    The following command line sets the password on the P12 file to default.

        openssl pkcs12 \
          -passout pass:default \
          -export \
          -in johnsmith.cert \
          -out johnsmith.cert.p12 \
          -inkey johnsmith.key

  Repeat this step to create as many digital certificates as needed for testing. Keep the key files secure, and delete them when they are no longer needed. Do not delete the CA private key file. You need the CA private key file to sign certificates.

Now you can install the CA certificate (waipio.ca.cert) into WebSphere. Optionally, create a user certificate specifically for your web server, and install it into WebSphere.

You can use user certificates individually to record tests. To use the user certificates (johnsmith.cert.p12) during test editing and playback, compress them in ZIP format to a file with the .rcs extension. This creates a digital certificate store. To learn more about digital certificate stores, see Create a digital certificate store. You can also import user certificates into your web browser to interactively test them in your environment.
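
The ZIP step can be scripted. The following sketch assumes that the Info-ZIP `zip` command is installed. `make_rcs` is a hypothetical helper name, and storing the files without directory paths is an assumption about what the store expects, not something the text above states.

```shell
#!/bin/sh
# Hypothetical helper: packs PKCS#12 files into a ZIP archive with the
# .rcs extension. Assumes the Info-ZIP "zip" command is available.
make_rcs() {
    store=$1
    shift
    # -j stores only the file names, without directory paths (an assumption
    # about the expected layout); -q suppresses progress output.
    zip -j -q "$store" "$@"
}

# Example:
#   make_rcs teststore.rcs johnsmith.cert.p12
```

Because the archive name already carries the .rcs extension, `zip` writes to that name and does not append .zip.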

Create a digital certificate store

The KeyTool command-line program enables you to create a Rational Certificate Store (RCS) file containing digital certificates for use with tests. A Rational Certificate Store (RCS) file is a compressed archive file containing one or more PKCS#12 certificates. You can also use the KeyTool program to remove certificates from a certificate store.

- Type the following command:

      java -cp rpt_home/plugins/com.ibm.rational.test.lt.kernel_version.jar com.ibm.rational.test.lt.kernel.dc.KeyTool \
        --store=file \
        --passphrase=certificate-passphrase \
        --add \
        --remove \
        --generate \
        --cert=certificate-name \
        --subject=subject-name \
        --ca-store=store \
        --ca-cert=ca-certificate-name \
        --ca-passphrase=ca-certificate-passphrase \
        --sign \
        --self-sign \
        --algorithm=algorithm {RSA | DSA} \
        --list

  If a value contains spaces, enclose the value in quotation marks.

  | Option | Description |
  |---|---|
  | --store | Required if adding or removing a certificate. The file name of the Rational Certificate Store (RCS) file. If the specified certificate store does not have the RCS extension, this extension will be added. |
  | --passphrase | Optional. The passphrase to place on the generated certificate. The default passphrase is default. |
  | --add | Optional. Adds the certificate to the certificate store. Used with --generate, this generates a certificate and adds it to the certificate store. |
  | --remove | Optional. Removes the certificate from the certificate store. This option cannot be used with the --add or --generate options. |
  | --generate | Optional. Generates a certificate. Used with --add, this generates a certificate and adds it to the certificate store. |
  | --cert | Required. The name of the certificate file to add, remove, or generate. If you are creating a certificate, the file name will be given the P12 extension. |
  | --subject | Optional. The X.500 Distinguished Name for the certificate. If no subject is specified, a default subject will be provided. |
  | --ca-store | Required if signing a certificate. The file name of the Rational Certificate Store (RCS) file from which to retrieve the CA certificate. |
  | --ca-cert | Required if signing a certificate. The name of the CA certificate file to use to sign another certificate. |
  | --ca-passphrase | Required if signing a certificate. The passphrase for the CA certificate. |
  | --sign | Optional. Signs the generated certificate using the specified CA certificate. This option cannot be used with --self-sign. |
  | --self-sign | Optional. Self-signs the generated certificate. This option cannot be used with --sign. |
  | --algorithm | Optional. This determines the encryption algorithm to use. The default is RSA. The options are RSA or DSA. |
  | --list | Optional. This prints the names of all certificates in a certificate store to standard output. This list can be used to create a datapool. |

- Use KeyTool to create and add as many digital certificates as you want.

- To create a datapool of the names of certificates in the certificate store, run KeyTool again with the --list option. This writes a list of names that can then be imported to a datapool.

You now have a digital certificate store that you can use with tests. Because the KeyTool program has many options, you might want to create an alias or script file to use to invoke KeyTool.
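One possible shape for such a script is sketched below in Python. It only assembles the option list and enforces the combination rules stated in the option table (for example, --remove cannot be combined with --add or --generate). The --name=value spelling for options that take a value is an assumption to verify against the KeyTool usage text. The function name is hypothetical, and the Java command that actually starts KeyTool is deliberately left out because it depends on your installation.

```python
from typing import List, Optional


def build_keytool_options(
    cert: Optional[str] = None,
    store: Optional[str] = None,
    passphrase: Optional[str] = None,
    subject: Optional[str] = None,
    ca_store: Optional[str] = None,
    ca_cert: Optional[str] = None,
    ca_passphrase: Optional[str] = None,
    algorithm: Optional[str] = None,
    add: bool = False,
    remove: bool = False,
    generate: bool = False,
    sign: bool = False,
    self_sign: bool = False,
    list_names: bool = False,
) -> List[str]:
    """Return KeyTool options as a list, after checking the documented rules."""
    if remove and (add or generate):
        raise ValueError("--remove cannot be combined with --add or --generate")
    if sign and self_sign:
        raise ValueError("--sign cannot be combined with --self-sign")
    if (add or remove) and not store:
        raise ValueError("--store is required when adding or removing a certificate")
    if sign and not (ca_store and ca_cert and ca_passphrase):
        raise ValueError("--ca-store, --ca-cert and --ca-passphrase are required when signing")
    if algorithm is not None and algorithm not in ("RSA", "DSA"):
        raise ValueError("--algorithm must be RSA or DSA")

    # Options that carry a value; the "=" separator is an assumption.
    valued = [
        ("--store", store), ("--passphrase", passphrase), ("--cert", cert),
        ("--subject", subject), ("--ca-store", ca_store), ("--ca-cert", ca_cert),
        ("--ca-passphrase", ca_passphrase), ("--algorithm", algorithm),
    ]
    # Options that are simple switches.
    flags = [
        ("--add", add), ("--remove", remove), ("--generate", generate),
        ("--sign", sign), ("--self-sign", self_sign), ("--list", list_names),
    ]
    options = [f"{name}={value}" for name, value in valued if value is not None]
    options += [name for name, enabled in flags if enabled]
    return options


if __name__ == "__main__":
    print(build_keytool_options(cert="user1", store="certs",
                                add=True, generate=True, self_sign=True))
```

The returned list can be appended to whatever java command line starts KeyTool in your environment, for example when calling subprocess.run.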

You do not have to use the KeyTool command-line program to create a certificate store. It is possible to use existing PKCS#12 certificates with RPT. PKCS#12 certificates can be exported from a web browser. PKCS#12 certificates encode the private key within the certificate by means of a password.

Do not use certificates associated with real users. Certificates associated with real users contain private keys that should not become known by or available to anyone other than the owner of the certificate. An intruder who gained access to the certificate store would have access to the private keys of all certificates in the store. For this reason, you must create, or have created for you, certificates that are signed by the correct certificate authority (CA) but that are not associated with real users.

Record a test with digital certificates

The test creation wizard starts when you record a test. This wizard combines these actions: recording a session with a web application, generating a test from the recording, and opening the test in the test editor. You can record a test from Internet Explorer (the default for the Windows operating system) or from another browser.

You must use a PKCS#12 digital certificate when recording performance tests.

- Click File | New | Test from Recording.

- In the Create New Test from Recording window, select Create Test from New Recording | RPT HTTP Recording | Next.

- In the list of projects, click the one in which to store this test and related files. The icon identifies projects that are suitable for containing tests. Tests stored in other projects will not run.

If you have not yet created a project, the Project Name field displays a default name of testproj, which you can change.

- In the Test file name field, type a name for the test, and click Next. The standard Navigator and the Java Package Explorer use this name, with extensions. You will also see this name in the Test Navigator.

- In the Enter Client Digital Certificate window, enable Use Client Digital Certificate, and then type or browse to the Path to the digital certificate file to use.

- Type the Pass Phrase, or password, for the digital certificate, and then click Finish.

A progress window opens while your browser starts.

The JRE that the workbench uses must support the level of encryption required by the digital certificate that you select. For example, you cannot use a digital certificate that requires 256-bit encryption with a JRE that supports only 128-bit encryption. By default, the workbench is configured with restricted or limited strength ciphers. To use less restricted encryption algorithms, you must download and apply the unlimited jurisdiction policy files (local_policy.jar and US_export_policy.jar). You can download unlimited jurisdiction policy files from http://www.ibm.com/developerworks/java/jdk/security/60/. Click "IBM SDK Policy files," and then log in to developerWorks. Download and extract unrestricted.zip, the 1.4.2+ version of the unlimited jurisdiction policy files. Before installing these policy files, back up the existing policy files in case you want to restore the original files later. Then overwrite the files in the RPT_HOME/jdk/jre/lib/security/ directory with the unlimited jurisdiction policy files. By default, this directory is C://IBM/SDP/jdk/jre/lib/security/.

- In the address box for your browser, type the address of the web-based application to test and activate the link.

- Perform the user tasks to test. While you are recording, follow these guidelines:

- Wait for each page to load completely. Waiting will not affect performance results, because you can remove extra "waiting time" (think time) when you play back the test.

- Do not change browser preferences.

- After you finish the user tasks, stop recording. To stop recording, close your browser or click the stop icon on the right side of the Recorder Control view bar. A progress window opens while the test is generated.

On completion, the Recorder Control view displays the message Test generation completed, the Test Navigator lists your test, and the test opens for you to inspect or edit.
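The policy-file step from the encryption note in this procedure (back up the existing files, then overwrite them with the unlimited jurisdiction versions) can be sketched in Python as follows. The two file names come from the note. The function name, the three directory arguments, and the choice to fail before changing anything when a replacement file is missing are assumptions made for illustration.

```python
import shutil
from pathlib import Path

# File names taken from the note on unlimited jurisdiction policy files.
POLICY_FILES = ("local_policy.jar", "US_export_policy.jar")


def install_policy_files(security_dir, unrestricted_dir, backup_dir):
    """Back up the current policy files, then overwrite them.

    security_dir:     the JRE lib/security directory of the workbench
    unrestricted_dir: where unrestricted.zip was extracted
    backup_dir:       where copies of the original files are kept
    Returns the list of backup paths that were written.
    """
    security = Path(security_dir)
    source = Path(unrestricted_dir)
    backup = Path(backup_dir)

    # Check every replacement file first so a missing file changes nothing.
    for name in POLICY_FILES:
        if not (source / name).is_file():
            raise FileNotFoundError(f"missing replacement file: {source / name}")

    backup.mkdir(parents=True, exist_ok=True)
    backups = []
    for name in POLICY_FILES:
        current = security / name
        if current.is_file():
            target = backup / name
            shutil.copy2(current, target)  # keep the original for later restore
            backups.append(target)
        shutil.copy2(source / name, current)  # overwrite with the new file
    return backups
```

To restore the original files later, copy them back from the backup directory into the security directory.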

Playing back a test with a digital certificate

After you have created a digital certificate store and recorded a test using a digital certificate, you must associate a digital certificate with the test for playback.

You need to have recorded a test using a digital certificate, and you need a digital certificate store file containing one or more PKCS#12 certificates.

To associate a digital certificate with a test for playback:

- Open the test for editing.

- On the Common Options page, under Digital Certificates, click Add.

- Select or type the name of the certificate store file that you created previously. You must type or select the file name. You cannot browse to locate the file. The certificate store must be a Rational Certificate Store (RCS) file. A Rational Certificate Store file is a compressed archive file containing one or more PKCS#12 certificates.

- Select the digital certificate to use, and then click Select.

- When prompted to place the digital certificate in a datapool, click No.

If you add multiple certificates to the Digital Certificates list on the Common Options page, the first certificate that satisfies the request from the server (in the order by which the certificates were entered) will be used during playback.

- Save the test.

When you run this test, the digital certificate from the certificate store will be submitted to the server.
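The ordering rule for multiple certificates amounts to a first-match search over the list. The Python sketch below illustrates only that rule. The satisfies predicate is a hypothetical stand-in for whatever the server request requires, which this section does not spell out, and the issuer names in the example are invented.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def first_satisfying(certificates: Iterable[T],
                     satisfies: Callable[[T], bool]) -> Optional[T]:
    """Return the first certificate, in list order, accepted by satisfies."""
    for certificate in certificates:
        if satisfies(certificate):
            return certificate
    return None  # no certificate in the list matches the request


if __name__ == "__main__":
    # Hypothetical entries: (certificate name, issuing CA) in the order added.
    entries = [("alice.p12", "CA-One"), ("bob.p12", "CA-Two"), ("carol.p12", "CA-Two")]
    accepted_issuers = {"CA-Two"}  # illustrative server requirement
    print(first_satisfying(entries, lambda entry: entry[1] in accepted_issuers))
```

Because the search stops at the first match, the order in which certificates are added to the list decides which one is submitted when several would be acceptable.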

Entrust TruePass authentication overview

Entrust provides digital identity and encryption technologies to governments and private industry. With Entrust TruePass software, users can authenticate with secure web applications without installing a digital certificate in their browsers. This makes it convenient for use in kiosks and other public user environments.

You can now run tests against servers that require Entrust TruePass authentication. Roaming mode with TruePass applet version 7.0 and later is supported. Local mode, and versions of the TruePass applet prior to 7.0, are not supported. Recording tests with Entrust TruePass applications works just as regular HTTP recording does.

The Entrust TruePass Authentication object is displayed in the test editor for tests that you record with Entrust TruePass applications. The Version field displays the recorded version number of the Entrust TruePass applet. The Server Name and Port are correlated fields. Click Substitute to use the Data Sources view to change the server or port number for playback. The Application Context field displays where the Entrust application is mapped in the application server. The User Name and Passphrase fields can be substituted with values from a datapool.

Kerberos overview

You can run HTTP tests against servers that use the Kerberos protocol for authentication.

Introduction

Kerberos is a security authentication protocol that requires users and services to provide proof of identity.

Kerberos is supported only for HTTP tests on Rational Performance Tester.

Supported environments

Kerberos is supported on HTTP for web servers running Internet Information Server (IIS) or WebSphere with the Simple and Protected GSS-API Negotiation Mechanism (SPNEGO) trust association interceptor (TAI). Additionally, the Key Distribution Center (KDC) must be part of the Windows Domain Controller Active Directory. Internet Explorer, Mozilla Firefox, Opera, Apple Safari, and Google Chrome browsers are supported for recording tests. Kerberos is not supported on other protocols, environments, or browsers. For example, a KDC running on Linux is not supported.

Tips

For best results when you record tests that use Kerberos authentication, specify the host by name, not by numeric IP address. Also, note that user information is case-sensitive. Specify user information using the exact logon name from the user account in Active Directory. The User logon name field in the properties for the user in Active Directory displays the correct user name in the correct case. To the right of the user name the realm or domain name is displayed in the correct case. For example:

- User ID: kerberostester

- Password: secret

- Realm: ABC.IBM.COM

User logon names of the form ABC\kerberostester are not supported.
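If user IDs come from a datapool or another external source, a small check can catch unsupported forms before a test run. The Python sketch below rejects names that contain a backslash, such as ABC\kerberostester, and otherwise returns the name unchanged, because user information is case-sensitive. The function name and the extra whitespace check are assumptions added for illustration.

```python
def check_kerberos_logon_name(user_id: str) -> str:
    """Return the user ID unchanged if it is in a supported form.

    Raises ValueError for names of the form DOMAIN\\user. The case of the
    name is never altered, because user information is case-sensitive.
    """
    if "\\" in user_id:
        raise ValueError(
            f"logon names of the form DOMAIN\\user are not supported: {user_id!r}"
        )
    # Extra guard (an assumption): reject empty or whitespace-padded names.
    if not user_id or user_id != user_id.strip():
        raise ValueError("user ID must be non-empty with no surrounding whitespace")
    return user_id


if __name__ == "__main__":
    print(check_kerberos_logon_name("kerberostester"))
```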

Troubleshooting

Kerberos authentication is a complex process. If you encounter problems when you attempt to record and play back tests that use Kerberos authentication, change the problem determination log level to All and run the tests again with only one virtual user. To learn more about the problem determination log, see the help topic on changing the problem determination level. After running a test, the CommonBaseEvents00.log file on the agent computer contains information that can help you determine why Kerberos authentication failed.
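When the log is large, a quick filter can narrow it down to the relevant entries. The Python sketch below returns the lines of a log file that mention any of a set of keywords, ignoring case. The keyword list, the function name, and the assumption that the log can be read as plain text line by line are illustrative. The location of CommonBaseEvents00.log on the agent computer depends on your installation.

```python
from typing import Iterable, List


def kerberos_log_lines(log_path,
                       keywords: Iterable[str] = ("kerberos", "spnego")) -> List[str]:
    """Return lines from the log that mention any keyword (case-insensitive)."""
    wanted = [word.lower() for word in keywords]
    matches = []
    # errors="replace" keeps the scan going if the file has odd bytes.
    with open(log_path, encoding="utf-8", errors="replace") as log:
        for line in log:
            lowered = line.lower()
            if any(word in lowered for word in wanted):
                matches.append(line.rstrip("\n"))
    return matches


if __name__ == "__main__":
    import sys

    # Usage: python scan_log.py path/to/CommonBaseEvents00.log
    for entry in kerberos_log_lines(sys.argv[1]):
        print(entry)
```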

Terms

- Active Directory

- Active Directory is an implementation of Lightweight Directory Access Protocol directory services created by Microsoft for use primarily in Windows environments. The main purpose of Active Directory is to provide central authentication and authorization services for Windows computers. With Active Directory, administrators can assign policies, deploy software, and apply critical updates to an organization.

- Directory service

- A directory service is a software application or set of applications that store and organize information about the users and resources of a computer network.

- Generic Security Services Application Program Interface (GSS-API)

- The GSS-API enables programs to access security services. The GSS-API alone does not provide any security. Instead, security service providers provide GSS-API implementations, typically in the form of libraries that are installed with their security software. Sensitive application messages can be wrapped, or encrypted, by the GSS-API to provide secure communication between client and server. Typical protections that GSS-API wrapping provides include confidentiality (secrecy) and integrity (authenticity). The GSS-API can also provide local authentication about the identity of a remote user or remote host.

- Key Distribution Center (KDC)

- The authentication server in a Kerberos environment is called the Key Distribution Center.

- Lightweight Directory Access Protocol (LDAP)

- LDAP is an application protocol for querying and modifying directory services running over TCP/IP. An LDAP directory tree typically reflects political, geographic, or organizational boundaries. LDAP deployments typically use Domain Name System (DNS) names for structuring the highest levels of the hierarchy. LDAP entries can represent many different types of objects including people, organizational units, printers, documents, or groups of people.

- Simple and Protected GSS-API Negotiation Mechanism (SPNEGO)

- SPNEGO is used when a client application attempts to authenticate to a remote server, but the authentication protocols supported by the remote server are unknown. SPNEGO is a standard GSS-API pseudo-mechanism. The pseudo-mechanism uses a protocol to determine which common GSS-API mechanisms are available, and then selects one GSS-API mechanism to use for all future security operations.

- Trust Association Interceptor (TAI)