- Overview

- New for administrators

- Administrators: Deploying a preview guide to users

- Accessibility features for Connections

- Directory path conventions

- Deployment options

- Connections system requirements

- Connections support statements

- Worksheet for installing Connections

- Connections release notes

- Small deployment

- Medium deployment

- Large deployment

- Install

- New

- The installation process

- Pre-installation tasks

- Prepare to configure the LDAP directory

- Create the Cognos administrator account

- Install IBM WAS

- Access Windows network shares

- Set up federated repositories

- Create databases

- Create multiple database instances

- Register the DB2 product license key

- Create a dedicated DB2 user

- Configure the DB2 databases for unicode

- Create databases with the database wizard

- Create databases with SQL scripts

- Configure a FileNet database for SQL Server or Oracle

- Enable NO FILE SYSTEM CACHING for DB2 on System z

- Populate the Profiles database with LDAP data

- Configure Tivoli Directory Integrator (TDI)

- Add source data to the Profiles database

- Configure the Manager designation in user profiles

- Supplemental user data for Profiles

- Install Cognos Business Intelligence

- Install WAS for Cognos Business Intelligence

- Install the database client for Cognos Transformer

- Install required patches on the Cognos BI Server system

- Install Cognos Business Intelligence components

- Configure Cognos Business Intelligence after installation

- Federating the Cognos server to the Deployment Manager

- Validating the Cognos server installation

- Before installing Connections

- Install Connections

- Install as a non-root user

- Install Connections 4.5

- Install in console mode

- Install silently

- Modify the installation in interactive mode

- Modify the installation using response file

- Modify the installation in console mode

- Post-installation tasks

- Mandatory post-installation tasks

- Review the JVM heap size

- Configure IBM HTTP Server

- Configure the Home page administrator

- Enable Search dictionaries

- Copying Search conversion tools to local nodes

- Create the initial Search index

- Configure file downloads through the HTTP Server

- Configure Cognos Business Intelligence

- Configure Connections Content Manager for Libraries

- Configure Connections Content Manager with a new FileNet deployment

- Configure Connections Content Manager with an existing FileNet deployment

- Confirm changes made by post-installation tasks

- Mandatory post-installation tasks

- Uninstalling Connections

- Remove applications

- Uninstalling a deployment

- Uninstalling in console mode

- Uninstalling using response file

- Uninstalling: delete databases with the database wizard

- Uninstalling databases using response file

- Uninstalling: Manually drop databases

- Reverting Common, Connections-proxy, and WidgetContainer Applications for Uninstallation

- Uninstalling Cognos Business Intelligence server

- Updating and migrating

- Prepare Connections for maintenance

- Back up Connections

- Saving your customizations

- Prepare to migrate the media gallery

- Migrating Cognos Business Intelligence

- Quickr migration tools for migrating places to Connections

- Migrating to Connections 4.5

- Rolling back a migration

- Update Connections 4.5

Overview

| Application | Description |

|---|---|

| Activities | Share work related to a project goal. |

| Blogs | Online journals. |

| Bookmarks | Social bookmarking tool. Previously known as Dogear. |

| Communities | Members participate in activities and forums, and share blogs, bookmarks, feeds, and files. |

| Files | Common repository for uploaded files. |

| Forums | Brainstorm and collect feedback. |

| Home page | Central location for latest updates. |

| Libraries | Work with drafts, reviews, and publishing in Communities. |

| Profiles | Directory of the people in your organization. |

| Metrics | Statistics on how people use Connections applications. |

| Wikis | Share and coauthor information. |

New for administrators

- Install and configure

- Administration

Connections:

- Lock the default frequency with which email digests from Connections applications are sent to users by configuring the frequencyLocked property in notification-config.xml.

Activities:

- The EventLogPurgeJob task deletes old entries from the Activities events log. This task helps maintain performance and keeps the log from becoming too large. By default, it runs daily at 2 AM. You can specify the time that the log is purged and set the properties that define which entries can be deleted.

Communities:

- You can increase the maximum number of communities displayed in Communities user views by adding a configuration setting to LotusConnections-config.xml.

- If the Communities catalog index becomes corrupted or is not being refreshed properly, you can restore the index by deleting the existing index data and waiting for the next scheduled crawl.

- By adding search to the list of modes in the Linked Library widget definition, you can enable search for content in linked libraries.

Wikis:

- The Wikis administrator can delete draft wiki pages created by other users. This capability is useful after a user leaves the organization.

- Create your own message for users by customizing the Wikis welcome page.

Files:

- You can now use a Files administrative command to obtain the ID of a community library from the community's UUID.

The format of the command is:

- FilesLibraryService.getByExternalContainerId(string community_id)
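As a sketch of how this command might be scripted, the following Python helper checks that a community ID looks like a UUID and formats the command text to paste into a wsadmin session in which the Files administrative commands are loaded. The helper name files_library_lookup_command is hypothetical, and the assumption that community IDs use the canonical 36-character UUID form is not stated in this document.

```python
import uuid


def files_library_lookup_command(community_id: str) -> str:
    """Format the Files command that looks up a community library ID.

    Hypothetical helper; assumes community IDs are canonical UUID strings.
    """
    cid = community_id.strip()
    # uuid.UUID raises ValueError for malformed hex; the comparison also
    # rejects non-canonical forms (braces, missing or misplaced hyphens).
    if len(cid) != 36 or str(uuid.UUID(cid)) != cid.lower():
        raise ValueError(f"not a canonical UUID: {community_id!r}")
    return f'FilesLibraryService.getByExternalContainerId("{cid}")'
```

The helper only builds text; the library ID itself is returned by the Files command when you run that text in wsadmin.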

Profiles:

- You can now exclude nicknames when adding or updating user profiles by specifying a new name.expansion property in the tdi-profiles-config.xml file.

- You can use the new mapToNameTable property in the profiles-types.xml file to specify an additional givenname or surname value for use with Profiles directory search.

- You can integrate the Profiles business card with your web application by mapping an LDAP distinguished name with the DN parameter, in addition to the previously available user ID and email mapping options.

- The hashEmail extended attribute can be added to the map_dbrepos_from_source.properties file or to the profileExtension table in the tdi-profiles-config.xml file to support Profiles users who use the Microsoft Outlook Social Connector.

See Use the Connections Desktop Plug-ins for Microsoft Windows.

News:

- Use the NewsActivityStreamService.updateApplicationRegistrationForEmailDigest command to update a registered third-party application to enable it for email digest functionality.

- Use the NewsEmailDigestService.refreshDefaultEmailPrefsFromConfig() command to apply changes to the default email preferences specified in notification-config.xml without restarting the server.

Search:

- Use the new, optional parameter for the SearchService.startBackgroundIndex command to run social analytics indexing jobs at the end of the background indexing operation.

- You can configure post-filtering for the ECM service by enabling or disabling a property in the Search configuration file. Post-filtering is enabled by default.

- Use the SearchService.startBackgroundSandIndex command to create a background index for the social analytics service. Use this command to run background indexing for social analytics without having to run a full index crawl.

- By adding search to the list of modes in the Linked Library widget definition, you can enable search for content in linked libraries using FileNet P8 5.2.

- Application Development

The Connections API documentation is now available in the IBM Social Business Development wiki.

- Troubleshooting and Support

Administrators: Deploying a preview guide to users

The Connections preview guide provides the following information:

- Overview of new applications

- Important changes from the previous release

- Familiar applications that remain the same

- Links to product tours, reference cards, and product documentation

- A few key productivity tips

You can download the preview guide from the Connections wiki at http://www-10.lotus.com/ldd/lcwiki.nsf. There are two files available to you:

- An Adobe PDF file, ready for emailing, printing, or distributing to your organization.

- An IBM Symphony ODT file that can be customized for your organization; for example, you can add contact information for your Help Desk. This file includes instructions in blue text for customizing information. Remember to remove these instructions before rolling out the file to your organization.

Accessibility features

Connections includes the following major accessibility features:

- Keyboard-only operation

- Interfaces that are commonly used by screen readers

- Keys that are discernible by touch but do not activate just by touching them

- Industry-standard devices for ports and connectors

- The attachment of alternative input and output devices

- Screen magnification

Accessibility is optimized when using a Microsoft Windows 7 64-bit client, Microsoft Windows Server 2003 or later, Firefox 17 ESR, and JAWS 12 or later.

Interface information

To display a person's business card, hover over the person's name and press Ctrl+Enter. Press Tab to move focus to the first element in the business card.

For JAWS users: To activate buttons in the user interface, press the Enter key, even when JAWS instructs you to use the Spacebar.

If the application user interface contains a View Demo link, you can download the target video in accessible MP4 format by clicking View Demo and then clicking Download the video in accessible format.

For administrators installing the product

For optimal accessibility when installing Connections, follow the instructions to install the product in console mode.

Related accessibility information

The accessible version of this product documentation is available on the Connections wiki.

- The accessible version of the documentation is provided in HTML format so that users can take full advantage of screen-reader software.

- Images in the accessible version of the documentation have alternative text so that users with vision impairments can understand their contents.

IBM and accessibility

See the IBM Human Ability and Accessibility Center for more information about the commitment that IBM has to accessibility.

Directory path conventions

| Directory variable | Description | Default installation root |

|---|---|---|

| app_server_root | IBM WAS installation directory | AIX: /usr/IBM/WebSphere/AppServer; Linux: /opt/IBM/WebSphere/AppServer; Windows: drive:\\IBM\WebSphere\AppServer |

| profile_root | WAS profile directory (DMGR_PROFILE) | AIX: /usr/IBM/WebSphere/AppServer/profiles/profile_name; Linux: /opt/IBM/WebSphere/AppServer/profiles/profile_name; Windows: drive:\\IBM\WebSphere\AppServer\profiles\profile_name |

| ibm_http_server_root | IBM HTTP Server installation directory | AIX: /usr/IBM/HTTPServer; Linux: /opt/IBM/HTTPServer; Windows: drive:\\IBM\HTTPServer |

| connections_root | Connections installation directory | AIX or Linux: /opt/IBM/Connections; Windows: drive:\\IBM\Connections |

| local_data_directory_root | Local content stores | AIX or Linux: /opt/IBM/Connections/data/local; Windows: drive:\\IBM\Connections\data\local |

| shared_data_directory_root | Shared content stores | AIX or Linux: /opt/IBM/Connections/data/shared; Windows: drive:\\IBM\Connections\data\shared |

| IM_root | Installation Manager installation directory | AIX: /opt/IBM/InstallationManager; Linux: /opt/IBM/InstallationManager; Windows: drive:\\IBM\Installation Manager |

| shared_resources_root | Shared resources directory | AIX or Linux: /opt/IBM/IMShared; Windows: drive:\(x86)\IBM\IMShared |

| db2_root | DB2 database installation directory | AIX: /usr/IBM/db2/version; Linux: /opt/ibm/db2/version; Windows: drive:\\IBM\SQLLIB\version |

| oracle_root | Oracle database installation directory | AIX or Linux: /home/oracle/oracle/product/version/db_1; Windows: drive:\oracle\product\version\db_1 |

| sql_server_root | SQL Server installation directory | Windows: drive:\\Microsoft_SQL_Server, where drive is C or D |

| Cognos_BI_install_path | IBM Cognos BI Server installation directory | AIX or Linux: /opt/IBM/CognosBI; Windows: drive:\\IBM\Cognos |

| Cognos_Transformer_install_path | Cognos Transformer installation directory | AIX or Linux: /opt/IBM/CognosTF; Windows: drive:\\IBM\Cognos. You can specify the installation directory in the cognos-setup.properties file during installation. |
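When you script against these conventions, it can help to resolve a directory variable to its default root. The following Python sketch covers only a few of the AIX and Linux defaults from the table; the DEFAULT_ROOTS dictionary, the resolve function, and the Dmgr01 profile name in the example are hypothetical, and you should substitute the paths actually used in your installation.

```python
# A subset of the AIX and Linux defaults from the directory path table.
# Windows defaults are deliberately left out of this sketch.
DEFAULT_ROOTS = {
    "app_server_root": {"aix": "/usr/IBM/WebSphere/AppServer",
                        "linux": "/opt/IBM/WebSphere/AppServer"},
    "ibm_http_server_root": {"aix": "/usr/IBM/HTTPServer",
                             "linux": "/opt/IBM/HTTPServer"},
    "connections_root": {"aix": "/opt/IBM/Connections",
                         "linux": "/opt/IBM/Connections"},
    "shared_data_directory_root": {"aix": "/opt/IBM/Connections/data/shared",
                                   "linux": "/opt/IBM/Connections/data/shared"},
    "IM_root": {"aix": "/opt/IBM/InstallationManager",
                "linux": "/opt/IBM/InstallationManager"},
}


def resolve(path: str, platform: str) -> str:
    """Replace the leading directory variable in `path` with its default root.

    Raises KeyError if the variable or platform is not in DEFAULT_ROOTS.
    """
    variable, sep, rest = path.partition("/")
    return DEFAULT_ROOTS[variable][platform] + sep + rest
```

For example, resolve("app_server_root/profiles/Dmgr01", "aix") returns "/usr/IBM/WebSphere/AppServer/profiles/Dmgr01".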

Deployment options

A network deployment can consist of a single server that hosts all Connections applications or of two or more sets of clustered servers that share the workload. In either case, you must configure an additional system to host the Deployment Manager of WAS Network Deployment.

IBM Cognos Business Intelligence is an optional component in the deployment. If used, Cognos must be federated to the same Deployment Manager as the Connections servers. However, Cognos servers cannot be configured within a Connections cluster.

A network deployment gives the administrator a central management facility and ensures that users have constant access to data. It balances the workload between servers, improves server performance, and helps maintain performance as the number of users increases. The added reliability comes at a cost: it requires more systems and experienced administrative personnel to manage them.

When you are installing Connections, you have three deployment options:

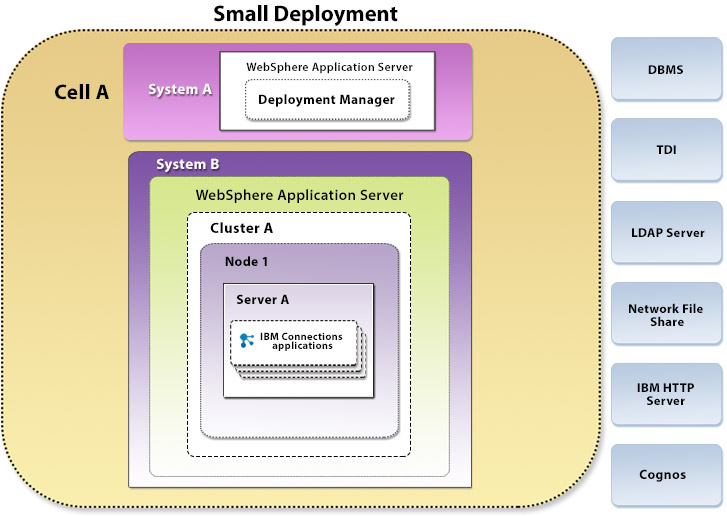

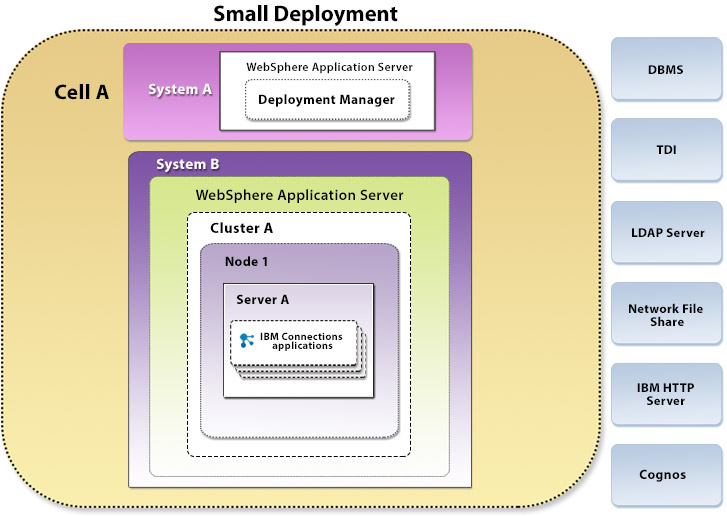

- Small deployment

- Install all Connections applications on a single node in a single cluster. This option is the simplest deployment but has limited flexibility and does not allow individual applications to be scaled up. All the applications run within a single JVM.

The diagram depicts a topology with up to 8 servers. If you install the servers on shared systems, you do not need to deploy 8 separate systems.

Figure 1. Small deployment

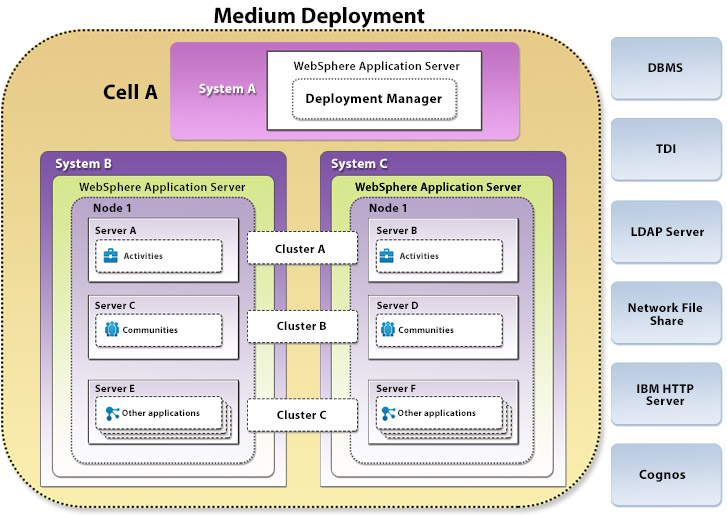

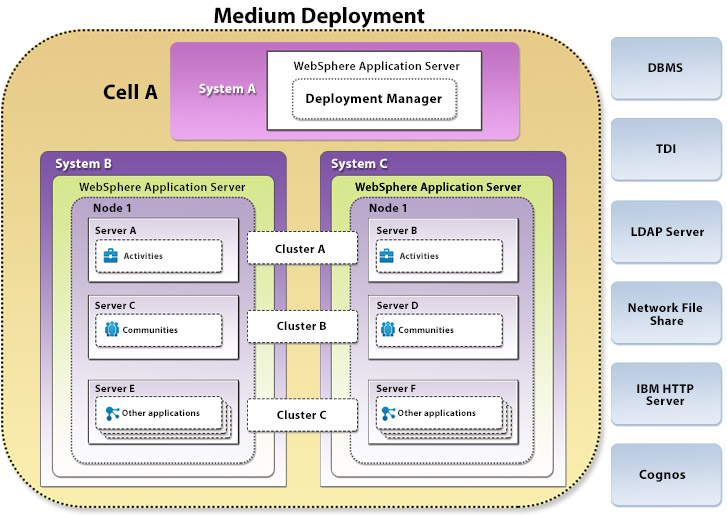

- Medium deployment

- Install a subset of applications in separate clusters. Connections provides three predefined cluster names shared among all of its applications. Use this option to distribute applications according to your usage expectations. For instance, you might anticipate higher loads for the Profiles application and install it in its own cluster, while other applications could be installed in a different cluster. This option allows you to maximize the use of available hardware and system resources to suit your needs.

Figure 2. Medium deployment

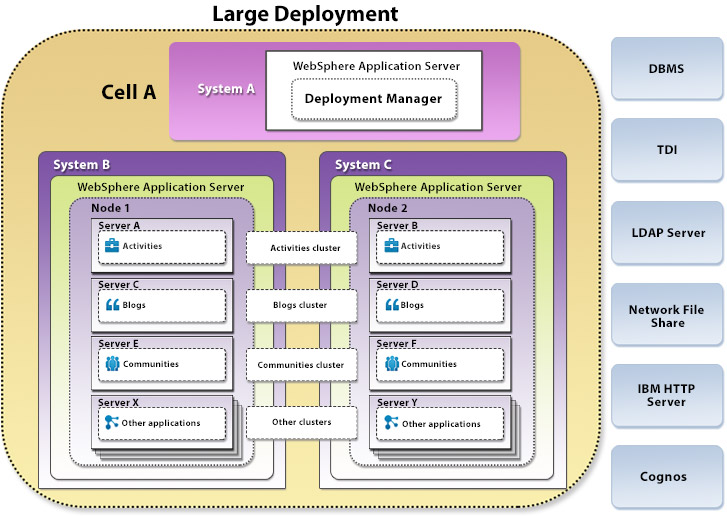

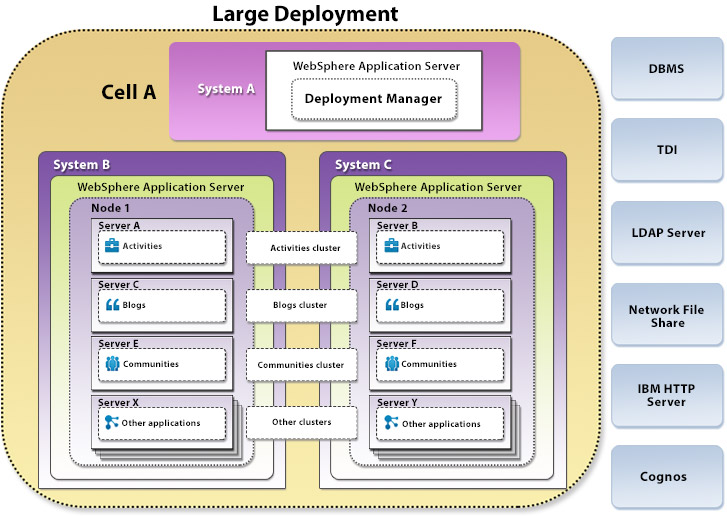

- Large deployment

- Install each application in its own cluster. Connections provides a predefined cluster name for each application. This option provides the best scalability and availability but also requires more system resources. In most cases, you should install the News and Home page applications in the same cluster.

Figure 3. Large deployment

In a multi-node cluster, configure network share directories as shared content stores. When using NFS, use NFS v4 because NFS v3 lacks advanced locking capability. When using Microsoft SMB Protocol for file-sharing, use the UNC file-naming convention; for example: \\machine-name\share-name.
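The two rules for shared content stores can be expressed as a quick pre-flight check. The following Python sketch is an illustration only: the function names are hypothetical, and the set of characters rejected in machine and share names approximates Windows naming rules rather than anything this document specifies.

```python
import re

# One name segment: no path separators or characters Windows rejects in
# names (an approximation, not a rule from this document).
_SEGMENT = r'[^\\/:*?"<>|]+'
_UNC = re.compile(r"^\\\\" + _SEGMENT + r"\\" + _SEGMENT
                  + r"(?:\\" + _SEGMENT + r")*\\?$")


def is_unc_path(path: str) -> bool:
    r"""True for \\machine-name\share-name, optionally with subfolders."""
    return _UNC.match(path) is not None


def shared_store_problems(path: str, protocol: str, nfs_version=None) -> list:
    """Check a shared content store against the NFS and SMB rules above."""
    problems = []
    if protocol == "nfs" and (nfs_version is None or nfs_version < 4):
        problems.append("use NFS v4; NFS v3 lacks advanced locking")
    if protocol == "smb" and not is_unc_path(path):
        problems.append(r"use a UNC path such as \\machine-name\share-name")
    return problems
```

A mapped drive letter such as Z:\connections fails the SMB check because it is not in UNC form.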

You can assign various combinations of applications to clusters in many different ways, depending on your usage and expectations.

The number of JVMs required for each cluster depends on the user population and workload. For failover, you must have at least two JVMs per application, that is, two nodes for each cluster, scaled horizontally. Horizontal scaling refers to running multiple JVMs for an application, with each JVM on a separate WAS instance. Vertical scaling refers to running multiple JVMs for the same application on a single WAS instance. Vertical scaling is not officially supported in Connections; in any case, it is typically not needed unless your server has several CPUs.
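The following Python sketch checks a proposed cluster layout against the guidance in this section: at least two distinct nodes per cluster for failover, and News and Home page in the same cluster. It also flags an application assigned to more than one cluster, which is an assumption about how you record the layout rather than a rule stated here. The data shape, the function name, and the cluster and node names are hypothetical.

```python
from collections import Counter


def topology_findings(clusters):
    """Return findings for a proposed layout.

    `clusters` maps a cluster name to {"apps": [...], "nodes": [...]}.
    """
    findings = []

    # Assumption: each application is deployed to exactly one cluster.
    placements = Counter(app for c in clusters.values() for app in c["apps"])
    for app, count in sorted(placements.items()):
        if count > 1:
            findings.append(f"{app} is assigned to {count} clusters")

    # Failover needs at least two JVMs per application on separate nodes.
    for name, c in sorted(clusters.items()):
        if len(set(c["nodes"])) < 2:
            findings.append(f"{name}: fewer than two nodes, so no failover")

    # News and Home page should normally share a cluster.
    news = {n for n, c in clusters.items() if "News" in c["apps"]}
    home = {n for n, c in clusters.items() if "Home page" in c["apps"]}
    if news and home and news != home:
        findings.append("News and Home page are in different clusters")

    return findings
```

An empty list means the layout passes these checks; it does not size the JVMs, which still depends on your user population and workload.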

For performance and security reasons, consider using a proxy server in the deployment.

IBM Cognos Business Intelligence does not have to be deployed before you install the Metrics application. Even if you do not plan to deploy Cognos now, install the Metrics application so that events are recorded in the Metrics database and are available for reports when Cognos is deployed.

For added security when you plan to run third-party OpenSocial gadgets, such as those from iGoogle, configure locked domains. Locked domains isolate these gadgets so that they cannot access your intranet or SSO information. The basic configuration of locked domains is as follows:

- A second top-level domain that is not in your SSO domain. For example, if your organization's SSO domain is example.com, you need a distinct top-level domain, such as example-modules.com.

- A wildcard SSL certificate for this domain name.

No additional server instances are required for the basic configuration.
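The locked-domain requirements can be checked with simple string tests. This Python sketch assumes that gadgets are served from individual host names under the locked domain, which is why a wildcard certificate is needed; the function names are hypothetical, and the single-label wildcard rule follows common TLS host name matching practice rather than anything specific to Connections.

```python
def is_outside_sso_domain(candidate: str, sso_domain: str) -> bool:
    """True if `candidate` is neither the SSO domain nor a subdomain of it."""
    c = candidate.lower().rstrip(".")
    s = sso_domain.lower().rstrip(".")
    return c != s and not c.endswith("." + s)


def wildcard_covers(cert_name: str, host: str) -> bool:
    """True if a name such as *.example-modules.com covers `host`.

    The wildcard matches exactly one leftmost label.
    """
    cert_name, host = cert_name.lower(), host.lower()
    if not cert_name.startswith("*."):
        return cert_name == host
    suffix = cert_name[1:]  # e.g. ".example-modules.com"
    label = host[: -len(suffix)] if host.endswith(suffix) else ""
    return bool(label) and "." not in label
```

For example, is_outside_sso_domain("example-modules.com", "example.com") returns True, while modules.example.com is rejected because it still falls inside the SSO domain.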

Connections system requirements

See the Detailed system requirements for Connections web page.

If you deploy Cognos Transformer on a non-Windows system and connect to data located in a Microsoft SQL Server database, install the Progress DataDirect Connect for ODBC driver. It is the only driver that IBM Cognos supports for this configuration. The driver is not free; to avoid the cost of the licensed driver, install Transformer on a Windows system. You have two options: install both Cognos Business Intelligence and Transformer on Windows, or keep Cognos Business Intelligence on a non-Windows system and install Transformer on Windows. Refer to this technote for more information.

Worksheet for installing Connections

While installing and configuring Connections, it can be difficult to remember all the user IDs, passwords, server names, and other information that you need during and after installation. Print out and use this worksheet to record that data.

LDAP server details

| LDAP data type | Example | Details |

|---|---|---|

| LDAP server type and version | IBM Domino 8.5 | |

| Primary host name | domino_ldap.example.com | |

| Port | 389 | |

| Bind distinguished name | cn=lcadmin,ou=People,dc=example,dc=com | |

| Bind password | | |

| Certificate mapping | | |

| Certificate filter | | |

| Login attribute | mail or uid | |

WAS details

| WAS item | Details |

|---|---|

| WAS version 8.0.0.5 or higher | |

| Installation location For example: C:\IBM\WebSphere\AppServer | |

| Update installer location For example: C:\IBM\WebSphere\UpdateInstaller | |

| Administrator ID For example: wsadmin | |

| Administrator password | |

| WAS URL | |

| WAS secure URL | |

| WAS host name | |

| HTTP transport port | |

| HTTPS transport port | |

| SOAP connector port | |

| Run application server as a service? (True/False) | |

Database details

| Database item | Details |

|---|---|

| Database type and version For example: Oracle Database 10g Enterprise Edition Release 2 10.2.0.4 | |

| Database instance or service name | |

| Database server host name For example: database.example.com | |

| Port. The default values are: DB2=50000; Oracle=1521; Microsoft SQL Server=1433. | |

| JDBC driver fully qualified file path For example: C:\IBM\SQLLIB | |

| Database client name and version For example: MS SQL Server Management Studio Express v9.0.2 | |

| Database client user ID. The default is db2admin. | |

| Database client user password | |

| DB2 administrators group (Windows only). The default is DB2ADMNS. | |

| DB2 users group (Windows only) The default is DB2USERS. | |

| Activities database server host name | |

| Activities database server port number | |

| Activities database name. Default: OPNACT. | |

| Activities database application user ID | |

| Activities database application user password | |

| Blogs database server host name | |

| Blogs database server port number | |

| Blogs database name. Default: BLOGS. | |

| Blogs database application user ID | |

| Blogs database application user password | |

| Cognos database server host name | |

| Cognos database server port number | |

| Cognos database name. Default: COGNOS. | |

| Cognos database application user ID | |

| Cognos database application user password | |

| Communities database server host name | |

| Communities database server port number | |

| Communities database name. Default: SNCOMM. | |

| Communities database application user ID | |

| Communities database application user password | |

| Dogear database server host name | |

| Dogear database server port number | |

| Dogear database name. Default: DOGEAR. | |

| Dogear database application user ID | |

| Dogear database application user password | |

| Files database server host name | |

| Files database server port number | |

| Files database name. Default: FILES. | |

| Files database application user ID | |

| Files database application user password | |

| Forums database server host name | |

| Forums database server port number | |

| Forums database name. Default: FORUM. | |

| Forums database application user ID | |

| Forums database application user password | |

| Home page database server host name | |

| Home page database server port number | |

| Home page database name. Default: HOMEPAGE. | |

| Home page database application user ID | |

| Home page database application user password | |

| Metrics database server host name | |

| Metrics database server port number | |

| Metrics database name. Default: METRICS. | |

| Metrics database application user ID | |

| Metrics database application user password | |

| Mobile database server host name | |

| Mobile database server port number | |

| Mobile database name. Default: MOBILE. | |

| Mobile database application user ID | |

| Mobile database application user password | |

| Profiles database server host name | |

| Profiles database server port number | |

| Profiles database name. Default: PEOPLEDB. | |

| Profiles database application user ID | |

| Profiles database application user password | |

| Wikis database server host name | |

| Wikis database server port number | |

| Wikis database name. Default: WIKIS. | |

| Wikis database application user ID | |

| Wikis database application user password | |
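If you keep the worksheet values in a script, you can derive a JDBC URL from each database's host, port, and name. The following Python sketch uses the default database names from the table above and the standard URL forms for the IBM DB2 and Microsoft SQL Server JDBC drivers; Oracle is left out to keep it short, the function names are hypothetical, and the host names in the example are placeholders.

```python
# Default database names from the worksheet (Dogear is the Bookmarks database).
DEFAULT_DB_NAMES = {
    "Activities": "OPNACT", "Blogs": "BLOGS", "Cognos": "COGNOS",
    "Communities": "SNCOMM", "Dogear": "DOGEAR", "Files": "FILES",
    "Forums": "FORUM", "Home page": "HOMEPAGE", "Metrics": "METRICS",
    "Mobile": "MOBILE", "Profiles": "PEOPLEDB", "Wikis": "WIKIS",
}


def jdbc_url(db_type: str, host: str, port: int, database: str) -> str:
    """Build a JDBC URL for DB2 or Microsoft SQL Server."""
    if db_type == "db2":
        return f"jdbc:db2://{host}:{port}/{database}"
    if db_type == "sqlserver":
        return f"jdbc:sqlserver://{host}:{port};databaseName={database}"
    raise ValueError(f"unsupported database type in this sketch: {db_type}")


def worksheet_urls(db_type: str, host: str, port: int) -> dict:
    """JDBC URLs for every application, assuming one shared database server."""
    return {app: jdbc_url(db_type, host, port, name)
            for app, name in DEFAULT_DB_NAMES.items()}
```

For example, worksheet_urls("db2", "database.example.com", 50000)["Profiles"] returns "jdbc:db2://database.example.com:50000/PEOPLEDB". If your databases are spread across several servers, call jdbc_url per application with the host and port recorded for it.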

Tivoli Directory Integrator (TDI) details

| TDI item | Details |

|---|---|

| TDI installation location For example: C:\IBM\TDI\ | |

| TDI version. For example: 7.1 fix pack 2 or higher | |

| Solutions Directory path For example: C:\IBM\TDISOL\TDI | |

LDAP-Profiles mapping details

This table is derived from the map_dbrepos_from_source.properties file.

| Profiles database attribute | LDAP attribute (example) | Profiles database column |

|---|---|---|

| alternateLastname | null | PROF_ALTERNATE_LAST_NAME |

| bldgId | null | PROF_BUILDING_IDENTIFIER |

| blogUrl | null | PROF_BLOG_URL |

| calendarUrl | null | PROF_CALENDAR_URL |

| countryCode | c | PROF_ISO_COUNTRY_CODE |

| courtesyTitle | null | PROF_COURTESY_TITLE |

| deptNumber | null | PROF_DEPARTMENT_NUMBER |

| description | description | PROF_DESCRIPTION |

| displayName | cn | PROF_DISPLAY_NAME |

| distinguishedName | $dn | PROF_SOURCE_UID |

| | | PROF_MAIL |

| employeeNumber | employeenumber | PROF_EMPLOYEE_NUMBER |

| employeeTypeCode | employeetype | PROF_EMPLOYEE_TYPE |

| experience | null | PROF_EXPERIENCE |

| faxNumber | facsimiletelephonenumber | PROF_FAX_TELEPHONE_NUMBER |

| floor | null | PROF_FLOOR |

| freeBusyUrl | null | PROF_FREEBUSY_URL |

| givenName | givenName | PROF_GIVEN_NAME |

| givenNames | givenName | |

| groupwareEmail | null | PROF_GROUPWARE_EMAIL |

| guid | JavaScript function: {func_map_from_GUID} | PROF_GUID |

| ipTelephoneNumber | null | PROF_IP_TELEPHONE_NUMBER |

| isManager | null | PROF_IS_MANAGER |

| jobResp | null | PROF_JOBRESPONSIBILITIES |

| loginId | employeenumber | PROF_LOGIN and PROF_LOGIN_LOWER |

| logins | | PROF_LOGIN |

| managerUid | $manager_uid (a lookup of the manager's UID, using the DN in the manager field) | PROF_MANAGER_UID |

| mobileNumber | mobile | PROF_MOBILE |

| nativeFirstName | null | PROF_NATIVE_FIRST_NAME |

| nativeLastName | null | PROF_NATIVE_LAST_NAME |

| officeName | physicaldeliveryofficename | PROF_PHYSICAL_DELIVERY_OFFICE |

| orgId | ou | PROF_ORGANIZATION_IDENTIFIER |

| pagerId | null | PROF_PAGER_ID |

| pagerNumber | null | PROF_PAGER |

| pagerServiceProvider | null | PROF_PAGER_SERVICE_PROVIDER |

| pagerType | null | PROF_PAGER_TYPE |

| preferredFirstName | null | PROF_PREFERRED_FIRST_NAME |

| preferredLanguage | preferredlanguage | PROF_PREFERRED_LANGUAGE |

| preferredLastName | null | PROF_PREFERRED_LAST_NAME |

| profileType | null | PROF_TYPE |

| secretaryUid | $secretaryUid (a lookup of the secretary's UID, using the DN in the secretary field) | PROF_SECRETARY_UID |

| shift | null | PROF_SHIFT |

| surname | sn | PROF_SURNAME |

| surnames | sn | PROF_SURNAME |

| telephoneNumber | telephonenumber | PROF_TELEPHONE_NUMBER |

| timezone | null | PROF_TIMEZONE |

| title | null | PROF_TITLE |

| uid | JavaScript function: {func_map_to_db_UID} | PROF_UID |

| workLocationCode | postallocation | PROF_WORK_LOCATION |
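If you want to inspect the mapping programmatically, a rough parser is enough for simple lines. This Python sketch assumes that map_dbrepos_from_source.properties uses one attribute=value pair per line, with null for unmapped attributes, $-prefixed lookups, and {func_...} function references as shown in the table; it does not handle the full Java properties syntax (escapes, line continuations), and the function names are hypothetical.

```python
def parse_mapping(text: str) -> dict:
    """Parse simple attribute=value lines; the value `null` becomes None."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue  # blank line or comment
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an attribute=value line
        value = value.strip()
        mapping[key.strip()] = None if value == "null" else value
    return mapping


def direct_ldap_mappings(mapping: dict) -> dict:
    """Keep only attributes copied straight from an LDAP attribute."""
    return {k: v for k, v in mapping.items()
            if v is not None and not v.startswith(("$", "{"))}
```

For example, parsing the two lines displayName=cn and title=null yields {'displayName': 'cn', 'title': None}.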

Connections details

| Connections item | Details |

|---|---|

| Connections installation location. For example: C:\IBM\Connections | |

| Response file directory path. For example: C:\IBM\Connections\InstallResponse.txt | |

| DNS host name. For example: connections.example.com | |

| Choose: DNS MX Records or Java Mail Session? | |

| DNS MX Records only: Local mail domain. For example: example.com | |

| Java Mail Session only: DNS server name or SMTP relay host. For example: dns.example.com; relayhost.example.com | |

| Domain name for Reply-to email address | |

| Suffix or prefix for Reply-to email address | |

| Server that receives Reply-to emails | |

| User name and password for that server | |

| URL and ports for admin and user access. You can look up the URLs for each application in the text files that the installation wizard generates. These files are located under the connections_root directory. | |

| Activities server name | |

| Activities cluster member name | |

| Activities URL | |

| Activities secure URL | |

| Activities statistics files directory path | |

| Activities content files directory path | |

| Blogs server name | |

| Blogs cluster member name | |

| Blogs URL. For example: http://www.example.com:9080/blogs | |

| Blogs secure URL | |

| Blogs upload files directory path | |

| Bookmarks server name | |

| Bookmarks cluster member name | |

| Bookmarks URL | |

| Bookmarks secure URL | |

| Bookmarks favicon files directory path | |

| Communities server name | |

| Communities cluster member name | |

| Communities URL | |

| Communities secure URL | |

| Communities statistics files directory path | |

| Communities discussion forum content directory path | |

| Files server name | |

| Files cluster member name | |

| Files URL. For example: http://www.example.com:9080/files | |

| Files secure URL | |

| Files content store directory path | |

| Forums server name | |

| Forums cluster member name | |

| Forums URL | |

| Forums secure URL | |

| Forums content store directory path | |

| Home page server name | |

| Home page cluster member name | |

| Home page URL | |

| Home page secure URL | |

| Home page content store directory path | |

| Metrics server name | |

| Metrics cluster member name | |

| Moderation server name | |

| Moderation cluster member name | |

| Moderation URL | |

| Moderation secure URL | |

| Profiles server name | |

| Profiles cluster member name | |

| Profiles URL | |

| Profiles secure URL | |

| Profiles statistics files directory path | |

| Profiles cache directory path | |

| Search server name | |

| Search cluster member name | |

| Search dictionary directory path | |

| Search index directory path | |

| Wikis server name | |

| Wikis cluster member name | |

| Wikis URL. For example: http://www.example.com:9080/wikis | |

| Wikis secure URL | |

| Wikis content directory path | |
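The application URLs follow the host:port/context-root pattern shown in the Blogs, Files, and Wikis examples. This Python sketch generates both URLs for one application; port 9080 comes from those examples, while 9443 as the secure port is an assumption (a common WAS default), so pass the ports from your own environment. The function name app_urls is hypothetical.

```python
from urllib.parse import urlunsplit


def app_urls(host: str, context_root: str,
             http_port: int = 9080, https_port: int = 9443) -> tuple:
    """Return (URL, secure URL) for one Connections application."""
    path = "/" + context_root.strip("/")
    return (urlunsplit(("http", f"{host}:{http_port}", path, "", "")),
            urlunsplit(("https", f"{host}:{https_port}", path, "", "")))
```

For example, app_urls("www.example.com", "blogs") returns ("http://www.example.com:9080/blogs", "https://www.example.com:9443/blogs").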

IBM HTTP Server

| IBM HTTP Server item | Details |

|---|---|

| IBM HTTP Server installation location For example: C:\IBM\HTTPServer\ | |

| IBM HTTP Server version For example: V7.0 fix pack 21. | |

| IBM HTTP Server httpd.conf file directory path For example: C:\IBM\HTTPServer\conf\ | |

| web server definition name For example: webserver1 | |

| web server plugin-cfg.xml file directory path For example: C:\IBM\HTTPServer\Plugins\config\webserver1\ | |

| IBM HTTP Server host name | |

| IBM HTTP Server fully qualified host name | |

| IBM HTTP Server IP address | |

| IBM HTTP Server communication port For example: 80 | |

| IBM HTTP Server administration port For example: 8008 | |

| Run IBM HTTP Server as a service? (Y/N) | |

| Run IBM HTTP administration as a service? (Y/N) | |

| IBM HTTP Server administrator ID | |

| IBM HTTP Server administrator password | |

Cognos BI Server and Transformer

| Cognos BI Server and Transformer item | Details |

|---|---|

| Cognos BI Server and Transformer settings | Refer to the cognos-setup.properties file. |

| Installation location for BI Server | |

| Installation location for Transformer | |

| Administrator ID | |

| Administrator password | |

Connections Content Manager

| Connections Content Manager item | Details |

|---|---|

| connectionsAdmin alias | For multiple cells, must be defined with the same user name and password in each cell. |

| filenetAdmin alias | Stores object store administrator credentials used to access the FileNet Collaboration Services URL. |

| FileNet Collaboration Services URL (HTTP) | http://fncs_server:port/dm |

| FileNet Collaboration Services URL (HTTPS) | https://fncs_server:port/dm |

| FileNet Collaboration Services anonymous role | |

| Global configuration data (GCD) admins | |

| Object store admins | |

| FileNet P8 domain name | ICDomain |

| Object store name | ICObjectStore |

Libraries

| Connections Content Manager item | Details |

|---|---|

| Libraries server name | |

| Libraries cluster member name | |

| Libraries FileNet object store database server host name | |

| Libraries FileNet object store database server port number | |

| Libraries FileNet object store database name | Default: FNOS |

| Libraries FileNet object store database application user ID | |

| Libraries FileNet object store database application user password | |

| Libraries FileNet global configuration database server host name | |

| Libraries FileNet global configuration database server port number | |

| Libraries FileNet global configuration database name | Default: FNGCD |

| Libraries FileNet global configuration database application user ID | |

| Libraries FileNet global configuration database application user password | |

Connections release notes

The release notes for Connections 4.5 explain compatibility, installation, and other getting-started issues.


Description

Connections 4 introduces metrics for Communities. Metrics data is available for the entire product as well as for individual communities. Metrics employs the analytic capabilities of the IBM Cognos Business Intelligence server, which is provided as part of the Connections installation to support the collection of metrics data.

Announcement

The Connections 4.5 announcement notification describes the following information. Additional overview information is available at http://www-03.ibm.com/software/products/us/en/conn.

- Detailed product description, including a description of new function

- Product-positioning statement

- Packaging and ordering details

- International compatibility information

System requirements

For information about hardware and software compatibility, see the Connections system requirements topic.

Install Connections 4.5

For step-by-step installation instructions, refer to the Installing section of the product documentation.

After you complete the mandatory post-installation tasks, go to IBM Fix Central to obtain the latest iFixes, and apply them as described in "Update Connections 4.5" so that the deployment has the latest set of software fixes.

Known problems

Known problems are documented in the form of individual technotes in the Connections Support Portal. As problems are discovered and resolved, the IBM Support team updates the knowledge base. By searching the knowledge base, you can quickly find workarounds or solutions to problems.

The following links launch customized queries of the live Support knowledge base:

- All known problems for Connections 4.5

- Activities

- Blogs

- Bookmarks

- Communities

- Files

- Forums

- Home page

- Mobile

- News

- Profiles

- Search

- Wikis

- Installation

- Connections Plugin for Lotus Notes

- Connections Plugin for Microsoft Office

- Connections Plugin for Microsoft Outlook

- Connections Plugin for Microsoft Windows Explorer

- Connections Plugin for WebSphere Portal

- Connections APIs

This image represents a sample topology for a Connections installation. This topology shows a clustered deployment on one node, called Node 1. The image shows one cell, Cell A, containing two systems, System A and System B. Each system has WAS installed. System A has the Deployment Manager installed. System B shows Cluster A, along with Node 1 and Server A. Server A has all Connections applications installed. Outside of the systems, other deployment components are listed: DBMS, TDI, LDAP Server, Network File Share, IBM HTTP Server, and Cognos.

This image represents a sample topology for a Connections installation. This topology shows a clustered deployment on one node, called Node 1. It includes three systems, two of which are connected by three clusters.

The image shows one cell, Cell A, containing three systems, System A, System B, and System C. Each system has WAS installed. System A has the Deployment Manager installed. Systems B and C each have one node installed, Node 1, and three servers installed on that node. System B has servers A, C, and E installed; System C has servers B, D, and F installed. Systems B and C both contain Activities, Communities, and other applications. In System B, Server A has Activities installed, Server C has Communities installed, and Server E has other Connections applications installed. In System C, Server B has Activities installed, Server D has Communities installed, and Server F has other Connections applications installed.

The diagram also shows three clusters, Cluster A, Cluster B, and Cluster C, spanning the servers on Systems B and C. The servers and clusters are all installed on Node 1. Outside of the systems, other deployment components are listed: DBMS, TDI, LDAP Server, Network File Share, IBM HTTP Server, and Cognos.

This image represents a sample topology for a Connections installation. This topology shows a clustered deployment on two nodes, Node 1 and Node 2.

The image shows one cell, Cell A, containing three systems, System A, System B, and System C. Each system has WAS installed. System A has the Deployment Manager installed. Systems B and C each have one node installed: Node 1 on System B and Node 2 on System C.

System B has four servers, A, C, E, and X, installed on Node 1. System C also has four servers, B, D, F, and Y, installed on Node 2. Systems B and C both contain Activities, Blogs, Communities, and other applications. In System B, Server A has Activities installed, Server C has Blogs installed, Server E has Communities installed, and Server X has other Connections applications installed. In System C, Server B has Activities installed, Server D has Blogs installed, Server F has Communities installed, and Server Y has other Connections applications installed.

The diagram also shows four clusters, an Activities cluster, a Blogs cluster, a Communities cluster, and an Other cluster, extending between the servers on Systems B and C. Outside of the systems, other deployment components are listed: DBMS, TDI, LDAP Server, Network File Share, IBM HTTP Server, and Cognos.

Install

New for Connections 4.5 installation

- Installation of Connections Content Manager for the Libraries application on AIX, Linux, and Windows.

- Updates to configuring Connections Content Manager for Libraries

- Migration tools for Lotus Quickr for Portal and Lotus Quickr for Domino places.

- Updated system requirements (new support)

- OAuth2 support for Connections Content Manager (same cell)

- Configuring Interoperability mode is no longer mandatory when setting up federated repositories and enabling single sign-on for Domino.

Migrating to this release

- After migration to Connections 4.5, you can reuse content stores from 4.0.

- Connections 4.5 is the first release on the IBM i operating system, so no migration is necessary for IBM i.

The installation process

Installing Connections in a production environment involves several procedures to deploy the different components of the environment.

- Review the software and hardware requirements for the systems that will host Connections.

- Download IBM Installation Manager (IIM) V1.5.3 or later.

Recent releases of IIM are also available in a 64-bit version. However, the 64-bit version is not compatible with the Connections package, so use the 32-bit version of IIM when installing Connections.

- Install the required software, choosing a supported product in each case:

- WAS

- LDAP directory

- Database server

- TDI

- IBM Cognos (optional)

- To use mail notification, ensure that you have the SMTP and DNS details of your mail infrastructure available at installation time.

- Prepare the LDAP directory, install WAS, and create databases.

- Install Connections and, optionally, Connections Content Manager.

You can install both at the same time, or install Connections only and rerun the installation program later to add Connections Content Manager.

- Complete the post-installation tasks that apply to your configuration. For example, map the installed applications to IBM HTTP Server.

Pre-installation tasks

If you are migrating from a prior release of Connections, do not complete the tasks for creating databases or populating the Profiles database. The migration process handles those tasks automatically.

Special consideration is required when restoring backed up content:

- Content can be restored only to the same major/minor release that it was backed up from. For example, Code Refresh (CR) releases for Connections 4.5 are considered part of the Connections 4.5 release.

- Content cannot be restored to Connections 4.5 if it was backed up on Connections 4.0 (CRx).

- Content cannot be restored to a later release if it was backed up on Connections 4.5 (CRx).

- The /provision subdirectory in the shared content folder can be restored only to the exact version that it was backed up on. It is generally not safe to restore the /provision subdirectory to a version whose CR or iFix level differs from the one the directory was backed up from.
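The restore rules above can be expressed as two small checks. The following Python sketch models a release as major version, minor version, Code Refresh level, and iFix label; the `Release` class and both function names are hypothetical and are not part of any Connections tool. It treats any CR or iFix difference as unsafe for /provision, which is the conservative reading of the last rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    """Hypothetical model of a Connections level, such as 4.5 CR2 plus an iFix."""
    major: int
    minor: int
    cr: int = 0      # Code Refresh level; 0 means the base release
    ifix: str = ""   # iFix label; empty when no iFix is applied


def can_restore_content(backed_up_on: Release, target: Release) -> bool:
    # Content moves only within one major/minor release; CR levels do not matter.
    return (backed_up_on.major, backed_up_on.minor) == (target.major, target.minor)


def can_restore_provision(backed_up_on: Release, target: Release) -> bool:
    # Conservative reading: /provision needs the exact same level, CR and iFix included.
    return backed_up_on == target
```

For example, content backed up on 4.5 CR1 passes `can_restore_content` for a 4.5 CR2 target, but its /provision subdirectory fails `can_restore_provision` for that same target.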

To use Connections Content Manager, configure Connections and FileNet with the same WebSphere federated repositories. When Connections is installed, the installer provides a user name and password for a system user account created by the installer to handle feature-to-feature communication. The Connections installer also creates a J2C authentication alias named connectionsAdmin. This alias is populated with the specified user and maps that user to a set of application roles.

For many advanced security scenarios (especially Tivoli Access Manager and SiteMinder) and in cases where an existing FileNet server is used with Connections Content Manager, the connectionsAdmin user should be located in an LDAP directory or a common directory that is available to all services.

Connections Content Manager is not supported on

To use the Connections Metrics application on

Prepare to configure the LDAP directory

To ensure that the Profiles population wizard can return the maximum number of records from your LDAP directory, set the Size Limit parameter in your LDAP configuration to match the number of users in the directory. For example, if your directory has 100,000 users, set this parameter to 100000. If you cannot set the Size Limit parameter, you could run the wizard multiple times. Alternatively, you could write a JavaScript function to split the original LDAP search filter, then run the collect_dns_iterate.bat file, and finally run the populate_from_dns_files.bat file.
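The filter-splitting approach can be sketched as follows. This Python function only illustrates the logic of dividing one search filter into narrower filters by the first character of a naming attribute. The function name, the prefix alphabet, and the catch-all filter are assumptions. The function you actually write for the population scripts would be JavaScript, and how each filter is passed to collect_dns_iterate.bat is not shown here.

```python
import string


def split_ldap_filter(base_filter: str, attribute: str,
                      prefixes: str = string.ascii_lowercase + string.digits) -> list[str]:
    """Split one LDAP search filter into narrower filters, one per leading character.

    A final catch-all filter matches every entry the prefix filters miss (values
    starting with other characters, or entries without the attribute), so the
    batches together cover the original filter. An entry with several values for
    `attribute` can appear in more than one batch.
    """
    # Accept "objectclass=person" as well as "(objectclass=person)".
    if not base_filter.startswith("("):
        base_filter = f"({base_filter})"
    # Assumption: the attribute matches case-insensitively, as uid and cn usually do.
    prefix_terms = [f"({attribute}={p}*)" for p in prefixes]
    filters = [f"(&{base_filter}{term})" for term in prefix_terms]
    filters.append(f"(&{base_filter}(!(|{''.join(prefix_terms)})))")
    return filters


if __name__ == "__main__":
    for ldap_filter in split_ldap_filter("(objectclass=inetOrgPerson)", "uid", "ab"):
        print(ldap_filter)
```

With the prefixes "ab", the sketch prints (&(objectclass=inetOrgPerson)(uid=a*)), then (&(objectclass=inetOrgPerson)(uid=b*)), and finally the catch-all (&(objectclass=inetOrgPerson)(!(|(uid=a*)(uid=b*)))). Each filter returns fewer records than the Size Limit would otherwise cut off.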

To prepare to configure your LDAP directory with IBM WAS...

- Identify LDAP attributes to use for the following roles.

If no corresponding attribute exists, create one. You can use an attribute for multiple purposes. For example, you can use the mail attribute to perform the login and messaging tasks.

Display name: The cn LDAP attribute is used to display a person's name in the product user interface. Ensure that the value you use in the cn attribute is suitable for use as a display name.

Log in: Determine which attribute or attributes to use to log in to Connections. For example: uid. The values of the login name attribute must be unique in the LDAP directory.

Messaging: Determine which attribute to use to define the email address of a person. The email address must be unique in the LDAP directory. If a person does not have an email address and does not have an LDAP attribute that represents the email address, that person cannot receive notifications.

Global unique identifier (GUID): Determine which attribute to use as the unique identifier of each person and group in the organization. This value must be unique across the organization.

- Collect the following information about your LDAP directory before configuring it for WAS:

- Directory Type

- Primary host name

- Port

- Bind distinguished name

- Bind password

- Certificate mapping

- Certificate filter, if applicable.

- LDAP entity types or classes, for example: Group, OrgContainer, PersonAccount, or inetOrgPerson. Identifies and selects LDAP object classes. For example, select the LDAP inetOrgPerson object class for the Person Account entity, or the LDAP groupOfUniqueNames object class for the Group entity.

- Search base. Identifies and selects the distinguished name (DN) of the LDAP subtree as the search scope. For example, select o=ibm.com to allow all directory objects underneath this subtree node to be searched.
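Because the login and messaging attributes must hold unique values, it can help to check an export of the directory for duplicates before you configure WAS. The following Python sketch assumes that the entries are already available as a mapping from DN to attribute values; how you export them is up to you. `find_duplicate_values` is a hypothetical helper. It compares values case-insensitively, on the assumption that your directory matches these attributes without regard to case.

```python
from collections import defaultdict


def find_duplicate_values(entries: dict[str, dict[str, list[str]]],
                          attribute: str) -> dict[str, list[str]]:
    """Return {value: [dn, ...]} for each value of `attribute` held by more than one entry.

    `entries` maps a DN to that entry's attributes, each a list of values.
    """
    owners: dict[str, list[str]] = defaultdict(list)
    for dn, attrs in entries.items():
        # Lowercase on the assumption that the directory compares these values
        # case-insensitively; the set ignores a value repeated within one entry.
        for value in {v.lower() for v in attrs.get(attribute, [])}:
            owners[value].append(dn)
    return {value: dns for value, dns in owners.items() if len(dns) > 1}
```

Run it once for the login attribute (for example, uid) and once for the messaging attribute (for example, mail). An empty result means that no value is shared between entries.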

Create the Cognos administrator account

Create a new user, or select an existing user in the LDAP directory to serve as the administrator of the IBM Cognos BI Server component (you will add the administrator credentials to a configuration script when you deploy Cognos Business Intelligence).

The Cognos administrator account must reside in the same LDAP directory used by Connections.

If you will use an existing LDAP account, take note of the user name and password. For example, if your organization already has a Cognos deployment, you might choose to use the same administrator account with Connections.

If an acceptable account does not exist already, create it now; again, note the credentials for use later.

The Cognos administrator account is specified in the cognos.admin.username setting of the cognos-setup.properties file.

The Cognos administrator user name should use the value of the LDAP attribute to be used for the User lookup field. For example, if the value for User lookup is (uid=${userID}), then use the value of the uid attribute of the account as user name.
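The User lookup rule can be illustrated with Python's `string.Template`, which happens to use the same `${userID}` placeholder syntax. This is only a sketch of the substitution, not a description of how Cognos processes the setting, and the function names are hypothetical. It shows why the Cognos administrator user name must be the value of the attribute that the lookup filter names.

```python
import re
from string import Template


def lookup_attribute(user_lookup: str) -> str:
    """Return the attribute named in a User lookup filter such as (uid=${userID})."""
    match = re.fullmatch(r"\((\w+)=\$\{userID\}\)", user_lookup.strip())
    if match is None:
        raise ValueError(f"unexpected User lookup format: {user_lookup!r}")
    return match.group(1)


def expand_user_lookup(user_lookup: str, user_name: str) -> str:
    """Substitute a user name for the ${userID} placeholder."""
    # Template treats ${userID} as a named placeholder, so substitute() fills it in.
    return Template(user_lookup).substitute(userID=user_name)
```

For example, with a User lookup of (uid=${userID}), a hypothetical administrator whose uid value is cognosadmin would be entered as cognosadmin in cognos.admin.username. The lookup then expands to (uid=cognosadmin).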

Install IBM WAS

Install IBM WAS.

WAS Network Deployment is provided with Connections.

To establish an environment with one Deployment Manager and one or more managed nodes, use the following list to determine the installation option that you should choose. The Connections installation wizard creates server instances that require each node to have an application server, so choose one of these options when installing WAS to ensure that each node has an application server.

- Deployment Manager and one node on the same system

- Deployment Manager and nodes on separate systems. You can deploy one node on the same system as the dmgr but you must use separate systems for all other nodes in a cluster.

- The heap size of the Deployment Manager might need to be increased beyond the default values if an out-of-memory condition is experienced while installing Connections.

Notes for IBM i:

- Make sure the offering tag of the WAS 8 response file includes both core.feature and ejbdeploy when installing WAS.

- After installing WAS 8, change WAS 8 to use the 64-bit version of JVM. Refer to the managesdk command for more information.

- Use dmgr as the Deployment Manager profile name.

- Specify DmgrHost in lowercase for the manageprofiles or addNode command.

- Java 2 security is not supported.

Java 2 security is disabled by default in WebSphere and must remain disabled for Connections.

- In WAS admin console click Security > Global.

- In the Java 2 security section, ensure that the Use Java 2 security to restrict application access to local resources option is not selected.


- Logout on HTTP Session Expiration must be disabled

This WebSphere feature, disabled by default, is only applicable to single web applications that do not integrate with other web applications through single sign-on. Since Connections is a set of tightly integrated web applications and not a single web application, this setting cannot be applied. If you have customized security in WebSphere, ensure that this WebSphere setting is not enabled as follows:

- In WAS admin console click Security > Global.

- Under Custom properties, make sure that com.ibm.ws.security.web.logoutOnHTTPSessionExpire is either not listed or set to false.
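The Logout on HTTP Session Expiration rule reduces to a simple check on the cell's custom security properties. The following Python sketch assumes that you have already copied those properties into a dictionary, for example from the Custom properties page. The function name is hypothetical.

```python
LOGOUT_PROPERTY = "com.ibm.ws.security.web.logoutOnHTTPSessionExpire"


def logout_on_expire_setting_ok(custom_properties: dict[str, str]) -> bool:
    """Return True if the property is not listed or is set to false."""
    value = custom_properties.get(LOGOUT_PROPERTY)
    # Not listed means the WebSphere default applies, and that default is disabled.
    return value is None or value.strip().lower() == "false"
```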

To install and configure WAS...

- Install WAS Network Deployment.

Enable security when the installation wizard requests it. The administrative user ID that you create must be unique and must not exist in the LDAP repository that you plan to federate.

- Apply the available fix packs.

See the Connections system requirements topic for details.

- Configure WAS to communicate with the LDAP directory.

Perform this step on the Dmgr admin console.

Configure the LDAP for Cognos separately.

See the Configuring support for LDAP authentication for Cognos Business Intelligence topic.

- Configure Application Security after you have completely installed WAS ND.

Perform this step on the Dmgr admin console.

- Add further nodes, if required, to the cell. For each node to add to the cell...

- Log on to the node as user wasadmin, and run...

cd WAS_HOME/profiles/profile/bin

./addNode.sh DmgrHost DmgrSoapPort -username AdminUserId -password AdminPwd

- Repeat this step for each additional node that you want to add to the cell.

- Synchronize all the nodes.

- Log on to each node as user wasadmin, and run...

- To confirm the changes made...

- Set up single sign-on.

- Create an administrator for WAS:

Use an existing FileNet deployment for Connections Content Manager: If you install Connections Content Manager or integrate with FileNet, you must use an administrative account that is a recognized account in both your Connections environment and your FileNet environment.

If you use an existing FileNet deployment, you must ensure that the Connections administrator is a valid user account on the FileNet system. The easiest way to do this is to make the Connections administrator a user in a shared LDAP directory.

If you use the Connections installation to install a new FileNet deployment for use with Connections Content Manager, having the Connections administrator be a user in both Connections and FileNet occurs automatically. With the new FileNet deployment option, both Connections and FileNet share directory configuration in a single WebSphere cell.

- Restart the Dmgr and then log into the dmgr again.

- Click Users and Groups > Administrative user roles and then click Add.

- Select Administrator from the Roles option and then search for a user.

- Select the target user and use the move arrow button to add that user's name to the Mapped to role option.

Ensure that this user ID does not have spaces in the name.

- Click OK and then click Save.

- Log out of the dmgr.

- Restart the dmgr and the nodes.

- Log into the dmgr using the new administrator credentials.
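The addNode step earlier in this procedure takes the Deployment Manager host, its SOAP port, and the administrator credentials. The following Python sketch assembles that command line and lowercases the host name, as required on IBM i. The default SOAP port of 8879 is an assumption based on the usual WAS default, so confirm the actual SOAP connector port of your Deployment Manager profile. The helper name, host, and credentials are hypothetical.

```python
import shlex

# Assumption: 8879 is the usual WAS default SOAP port for a Deployment Manager profile.
DEFAULT_DMGR_SOAP_PORT = 8879


def add_node_command(dmgr_host: str, admin_user: str, admin_password: str,
                     soap_port: int = DEFAULT_DMGR_SOAP_PORT) -> list[str]:
    """Build the addNode.sh arguments; run them from WAS_HOME/profiles/profile/bin on the node."""
    # IBM i requires DmgrHost in lowercase; DNS host names are case-insensitive,
    # so lowercasing is also safe on other platforms.
    return ["./addNode.sh", dmgr_host.lower(), str(soap_port),
            "-username", admin_user, "-password", admin_password]


if __name__ == "__main__":
    # Hypothetical host and credentials, for illustration only.
    print(shlex.join(add_node_command("DMGR.example.com", "wasadmin", "secret")))
```

If you pass the returned list to `subprocess.run` with `cwd` set to the profile's bin directory, instead of building a single shell string, passwords that contain shell metacharacters need no extra quoting.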

Access Windows network shares

Configure a user account to access network shares in a Connections deployment on the Microsoft Windows operating system.

This task applies only to deployments of Connections environments where the data is located on network file shares, and where you have installed WAS on Microsoft Windows and configured it to run as a service.

When WAS runs as a Windows service, it uses the local system account to log in with null credentials. When WAS tries to access a Connections network share using Universal Naming Convention (UNC) mapping, the access request fails because the content share is accessible only to valid user IDs.

When using a Windows service to start WAS, you must use UNC mapping; you cannot use drive letters to reference network shares.
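To catch drive-letter references before they reach a service-run WAS configuration, you can check that each share path is in UNC form. This Python sketch uses `pathlib.PureWindowsPath`, which parses Windows paths on any operating system. The function name and the sample paths are hypothetical.

```python
from pathlib import PureWindowsPath


def is_unc_path(path: str) -> bool:
    """True for UNC paths such as \\\\server\\share\\dir; False for drive letters or relative paths."""
    # For a UNC path, PureWindowsPath reports the drive as "\\server\share",
    # which starts with two backslashes; a mapped drive reports "Z:".
    return PureWindowsPath(path).drive.startswith("\\\\")
```

For example, a hypothetical share such as \\fileserver\connections\data passes the check, while the same share mapped as Z:\connections\data does not.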

To resolve this problem, configure the WAS service login attribute to log in with a user account that is authorized to access the content share.

To configure the WAS service...

- Click Start > Control Panel and select Administrative Tools > Services.

- Open the service for the first node in the list of WAS services.

- Click the Log On tab and select This account.

- Enter a user account name or click Browse to search for a user account.

- Enter the account password, and then confirm the password.

- Click OK to save your changes and click OK again to return to the Services window.

- Stop and restart the service.

- Repeat steps 2-7 for each remaining node.

Your corporate password policy might require that you change this login attribute periodically. If so, remember to update this service configuration. Otherwise, your access to network shares might fail.

Set up federated repositories

Use federated repositories with IBM WAS to manage and secure user and group identities.

Complete the steps described in Prepare to configure the LDAP directory.

You can configure the user directory for Connections to be populated with users from more than one LDAP directory.

Ensure that you meet the following guidelines for entity-object class mapping:

- If you are using IBM Tivoli Directory Server, decide whether the deployment will rely on the LDAP groupOfNames or groupOfUniqueNames object class for group entities. WAS uses groupOfNames by default. In most cases, you need to delete this default mapping and create a new mapping for group entities using the LDAP groupOfUniqueNames object class.

- If you are using the groupOfUniqueNames object class for group entities, use the uniqueMember attribute for the group member attribute.

- If you are using the groupOfNames object class for group entities, use the member attribute for the group member attribute.
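The pairing of group object classes and member attributes above can be captured in a small lookup table. The following Python sketch covers only the two object classes named in these guidelines. The table and function names are hypothetical, and other directories (for example, Domino with dominoGroup) need their own entries.

```python
# Member attribute to use for each group object class, per the guidelines above.
MEMBER_ATTRIBUTE_BY_GROUP_CLASS = {
    "groupOfNames": "member",
    "groupOfUniqueNames": "uniqueMember",
}


def member_attribute(group_object_class: str) -> str:
    """Return the group member attribute for an LDAP group object class."""
    # LDAP object class names are compared without regard to case.
    for object_class, attribute in MEMBER_ATTRIBUTE_BY_GROUP_CLASS.items():
        if object_class.lower() == group_object_class.lower():
            return attribute
    raise KeyError(f"no member attribute recorded for {group_object_class!r}")
```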

To set up federated repositories in WAS...

- Log on to the Dmgr console:

http://DmgrHost:9060/ibm/console

- Click Security > Global Security.

- Select Federated Repositories from the Available realm definitions field, and then click Configure.

- If installing Connections Content Manager, set the realm name to defaultWIMFileBasedRealm.

- Click Add Base entry to Realm and then, on the Repository reference page, click Add Repository.

- On the New page, type a repository identifier, such as myFavoriteRepository into the Repository identifier field.

- Specify the LDAP directory that you are using in the Directory type field.

| Directory type option | LDAP directory supported by Connections |

|---|---|

| IBM Tivoli Directory Server | IBM Tivoli Directory Server 6.1, 6.2, 6.3; z/OS Integrated Security Services LDAP Server |

| IBM Lotus Domino | IBM Lotus Domino 8.0 or later, 8.5 or later |

| Novell Directory Services | eDirectory 8.8 |

| Sun Java System Directory Server | Sun Java System Directory Server 7 |

| Windows Active Directory | Microsoft Active Directory 2008 |

| Microsoft Active Directory Application Mode | Microsoft Active Directory Application Mode, referred to as Active Directory Lightweight Directory Services (AD LDS) in Windows Server 2008 |

- Type the host name of the primary LDAP directory server in the Primary host name field. The host name is either an IP address or a DNS name.

- If your directory does not allow LDAP attributes to be searched anonymously, provide values for the Bind distinguished name and Bind password fields. For example, the Domino LDAP directory does not allow anonymous access, so if you are using a Domino directory, you must specify the user name and password with administrative level access in these fields.

- Specify the login attribute or attributes to use for authentication in the Login properties field.

Separate multiple attributes with a semicolon. For example: uid;mail.

If you are using Active Directory and you use an email address as the login, specify mail as the value for this property. If you use the samAccountName attribute as the login, specify uid as the value for this property.

- Click Apply and then click Save.

- On the Repository reference page, the following fields represent the LDAP attribute type and value pairs for the base element in the realm and the LDAP repository. The type and value in each pair are separated by an equal sign (=), for example: o=example. The two fields can hold the same value when a single LDAP repository is configured for the realm, or different values in a multiple LDAP repository configuration.

- Distinguished name of a base entry that uniquely identifies this set of entries in the realm

- Identifies entries in the realm. For example, on a Domino LDAP server: cn=john doe, o=example.

- Distinguished name of a base entry in this repository

- Identifies entries in the LDAP directory. For example, cn=john doe, o=example.

This value defines the location in the LDAP directory information tree from which the LDAP search begins. The entries beneath it in the tree can also be accessed by the LDAP search. In other words, the search base entry is the top node of a subtree which consists of many possible entries beneath it. For example, the search base entry could be o=example and one of the entries underneath this search base could be cn=john doe, o=example.

For defined flat groups in the Domino directory, enter a blank character in this field.

- Click Apply and then click Save.

- Click OK to return to the Federated Repositories page.

- In the Repository Identifier column, click the link for the repository or repositories that you just added.

- In the Additional Properties area, click the LDAP entity types link.

- Click the Group entity type and modify the object classes mapping. You can also edit the Search bases and Search filters fields, if necessary. Enter LDAP parameters that are suitable for your LDAP directory.

You can accept the default object classes value for Group. However, if you are using Domino, change the value to dominoGroup.

- Click Apply and then click Save.

- Click the PersonAccount entity type and modify the default object classes mapping. You can also edit the Search bases and Search filters fields, if necessary. Enter LDAP parameters that are suitable for your LDAP directory. Click Apply, and then click Save to save this setting.

If you are using a Domino LDAP, replace the default mapping with dominoPerson and dominoGroup object classes for person account and group entities.

- In the navigation links at the beginning of the page, click the name of the repository that you have just modified to return to the Repository page.

- If your applications rely on group membership from LDAP...

- Click the Group attribute definition link in the Additional Properties area, and then click the Member attributes link.

- Click New to create a group attribute definition.

- Enter group membership values in the Name of member attribute and Object class fields.

- Click Apply and then click Save.

- If you have already accepted the default groupOfNames value for Group, then you can also accept the default value for Member.

- If you changed objectclass for Group to dominoGroup earlier, you must add dominoGroup to the definition of Member.

- If you do not configure the group membership attribute, then the group member attribute is used when you search group membership. If you need to enable searches of nested group membership, then configure the group membership attribute.

- As an example of group membership attributes used with Activities: the member attribute type is used by the groupOfNames object class, and the uniqueMember attribute type is used by the groupOfUniqueNames object class.

- To support more than one LDAP directory, repeat steps 8-22 for each additional LDAP directory.

- Add Base Entry to Realm for each of the repositories added.

- Set the new repository as the current repository:

- Click Global Security in the navigation links at the beginning of the page.

- Select Federated Repositories from the Available realm definitions field, and then click Set as current.

- Enable login security on WAS:

- Select the Administrative Security and Application Security check boxes. Ensure that the Java 2 security check box is cleared, because Java 2 security is not supported for Connections.

- Click Apply and then click Save.

- Create an administrator for WAS:

- Restart the dmgr and then log into the dmgr again.

- Click Users and Groups > Administrative user roles and then click Add.

- Select Administrator from the Roles box and then search for a user.

- Select the target user and click the arrow to move the user name to the Mapped to role box.

- Click OK and then click Save.

- Log out of the dmgr.

- Restart the dmgr and the nodes.

- Log into the dmgr using the new administrator credentials.

- Ensure that this user ID does not have spaces in the name.

- Set a primary administrative user:

- Click Security > Global Security.

- Select Federated Repositories from the Available realm definitions field, and then click Configure.

- Enter the user name that you mapped in the previous step in the Primary administrative user name box.

- Click Apply and then click Save.

- Log out of the dmgr and restart WAS.

- When WAS is running again, log in to the Integrated Solutions Console using the primary administrative user name and password.

- Test the new configuration by adding some LDAP users to the WAS with administrative roles.

- If you are using SSL for LDAP, add a signer certificate to your trust store by completing the following steps:

- From the WAS admin console, select SSL Certificate and key management > Key Stores and certificates > CellDefaultTrustStore > Signer Certificates > Retrieve from port.

- Type the DNS name of the LDAP directory in the Host field.

- Type the secure LDAP port in the Port field (typically 636).

- Type an alias name, such as LDAPSSLCertificate, in the Alias field.

- Click Apply and then click Save.

- To enable SSO, prepare the WAS environment...

- From the WAS admin console, select...

Security | Global security | Web and SIP security | Single sign-on (SSO) | Enabled | Interoperability Mode (optional) | Web inbound security attribute propagation

- Return to the Global security page and click...

Web and SIP security | General settings | Use available authentication data when an unprotected URI is accessed | Apply | Save


- Verify that users in the LDAP directory have been successfully added to the repository:

- From the WAS admin console, select Users and Groups > Manage Users.

- In the Search by field, enter a user name that you know to be in the LDAP directory and click Search. If the search succeeds, you have partial verification that the repository is configured correctly; this check does not verify the groups that a user belongs to. If you leave the Search by field at its default value of User ID, enter a UID that you know exists in the LDAP directory.


Results

You have configured WAS to use a federated repository.
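The search base entry described in the repository steps determines which entries an LDAP search can reach: the base itself and everything beneath it. The following Python sketch tests that relationship for a given DN. It is a simplification that assumes no escaped commas or multi-valued RDNs, and it compares names and values case-insensitively. The function names are hypothetical.

```python
def dn_components(dn: str) -> list[str]:
    """Split a DN into normalized RDNs: 'cn=John Doe, o=example' gives ['cn=john doe', 'o=example']."""
    # Simplification: assumes no escaped commas and no multi-valued (+) RDNs.
    components = []
    for rdn in dn.split(","):
        attribute, _, value = rdn.strip().partition("=")
        # Assumption: attribute names and values compare case-insensitively.
        components.append(f"{attribute.strip().lower()}={value.strip().lower()}")
    return components


def is_within_search_base(entry_dn: str, search_base: str) -> bool:
    """True if entry_dn is the search base itself or any entry beneath it."""
    if not search_base.strip():
        # An empty DN names the root of the directory tree, above every entry.
        return True
    entry, base = dn_components(entry_dn), dn_components(search_base)
    return len(entry) >= len(base) and entry[len(entry) - len(base):] == base
```

With the example values from the repository steps, cn=john doe, o=example lies within the search base o=example, while an entry under o=other does not.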

Choosing login values

Determine which LDAP attribute or attributes you want to use to log in to Connections.

The following scenarios are supported:

- Single LDAP attribute with a single value

- For example: uid=jsmith.

- Multiple LDAP attributes, each with a single value

- To specify multiple attributes, separate them with a semicolon when you enter them in the Login properties field (while adding the repository to IBM WAS). For example, where uid=jsmith and mail=jsmith@example.com, you would enter: uid;mail.

- Single LDAP attribute with multiple values

- For example, mail is the login attribute and it accepts two different email addresses: an intranet address and an extranet address.

For example: mail=jsmith@myCompany.com or mail=jsmith@example.com.

- Multiple LDAP attributes, each with multiple values

- For example: uid=jsmith or uid=john_smith and mail=jsmith@example.com or mail=john_smith@example.com or mail=jsmith@MyCompany.com.

- Multiple LDAP directories

- For example: One LDAP directory uses uid as the login attribute and the other uses mail. You must repeat the steps in Set up federated repositories for each LDAP directory.
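To make these scenarios concrete, the following minimal Python sketch models how a login name could be matched against the attributes entered in the Login properties field. It is an illustration under stated assumptions only: each LDAP entry is represented as a plain dictionary of attribute names to lists of values, matching is exact and case-sensitive, and the function names are hypothetical. It does not reproduce how WAS or the Virtual Member Manager performs the lookup.

```python
from typing import Dict, List, Optional

# Hypothetical data model: attribute name -> all values that the LDAP entry holds.
User = Dict[str, List[str]]


def parse_login_properties(field: str) -> List[str]:
    """Split a semicolon-separated Login properties value such as "uid; mail"."""
    return [name.strip() for name in field.split(";") if name.strip()]


def resolve_login(login: str, users: List[User], field: str) -> Optional[User]:
    """Return the one user whose login attributes hold `login`, else None.

    Every listed attribute is checked, and every value of a multi-valued
    attribute counts (for example, an intranet and an extranet mail address).
    """
    attributes = parse_login_properties(field)
    matches = [
        user
        for user in users
        if any(login in user.get(attr, []) for attr in attributes)
    ]
    # A value shared by two entries is ambiguous, so nobody is matched.
    return matches[0] if len(matches) == 1 else None
```

For example, with the field set to "uid; mail", the login jsmith@MyCompany.com resolves to the entry whose mail values include that address, while the same login fails if only uid is listed.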

Multi-valued attributes

You can map multiple values to common attributes such as uid or mail.

If, for example, you mapped the following attributes for a user called Sample User, all three values for the user are populated in the PROFILE_LOGIN table in the Profiles database:

- mail=suser@example.com

- mail=sample_user@example.com

- mail=user_sample@example.com

A similar example for the uid property would have the following attributes:

- uid=suser

- uid=sampleuser

- uid=user_sample

By default, the population wizard lets you choose only one attribute for logins, so you cannot select both mail and uid. However, you can write a custom function that combines (unions) the values of multiple attributes.
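The core of such a union is small. The following Python sketch shows the logic only: the function name is hypothetical, the entry is assumed to be a dictionary of attribute names to value lists, and a real custom function would have to be written for the population tooling you use rather than in Python.

```python
from typing import Dict, Iterable, List


def union_login_values(entry: Dict[str, List[str]],
                       attributes: Iterable[str] = ("uid", "mail")) -> List[str]:
    """Combine the values of several LDAP attributes into one login list."""
    seen = set()
    logins: List[str] = []
    for attr in attributes:
        for value in entry.get(attr, []):
            # Skip empty values and values already contributed by another attribute.
            if value and value not in seen:
                seen.add(value)
                logins.append(value)
    return logins
```

For the Sample User entry, this would return the uid values followed by the mail values, with any duplicate removed, which is the set of login values you would want in the PROFILE_LOGIN table.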

Custom attributes

The Profiles population wizard populates uid and mail during the population process but maps the loginID attribute to null. If your directory uses a unique login attribute other than uid or mail, you can specify it as a custom attribute. The login value can be based on any attribute that is defined in your repository; specify it by setting loginID=attribute when you populate the Profiles database.

The following sample extract from the profiles-config.xml file shows the standard login attributes:

<loginAttributes>
  <loginAttribute>uid</loginAttribute>
  <loginAttribute>email</loginAttribute>
  <loginAttribute>loginId</loginAttribute>
</loginAttributes>

The value of the loginID attribute is stored in the PROF_LOGIN column of the EMPLOYEE table in the Profiles database.
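If you check profiles-config.xml with a script, the list of login attributes can be read with the Python standard library XML parser. This sketch assumes only that the file contains loginAttribute elements like the extract above; it ignores any namespace rather than assuming a particular one, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List


def read_login_attributes(xml_text: str) -> List[str]:
    """Return the text of every loginAttribute element, in document order."""
    root = ET.fromstring(xml_text)
    names: List[str] = []
    for element in root.iter():
        # Strip any "{namespace}" prefix so the lookup works with or without namespaces.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == "loginAttribute" and element.text:
            names.append(element.text.strip())
    return names
```

Run against the extract above, the function returns uid, email, and loginId.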

See the Mapping fields manually topic.

Use Profiles or LDAP as the repository

The default login attributes that are defined in the profiles-config.xml file are uid, email, and loginID.

If you change the default Connections configuration to use the LDAP directory instead of Profiles as the user repository, WAS uses uid as the default login attribute.

Specify the global ID attribute for users and groups

Determine which attribute to use as the unique identifier of each person and group in the organization. This identifier must be unique across the organization.

By default, WAS uses the following attributes as unique identifiers for these LDAP directory servers:

| LDAP directory server | Default unique ID attribute |
|---|---|
| IBM Tivoli Directory Server | ibm-entryUUID |
| Microsoft Active Directory | objectGUID. If you are using Active Directory, note that the samAccountName attribute has a 20-character limit; other IDs used by Connections have a 256-character limit. |
| IBM Domino Enterprise Server | dominoUNID. If the bind ID for the Domino LDAP does not have sufficient manager access to the Domino directory, the Virtual Member Manager (VMM) does not return the correct attribute type for the Domino schema query and returns the DN as the VMM ID instead. To override the VMM default ID setting, add the following line to the <config:attributeConfiguration> section of the wimconfig.xml file: <config:externalIdAttributes name="dominoUNID"/> |
| Sun Java System Directory Server | nsuniqueid |
| Novell eDirectory | GUID |
| Custom ID | If your organization already uses a unique identifier for each user and group, you can configure Connections to use that identifier. |
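The table amounts to a lookup with a custom ID taking precedence when one is configured. The following Python sketch expresses that rule; the dictionary simply restates the table, and the function name and the error for unlisted servers are illustrative assumptions, not WAS behavior.

```python
from typing import Dict, Optional

# Default unique ID attributes per directory server, restated from the table.
DEFAULT_ID_ATTRIBUTES: Dict[str, str] = {
    "IBM Tivoli Directory Server": "ibm-entryUUID",
    "Microsoft Active Directory": "objectGUID",
    "IBM Domino Enterprise Server": "dominoUNID",
    "Sun Java System Directory Server": "nsuniqueid",
    "Novell eDirectory": "GUID",
}


def id_attribute_for(server: str, custom_id: Optional[str] = None) -> str:
    """Return the custom ID attribute if one is set, else the server default."""
    if custom_id:
        return custom_id
    if server not in DEFAULT_ID_ATTRIBUTES:
        raise ValueError(f"no default ID attribute listed for {server!r}; configure a custom ID")
    return DEFAULT_ID_ATTRIBUTES[server]
```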

The wimconfig.xml file is stored in the following location:

- /usr/IBM/WebSphere/AppServer/profiles/profile_name/config/cells/cell_name/wim/config
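The Domino override in the table is normally added with a text editor. As an illustration of where the element goes, the following Python sketch adds it to the attributeConfiguration section of one repository. It assumes that the config prefix in wimconfig.xml is bound to the namespace URI shown in the code and that each repository element carries an id attribute; verify both against your own file. ElementTree drops comments and can rename namespace prefixes it does not know, so back up the file and compare the result before using anything like this.

```python
import xml.etree.ElementTree as ET

# Assumption: the namespace URI that the "config" prefix is bound to in wimconfig.xml.
WIM_NS = "http://www.ibm.com/websphere/wim/config"
ET.register_namespace("config", WIM_NS)  # keep the "config:" prefix on output


def add_external_id_attribute(xml_text: str, repository_id: str,
                              attribute: str = "dominoUNID") -> str:
    """Add <config:externalIdAttributes name=.../> to one repository, once."""
    root = ET.fromstring(xml_text)
    for repo in root.iter(f"{{{WIM_NS}}}repositories"):
        if repo.get("id") != repository_id:
            continue
        section = repo.find(f"{{{WIM_NS}}}attributeConfiguration")
        if section is None:
            raise ValueError(f"repository {repository_id!r} has no attributeConfiguration")
        existing = section.findall(f"{{{WIM_NS}}}externalIdAttributes")
        # Only add the element if this attribute is not already configured.
        if not any(e.get("name") == attribute for e in existing):
            ET.SubElement(section, f"{{{WIM_NS}}}externalIdAttributes", {"name": attribute})
        return ET.tostring(root, encoding="unicode")
    raise ValueError(f"repository {repository_id!r} not found")
```

Targeting one repository by id avoids adding dominoUNID to other LDAP repositories, such as an Active Directory repository, that are federated in the same file.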

IBM recommends that you do not allow a user's GUID to change. If the GUID changes, the user cannot access their data until you resynchronize the LDAP directory and the Profiles database with the new GUID. When you change the GUID and run the sync_all_dns batch file, the user's GUID is first changed in the Profiles database and then propagated to the other components by the user life-cycle commands. When you run sync_all_dns, make sure that the field used as the hash is one that has not changed.
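The following minimal Python sketch illustrates why the hash field must be unchanged. It is not how sync_all_dns is implemented: the function name is hypothetical, records are assumed to be dictionaries with a guid key, uid is used as the hash field purely as an example, and hash values are assumed to be unique within the LDAP data.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]


def find_guid_changes(profiles: List[Record], ldap: List[Record],
                      hash_field: str = "uid") -> List[Tuple[str, str, str]]:
    """Return (hash value, old guid, new guid) for each user whose GUID changed."""
    # Pair records by the hash field; this only works if that field did not change.
    ldap_by_key = {record[hash_field]: record for record in ldap}
    changes: List[Tuple[str, str, str]] = []
    for record in profiles:
        match = ldap_by_key.get(record[hash_field])
        if match is not None and match["guid"] != record["guid"]:
            changes.append((record[hash_field], record["guid"], match["guid"]))
    return changes
```

If the hash value had changed along with the GUID, the records would not pair up, and the user would look like a new person instead of an existing person with a new GUID.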

See Managing user data using Profiles administrative commands.

Specify a custom ID attribute for users or groups

Specify custom global unique ID attributes to identify users and groups in the LDAP directory. This is an optional task.

By default, Connections looks for LDAP attributes to use as the global unique IDs (guids) that identify users and groups in the LDAP directory. The identifiers assigned by LDAP directory servers are usually unique for any LDAP entry instance. If the user information is deleted and re-added, or exported and imported into another LDAP directory, the guid changes. Such changes typically happen when an employee's status changes, when a directory record is deleted and added again, or when user data is moved between directories.

When the guid of a user changes, you must synchronize the LDAP directory with the Profiles database before that user logs in again. Otherwise, the user will have two accounts in Connections, and the user's previous content will appear to be lost because it is associated with the previous guid. Assigning a fixed attribute to each record minimizes the possibility of accidentally creating duplicate accounts for a user in Connections.

Choose the custom ID attribute carefully. It must have the following properties:

- The ID must never be reused. In other words, the value of an ID must not be assigned to one user today and to a different user sometime in the future. The ID is used to reference a user for security and access control, so reusing an ID might accidentally grant a user permissions to content that was previously available to a prior user with the same ID.

- The ID must not change. That is, a user should not have one ID today and a different ID at some time in the future. Changing a user's ID might result in loss of access to content, and references to that user might report that the user is not found or no longer exists.

- Do not use login names, e-mail addresses, or similar attributes that are recycled. These attributes are frequently recycled as different users join and leave an organization, so jsmith might not reference the same user today and in the future. Because the ID is used in access control lists, a recycled value might give a future user access to a current user's private communities and content. For example, if jsmith is used as the value of the ID, Connections records that jsmith has access to content. Whom jsmith refers to might change over time, and a new jsmith might then get access to content unintentionally because a prior jsmith had access.
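If you can collect the values of a candidate ID attribute over time, for example from periodic directory exports, a short audit can reveal whether the attribute violates the first two properties. The following Python sketch assumes each observation is a pair of (ID value, person key), where the person key is some independent identifier such as an HR record number; both the data source and the function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def audit_id_history(
    assignments: Iterable[Tuple[str, str]],
) -> Tuple[Dict[str, Set[str]], Dict[str, Set[str]]]:
    """Return (reused IDs -> people, people -> IDs for people whose ID changed)."""
    people_per_id: Dict[str, Set[str]] = defaultdict(set)
    ids_per_person: Dict[str, Set[str]] = defaultdict(set)
    for id_value, person in assignments:
        people_per_id[id_value].add(person)
        ids_per_person[person].add(id_value)
    # An ID seen for more than one person was reused.
    reused = {i: p for i, p in people_per_id.items() if len(p) > 1}
    # A person seen with more than one ID did not keep a stable ID.
    unstable = {p: i for p, i in ids_per_person.items() if len(i) > 1}
    return reused, unstable
```

A login name such as jsmith that was reassigned shows up in the first result; an attribute that was regenerated for the same person shows up in the second. An empty result over a long history is evidence, not proof, that the attribute is suitable.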

If you are planning to install the Files or Wikis application, the ID cannot exceed 252 characters in length. If you are planning to install Connections Content Manager, the string representation of the ID cannot exceed 507 bytes. Because ID values are compared frequently, choose reasonably compact values for performance. Default ID values are approximately 16 to 36 bytes long.
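The two length limits can be checked mechanically before you commit to an attribute. In the following Python sketch the limits come from the text above; measuring the string representation as UTF-8 bytes is an assumption, and the function name is hypothetical.

```python
from typing import List

FILES_WIKIS_MAX_CHARS = 252  # stated limit when Files or Wikis is installed
CCM_MAX_BYTES = 507          # stated limit for Connections Content Manager


def custom_id_problems(value: str, files_or_wikis: bool, ccm: bool) -> List[str]:
    """Return a list of length problems for a candidate custom ID value."""
    problems: List[str] = []
    if not value:
        problems.append("ID is empty")
    if files_or_wikis and len(value) > FILES_WIKIS_MAX_CHARS:
        problems.append(
            f"{len(value)} characters exceeds the {FILES_WIKIS_MAX_CHARS}-character limit")
    # Assumption: the "string representation" is measured as UTF-8 bytes.
    size = len(value.encode("utf-8"))
    if ccm and size > CCM_MAX_BYTES:
        problems.append(f"{size} bytes exceeds the {CCM_MAX_BYTES}-byte limit")
    return problems
```

Note that non-ASCII characters take more than one byte in UTF-8, so a value can satisfy the character limit and still exceed the byte limit. A length check cannot confirm that a value is stable and never reused; those properties depend on how your organization assigns the attribute.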

As long as the value is stable over time and not reused, a good choice for a custom ID might be a global employee or customer ID that you generate and assign to individuals in your directory. An LDAP GUID, the default for the ID attribute, might or might not be a good choice, depending on how it is populated in your directory and how your organization uses LDAP. If you frequently delete and then recreate LDAP entries for the same user but want the old and new entries to represent the same user, you might need to specify a custom ID. Other considerations for choosing a custom ID are as follows:

- Avoid IDs that change when a user's name changes. An employee's family name might change as a result of a change in marital status or for other reasons. Such a change does not affect the employee's security role, but it would have the unintended effect of causing the employee's ID to change, leading to loss of access.

The wimconfig.xml file governs a single ID attribute for all supported objects such as users, groups, and organizations in WAS. You can use the LotusConnections-config.xml file to override the ID attribute in the wimconfig.xml file.

For example, you could use the wimconfig.xml file to specify the ibm-entryUUID attribute as the ID Key attribute for users and groups in all applications running on WAS, and then modify the LotusConnections-config.xml file to specify the employeeID as the ID Key attribute for Connections applications.

See also Manage users for best practices on keeping the Connections membership tables up to date with the changes that occur in your corporate directory.

You can change the default setting to use a custom ID to identify users and groups in the directory.

A custom ID must meet the following requirements:

- If you are planning to install the Files or Wikis application, the ID cannot exceed 252 characters in length.

To specify a custom attribute as the unique ID for users or groups...

VMM_HOME is the directory where the Virtual Member Manager files are located.

This location is set to either the wim.home system property or the user.install.root/config/cells/local.cell/wim directory.

The customUserID and customGroupID properties are not related to the properties of the login ID.

Open the wimconfig.xml file in a text editor.

If you specified different ID attributes for users and groups, complete the steps in the Configuring the custom ID attribute for users or groups topic in the Post-installation tasks section of the product documentation. The steps in that task configure Connections to use the custom ID attributes specified in this task.

When you map fields in the Profiles database, ensure that you add the custom ID attribute to the PROF_GUID field in the EMPLOYEE table. See the Mapping fields manually topic.

Create databases for the applications that you plan to install. You can use the database wizard or run the SQL scripts that are provided with Connections.

Each Connections application requires its own database, except Moderation, News, and Search. The Moderation application does not have an associated database or content store, while the News and Search applications share the Home page database.

The database wizard automates the process of creating databases for the applications that you plan to install. It is a more reliable method for creating databases because it validates the databases as you create them.

Consult your database documentation for detailed information about preparing your databases.

You must have already created and started a database instance before you can create databases.

Installing the database for Connections Content Manager, a new feature in the 4.5 release, creates two databases: the Global Configuration Database and the Object Store.
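When planning, it can help to derive the list of databases from the applications you intend to install. The following Python sketch encodes only the rules stated above: Moderation has no database, News and Search share the Home page database, Connections Content Manager creates two databases, and every other application gets its own. The labels are descriptive names, not the actual database names that the wizard or SQL scripts create, and the function name is hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

# Exceptions stated in the text; every other application gets its own database.
SPECIAL_CASES: Dict[str, Tuple[str, ...]] = {
    "Moderation": (),            # no database or content store
    "News": ("Home page",),      # shares the Home page database
    "Search": ("Home page",),    # shares the Home page database
    "Connections Content Manager": ("Global Configuration Database", "Object Store"),
}


def databases_for(applications: Iterable[str]) -> Set[str]:
    """Return the set of databases needed for the selected applications."""
    needed: Set[str] = set()
    for app in applications:
        needed.update(SPECIAL_CASES.get(app, (app,)))
    return needed
```

For example, selecting Home page, News, Search, and Profiles yields only two databases, because News and Search reuse the Home page database.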

Complete the procedures that are appropriate for the deployment:

Create multiple instances of a database for a more versatile database environment.

This is an optional procedure. If you need only one database instance (in Oracle terminology, one database), you can skip this task.

(Windows only) Complete the following steps for each instance that you plan to create:

If you are using DB2, also add the new user to the DB2ADMNS group.

The new account uses the local system as the domain.

A database environment with multiple instances provides several benefits:

Multiple instances require additional system resources.

To create multiple instances of a database...

Choose your database type:

An instance called db2inst1 is created during DB2 installation.