5.5.2 IBM HTTP Server and the IBM WAS plug-in

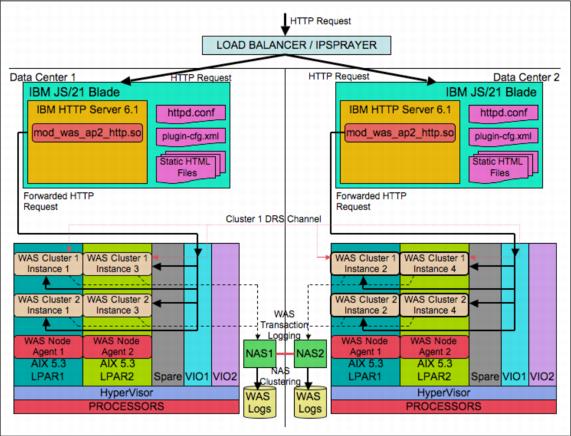

A useful standard Web server tier could be supported by the highly performant and scalable IBM BladeCenter environment, with up to 14 IBM BladeCenter servers per BladeCenter chassis. As we are considering AIX, assume that IBM BladeCenter JS21 servers are in use with AIX 5.3 or AIX 6.1 as their operating system. With these machines, partitioning is supported by a hypervisor, and for AIX 6.1, Workload Partitions (WPARs) are also supported. Thus, multiple layers of isolation and protection for the Web servers are possible for the purposes of security.

In many ways, the ideal configuration is to install IBM HTTP Server (IHS) 6.1 in the global environment, but not make it accessible to the outside world. Then create multiple WPARs that act as hosts to the outside world, which gives a very secure environment that can be monitored and controlled from the global environment. For our purposes, assume that AIX 5.3 is being used.

When the IBM HTTP Servers are installed, the Deployment Manager can be used to push the configuration out. A plug-in called mod_was_ap20_http.so is installed and configured for loading by the Web server. The configuration of routing requests to the application server clusters is handled by entries in a file usually called plugin-cfg.xml. The plug-in is loaded inside IHS by configuring the IHS httpd.conf file, as shown in Example 5-67.

Example 5-67 IHS httpd.conf: routing request configuration

LoadModule was_ap20_module /usr/IBMIHS/Plugins/bin/mod_was_ap20_http.so

WebSpherePluginConfig /usr/IBMIHS/Plugins/config/webserver1/plugin-cfg.xml

The plugin-cfg.xml file is generated using the administration console from the Deployment Manager, or by using the GenPluginCfg.sh script in the directory /usr/IBM/WebSphere/AppServer/bin. The generated file contains the server weightings, the load balancing algorithm, and the retry and connection settings (such as RetryInterval and the Nagle algorithm switches) that ensure that the cluster instances are used appropriately and that resilience is available. As is often the case, manually editing this file to simplify the configuration can be helpful.

Example 5-68 plugin-cfg.xml example

<?xml version="1.0"?>

<Config ASDisableNagle="false" IISDisableNagle="false"

IgnoreDNSFailures="false" RefreshInterval="60"

ResponseChunkSize="64" AcceptAllContent="false"

AppServerPortPreference="webserverPort" VHostMatchingCompat="true"

ChunkedResponse="false">

<Log LogLevel="Error"

Name="/usr/IBMIHS/plugins/logs/webserver1/http_plugin.log"/>

<Property Name="ESIEnable" Value="true"/>

<Property Name="ESIMaxCacheSize" Value="1024"/>

<Property Name="ESIInvalidationMonitor" Value="false"/>

<VirtualHostGroup Name="default_host">

<VirtualHost Name="*:9080"/>

<VirtualHost Name="*:80"/>

<VirtualHost Name="*:9443"/>

</VirtualHostGroup>

<ServerCluster Name="server1_Cluster" CloneSeparatorChange="false"

LoadBalance="Round Robin"

PostSizeLimit="-1" RemoveSpecialHeaders="true" RetryInterval="60">

<Server Name="server1_lpar1" ConnectTimeout="0"

ExtendedHandshake="false"

LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false">

<Transport Hostname="aix_lpar1" Port="9080" Protocol="http"/>

<Transport Hostname="aix_lpar1" Port="9443" Protocol="https">

<Property name="keyring"

value="/usr/IBMIHS/plugins/etc/plugin-key.kdb"/>

<Property name="stashfile"

value="/usr/IBMIHS/plugins/etc/plugin-key.sth"/>

</Transport>

</Server>

<Server Name="server1_lpar2" ConnectTimeout="0" ExtendedHandshake="false"

LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false">

<Transport Hostname="aix_lpar2" Port="9080" Protocol="http"/>

<Transport Hostname="aix_lpar2" Port="9443" Protocol="https">

<Property name="keyring"

value="/usr/IBMIHS/plugins/etc/plugin-key.kdb"/>

<Property name="stashfile"

value="/usr/IBMIHS/plugins/etc/plugin-key.sth"/>

</Transport>

</Server>

<Server Name="server2_lpar1" ConnectTimeout="0" ExtendedHandshake="false"

LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false">

<Transport Hostname="aix_lpar1" Port="9081" Protocol="http"/>

<Transport Hostname="aix_lpar1" Port="9444" Protocol="https">

<Property name="keyring"

value="/usr/IBMIHS/plugins/etc/plugin-key.kdb"/>

<Property name="stashfile"

value="/usr/IBMIHS/plugins/etc/plugin-key.sth"/>

</Transport>

</Server>

<Server Name="server2_lpar2" ConnectTimeout="0" ExtendedHandshake="false"

LoadBalanceWeight="1" MaxConnections="-1" WaitForContinue="false">

<Transport Hostname="aix_lpar2" Port="9081" Protocol="http"/>

<Transport Hostname="aix_lpar2" Port="9444" Protocol="https">

<Property name="keyring"

value="/usr/IBMIHS/plugins/etc/plugin-key.kdb"/>

<Property name="stashfile"

value="/usr/IBMIHS/plugins/etc/plugin-key.sth"/>

</Transport>

</Server>

<PrimaryServers>

<Server Name="server1_lpar1"/>

<Server Name="server1_lpar2"/>

<Server Name="server2_lpar1"/>

<Server Name="server2_lpar2"/>

</PrimaryServers>

</ServerCluster>

<UriGroup Name="server1_Cluster_URIs">

<Uri Name="/TestManagerWeb/*" />

<Uri Name="/TestManagerWeb" />

<Uri Name="/TMAdmin/*" />

<Uri Name="/TMAdmin" />

<Uri Name="*.do" />

<Uri Name="*.action" />

<Uri AffinityCookie="JSESSIONID" AffinityURLIdentifier="jsessionid"

Name="/TestManagerWeb/*" />

<Uri Name="/snoop/*"/>

<Uri Name="/snoop"/>

<Uri Name="/hello"/>

<Uri Name="/hitcount"/>

<Uri Name="*.jsp"/>

<Uri Name="*.jsv"/>

<Uri Name="*.jsw"/>

<Uri Name="/j_security_check"/>

<Uri Name="/ibm_security_logout"/>

<Uri Name="/servlet/*"/>

<Uri Name="/ivt"/>

<Uri Name="/ivt/*"/>

<Uri Name="/_DynaCacheEsi"/>

<Uri Name="/_DynaCacheEsi/*"/>

<Uri Name="/wasPerfTool"/>

<Uri Name="/wasPerfTool/*"/>

<Uri Name="/wasPerfToolservlet"/>

<Uri Name="/wasPerfToolservlet/*"/>

</UriGroup>

<Route ServerCluster="server1_Cluster"

UriGroup="server1_Cluster_URIs" VirtualHostGroup="default_host"/>

<RequestMetrics armEnabled="false" newBehavior="false"

rmEnabled="false" traceLevel="HOPS">

<filters enable="false" type="URI">

<filterValues enable="false" value="/servlet/snoop"/>

<filterValues enable="false" value="/webapp/examples/HitCount"/>

</filters>

<filters enable="false" type="SOURCE_IP">

<filterValues enable="false" value="255.255.255.255"/>

<filterValues enable="false" value="254.254.254.254"/>

</filters>

</RequestMetrics>

</Config>

The plugin-cfg.xml file shown in Example 5-68 distributes requests in a round robin manner among the application server instances server1_lpar1 and server1_lpar2 and the server instances server2_lpar1 and server2_lpar2, in LPARs 1 and 2. It does so for the URLs /TestManagerWeb and /TMAdmin, for anything with the extensions .do and .action, and for the other URIs listed in the UriGroup.
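To check what a hand-edited plugin-cfg.xml actually routes, it can help to summarize it with a small script. The following is a minimal sketch in Python, not part of the WebSphere tooling: the function name summarize_routes and the trimmed-down PLUGIN_CFG excerpt are assumptions made for illustration, and only the element and attribute names are taken from Example 5-68.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down excerpt in the shape of Example 5-68.
PLUGIN_CFG = """<?xml version="1.0"?>
<Config>
  <ServerCluster Name="server1_Cluster" LoadBalance="Round Robin" RetryInterval="60">
    <Server Name="server1_lpar1" LoadBalanceWeight="1">
      <Transport Hostname="aix_lpar1" Port="9080" Protocol="http"/>
    </Server>
    <Server Name="server2_lpar2" LoadBalanceWeight="1">
      <Transport Hostname="aix_lpar2" Port="9081" Protocol="http"/>
    </Server>
  </ServerCluster>
  <UriGroup Name="server1_Cluster_URIs">
    <Uri Name="/TestManagerWeb/*"/>
    <Uri Name="*.do"/>
  </UriGroup>
  <Route ServerCluster="server1_Cluster" UriGroup="server1_Cluster_URIs"
         VirtualHostGroup="default_host"/>
</Config>
"""


def summarize_routes(xml_text):
    """Return one dict per <Route>: its cluster, HTTP endpoints, and URI patterns."""
    root = ET.fromstring(xml_text)
    clusters = {c.get("Name"): c for c in root.findall("ServerCluster")}
    uri_groups = {g.get("Name"): g for g in root.findall("UriGroup")}
    routes = []
    for route in root.findall("Route"):
        cluster = clusters[route.get("ServerCluster")]
        group = uri_groups[route.get("UriGroup")]
        # Collect (server name, host, port) for every plain HTTP transport.
        endpoints = [
            (server.get("Name"), t.get("Hostname"), int(t.get("Port")))
            for server in cluster.findall("Server")
            for t in server.findall("Transport")
            if t.get("Protocol") == "http"
        ]
        routes.append({
            "cluster": cluster.get("Name"),
            "load_balance": cluster.get("LoadBalance"),
            "endpoints": endpoints,
            "uris": [u.get("Name") for u in group.findall("Uri")],
        })
    return routes


if __name__ == "__main__":
    for r in summarize_routes(PLUGIN_CFG):
        print(r["cluster"], "-", r["load_balance"])
        for name, host, port in r["endpoints"]:
            print("  server", name, "at", host, port)
        print("  URIs:", ", ".join(r["uris"]))
```

Pointing the same function at the contents of the real file gives a quick view of which server instances sit behind each URI group before the configuration is pushed out.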

IHS is a derivative of the Apache 2 Web server, but it has an IBM internal security engine with appropriate licenses for some subsystems that Open Source developments cannot obtain. It also has support for Fast Response Cache Architecture (FRCA) to accelerate responses by caching commonly used static data lower down the TCP/IP stack using a kernel extension.

Essentially, the static content cached is kept on the kernel side of the TCP/IP stack, which avoids the expensive copying of large amounts of data between kernel and user space. This improves performance, and is in addition to the content itself being cached rather than reread from disk buffers.

The FRCA kernel extension is loaded using frcactrl load, usually as part of the AIX startup initiated in /etc/inittab. IHS uses it through the mod_afpa_cache.so shared object, which is configured by adding the entries shown in Example 5-69 to the end of the httpd.conf file.

Example 5-69 FRCA startup modifications to httpd.conf

AfpaEnable On

AfpaCache On

AfpaLogFile "/usr/IBMIHS/logs/afpalog" V-ECLF

AfpaPluginHost aix_lpar1:9080

AfpaPluginHost aix_lpar1:9081

AfpaPluginHost aix_lpar1:9443

AfpaPluginHost aix_lpar1:9444

AfpaPluginHost aix_lpar2:9080

AfpaPluginHost aix_lpar2:9081

AfpaPluginHost aix_lpar2:9443

AfpaPluginHost aix_lpar2:9444

You must also remember to load the FRCA module by adding the appropriate entries to the LoadModule section of httpd.conf, as shown in Example 5-70.

Example 5-70 httpd.conf: loading FRCA modules

#
# Dynamic Shared Object (DSO) Support
#
# To be able to use the functionality of a module which was built as a DSO you
# have to place corresponding `LoadModule' lines at this location so the
# directives contained in it are actually available _before_ they are used.
# Statically compiled modules (those listed by `httpd -l') do not need
# to be loaded here.
#
# Example:
# LoadModule foo_module modules/mod_foo.so
...
LoadModule deflate_module modules/mod_deflate.so
LoadModule ibm_afpa_module modules/mod_afpa_cache.so

In Example 5-70, note that the mod_deflate.so shared object is also loaded to enable compressed HTTP pages. Deflate compression is supported by most browsers and can greatly improve response times for compressible content such as HTML, so it should generally be enabled.
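To get a feel for how much deflate compression can shrink a typical text response, the following minimal Python sketch compresses a repetitive HTML body. It is an illustration only: the function name deflate_ratio and the sample page are assumptions, and the standard zlib module is used here as a stand-in for the DEFLATE algorithm that the Web server module applies.

```python
import zlib


def deflate_ratio(body: bytes, level: int = 6) -> float:
    """Return compressed size divided by original size for a response body."""
    compressed = zlib.compress(body, level)
    return len(compressed) / len(body)


# Hypothetical HTML page with a repetitive table, typical of generated markup.
SAMPLE_HTML = (
    b"<html><body><table>"
    + b"<tr><td>row</td><td>value</td></tr>" * 200
    + b"</table></body></html>"
)

if __name__ == "__main__":
    ratio = deflate_ratio(SAMPLE_HTML)
    print(f"{len(SAMPLE_HTML)} bytes, compressed to {ratio:.1%} of original size")
```

Already-compressed content such as JPEG images or ZIP archives gains little from this treatment, so the benefit is mostly seen on HTML, CSS, JavaScript, and similar text.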

Now when a request comes into the Web channel, as shown in Figure 5-20, it is picked up by the load balancing router or IP sprayer (something like a Cisco LocalDirector) and sent to one of the Web servers in one of the data centers, depending on the load and on which server received the last request.

The request is handled by the IP stack of one of the JS21 blades. If it is for a static page held in the Network Buffer Cache/Fast Response Cache Architecture cache, the response is returned immediately from the cache. This is likely to be the case for commonly used static content.

Otherwise, the request is passed to the Apache 2-based IBM HTTP Server process listener for handling. If the request is for content that IHS can serve, or that it knows is in error, the listener either handles the request itself or passes it on to another Web server process to handle.

Otherwise, if the request is for a URL configured in the plugin-cfg.xml file, the mod_was_ap20_http.so module comes into play. The plug-in compares the request URL to the list configured in the plugin-cfg.xml file, checks authorizations, and looks at the HTTP cookies or the URL query string to obtain the JSESSIONID value.
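The URL comparison described above can be approximated with shell-style wildcard matching. The following Python sketch is an assumption about the general idea and not the plug-in's actual matching code: the function name plugin_should_handle is hypothetical, and the patterns are a subset of the Uri entries in Example 5-68.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit

# A subset of the Uri patterns from the UriGroup in Example 5-68.
URI_PATTERNS = [
    "/TestManagerWeb/*",
    "/TestManagerWeb",
    "/TMAdmin/*",
    "/TMAdmin",
    "*.do",
    "*.action",
]


def plugin_should_handle(request_url, patterns=URI_PATTERNS):
    """Return True if the request path matches any configured URI pattern."""
    path = urlsplit(request_url).path
    # Drop a URL-rewriting suffix such as ';jsessionid=...' before matching.
    path = path.split(";", 1)[0]
    return any(fnmatchcase(path, pattern) for pattern in patterns)


if __name__ == "__main__":
    for url in ("/TestManagerWeb/home", "/app/login.do?user=a", "/images/logo.gif"):
        target = "application server" if plugin_should_handle(url) else "IHS itself"
        print(url, "->", target)
```

Requests that match no pattern stay with the Web server, which is why static content such as images never reaches the application server tier in this configuration.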

Figure 5-20 Load balanced clustered data center

J2EE specifies that when a Web application sets up a session, usually after a user has logged on, it should return a session cookie or a value in the query string of JSESSIONID that should be a pseudorandom value used to identify the session. The WAS clustering mechanism modifies this value in order to append a clone or instance ID to it, which it can then use in the plug-in to identify the server and cluster that initiated the session.

The plug-in then forwards the request as an HTTP or HTTPS request to the WAS instance identified by the instance ID. If that instance has failed or does not respond within a timeout value, the plug-in uses the information in the plugin-cfg.xml file to choose another instance in the configured cluster and routes the request to it.

This all assumes that the Data Replication Service (DRS) has been configured to replicate all of the session information between the nodes in the cluster using the Reliable Multicast Messaging protocol, or that the session information has been stored in a session database accessible by all nodes in the cluster. Thus, the cluster nodes can take over the session of a failed node. If the user has yet to log in and set up a session, then any server can handle the request.
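The session affinity and failover behavior described above can be sketched as follows. This is a simplified Python model under stated assumptions, not the plug-in's implementation: the ClusterRouter class, the clone IDs cloneA and cloneB, and the convention that the clone ID follows the last colon in the JSESSIONID value are all hypothetical, and weights and the RetryInterval setting are left out.

```python
class ClusterRouter:
    """Toy model of affinity routing with round robin fallback."""

    def __init__(self, clone_ids):
        # clone_ids maps a hypothetical clone ID to a server name.
        self.clone_ids = dict(clone_ids)
        self.servers = list(self.clone_ids.values())
        self.down = set()   # servers currently considered failed
        self._next = 0      # round robin cursor

    def _round_robin(self):
        # Try each server at most once, skipping those marked down.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server not in self.down:
                return server
        raise RuntimeError("no application server available")

    def route(self, jsessionid=None):
        # Assumption: the clone ID is the part after the last ':'.
        if jsessionid and ":" in jsessionid:
            clone_id = jsessionid.rsplit(":", 1)[1]
            server = self.clone_ids.get(clone_id)
            if server is not None and server not in self.down:
                return server   # affinity: same server that owns the session
        # No session yet, unknown clone, or owner failed: pick another server.
        return self._round_robin()


if __name__ == "__main__":
    router = ClusterRouter({"cloneA": "server1_lpar1", "cloneB": "server1_lpar2"})
    print(router.route("0000abc123:cloneB"))   # follows the session's server
    router.down.add("server1_lpar2")
    print(router.route("0000abc123:cloneB"))   # fails over to another server
```

The failover branch only works for the user if the surviving server can see the session data, which is why session replication or a shared session database is a precondition.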

To understand how the plug-in works, the book Apache: The Definitive Guide is a useful reference. Essentially, an Apache module is given an opportunity to load its configuration into each instance of the Web server and use the ap_hook_handler call to register a handler that gets to peek at requests as the HTTP server examines them; see Figure 5-21.

Running the strings command against the mod_was_ap20_http.so binary shows that the WebSphere plug-in functions in the same way, and suggests that the websphereHandleRequest and websphereExecute functions handle the reading of requests from IHS and the forwarding to the appropriate WAS cluster instance. To develop a better understanding of this subject, you can download the TomcatConnectors mod_jk.c file from the following address and examine the use of the Apache 2 APIs:

http://www.apache.org
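Where the strings command is not at hand, the same kind of inspection of the plug-in binary can be approximated in a few lines of Python. This is a minimal sketch: the function names printable_strings and find_symbols are assumptions, and the path passed to find_symbols would have to point at the plug-in module on your own system.

```python
import re


def printable_strings(data: bytes, min_len: int = 4):
    """Return runs of printable ASCII at least min_len long, like strings(1)."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(data)]


def find_symbols(path, needle="websphere"):
    """List printable strings in a binary file that contain needle."""
    with open(path, "rb") as f:
        data = f.read()
    return [s for s in printable_strings(data) if needle.lower() in s.lower()]


if __name__ == "__main__":
    # In-memory stand-in for a binary; real use would call find_symbols(path).
    sample = b"\x00\x01websphereHandleRequest\x00\x7fELF\x02abc\x00websphereExecute\x00"
    for name in printable_strings(sample):
        print(name)
```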

Figure 5-21 WAS end-to-end transaction path