
5.5.1 WebSphere cluster and cell configuration

To configure the WebSphere Application Server environment for high availability, you set up a cell, which is the unit of management (or two cells, if internal and external user traffic to the application set must be separated for security reasons). The unit of failover in WebSphere Application Server - Network Deployment is a cluster, and a cell can contain multiple clusters. Within the cell, two separate clusters are set up, and their instances are created across partitions in both data centers.

The number of instances (formerly known as clones) is calculated from how long an average transaction takes and the peak-of-peak number of transactions required; the result is then multiplied by two, or by the number of identical data centers in use (assuming the infrastructure is the same in each). Why multiply by two? Because this is a load-balanced environment: at peak, each data center carries half (or 1/number of data centers) of the load. However, if a data center or its communications are lost, the remaining data center (or data centers) must carry the whole load.

This is best explained with an example, as shown in Figure 5-19. Assume that you have two data centers, and that each data center has a single System p p570 with two WebSphere Application Server partitions (to support easy WebSphere Application Server upgrades). Your application needs to handle a peak load of 360,000 transactions per hour, and each transaction takes 2 seconds, all of which is spent actively processing (that is, the application behaves like a calculation engine). 360,000 transactions per hour is 100 transactions per second, and because each transaction takes 2 seconds, there are 200 transactions in flight at any one time. Each WebSphere Application Server Web container is limited to 50 threads, so each instance can handle at most 50 in-flight transactions. Therefore, you need 4 instances to support the load.
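The sizing arithmetic in the example can be sketched as a small function. This is an illustration only: it applies Little's law (in-flight work equals arrival rate multiplied by time in the system) and assumes, as the example does, that every in-flight transaction occupies one Web container thread for its whole duration. The function name and parameters are hypothetical.

```python
import math


def required_instances(peak_tx_per_hour: float, seconds_per_tx: float,
                       threads_per_instance: int) -> tuple[float, int]:
    """Return (transactions in flight at peak, instances needed for that load).

    Assumes each in-flight transaction holds one Web container thread
    for the full transaction time (no idle waiting).
    """
    tx_per_second = peak_tx_per_hour / 3600          # 360,000/h -> 100/s
    in_flight = tx_per_second * seconds_per_tx       # Little's law: L = lambda * W
    # Round up: a partial instance still needs a whole instance.
    instances = math.ceil(in_flight / threads_per_instance)
    return in_flight, instances


in_flight, instances = required_instances(360_000, 2.0, 50)
print(in_flight, instances)  # 200.0 4
```

Rounding up with `math.ceil` matters when the thread limit does not divide the in-flight count evenly; for example, a 60-thread limit would still need 4 instances for 200 in-flight transactions. The result covers a single copy of the load and is then multiplied by the number of data centers, as described in the text.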
To account for the loss of a cluster or of a complete data center, where the remaining cluster or data center must take over the complete load, you need 4 instances per cluster, split across the two data centers and two clusters; that is, 8 instances in total. This is configured by connecting to the active WebSphere Deployment Manager and using wsadmin scripts or the administrative console to set up the two clusters with 4 instances in each.
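One way to check that an 8-instance layout survives each failure case is to enumerate the placements and count the Web container threads left after removing every instance in a failed data center or cluster. The layout below is an assumption (each cluster places one instance in every partition of every data center, giving 2 instances per cluster per data center); the names `dc1`, `lpar1`, `cluster1` and so on are hypothetical.

```python
from itertools import product

THREADS_PER_INSTANCE = 50
PEAK_IN_FLIGHT = 200  # from the worked example: 100 tx/s x 2 s

# Hypothetical layout: every cluster has one instance in every partition
# of every data center (2 clusters x 2 data centers x 2 partitions = 8).
CLUSTERS = ["cluster1", "cluster2"]
DATA_CENTERS = ["dc1", "dc2"]
PARTITIONS = ["lpar1", "lpar2"]

instances = [
    {"cluster": c, "dc": d, "partition": p}
    for c, d, p in product(CLUSTERS, DATA_CENTERS, PARTITIONS)
]


def surviving_capacity(failed_key: str, failed_value: str) -> int:
    """Threads still available after losing every instance that matches the failure."""
    survivors = [i for i in instances if i[failed_key] != failed_value]
    return len(survivors) * THREADS_PER_INSTANCE


# Failure cases from the text: lose a whole data center, or lose a whole cluster.
failures = [("dc", dc) for dc in DATA_CENTERS] + [("cluster", c) for c in CLUSTERS]
for key, value in failures:
    capacity = surviving_capacity(key, value)
    status = "OK" if capacity >= PEAK_IN_FLIGHT else "SHORT"
    print(f"lose {key}={value}: {capacity} threads, {status}")
```

In each of the four scenarios, 4 instances (200 threads) remain, which is exactly the peak in-flight load, so this layout has no spare headroom beyond the single-failure case it is sized for.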

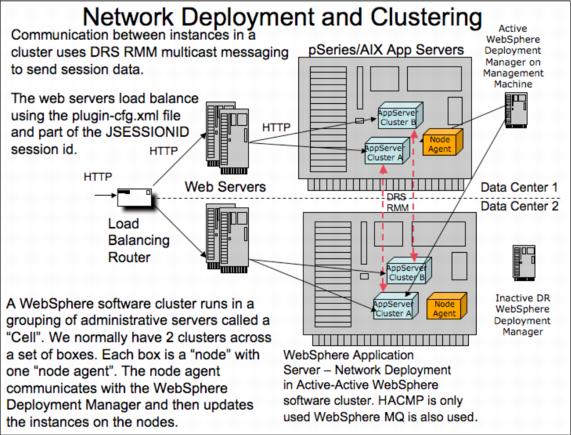

Figure 5-19 Network deployment and clustering

The Deployment Manager holds all of the configuration information for the cell and its clusters in XML files, as outlined previously. It synchronizes the configuration of the cell and clusters with each node agent; there is usually one node agent per operating system image, unless multiple cells are configured. For this configuration, assume one cell and one node agent per operating system image (partition). When the instances are created, the node agent gives each instance its configuration, but the master configuration for the whole cell and all of its clusters is held by the Deployment Manager, which should be backed up accordingly. When the application is deployed, it is rolled out to each of the instances, and the middle-tier configuration is complete.
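The relationship between the master configuration and the node agents can be illustrated with a toy model. This is not how WebSphere implements synchronization: the classes, the integer `version` counter (standing in for whatever change tracking the product uses), and the configuration keys are all hypothetical. The sketch only shows the flow described above: one master copy in the Deployment Manager, a local copy per node agent refreshed when it is stale, and instances configured from their node agent.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentManager:
    """Holds the master configuration for the whole cell (the copy to back up)."""
    master_config: dict = field(default_factory=dict)
    version: int = 0  # hypothetical change counter

    def update(self, key: str, value: str) -> None:
        self.master_config[key] = value
        self.version += 1


@dataclass
class NodeAgent:
    """One per node (operating system image/partition); keeps a local copy."""
    name: str
    local_config: dict = field(default_factory=dict)
    version: int = 0
    instances: list = field(default_factory=list)

    def synchronize(self, dmgr: DeploymentManager) -> bool:
        """Copy the master configuration if the local copy is stale; True if it changed."""
        if self.version == dmgr.version:
            return False
        self.local_config = dict(dmgr.master_config)
        self.version = dmgr.version
        return True

    def configure_instances(self) -> dict:
        """Give every instance on this node the node's current configuration."""
        return {inst: self.local_config for inst in self.instances}


dmgr = DeploymentManager()
dmgr.update("cluster1.app", "v1")
# Four partitions (two per data center), each with two instances.
agents = [NodeAgent(f"node{n}", instances=[f"node{n}_member1", f"node{n}_member2"])
          for n in range(1, 5)]
changed = [agent.synchronize(dmgr) for agent in agents]
print(changed)                                          # [True, True, True, True]
print(agents[0].configure_instances()["node1_member1"])  # {'cluster1.app': 'v1'}
```

Because only the Deployment Manager holds the authoritative copy in this model, losing it without a backup would leave the node agents with stale local copies and nothing to resynchronize from, which is the reason the text recommends backing it up.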