Adjusting WAS system queues

WAS establishes a queuing network, which is a group of interconnected queues that represent various components. There are queues established for the network, Web server, Web container, EJB container, Object Request Broker (ORB), data source, and possibly a connection manager to a custom back-end system. Each of these resources represents a queue of requests waiting to use that resource. Queues are load-dependent resources. As such, the average response time of a request depends on the number of concurrent clients.

As an example, think of an application, consisting of servlets and EJBs, that accesses a back-end database. Each of these application elements reside in the appropriate WebSphere component (for example servlets in the Web container) and each component can handle a certain number of requests in a given time frame.

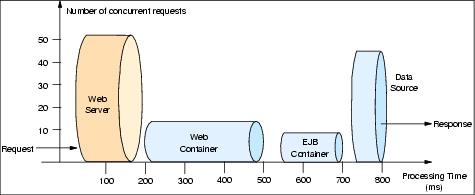

A client request enters the Web server and travels through WebSphere components in order to provide a response to the client. Figure 19-5 illustrates the processing path this application takes through the WebSphere components as interconnected pipes that form a large tube.

Figure 19-5 Queuing network

The width of the pipes (illustrated by height) represents the number of requests that can be processed at any given time. The length represents the processing time that it takes to provide a response to the request.

In order to find processing bottlenecks, it is useful to calculate a transactions per second (tps) ratio for each component. Ratio calculations for a fictional application are shown in Example 19-3:

Example 19-3 Transactions per second ratio calculations

The Web Server can process 50 requests in 100 ms =

The Web container parts can process 18 requests in 300 ms =

The EJB container parts can process 9 requests in 150 ms =

The datasource can process 40 requests in 50 ms =

The example shows that the application elements in the Web container and in the EJB container process requests at the same speed. Nothing would be gained from increasing the processing speed of the servlets and/or Web container because the EJB container would still only handle 60 transactions per second. The requests normally queued at the Web container would simply shift to the queue for the EJB container.

This example illustrates the importance of queues in the system. Looking at the operations ratio of the Web and EJB containers, each is able to process the same number of requests over time. However, the Web container could produce twice the number of requests that the EJB container could process at any given time. In order to keep the EJB container fully utilized, the other half of the requests must be queued.

It should be noted that it is common for applications to have more requests processed in the Web server and Web container than by EJBs and back-end systems. As a result, the queue sizes would be progressively smaller moving deeper into the WebSphere components. This is one of the reasons queue sizes should not solely depend on the operation ratios.

The following section outlines a methodology for configuring the WAS queues. The dynamics of an individual system can be dramatically changed by moving resources, for example moving the database server onto another machine, or providing more powerful resources, for example a faster set of CPUs with more memory. Thus, adjustments to the tuning parameters are for a specific configuration of the production environment.

WebSphere is a trademark of the IBM Corporation in the United States, other countries, or both.

IBM is a trademark of the IBM Corporation in the United States, other countries, or both.