WebSphere eXtreme Scale v8.6 Overview

- Elastic scalability

- WXS with databases

- What's new in Version 8.6

- Release notes

- Hardware and software requirements

- Directory conventions

- WXS technical overview

- Caching overview

- Cache integration overview

- Spring cache provider

- Liberty profile

- OpenJPA level 2 (L2) and Hibernate cache plug-in

- HTTP session management

- Dynamic cache provider overview

- Deciding how to use WXS

- Decision tree for migrating existing dynamic cache applications

- Decision tree for choosing a cache provider for new applications

- Feature comparison

- Topology types

- Remote topology

- Dynamic cache engine and eXtreme Scale functional differences

- Dynamic cache statistics

- MBean calls

- Dynamic cache replication policy mapping

- Global index invalidation

- Security

- Near cache

- Additional information

- Database integration

- Serialization overview

- Scalability overview

- Availability overview

- High availability

- Heart beating

- Failures

- Process failure

- Loss of connectivity

- Host failure

- Islanding

- Container failures

- Container failure detection latency

- Catalog service failure

- Multiple container failures

- Replication for availability

- High availability catalog service

- Catalog server quorums

- Heartbeats and failure detection

- Container servers and core groups

- Catalog service domain heart-beating

- Failure detection

- Quorum behavior

- Reasons for quorum loss

- Quorum loss from JVM failure

- Quorum loss from network brownout

- Catalog service JVM cycling

- Consequences of lost quorum

- Quorum recovery

- Overriding quorum

- Container behavior during quorum loss

- Synchronous replica behavior

- Asynchronous replica behavior

- Client behavior during quorum loss

- Islanding

- Replicas and shards

- Shard types

- Minimum synchronous replica shards

- Replication and Loaders

- Primary side

- Replica side

- Replica side on failover

- Shard placement

- Scaling out

- Scaling in

- Lifecycle events

- Primary shard

- Recovery events

- Replica shard becomes a primary shard

- Replica shard recovery

- Failure events

- Too many register attempts

- Failure while entering peer mode

- Recovery after re-register or peer mode failure

- Map sets for replication

- Replica side

- Transaction processing

- Security overview

- REST data services overview

- High availability

Overview

WebSphere eXtreme Scale (WXS) is a data grid that caches application data across multiple servers, performing massive volumes of transaction processing with high efficiency and linear scalability. WXS can be used...

- As a cache

- As an in-memory database processing space to manage application state

- To build Extreme Transaction Processing (XTP) applications

eXtreme Scale splits the data set into partitions. Each partition has a primary copy, called the primary shard, and can also have replica shards that back up the data.

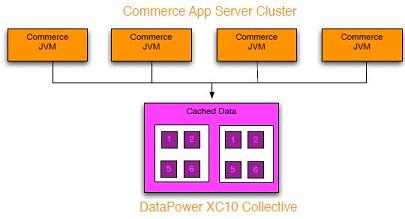

WebSphere DataPower XC10 can be used with WebSphere Commerce Suite as an alternative caching mechanism for Dynamic Cache to reduce local memory use. Commerce nodes can use the WebSphere eXtreme Scale dynamic cache provider to offload caching from local memory to the XC10. All of the cached data is stored on the XC10 collective, providing availability of the cached data and improved performance.

Elastic scalability

Elastic scalability is enabled by distributed object caching. The data grid monitors and manages itself. Servers can be added to or removed from the topology as needed, increasing or decreasing memory, network throughput, and processing capacity. Capacity is added to the data grid while it is running, without requiring a restart. The data grid self-heals by automatically recovering from failures.

WXS with databases

Using the write-behind cache feature, eXtreme Scale can serve as a front-end cache for a database. WXS clients send data to and receive data from the data grid, which can be synchronized with a backend data store. The cache is coherent because all of the clients see the same data in the cache. Each piece of data is stored on exactly one writable server in the cache. Keeping one copy of each piece of data prevents wasteful copies of records that might contain different versions of the data. A coherent cache holds more data as more servers are added to the data grid, and scales linearly as the data grid grows in size. The data can optionally be replicated for additional fault tolerance.
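A minimal sketch of the write-behind idea, in plain Java with no WXS dependencies. The class `WriteBehindCache`, its batch-size flush trigger, and the `Map` that stands in for the backend database are all hypothetical; this is not the WXS Loader or write-behind API. Writes update the cache immediately and are queued, and the backend receives them later as one batch.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical write-behind cache sketch; not the WXS write-behind implementation.
final class WriteBehindCache<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    // Pending changes in insertion order; a later write to the same key replaces the earlier one.
    private final Map<K, V> pending = new LinkedHashMap<>();
    private final Map<K, V> backend;   // stands in for the database
    private final int batchSize;       // hypothetical flush trigger
    private int flushCount = 0;

    WriteBehindCache(Map<K, V> backend, int batchSize) {
        this.backend = backend;
        this.batchSize = batchSize;
    }

    void put(K key, V value) {
        cache.put(key, value);     // clients see the new value immediately
        pending.put(key, value);   // the database is updated later
        if (pending.size() >= batchSize) {
            flush();
        }
    }

    V get(K key) {
        V value = cache.get(key);
        if (value == null) {       // cache miss: read through to the backend
            value = backend.get(key);
            if (value != null) {
                cache.put(key, value);
            }
        }
        return value;
    }

    void flush() {
        backend.putAll(pending);   // one batched write instead of many single writes
        pending.clear();
        flushCount++;
    }

    int flushCount() { return flushCount; }
    int pendingCount() { return pending.size(); }
}
```

Coalescing repeated writes to the same key before a flush is one reason write-behind can reduce database load; the trade-off is that queued changes are not yet in the database.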

WXS container servers provide the in-memory data grid. Container servers can run...

- Inside WebSphere Application Server

- On a J2SE JVM

The data grid is not limited by, and does not have an impact on, the memory or address space of the application or the application server. The memory can be the sum of the memory of several hundred, or even several thousand, JVMs running on many different physical servers.

As an in-memory database processing space, WXS can be backed by disk, database, or both.

While eXtreme Scale provides several Java APIs, many use cases require no user programming, just configuration and deployment in your WebSphere infrastructure.

Data grid overview

The simplest eXtreme Scale programming interface is the ObjectMap interface, a map interface that includes:

- map.put(key,value)

- map.get(key)

The fundamental data grid paradigm is a key-value pair, where the data grid stores values (Java objects), with an associated key (another Java object). The key is later used to retrieve the value. In eXtreme Scale, a map consists of entries of such key-value pairs.
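The key-value paradigm, with writes grouped into a transaction, can be sketched with a hypothetical local map. `LocalKeyValueMap` and its `begin`/`commit`/`rollback` methods are illustrative assumptions that only loosely mirror how an ObjectMap is used inside a WXS session; the class is not part of the product API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical local stand-in for a map of key-value entries with transactional writes.
final class LocalKeyValueMap<K, V> {
    private final Map<K, V> committed = new HashMap<>();
    private Map<K, V> txChanges = null; // non-null while a transaction is active

    void begin() {
        if (txChanges != null) throw new IllegalStateException("transaction already active");
        txChanges = new HashMap<>();
    }

    void put(K key, V value) {
        if (txChanges == null) throw new IllegalStateException("no active transaction");
        txChanges.put(key, value);
    }

    V get(K key) {
        // A transaction sees its own uncommitted writes first.
        if (txChanges != null && txChanges.containsKey(key)) return txChanges.get(key);
        return committed.get(key);
    }

    void commit() {
        if (txChanges == null) throw new IllegalStateException("no active transaction");
        committed.putAll(txChanges); // make all of the transaction's writes visible at once
        txChanges = null;
    }

    void rollback() {
        txChanges = null; // discard uncommitted writes
    }
}
```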

WXS offers a number of data grid configurations, from a single, simple local cache, to a large distributed cache, using multiple JVMs or servers.

In addition to storing simple Java objects, we can store objects with relationships and retrieve them with an SQL-like query language that uses SELECT ... FROM ... WHERE statements. For example, an order object might have a customer object and multiple item objects associated with it. WXS supports one-to-one, one-to-many, many-to-one, and many-to-many relationships.

WXS also supports an EntityManager programming interface for storing entities in the cache. This programming interface is similar to the entity model in Java Enterprise Edition. Entity relationships can be automatically discovered from...

- entity descriptor XML file

- annotations in the Java classes

Retrieve entities from the cache by primary key using the find method on the EntityManager interface. Entities can be persisted to or removed from the data grid within a transaction boundary.

Consider a distributed example where the key is a simple alphabetic name. The cache might be split into four partitions by key: partition 1 for keys starting with A-E, partition 2 for keys starting with F-L, and so on. For availability, a partition has a primary shard and a replica shard. Changes to the cache data are made to the primary shard and replicated to the replica shard. You configure the number of servers that contain the data grid data, and eXtreme Scale distributes the data into shards over these server instances. For availability, replica shards are placed on separate physical servers from primary shards.
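The alphabetic example can be sketched in a few lines. The ranges for partitions 3 and 4 (M-R and S-Z) are assumed for illustration, and the round-robin placement rule in `AlphaPartitioning.place` is hypothetical; WXS itself locates partitions with a hash mechanism and its own placement service. The point shown is that each key maps to exactly one partition and that a partition's primary and replica never share a server.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the alphabetic partitioning example; WXS uses hash-based partitioning.
final class AlphaPartitioning {
    // Inclusive upper bound of the first letter for partitions 1..4.
    // A-E and F-L follow the example; M-R and S-Z are assumptions.
    private static final char[] UPPER = {'E', 'L', 'R', 'Z'};

    static int partitionFor(String key) {
        char first = Character.toUpperCase(key.charAt(0));
        if (first < 'A' || first > 'Z') {
            throw new IllegalArgumentException("key must start with a letter: " + key);
        }
        for (int p = 0; p < UPPER.length; p++) {
            if (first <= UPPER[p]) return p + 1; // partitions are numbered from 1
        }
        throw new AssertionError("unreachable");
    }

    // Returns {primaryServer, replicaServer} per partition, placed round-robin.
    static List<int[]> place(int partitions, int servers) {
        if (servers < 2) {
            throw new IllegalArgumentException("need at least two servers to separate primary and replica");
        }
        List<int[]> placement = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            int primary = p % servers;
            int replica = (p + 1) % servers; // always differs from primary when servers >= 2
            placement.add(new int[] {primary, replica});
        }
        return placement;
    }
}
```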

WXS uses a catalog service to locate the primary shard for each key. The catalog service handles moving shards among eXtreme Scale servers when physical servers fail and later recover. If the server containing a replica shard fails, eXtreme Scale allocates a new replica shard. If a server containing a primary shard fails, the replica shard is promoted to be the primary shard, and, as before, a new replica shard is constructed.
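The failover rules above can be modeled as a small placement table. `FailoverTable` and its rule of choosing the lowest-numbered live server as the new replica host are hypothetical simplifications, not the catalog service's placement logic; the sketch assumes every partition starts with one replica.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeSet;

// Hypothetical model of primary/replica failover; not the WXS catalog service.
final class FailoverTable {
    static final int NONE = -1;
    private final TreeSet<Integer> liveServers = new TreeSet<>();
    // partition number -> {primary server, replica server}
    private final Map<Integer, int[]> shards = new HashMap<>();

    FailoverTable(int servers) {
        for (int s = 0; s < servers; s++) liveServers.add(s);
    }

    void assign(int partition, int primary, int replica) {
        shards.put(partition, new int[] {primary, replica});
    }

    int primaryOf(int partition) { return shards.get(partition)[0]; }
    int replicaOf(int partition) { return shards.get(partition)[1]; }

    void failServer(int server) {
        liveServers.remove(server);
        for (int[] s : shards.values()) {
            if (s[0] == server) {
                s[0] = s[1];   // promote the replica to primary
                s[1] = NONE;
            } else if (s[1] == server) {
                s[1] = NONE;   // only the replica was lost
            }
            if (s[0] != NONE && s[1] == NONE) {
                s[1] = pickReplicaHost(s[0]); // construct a new replica elsewhere
            }
        }
    }

    // Lowest-numbered live server that does not host the primary.
    private int pickReplicaHost(int primary) {
        for (int s : liveServers) {
            if (s != primary) return s;
        }
        return NONE; // no eligible server: the partition runs without a replica
    }
}
```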

Release notes

Hardware and software requirements

Formally supported hardware and software options are available on the Systems Requirements page.

Install and deploy the product in Java EE and Java SE environments. We can also bundle the client component with Java EE applications directly without integrating with WebSphere Application Server.

Hardware requirements

WXS does not require a specific level of hardware. The hardware requirements are dependent on the supported hardware for the Java SE installation used to run WXS. If we are using eXtreme Scale with WebSphere Application Server or another JEE implementation, the hardware requirements of these platforms are sufficient for WXS.

Operating system requirements

Each Java SE and Java EE implementation requires different operating system levels or fixes for problems that are discovered during the testing of the Java implementation. The levels required by these implementations are sufficient for eXtreme Scale.

Installation Manager requirements

Before installing WXS, install Installation Manager using one of the following...

- the product media

- a file obtained from the Passport Advantage site

- a file containing the most current version of Installation Manager from the IBM Installation Manager download website

Web browser requirements

The web console supports the following Web browsers:

- Mozilla Firefox, version 3.5.x and later

- Microsoft Internet Explorer, version 7 and later

WebSphere Application Server requirements

- WebSphere Application Server Version 7.0.0.21 or later

- WebSphere Application Server Version 8.0.0.2 or later

Java requirements

Other Java EE implementations can use the WXS run time as a local instance or as a client to WXS servers. For Java SE environments, use Version 6 or later.

Directory conventions

- wxs_install_root

  Root directory where WXS product files are installed. This can be the directory in which the trial archive is extracted or the directory in which the WXS product is installed.

  - Trial: /opt/IBM/WebSphere/eXtremeScale

  - WXS stand-alone directory: /opt/IBM/eXtremeScale (Linux/UNIX) or C:\Program Files\IBM\WebSphere\eXtremeScale (Windows)

  - WXS integrated with WebSphere Application Server: /opt/IBM/WebSphere/AppServer

- wxs_home

  Root directory of the WXS product libraries, samples, and components.

  - Trial: /opt/IBM/WebSphere/eXtremeScale

  - WXS stand-alone directory: /opt/IBM/eXtremeScale/ObjectGrid (Linux/UNIX) or wxs_install_root\ObjectGrid (Windows)

  - WXS integrated with WebSphere Application Server: /opt/IBM/WebSphere/AppServer/optionalLibraries/ObjectGrid

- was_root

  Root directory of a WebSphere Application Server installation: /opt/IBM/WebSphere/AppServer

- net_client_home

  Root directory of a .NET client installation: C:\Program Files\IBM\WebSphere\eXtreme Scale .NET Client

- restservice_home

  Directory that contains the WXS REST data service libraries and samples. The directory is named restservice.

  - Stand-alone deployments: /opt/IBM/WebSphere/eXtremeScale/ObjectGrid/restservice (Linux/UNIX) or wxs_home\restservice (Windows)

  - WebSphere Application Server integrated deployments: /opt/IBM/WebSphere/AppServer/optionalLibraries/ObjectGrid/restservice

- tomcat_root

  Root directory of the Apache Tomcat installation: /opt/tomcat5.5

- wasce_root

  Root directory of the WebSphere Application Server Community Edition installation: /opt/IBM/WebSphere/AppServerCE

- java_home

  Root directory of a JRE installation: /opt/IBM/WebSphere/eXtremeScale/java (Linux/UNIX) or wxs_install_root\java (Windows)

- Samples directory: wxs_home/samples (Linux/UNIX) or wxs_home\samples (Windows)

- DVD documentation directory: dvd_root/docs/

- Equinox installation directory: /opt/equinox

- User directory: c:\Documents and Settings\user_name (Windows) or /home/user_name (Linux/UNIX)

WXS technical overview

The first steps to deploying a data grid are to...

- Start a core group and catalog service

- Start WXS server processes for the data grid to store and retrieve data

A local data grid is a simple, single-instance grid. To best use WXS as an in-memory database processing space, deploy a distributed data grid. Data is spread out over the various eXtreme Scale servers. Each server contains only some of the data, called a partition.

The catalog service locates the partition for a given datum based on its key. A server contains one or more partitions, limited only by the server's memory space.

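Increasing the number of partitions increases the potential capacity of the data grid. Because a partition is not split across servers, a data grid can scale out to at most one server per partition, so the maximum capacity of a data grid is the number of partitions times the usable memory size of each server.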

The data of a partition is stored in a shard. For availability, a data grid can be configured with synchronous or asynchronous replicas. Changes to the grid data are made to the primary shard and replicated to the replica shards. The total memory that a data grid uses or requires is therefore the size of the data multiplied by (1 + the number of replicas), where the 1 accounts for the primary shard.
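The two sizing rules can be applied as simple arithmetic. `GridSizing` is a hypothetical helper and the numbers are made-up inputs: for example, 10 GB (10240 MB) of data with one synchronous and one asynchronous replica needs 10240 * (1 + 2) = 30720 MB in total. The `serversNeeded` estimate additionally assumes that memory divides evenly across servers, which real shard placement does not guarantee.

```java
// Hypothetical sizing helper applying the two rules from the text; all values are in megabytes.
final class GridSizing {
    // At most one partition per server, so capacity tops out at partitions * usable memory per server.
    static long maxCapacityMb(int partitions, long usableMbPerServer) {
        return partitions * usableMbPerServer;
    }

    // Each partition is stored once as a primary plus once per replica.
    static long totalMemoryMb(long dataMb, int replicas) {
        return dataMb * (1 + replicas);
    }

    // Assumption: memory divides evenly across servers; rounds up to whole servers.
    static long serversNeeded(long dataMb, int replicas, long usableMbPerServer) {
        long total = totalMemoryMb(dataMb, replicas);
        return (total + usableMbPerServer - 1) / usableMbPerServer; // ceiling division
    }
}
```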

WXS distributes the shards of a data grid over the number of servers comprising the grid. These servers may be on the same or different physical servers. For availability, replica shards are placed on separate physical servers from primary shards.

WXS monitors the status of its servers and moves shards when servers or physical servers fail and recover. If the server containing a replica shard fails, WXS allocates a new replica shard and replicates data from the primary to the new replica. If a server that contains a primary shard fails, the replica shard is promoted to be the primary shard, and a new replica shard is constructed. If you start an additional server for the data grid, the shards are balanced over all servers. This rebalancing is called scale-out. Similarly, for scale-in, you might stop one of the servers to reduce the resources used by a data grid, and the shards are then balanced over the remaining servers.
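Scale-out and scale-in can be illustrated with a greedy balancer that spreads primary and replica shards over however many servers are running. `ShardBalancer` is hypothetical and recomputes placement from scratch on each call, which is a simplification: it does not model how WXS moves individual shards. It does keep the rule that a partition's primary and replica never share a server.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical greedy balancer illustrating scale-out and scale-in; not the WXS placement algorithm.
final class ShardBalancer {
    // Returns, per server, the shard names it hosts: "P3" is partition 3's primary, "R3" its replica.
    static List<Set<String>> balance(int partitions, int servers) {
        if (servers < 2) {
            throw new IllegalArgumentException("need two servers to separate primary and replica");
        }
        List<Set<String>> hosts = new ArrayList<>();
        for (int s = 0; s < servers; s++) hosts.add(new HashSet<>());
        for (int p = 0; p < partitions; p++) {
            for (String role : new String[] {"P", "R"}) {
                int best = -1;
                for (int s = 0; s < servers; s++) {
                    Set<String> h = hosts.get(s);
                    // Never co-locate the primary and replica of the same partition.
                    if (h.contains("P" + p) || h.contains("R" + p)) continue;
                    // Otherwise prefer the least-loaded server.
                    if (best == -1 || h.size() < hosts.get(best).size()) best = s;
                }
                hosts.get(best).add(role + p);
            }
        }
        return hosts;
    }
}
```

Calling `balance(4, 2)` and then `balance(4, 3)` shows the same eight shards spread over more servers, as in scale-out; going back to two servers corresponds to scale-in.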

Caching overview

Caching architecture: Maps, containers, clients, and catalogs

With WXS, your architecture can use either...

- local in-memory data caching

- distributed client-server data caching

WXS requires minimal additional infrastructure to operate. The infrastructure consists of scripts to install, start, and stop a JEE application on a server. Cached data is stored in the WXS server, and clients remotely connect to the server.

Distributed caches offer increased performance, availability, and scalability, and can be configured using dynamic topologies, in which servers are automatically balanced. We can also add servers without restarting the existing eXtreme Scale servers. We can create either simple deployments or large, terabyte-sized deployments in which thousands of servers are needed.

Catalog service

The catalog service...

- controls placement of shards

- discovers and monitors the health of container servers in the data grid

The catalog service hosts logic that is mostly idle and has little influence on scalability. It is built to serve hundreds of container servers that become available simultaneously, and it runs services to manage the container servers.

The catalog server responsibilities consist of the following services:

- Location service

- Container servers register with the location service. Clients use the location service to search for container servers to host applications.

- Placement service

- Manages the placement of shards across available container servers. Runs as a One of N elected service in the cluster and in the data grid, which means that exactly one instance of the placement service is running. If an instance fails, another process is elected and takes over. For redundancy, the state of the catalog service is replicated across all the servers that are hosting the catalog service.

- Core group manager

- Manages peer grouping for availability monitoring. Organizes container servers into small groups of servers. Automatically federates the groups of servers.

Uses the high availability manager (HA manager) to group processes together for availability monitoring. Each grouping of processes is a core group. The core group manager dynamically groups the processes together, and these groups are kept small to allow for scalability. Each core group elects a leader that is responsible for sending heartbeat messages to the core group manager. These messages detect whether an individual member failed or is still available. The heartbeat mechanism is also used to detect whether all the members of a group failed, which causes the communication with the leader to fail.

Responsible for organizing containers into small groups of servers that are loosely federated to make a data grid. When a container server first contacts the catalog service, it waits to be assigned to either a new or existing group. An eXtreme Scale deployment consists of many such groups, and this grouping is a key scalability enabler. Each group consists of JVMs. An elected leader uses the heartbeat mechanism to monitor the availability of the other groups. The leader relays availability information to the catalog service to allow for failure reaction by reallocation and route forwarding.

- Administration

- The catalog service hosts a Managed Bean (MBean) and provides JMX URLs for any of the servers that the catalog service is managing.
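The heartbeat-based failure detection described for the core group manager can be sketched as a leader that records the last heartbeat time of each member and flags members that have been silent longer than a timeout. `CoreGroupLeader` and the timeout value are hypothetical; the product's actual heartbeat intervals and detection logic are not shown here. Time is passed in explicitly so the behavior is deterministic.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical core group leader: tracks member heartbeats and reports suspected failures.
final class CoreGroupLeader {
    private final long timeoutMillis;
    private final Map<String, Long> lastHeartbeat = new HashMap<>();

    CoreGroupLeader(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    // Records that a member was heard from at the given time.
    void heartbeat(String member, long nowMillis) {
        lastHeartbeat.put(member, nowMillis);
    }

    // Members whose last heartbeat is older than the timeout, sorted for stable output.
    Set<String> suspectedFailures(long nowMillis) {
        Set<String> failed = new TreeSet<>();
        for (Map.Entry<String, Long> e : lastHeartbeat.entrySet()) {
            if (nowMillis - e.getValue() > timeoutMillis) {
                failed.add(e.getKey());
            }
        }
        return failed;
    }
}
```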

For high availability, configure a catalog service domain, which consists of multiple JVMs, including a master JVM and a number of backup JVMs.

Container servers, partitions, and shards

The container server stores application data for the data grid. This data is generally broken into parts, which are called partitions. Partitions are hosted across multiple shard containers. Each container server in turn hosts a subset of the complete data. A JVM might host one or more shard containers and each shard container can host multiple shards. Plan out the heap size for the container servers, which host all of your data. Configure heap settings accordingly.

Partitions host a subset of the data in the grid. WXS automatically places multiple partitions in a single shard container and spreads the partitions out as more container servers become available. Choose the number of partitions carefully before final deployment, because the number of partitions cannot be changed dynamically. A hash mechanism is used to locate partitions in the network, and eXtreme Scale cannot rehash the entire data set after it has been deployed. As a general rule, we can overestimate the number of partitions.
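A sketch of why the partition count must be chosen up front: with hash-based routing such as `hash(key) mod numberOfPartitions`, changing the number of partitions changes where most keys belong. The modulo scheme and the `HashRouting` class are assumptions for illustration, not WXS's internal hash function.

```java
// Hypothetical hash-based partition routing; WXS's actual hash function is not shown here.
final class HashRouting {
    // Math.floorMod keeps the result non-negative even when hashCode() is negative.
    static int partitionFor(Object key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Fraction of integer keys 0..keyCount-1 whose partition changes when the partition count changes.
    static double fractionMoved(int keyCount, int oldPartitions, int newPartitions) {
        int moved = 0;
        for (int i = 0; i < keyCount; i++) {
            Integer key = i;
            if (partitionFor(key, oldPartitions) != partitionFor(key, newPartitions)) {
                moved++;
            }
        }
        return (double) moved / keyCount;
    }
}
```

For 10,000 integer keys, going from 13 to 14 partitions moves about 93% of them, which is the kind of rehashing a deployed grid cannot perform.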

Shards are instances of partitions and have one of two roles: primary or replica. The primary shard and its replicas make up the physical manifestation of the partition. Every partition has several shards that each host all of the data contained in that partition. One shard is the primary, and the others are replicas, which are redundant copies of the data in the primary shard. A primary shard is the only partition instance that allows transactions to write to the cache. A replica shard is a "mirrored" instance of the partition. It receives updates synchronously or asynchronously from the primary shard. The replica shard only allows transactions to read from the cache. Replicas are never hosted in the same container server as the primary and are not normally hosted on the same machine as the primary.

To increase the availability of the data, or increase persistence guarantees, replicate the data. However, replication adds cost to the transaction and trades performance in return for availability.

With eXtreme Scale, we can control the cost, because both synchronous and asynchronous replication are supported, as well as hybrid replication models that use both modes. A synchronous replica shard receives updates as part of the transaction of the primary shard to guarantee data consistency. A synchronous replica can double the response time because the transaction has to commit on both the primary and the synchronous replica before the transaction is complete. An asynchronous replica shard receives updates after the transaction commits to limit the impact on performance, but introduces the possibility of data loss because the asynchronous replica can be several transactions behind the primary.
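A small model makes the cost trade-off concrete. `ReplicationModel` is hypothetical, the latencies are inputs rather than measurements, and the simulated response time is simply the sum of the commits the client waits for. With a synchronous replica whose commit takes as long as the primary's, the response time doubles; an asynchronous replica adds nothing to the response time but can lag behind the primary.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model contrasting synchronous and asynchronous replicas; latencies are made-up inputs.
final class ReplicationModel {
    final Map<String, String> primary = new HashMap<>();
    final Map<String, String> syncReplica = new HashMap<>();
    final Map<String, String> asyncReplica = new HashMap<>();
    private final Deque<String[]> asyncBacklog = new ArrayDeque<>();
    private final boolean useSyncReplica;

    ReplicationModel(boolean useSyncReplica) {
        this.useSyncReplica = useSyncReplica;
    }

    // Commits one update and returns the simulated response time seen by the client.
    long commit(String key, String value, long primaryMillis, long replicaMillis) {
        primary.put(key, value);
        asyncBacklog.addLast(new String[] {key, value}); // async replica is updated after commit
        if (useSyncReplica) {
            syncReplica.put(key, value);          // part of the primary's transaction
            return primaryMillis + replicaMillis; // client waits for both commits
        }
        return primaryMillis;                     // client waits for the primary only
    }

    // Applies up to n queued updates to the asynchronous replica.
    void drainAsync(int n) {
        for (int i = 0; i < n && !asyncBacklog.isEmpty(); i++) {
            String[] kv = asyncBacklog.removeFirst();
            asyncReplica.put(kv[0], kv[1]);
        }
    }

    // Updates not yet on the async replica; without a sync replica, these are lost if the primary fails now.
    int asyncLag() { return asyncBacklog.size(); }
}
```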

Maps

A map is a container for key-value pairs, which allows an application to store a value indexed by a key. Maps support indexes that can be added to index attributes on the key or value. These indexes are automatically used by the query runtime to determine the most efficient way to run a query.

A map set is a collection of maps with a common partitioning algorithm. The data within the maps is replicated based on the policy defined on the map set. A map set is only used for distributed topologies and is not needed for local topologies.

A map set can have a schema associated with it. A schema is the metadata that describes the relationships between each map when using homogeneous Object types or entities.

WXS can store serializable Java objects or XDF serialized objects in each of the maps using either...

- ObjectMap API for Java clients

- IGridMapAutoTx/IGridMapPessimisticTx API for .NET clients

A schema can be defined over the maps to identify the relationship between the objects in the maps where each map holds objects of a single type. Defining a schema for maps is required to query the contents of the map objects. WXS can have multiple map schemas defined.

WXS can also store entities using the EntityManager API. Each entity is associated with a map. The schema for an entity map set is automatically discovered using either...

- Entity descriptor XML file

- Annotated Java classes

Each entity has a set of key attributes and a set of non-key attributes. An entity can also have relationships to other entities. WXS supports one-to-one, one-to-many, many-to-one, and many-to-many relationships. Each entity is physically mapped to a single map in the map set. Entities allow applications to easily have complex object graphs that span multiple maps. A distributed topology can have multiple entity schemas.

Clients

Clients...

- connect to a catalog service

- retrieve a description of the server topology

- communicate directly to each server as needed

When the server topology changes because new servers are added or existing servers have failed, the dynamic catalog service routes the client to the appropriate server that is hosting the data. Clients must examine the keys of application data to determine the partition to which each request is routed. Clients can read data from multiple partitions in a single transaction, but can update only a single partition in a transaction. After the client updates some entries, the client transaction must use that same partition for any further updates.
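The single-partition write rule can be sketched as a client-side transaction that routes each key to a partition and pins the transaction to the partition of its first write. `ClientTransaction` and its modulo routing are hypothetical; they illustrate the rule, not the WXS client implementation.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical client transaction: reads may span partitions, writes must stay in one partition.
final class ClientTransaction {
    private final int numPartitions;
    private final Map<String, String> writes = new HashMap<>();
    private Integer writePartition = null; // fixed by the first write

    ClientTransaction(int numPartitions) {
        this.numPartitions = numPartitions;
    }

    // The client examines the key to pick the partition (modulo routing assumed).
    int route(String key) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    void put(String key, String value) {
        int p = route(key);
        if (writePartition == null) {
            writePartition = p;       // first write pins the transaction to this partition
        } else if (writePartition != p) {
            throw new IllegalStateException(
                "transaction already writes to partition " + writePartition + ", not " + p);
        }
        writes.put(key, value);
    }

    Integer writePartition() { return writePartition; }
}
```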

Two types of clients can be used:

- Java clients

- .NET clients

Java clients

Java client applications run on JVMs and connect to the catalog service and container servers.

- A catalog service exists in its own data grid of JVMs. A single catalog service can be used to manage multiple clients or container servers.

- A container server can be started in a JVM by itself or can be loaded into an arbitrary JVM with other containers for different data grids.

- A client can exist in any JVM and communicate with one or more data grids. A client can also exist in the same JVM as a container server.

.NET clients

.NET clients work similarly to Java clients, but do not run in JVMs. .NET clients are installed remotely from the catalog and container servers. You connect to the catalog service from within the application. Use a .NET client application to connect to the same data grid as your Java clients.

Enterprise data grid overview

Enterprise data grids use the eXtremeIO transport mechanism and a new serialization format. With the new transport and serialization format, we can connect both Java and .NET clients to the same data grid.

With the enterprise data grid, we can create multiple types of applications, written in different programming languages, to access the same objects in the data grid. In prior releases, data grid applications had to be written in the Java programming language only. With the enterprise data grid function, we can write .NET applications that can create, retrieve, update, and delete objects from the same data grid as the Java application.

(Figure: data grid high-level overview)

Object updates across different applications

- The .NET client saves data in its format to the data grid.

- The data is stored in a universal format, so that when the Java client requests this data it can be converted to Java format.

- The Java client updates and re-saves the data.

- The .NET client accesses the updated data, during which the data is converted to .NET format.

Transport mechanism

eXtremeIO (XIO) is a cross-platform transport protocol that replaces the Java-bound ORB. With the ORB, WXS is bound to Java native client applications. The XIO transport mechanism is specifically targeted for data caching, and enables client applications that are in different programming languages to connect to the data grid.

XDF

XDF is a cross-platform serialization format that replaces Java serialization. XDF is enabled on maps that have a copyMode attribute value of COPY_TO_BYTES in the ObjectGrid descriptor XML file. With XDF, performance is faster and data is more compact. In addition, XDF enables client applications that are written in different programming languages to connect to the same data grid.

Class evolution

XDF allows for class evolution. We can evolve class definitions used in the data grid without affecting older applications using previous versions of the class. Classes can function together when one of the classes has fewer fields than the other class.

XDF implementation scenarios:

- Multiple versions of the same object class

- In this scenario, you have a map in a sales application that is used for tracking customers. This map has two different interfaces. One interface is for the web purchases. The second interface is for the phone purchases. In version 2 of this sales application, you decide to give discounts to web shoppers based on their purchasing habits. This discount is stored with the Customer object. The phone sales employees are still using version 1 of the application, which is unaware of the new discount field in the web version. You want Customer objects from version 2 of the application to work with Customer objects that were created with the version 1 application and vice versa.

- Multiple versions of a different object class

- In this scenario, you have a sales application that is written in Java that keeps a map of Customer objects. You also have another application that is written in C# and is used to manage the inventory in the warehouse and ship goods to customers. These classes are currently compatible based on the names of the classes, fields, and types. In your Java sales application, we want to add an option to the Customer record to associate the sales person with a customer account. However, you do not want to update the warehouse application to store this field because it is not needed in the warehouse.

- Multiple incompatible versions of the same class

- In this scenario, your sales and inventory applications both contain a Customer object. The inventory application uses an ID field that is a string and the sales application uses an ID field that is an integer. These types are not compatible. As a result, the objects are probably not stored in the same map. The objects must be handled by the XDF serialization and treated as two distinct types. While this scenario is not really class evolution, it is a consideration that must be part of your overall application design.

Determination for evolution

XDF attempts to evolve a class when the class names match and the field names do not have conflicting types. The ClassAlias and FieldAlias annotations are useful when we are trying to match classes between C# and Java applications where the names of the classes or fields are slightly different. We can put these annotations on either the Java or the C# application, or on both. However, the lookup for the class in the Java application can be less efficient than defining the ClassAlias on the C# application.

The effect of missing fields in serialized data

The constructor of the class is not invoked during deserialization, so any missing fields are assigned the default value for their type in that language. The application that adds new fields must be able to detect the missing fields and react when an older version of the class is retrieved.

Updating the data is the only way for older applications to keep the newer fields

An application might run a fetch operation and then update the map with an older version of the class, so the serialized value from the client is missing some fields. The server then merges the values and determines whether any fields in the original version are merged into the new record. If an application runs a fetch operation and then removes and inserts an entry, the fields from the original value are lost.
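The difference between an update and a remove-then-insert can be sketched with records represented as field maps. `FieldMerge` is hypothetical and much simpler than XDF: it keeps any stored field that the older client's class does not define, which matches the behavior described above but not XDF's actual merge algorithm (which, for example, does not merge array or collection contents).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical illustration of server-side field merging for class evolution; not XDF's real algorithm.
final class FieldMerge {
    // Update from an older client: fields its class does not define are kept from the stored record.
    static Map<String, Object> mergeUpdate(Map<String, Object> stored,
                                           Map<String, Object> update,
                                           Set<String> clientFields) {
        Map<String, Object> merged = new HashMap<>(update);
        for (Map.Entry<String, Object> e : stored.entrySet()) {
            if (!clientFields.contains(e.getKey())) {
                merged.put(e.getKey(), e.getValue()); // preserve the newer field
            }
        }
        return merged;
    }

    // Remove followed by insert: the stored record is ignored, so newer fields are lost.
    static Map<String, Object> removeThenInsert(Map<String, Object> stored, Map<String, Object> update) {
        return new HashMap<>(update);
    }
}
```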

Merging capabilities

Objects within an array or collection are not merged by XDF. It is not always clear whether an update to an array or collection is intended to change the elements of that array or the type. If a merge occurs based on positioning, when an entry in the array is moved, XDF might merge fields that are not intended to be associated. As a result, XDF does not attempt to merge the contents of arrays or collections. However, if you add an array in a newer version of a class definition, the array gets merged back into the previous version of the class.

IBM eXtremeMemory

IBM eXtremeMemory enables objects to be stored in native memory instead of the Java heap. By moving objects off the Java heap, we can avoid garbage collection pauses, leading to more consistent performance and predictable response times.

The JVM relies on usage heuristics to collect, compact, and expand process memory. The garbage collector completes these operations. However, running garbage collection has an associated cost. The cost of running garbage collection increases as the size of the Java heap and number of objects in the data grid increase. The JVM provides different heuristics for different use cases and goals: optimum throughput, optimum pause time, generational, balanced, and real-time garbage collection. No heuristic is perfect. A single heuristic cannot suit all possible configurations.

WXS uses data caching, with distributed maps that have entries with a well-known lifecycle. This lifecycle includes the following operations: GET, INSERT, DELETE, and UPDATE. By using these well-known map lifecycles, eXtremeMemory can manage memory usage for data grid objects in container servers more efficiently than the standard JVM garbage collector.

The following diagram shows how using eXtremeMemory leads to more consistent relative response times in the environment. As the relative response times reach the higher percentiles, the requests that are using eXtremeMemory have lower relative response times. The diagram shows the 95-100 percentiles.

Zones

Zones give you control over shard placement. Zones are user-defined logical groupings of physical servers. The following are examples of different types of zones:

- Different blade servers

- Chassis of blade servers

- Floors of a building

- Buildings

- Different geographical locations in a multiple data center environment

- Virtualized environment where many server instances, each with a unique IP address, run on the same physical server

Zones defined between data centers

The classic example and use case for zones is when you have two or more geographically dispersed data centers. Dispersed data centers spread the data grid over different locations for recovery from data center failure. For example, you might want to ensure that you have a full set of asynchronous replica shards for the data grid in a remote data center. With this strategy, we can recover from the failure of the local data center transparently, with no loss of data. Data centers themselves have high-speed, low-latency networks. However, communication between one data center and another has higher latency. Synchronous replicas are used in each data center, where the low latency minimizes the impact of replication on response times. Using asynchronous replication between data centers reduces the impact of the higher latency on response time, and the geographic distance provides availability in case of local data center failure.

For example, primary shards for the Chicago zone can have replicas in the London zone, and primary shards for the London zone can have replicas in the Chicago zone.

Three configuration settings in eXtreme Scale control shard placement:

- Set the deployment file

- Group containers

- Specify rules

Disable development mode

To disable development mode, and to activate the first eXtreme Scale shard placement policy, set the following attribute in the deployment XML file:

developmentMode="false"

Policy 1: Primary and replica shards for partition are placed in separate physical servers

With this policy, if a single physical server fails, no data is lost. This is the most efficient setting for production high availability.

Physical servers are identified by IP address. The IP address used by each container server that hosts shards is set with the -listenerHost parameter of the start script.

Policy 2: Primary and replica shards for the same partition are placed in separate zones

With this policy, we can control shard placement by defining zones. Choose the physical or logical boundary or grouping of interest, choose a unique zone name for each group, and start the container servers in each zone with the name of that zone. eXtreme Scale then places shards so that shards for the same partition are in separate zones.

Container servers are assigned to zones with the zone parameter on the start server script. In a WAS environment, zones are defined through node groups with a specific name format:

ReplicationZone<Zone>

We can extend the example of a data grid with one replica shard by deploying it across two data centers. Define each data center as an independent zone. Use a zone name of DC1 for the container servers in the first data center, and DC2 for the container servers in the second data center. With this policy, the primary and replica shards for each partition would be in different data centers. If a data center fails, no data is lost. For each partition, either its primary or replica shard is in the other data center.
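Both placement policies can be sketched as a single constraint: a partition's primary and replica must land on containers whose key differs, where the key is the IP address for Policy 1 and the zone name for Policy 2. This is a minimal sketch under simplifying assumptions, not the WXS placement algorithm, which also balances shards across servers; the `Container` and `ShardPlacementSketch` classes and the DC1/DC2 servers are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical model of a container server: its name, IP address, and zone.
final class Container {
    final String name;
    final String ip;
    final String zone;

    Container(String name, String ip, String zone) {
        this.name = name;
        this.ip = ip;
        this.zone = zone;
    }
}

// Hypothetical helper illustrating the constraint; not the WXS placement algorithm.
final class ShardPlacementSketch {
    // For each partition, picks a primary host round-robin, then scans forward for
    // the first container whose key differs from the primary's key. Use the IP
    // address as the key for Policy 1 and the zone for Policy 2.
    // Returns {primaryHost, replicaHost} pairs, one per partition.
    static List<Container[]> place(List<Container> containers, int partitions,
                                   Function<Container, String> key) {
        List<Container[]> result = new ArrayList<>();
        int n = containers.size();
        for (int p = 0; p < partitions; p++) {
            Container primary = containers.get(p % n);
            Container replica = null;
            for (int i = 1; i < n && replica == null; i++) {
                Container candidate = containers.get((p + i) % n);
                if (!key.apply(candidate).equals(key.apply(primary))) {
                    replica = candidate;
                }
            }
            if (replica == null) {
                // This sketch refuses rather than co-locating the two shards.
                throw new IllegalStateException("No valid replica host for partition " + p);
            }
            result.add(new Container[] {primary, replica});
        }
        return result;
    }

    public static void main(String[] args) {
        List<Container> servers = List.of(
            new Container("c1", "10.0.0.1", "DC1"),
            new Container("c2", "10.0.0.2", "DC1"),
            new Container("c3", "10.1.0.1", "DC2"),
            new Container("c4", "10.1.0.2", "DC2"));
        // Policy 2: key on the zone, so every partition spans DC1 and DC2.
        for (Container[] pair : place(servers, 4, c -> c.zone)) {
            System.out.println(pair[0].name + " (" + pair[0].zone + ") -> "
                + pair[1].name + " (" + pair[1].zone + ")");
        }
    }
}
```

Passing `c -> c.ip` instead applies Policy 1. On virtualized hosts, two VMs on the same machine have different IP addresses, so only a zone key that names the physical host keeps a primary and its replica on separate machines.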

Zone rules

The finest level of control over shard placement is achieved with zone rules, specified in the zoneMetadata element of the WXS deployment policy descriptor XML. A zone rule defines a set of zones in which shards are placed. A shardMapping element assigns a shard to a zone rule. The shard attribute of the shardMapping element specifies the shard type:

| Value | Shard type |
|---|---|
| P | Primary shard |
| S | Synchronous replica shards |
| A | Asynchronous replica shards |

If more than one synchronous or asynchronous replica exists, provide shardMapping elements of the appropriate shard type. The exclusivePlacement attribute of the zoneRule element determines the placement of shards:

| exclusivePlacement | Placement |
|---|---|
| true | Shards cannot be placed in the same zone as another shard from the same partition. To ensure that each shard can be in its own zone, define at least as many zones in the rule as there are shards. |
| false | Shards from the same partition can be placed in the same zone. |
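The exclusivePlacement semantics can be checked for a single partition with a few lines of code. This is a minimal sketch, assuming a hypothetical `ZoneRuleCheck` helper that receives one partition's placement as a map from shard label to zone name; it models the table above only, does not read the deployment policy XML, and the zone names ALPHA and BETA are illustrative.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical helper that models a zone rule's semantics; not a WXS API.
final class ZoneRuleCheck {
    // Returns true if every shard of one partition is in a zone allowed by the rule
    // and, when exclusivePlacement is true, no two shards share a zone.
    static boolean satisfiesRule(Map<String, String> shardToZone,
                                 Set<String> ruleZones,
                                 boolean exclusivePlacement) {
        Set<String> used = new HashSet<>();
        for (String zone : shardToZone.values()) {
            if (!ruleZones.contains(zone)) {
                return false; // shard placed outside the rule's zones
            }
            if (exclusivePlacement && !used.add(zone)) {
                return false; // another shard of this partition already uses the zone
            }
        }
        return true;
    }

    // With exclusivePlacement="true", each shard needs its own zone, so the rule
    // must list at least as many zones as the partition has shards.
    static boolean enoughZones(int shardsPerPartition, Set<String> ruleZones) {
        return ruleZones.size() >= shardsPerPartition;
    }

    public static void main(String[] args) {
        Set<String> zones = Set.of("ALPHA", "BETA");
        System.out.println(satisfiesRule(Map.of("P", "ALPHA", "S", "BETA"), zones, true));   // true
        System.out.println(satisfiesRule(Map.of("P", "ALPHA", "S", "ALPHA"), zones, true));  // false
        System.out.println(satisfiesRule(Map.of("P", "ALPHA", "S", "ALPHA"), zones, false)); // true
    }
}
```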

Shard placement strategies

Rolling upgrades

Consider a scenario in which we want to apply rolling upgrades to the physical servers, including maintenance that requires restarting the deployment. In this example, assume that you have a data grid spread across 20 physical servers, defined with one synchronous replica. You want to shut down 10 of the physical servers at a time for the maintenance. When you shut down groups of 10 physical servers, to ensure that no partition has both its primary and replica shards on the servers being shut down, define a third zone. Instead of two zones of 10 physical servers each, use three zones: two with seven physical servers and one with six. Spreading the data across more zones improves availability during failover.

Rather than defining another zone, the other approach is to add a replica.

Upgrade eXtreme Scale

When upgrading eXtreme Scale software in a rolling manner with data grids that contain live data, the catalog service software version must be greater than or equal to the container server software versions. Upgrade all the catalog servers first with a rolling strategy.

Change data model

A typical way to change the data model or schema of objects that are stored in the data grid is to:

- Start a new data grid with the new schema

- Copy the data from the old data grid to the new data grid

- Shut down the old data grid

Each of these steps is disruptive and results in downtime. To change the data model without downtime, store the object in one of these formats:

- XML as the value

- A blob made with Google Protocol Buffers (protobuf)

- JavaScript Object Notation (JSON)

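On the client side, write serializers that convert plain old Java objects (POJOs) to and from one of these formats. When the serializers ignore unknown fields and supply defaults for missing ones, old and new versions of a class can coexist in the data grid.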
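A client-side serializer of this kind can be quite small. This is a minimal sketch, assuming the "XML as the value" option and using the JDK's `java.util.Properties` XML format (`storeToXML` and `loadFromXML`) as the encoding; the `XmlValueCodec` and `Customer` classes and their fields are hypothetical. Because the reader looks fields up by name, a missing field keeps its default and an unknown field is ignored, so an older and a newer version of the class can read each other's values.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Properties;

// Hypothetical codec: stores a flat set of named fields as java.util.Properties XML.
final class XmlValueCodec {
    static String write(Properties props) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            props.storeToXML(out, null); // UTF-8 XML, no comment
            return out.toString(StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static Properties read(String xml) {
        try {
            Properties props = new Properties();
            props.loadFromXML(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            return props;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

// Hypothetical POJO, version 2: the "tier" field did not exist in version 1.
final class Customer {
    String name = "";
    String email = "";
    String tier = "standard"; // default for values written by version 1

    String toXml() {
        Properties props = new Properties();
        props.setProperty("name", name);
        props.setProperty("email", email);
        props.setProperty("tier", tier);
        return XmlValueCodec.write(props);
    }

    // Fields are looked up by name: missing ones keep their defaults and
    // unknown ones (written by another class version) are ignored.
    static Customer fromXml(String xml) {
        Properties props = XmlValueCodec.read(xml);
        Customer c = new Customer();
        c.name = props.getProperty("name", c.name);
        c.email = props.getProperty("email", c.email);
        c.tier = props.getProperty("tier", c.tier);
        return c;
    }

    public static void main(String[] args) {
        Customer c = new Customer();
        c.name = "Ann";
        c.email = "ann@example.com";
        Customer copy = fromXml(c.toXml());
        System.out.println(copy.name + " " + copy.tier); // Ann standard
    }
}
```

The same name-based, default-tolerant reading is what makes JSON or protobuf values evolvable; XML via `Properties` is used here only because it needs nothing beyond the JDK.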

Virtualization

eXtreme Scale ensures that two shards for the same partition are never placed on the same IP address, as described in Policy 1. When deploying on virtual images, such as VMware, many server instances, each with a unique IP address, can run on the same physical server, so Policy 1 alone cannot keep a primary and its replica on separate physical machines. To ensure that replicas are placed only on separate physical servers, group physical servers into zones, and use zone placement rules to keep primary and replica shards in separate zones.

Multiple buildings or data centers are often connected by slower networks with lower bandwidth and higher latency. The WXS catalog service organizes container servers into core groups that exchange heartbeats to detect container server failure. These core groups do not span zones. A leader within each core group pushes membership information to the catalog service. Before acting on a reported failure, the catalog service verifies it by heartbeating the container server in question. If the catalog service finds a false failure detection, it takes no action, and the core group partition heals quickly. The catalog service also heartbeats core group leaders periodically, at a slow rate, to handle the case of core group isolation.
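The verify-before-acting step for core group failure reports can be modeled in a few lines. This is a minimal sketch, not the WXS implementation: the `CatalogSketch` class is hypothetical, and a `Predicate<String>` stands in for the catalog service's direct heartbeat to a container server. It shows why a false report from a core group leader changes nothing.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.Predicate;

// Hypothetical model of the catalog service's handling of failure reports.
final class CatalogSketch {
    private final Set<String> liveContainers = new HashSet<>();
    private final Predicate<String> heartbeat; // true if the container answers a direct heartbeat

    CatalogSketch(Set<String> containers, Predicate<String> heartbeat) {
        liveContainers.addAll(containers);
        this.heartbeat = heartbeat;
    }

    // Called when a core group leader reports that a container has failed.
    // Returns true only if the failure was confirmed and acted on.
    boolean onFailureReport(String container) {
        if (!liveContainers.contains(container)) {
            return false; // unknown or already removed
        }
        if (heartbeat.test(container)) {
            return false; // false failure detection: take no action
        }
        liveContainers.remove(container); // confirmed; shard failover would start here
        return true;
    }

    Set<String> live() {
        return Set.copyOf(liveContainers);
    }

    public static void main(String[] args) {
        Set<String> actuallyDown = Set.of("c2");
        CatalogSketch catalog = new CatalogSketch(Set.of("c1", "c2", "c3"),
            c -> !actuallyDown.contains(c));
        System.out.println(catalog.onFailureReport("c1")); // false: c1 answers, report ignored
        System.out.println(catalog.onFailureReport("c2")); // true: failure confirmed
    }
}
```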

Evictors

Evictors remove data from the data grid. We can set either:

- A time-based evictor

- A pluggable evictor, specified on the BackingMap

Evictor types

A default time-to-live (TTL) evictor is created for every backing map. The TTL evictor supports the following types:

- None: Entries never expire and are never removed from the map.

- Creation time (CREATION_TIME ttlType): Entries are evicted when the time since creation equals the TimeToLive (TTL) value. If TTL is 10 seconds, the entry is automatically evicted ten seconds after it was inserted.

This evictor is best used when many entries are added to the cache that are needed only for a set amount of time. With this strategy, anything that is created is removed after the set amount of time.

The CREATION_TIME ttlType is useful in scenarios such as refreshing stock quotes every 20 minutes or less. Suppose a Web application obtains stock quotes, and getting the most recent quotes is not critical. In this case, the stock quotes are cached in a data grid for 20 minutes. After 20 minutes, the map entries expire and are evicted. Every twenty minutes or so, the data grid uses the Loader plug-in to refresh the data with data from the database. The database is updated every 20 minutes with the most recent stock quotes.

- Last access time (LAST_ACCESS_TIME ttlType): Entries are evicted depending on when they were last accessed, whether they were read or updated.

- Last update time (LAST_UPDATE_TIME ttlType): Entries are evicted depending on when they were last updated.

If we are using LAST_ACCESS_TIME or LAST_UPDATE_TIME, set the TTL value to a lower number than if we are using CREATION_TIME, because the TTL clock for an entry is reset every time the entry is accessed (LAST_ACCESS_TIME) or updated (LAST_UPDATE_TIME). If the TTL is 15 seconds and an entry that has existed for 14 seconds is then accessed, it does not expire for another 15 seconds. If you set the TTL value to a relatively high number, many entries might never be evicted. If you set it to something like 15 seconds, entries that are not accessed often can be removed.

LAST_ACCESS_TIME and LAST_UPDATE_TIME are useful in scenarios such as holding session data from a client, using a data grid map. Session data must be destroyed if the client does not use the session data for some period of time. For example, if an application's session data times out after 30 minutes of no activity by the client, using an evictor type of LAST_ACCESS_TIME or LAST_UPDATE_TIME with the TTL value set to 30 minutes is appropriate.
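The three TTL types differ only in which timestamp the expiry check uses. This is a minimal sketch, assuming a hypothetical `TtlMap` class and `TtlType` enum that mirror the ttlType names above; timestamps are passed in explicitly (in seconds) so the behavior is deterministic, and expiry is checked lazily when an entry is read. It models the semantics only and is not the WXS evictor, which might not evict an entry at the exact moment it expires.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical mirror of the ttlType values described above.
enum TtlType { NONE, CREATION_TIME, LAST_ACCESS_TIME, LAST_UPDATE_TIME }

// Hypothetical map with TTL semantics; not a WXS API.
final class TtlMap<K, V> {
    private static final class Entry<T> {
        T value;
        long created;
        long lastAccess;
        long lastUpdate;
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final TtlType type;
    private final long ttlSeconds;

    TtlMap(TtlType type, long ttlSeconds) {
        this.type = type;
        this.ttlSeconds = ttlSeconds;
    }

    void put(K key, V value, long now) {
        Entry<V> e = entries.get(key);
        if (e == null || isExpired(e, now)) {
            e = new Entry<>();
            e.created = now;
            entries.put(key, e);
        }
        e.value = value;
        e.lastAccess = now; // an update also counts as an access
        e.lastUpdate = now;
    }

    V get(K key, long now) {
        Entry<V> e = entries.get(key);
        if (e == null) {
            return null;
        }
        if (isExpired(e, now)) {
            entries.remove(key); // evict lazily on read
            return null;
        }
        e.lastAccess = now; // a read resets only the LAST_ACCESS_TIME clock
        return e.value;
    }

    private boolean isExpired(Entry<V> e, long now) {
        switch (type) {
            case CREATION_TIME:    return now - e.created >= ttlSeconds;
            case LAST_ACCESS_TIME: return now - e.lastAccess >= ttlSeconds;
            case LAST_UPDATE_TIME: return now - e.lastUpdate >= ttlSeconds;
            default:               return false; // NONE: never expires
        }
    }

    public static void main(String[] args) {
        TtlMap<String, String> sessions = new TtlMap<>(TtlType.LAST_ACCESS_TIME, 15);
        sessions.put("s1", "data", 0);
        System.out.println(sessions.get("s1", 14)); // data: accessed at 14 s, clock resets
        System.out.println(sessions.get("s1", 28)); // data: only 14 s since last access
        System.out.println(sessions.get("s1", 43)); // null: 15 s since last access
    }
}
```

The `main` method replays the 15-second example from the text: an access at 14 seconds postpones expiry by a full TTL period.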

You can also write your own evictors.

Pluggable evictor

Use an optional pluggable evictor to evict entries based on the number of entries in the BackingMap.

| Evictor | Behavior |
|---|---|
| LRUEvictor | Uses a least recently used (LRU) algorithm to decide which entries to evict when the BackingMap exceeds a maximum number of entries. |
| LFUEvictor | Uses a least frequently used (LFU) algorithm to decide which entries to evict when the BackingMap exceeds a maximum number of entries. |

The BackingMap notifies the evictor as entries are created, modified, or removed in a transaction. The evictor keeps track of these entries and chooses when to evict them.

A BackingMap instance has no configuration information for a maximum size. Instead, evictor properties are set to control the evictor behavior. Both the LRUEvictor and the LFUEvictor have a maximum size property that causes the evictor to begin evicting entries after the maximum size is exceeded. To minimize the impact on performance, the LRU and LFU evictors, like the TTL evictor, might not evict an entry immediately when the maximum number of entries is reached.
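Both eviction policies can be sketched with standard Java collections. This is a minimal sketch, assuming hypothetical `LruCache`, `LfuCache`, and `EvictorDemo` classes with a maximum-size property: the LRU variant uses `LinkedHashMap` in access order with its `removeEldestEntry` hook, and the LFU variant counts reads and writes per key and evicts the lowest count, breaking ties by insertion order. Unlike the WXS evictors, these evict synchronously on the put that exceeds the limit.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical LRU cache: LinkedHashMap in access order drops the least recently used entry.
final class LruCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxSize;

    LruCache(int maxSize) {
        super(16, 0.75f, true); // true = iterate in access order
        this.maxSize = maxSize;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxSize; // evict once the maximum size is exceeded
    }
}

// Hypothetical LFU cache: evicts the key with the lowest use count (ties: oldest insertion).
final class LfuCache<K, V> {
    private final int maxSize;
    private final Map<K, V> values = new HashMap<>();
    private final Map<K, Integer> counts = new LinkedHashMap<>(); // keeps insertion order for ties

    LfuCache(int maxSize) {
        this.maxSize = maxSize;
    }

    V get(K key) {
        V v = values.get(key);
        if (v != null) {
            counts.merge(key, 1, Integer::sum); // a read is a use
        }
        return v;
    }

    void put(K key, V value) {
        if (!values.containsKey(key) && values.size() >= maxSize) {
            K victim = null;
            int min = Integer.MAX_VALUE;
            for (Map.Entry<K, Integer> e : counts.entrySet()) {
                if (e.getValue() < min) {
                    min = e.getValue();
                    victim = e.getKey();
                }
            }
            values.remove(victim);
            counts.remove(victim);
        }
        values.put(key, value);
        counts.merge(key, 1, Integer::sum); // a write is also a use
    }

    boolean containsKey(K key) {
        return values.containsKey(key);
    }
}

final class EvictorDemo {
    public static void main(String[] args) {
        LruCache<String, Integer> lru = new LruCache<>(2);
        lru.put("a", 1);
        lru.put("b", 2);
        lru.get("a");    // "a" becomes most recently used
        lru.put("c", 3); // exceeds the maximum size: evicts "b"
        System.out.println(lru.keySet()); // [a, c]
    }
}
```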

Memory-based eviction

Memory-based eviction is only supported on JEE v5 or later.

All built-in evictors support memory-based eviction, which is enabled by setting the evictionTriggers attribute of the BackingMap to MEMORY_USAGE_THRESHOLD.

Memory-based eviction is based on heap usage threshold. When memory-based eviction is enabled on BackingMap and the BackingMap has any built-in evictor, the usage threshold is set to a default percentage of total memory if the threshold has not been previously set.

When using memory-based eviction, configure the garbage collection threshold to the same value as the target heap utilization. If the memory-based eviction threshold is set at 50 percent and the garbage collection threshold is at the default 70 percent level, the heap utilization can go as high as 70 percent because memory-based eviction is only triggered after a garbage collection cycle.

The memory-based eviction algorithm used by WXS is sensitive to the behavior of the garbage collection algorithm in use. The best algorithm for memory-based eviction is the IBM default throughput collector. Generational garbage collection algorithms can cause undesired behavior, so do not use them with memory-based eviction.

To change the default usage threshold percentage for eXtreme Scale server processes, set the memoryThresholdPercentage property in the container and server property files. To set the target usage threshold on a client process, use the MemoryPoolMXBean.

During runtime, if the memory usage exceeds the target usage threshold, memory-based evictors start evicting entries and try to keep memory usage below the target usage threshold. However, no guarantee exists that the eviction speed is fast enough to avoid a potential out of memory error if the system runtime continues to quickly consume memory.
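For a client process, the JDK's `java.lang.management.MemoryPoolMXBean` can set a target usage threshold. This is a minimal sketch, assuming the threshold is expressed as a percentage of each heap pool's maximum size; the `HeapThreshold` class and the 70 percent value are illustrative. It only configures the JVM threshold, which a notification listener can react to, and does not evict anything by itself.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper that sets heap pool usage thresholds from a percentage.
final class HeapThreshold {
    // Converts a percentage of a pool's maximum size into a byte threshold.
    static long thresholdBytes(long maxBytes, int percent) {
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 1 and 100");
        }
        return maxBytes / 100 * percent; // divide first to avoid overflow
    }

    // Sets the usage threshold on every heap pool that supports one and has a
    // defined maximum. Returns how many pools were configured.
    static int applyToHeapPools(int percent) {
        int configured = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage == null || pool.getType() != MemoryType.HEAP
                    || !pool.isUsageThresholdSupported()) {
                continue;
            }
            long max = usage.getMax(); // -1 if the maximum is undefined
            if (max > 0) {
                pool.setUsageThreshold(thresholdBytes(max, percent));
                configured++;
            }
        }
        return configured;
    }

    public static void main(String[] args) {
        System.out.println("Configured heap pools: " + applyToHeapPools(70));
    }
}
```

Because memory-based eviction is triggered only after a garbage collection cycle, the percentage chosen here is best kept aligned with the garbage collection threshold, as described above.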

OSGi framework overview

WXS OSGi support allows us to deploy WXS v8.6 in the Eclipse Equinox OSGi framework.

With the dynamic update capability that the OSGi framework provides, we can update the plug-in classes without restarting the JVM. These plug-ins are exported by user bundles as services. WXS accesses these services by looking them up in the OSGi service registry.

eXtreme Scale containers can be configured to start more dynamically by using either of the following:

- OSGi configuration admin service

- OSGi Blueprint

To deploy a new data grid with its placement strategy, create an OSGi configuration or deploy a bundle with WXS descriptor XML files. With OSGi support, application bundles containing WXS configuration data can be installed, started, stopped, updated, and uninstalled without restarting the whole system. With this capability, we can upgrade the application without disrupting the data grid.

Plug-in beans and services can be configured with custom shard scopes, allowing sophisticated integration options with other services running in the data grid. Each plug-in can use OSGi Blueprint rankings to verify that every activated instance of the plug-in is at the correct version. An OSGi managed bean (MBean) and the xscmd utility are provided, allowing you to query the WXS plug-in OSGi services and their rankings.

This capability allows administrators to quickly recognize potential configuration and administration errors and to upgrade the plug-in service rankings in use by eXtreme Scale.
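Rankings matter because of the standard OSGi selection rule: among several registrations of the same service, the one with the highest `service.ranking` wins, and ties go to the lowest `service.id` (the earliest registered). This is a minimal sketch of that rule without an OSGi framework, assuming eXtreme Scale follows the standard rule when it looks up plug-in services; the `Registration` and `RankingSketch` classes are hypothetical. It illustrates why raising a plug-in's ranking switches which implementation is in use.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical stand-in for an OSGi service registration.
final class Registration {
    final long serviceId; // service.id: assigned by the framework in registration order
    final int ranking;    // service.ranking: defaults to 0 in OSGi
    final String impl;

    Registration(long serviceId, int ranking, String impl) {
        this.serviceId = serviceId;
        this.ranking = ranking;
        this.impl = impl;
    }
}

final class RankingSketch {
    // Highest ranking wins; on a tie, the lowest service ID (earliest registration) wins.
    static Optional<Registration> select(List<Registration> regs) {
        Comparator<Registration> order =
            Comparator.comparingInt((Registration r) -> r.ranking)
                      .thenComparing(Comparator.comparingLong((Registration r) -> r.serviceId).reversed());
        return regs.stream().max(order);
    }

    public static void main(String[] args) {
        List<Registration> regs = List.of(
            new Registration(1, 0, "plugin-v1"),
            new Registration(2, 5, "plugin-v2"),
            new Registration(3, 5, "plugin-v3"));
        System.out.println(select(regs).map(r -> r.impl).orElse("none")); // plugin-v2
    }
}
```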

OSGi bundles

To interact with and deploy plug-ins in the OSGi framework, use bundles. In the OSGi service platform, a bundle is a JAR file that contains Java code, resources, and a manifest that describes the bundle and its dependencies. The bundle is the unit of deployment for an application. The eXtreme Scale product supports the following bundle types:

- Server bundle: The server bundle is the objectgrid.jar file. It is installed with the WXS stand-alone server installation and is required for running eXtreme Scale servers; it can also be used for running eXtreme Scale clients or local, in-memory caches. The bundle ID for the objectgrid.jar file is com.ibm.websphere.xs.server_<version>, where the version is in the format <Version>.<Release>.<Modification>. For example, the server bundle for eXtreme Scale version 7.1.1 is com.ibm.websphere.xs.server_7.1.1.

- Client bundle: The client bundle is the ogclient.jar file. It is installed with the WXS stand-alone and client installations and is used to run eXtreme Scale clients or local, in-memory caches. The bundle ID for the ogclient.jar file is com.ibm.websphere.xs.client_<version>, where the version is in the format <Version>.<Release>.<Modification>. For example, the client bundle for eXtreme Scale version 7.1.1 is com.ibm.websphere.xs.client_7.1.1.

Limitations

We cannot restart the WXS bundle because we cannot restart the object request broker (ORB) or eXtremeIO (XIO). To restart the WXS server, restart the OSGi framework.

Cache integration overview

WXS gets its versatility and reliability from applying caching concepts to optimize how data is persisted and retrieved in virtually any deployment environment.

Spring cache provider

Spring Framework Version 3.1 introduced a new cache abstraction. With this abstraction, we can transparently add caching to an existing Spring application. WXS can be used as the cache provider for this cache abstraction.

Liberty profile

The Liberty profile is a highly composable, fast-to-start, dynamic application server runtime environment.

You install the Liberty profile when you install WXS with WebSphere Application Server Version 8.5. Because the Liberty profile does not include a Java runtime environment (JRE), you must install a JRE provided by either Oracle or IBM.

This server supports two models of application deployment:

- Deploy an application by dropping it into the dropins directory.

- Deploy an application by adding it to the server configuration.

The Liberty profile supports a subset of the following parts of the full WebSphere Application Server programming model:

- Web applications

- OSGi applications

- JPA

Associated services such as transactions and security are only supported as far as is required by these application types and by JPA.

Features are the units of capability by which you control the pieces of the runtime environment that are loaded into a particular server. The Liberty profile includes the following main features:

- Bean validation

- Blueprint

- Java API for RESTful Web Services

- Java Database Connectivity (JDBC)

- Java Naming and Directory Interface

- JPA

- JavaServer Faces (JSF)

- JavaServer Pages (JSP)

- Lightweight Directory Access Protocol (LDAP)

- Local connector (for JMX clients)

- Monitoring

- OSGi JPA (JPA support for OSGi applications)

- Remote connector (for JMX clients)

- SSL

- Security

- Servlet

- Session persistence

- Transaction

- Web application bundle (WAB)

- z/OS security

- z/OS transaction management

- z/OS workload management

We can work with the runtime environment directly or by using the WebSphere Application Server Developer Tools for Eclipse.

On distributed platforms, the Liberty profile provides both a development and an operations environment. On the Mac, it provides a development environment.

On z/OS systems, the Liberty profile provides an operations environment. We can work natively with this environment using the MVS console. For application development, consider using the Eclipse-based developer tools on a separate distributed system, on Mac OS, or in a Linux shell on z/OS.

Run the Liberty profile with a third-party JRE

When you run WXS with the Liberty profile on a JRE provided by Oracle, take the following special considerations into account:

- Classloader deadlock: You might experience a classloader deadlock in BundleLoader logic. This deadlock has been worked around with the following JVM_ARGS settings; if you experience it, add these arguments:

export JVM_ARGS="$JVM_ARGS -XX:+UnlockDiagnosticVMOptions -XX:+UnsyncloadClass"

- IBM ORB: WXS requires the IBM ORB, which is included in a WebSphere Application Server installation but not in the Liberty profile. Use the java.endorsed.dirs Java system property to add the directory that contains the IBM ORB JAR files to the endorsed directories. The IBM ORB JAR files are included in the WXS installation in the wlp\wxs\lib\endorsed directory.

WebSphere eXtreme Scale server features for the Liberty profile

Features are the units of capability by which you control the pieces of the runtime environment that are loaded into a particular server.

The following list contains information about the main available features. Including a feature in the configuration might cause one or more other features to be loaded automatically. Each feature includes a brief description and an example of how the feature is declared.

Server feature

The server feature contains the capability for running a WXS server, both catalog and container. Add the server feature when we want to run a catalog server in the Liberty profile or when we want to deploy a grid application into the Liberty profile.

<wlp_install_root>/usr/server/wxsserver/server.xml file:

<server description="WXS Server">
    <featureManager>
        <feature>eXtremeScale.server-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.server.config />
</server>

Client feature

The client feature contains most of the programming model for eXtreme Scale. Add the client feature when you have an application running in the Liberty profile that is going to use eXtreme Scale APIs.

<wlp_install_root>/usr/server/wxsclient/server.xml file:

<server description="WXS client">
    <featureManager>
        <feature>eXtremeScale.client-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.client.config />
</server>

Web feature

Deprecated. To replicate HTTP session data for fault tolerance, use the webApp feature instead.

The web feature contains the capability to extend the Liberty profile web application. Add the web feature when we want to replicate HTTP session data for fault tolerance.

<wlp_install_root>/usr/server/wxsweb/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.web-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.web.config />
</server>

WebApp feature

The webApp feature contains the capability to extend the Liberty profile web application. Add the webApp feature when we want to replicate HTTP session data for fault tolerance.

<wlp_install_root>/usr/server/wxswebapp/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.webApp-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.webapp.config />
</server>

WebGrid feature

A Liberty profile server can host a data grid that stores HTTP session data replicated from applications for fault tolerance.

<wlp_install_root>/usr/server/wxswebgrid/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.webGrid-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.webgrid.config />
</server>

Dynamic cache feature

A Liberty profile server can host a data grid that caches data for applications that have dynamic cache enabled.

<wlp_install_root>/usr/server/wxsweb/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.dynacacheGrid-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.xsDynacacheGrid.config />
</server>

JPA feature

Use the JPA feature for the applications that use JPA in the Liberty profile.

<wlp_install_root>/usr/server/wxsjpa/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.jpa-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.jpa.config />
</server>

REST feature

Use the REST feature to access simple data grids hosted by a collective in the Liberty profile.

<wlp_install_root>/usr/server/wxsrest/server.xml file:

<server description="WXS enabled Web Server">
    <featureManager>
        <feature>eXtremeScale.rest-1.1</feature>
    </featureManager>
    <com.ibm.ws.xs.rest.config />
</server>

OpenJPA level 2 (L2) and Hibernate cache plug-in

WXS includes JPA L2 cache plug-ins for both OpenJPA and Hibernate JPA providers. When you use one of these plug-ins, the application uses the JPA API. A data grid is introduced between the application and the database, improving response times.

Using WXS as an L2 cache provider increases performance when we are reading and querying data and reduces load to the database. WXS has advantages over built-in cache implementations because the cache is automatically replicated between all processes. When one client caches a value, all other clients are able to use the cached value that is locally in-memory.

We can configure the topology and properties for the L2 cache provider in the persistence.xml file.

The JPA L2 cache plug-in requires an application that uses the JPA APIs. To use WXS APIs to access a JPA data source, use the JPA loader.

JPA L2 cache topology considerations

The following factors affect which type of topology to configure:

- How much data do you expect to be cached?

  - If the data can fit into a single JVM heap, use the embedded topology or the intra-domain topology.

  - If the data cannot fit into a single JVM heap, use the embedded, partitioned topology or the remote topology.

- What is the expected read-to-write ratio?

  The read-to-write ratio affects the performance of the L2 cache. Each topology handles read and write operations differently:

  | Topology | Read operations | Write operations |
  |---|---|---|
  | Embedded | Local | Remote |
  | Intra-domain | Local | Local |
  | Embedded, partitioned | Remote (partitioned) | Remote (partitioned) |
  | Remote | Remote | Remote |

  Applications that are mostly read-only should use the embedded and intra-domain topologies when possible. Applications that do more writing should use the intra-domain topology.

- What percentage of data is queried versus found by key?

  When enabled, query operations make use of the JPA query cache. Enable the JPA query cache only for applications with high read-to-write ratios, for example when we are approaching 99% read operations. If you use the JPA query cache, use the embedded topology or the intra-domain topology.

  The find-by-key operation fetches a target entity if the target entity does not have any relationships. If the target entity has relationships with the EAGER fetch type, these relationships are fetched along with the target entity. In the JPA data cache, fetching these relationships causes a few cache hits to get all the relationship data.

- What is the tolerated staleness level of the data?

  In a system with more than one JVM, data replication latency exists for write operations. The goal of the cache is to eventually maintain a synchronized data view across all JVMs. When using the intra-domain topology, a data replication delay exists for write operations. Applications using this topology must be able to tolerate stale reads and simultaneous writes that might overwrite data.
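The questions above can be condensed into a small decision helper. This is a minimal sketch of one reading of the guidance in this section, not a WXS API: the `Topology` enum, the `TopologyAdvisor` class, and its boolean inputs are hypothetical, and the 0.99 read-ratio cutoff for the query cache is taken from the "approaching 99% read operations" guidance.

```java
// Hypothetical topology labels; these are not the ObjectGridType property values.
enum Topology { INTRA_DOMAIN, EMBEDDED, EMBEDDED_PARTITIONED, REMOTE }

// Hypothetical helper encoding the topology questions above.
final class TopologyAdvisor {
    // fitsInOneHeap: the cached data fits in a single JVM heap.
    // readRatio: fraction of cache operations that are reads (0.0 to 1.0).
    // useQueryCache: the application wants the JPA query cache.
    // toleratesStaleWrites: stale reads and overwriting concurrent writes are acceptable.
    // keepAppHeapFree: cached data must stay out of the application processes.
    static Topology choose(boolean fitsInOneHeap, double readRatio, boolean useQueryCache,
                           boolean toleratesStaleWrites, boolean keepAppHeapFree) {
        if (useQueryCache && readRatio < 0.99) {
            throw new IllegalArgumentException("query cache only for read ratios near 99%");
        }
        if (fitsInOneHeap) {
            // Intra-domain gives local reads and writes, but concurrent writes can
            // overwrite each other, so fall back to embedded when that is unacceptable.
            return toleratesStaleWrites ? Topology.INTRA_DOMAIN : Topology.EMBEDDED;
        }
        if (useQueryCache) {
            throw new IllegalArgumentException("query cache needs embedded or intra-domain");
        }
        // Too large for one heap: partition it, or move it out of the application.
        return keepAppHeapFree ? Topology.REMOTE : Topology.EMBEDDED_PARTITIONED;
    }

    public static void main(String[] args) {
        System.out.println(choose(true, 0.95, false, true, false));  // INTRA_DOMAIN
        System.out.println(choose(false, 0.80, false, true, true));  // REMOTE
    }
}
```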

Intra-domain topology

With an intra-domain topology, primary shards are placed on every container server in the topology. These primary shards contain the full set of data for the partition, and any of them can complete cache write operations. This configuration eliminates the bottleneck in the embedded topology, where all cache write operations must go through a single primary shard.

In an intra-domain topology, no replica shards are created, even if you have defined replicas in the configuration files. Each redundant primary shard contains a full copy of the data, so each primary shard can also be considered as a replica shard. This configuration uses a single partition, similar to the embedded topology.

Related JPA cache configuration properties for the intra-domain topology:

- ObjectGridName=objectgrid_name,ObjectGridType=EMBEDDED,PlacementScope=CONTAINER_SCOPE,PlacementScopeTopology=HUB|RING

Advantages:

- Cache reads and updates are local.

- Simple to configure.

Limitations:

- This topology is best suited for when the container servers can contain the entire set of partition data.

- Replica shards, even if they are configured, are never placed, because every container server hosts a primary shard. However, all the primary shards replicate with one another, so these primary shards become replicas of each other.

Embedded topology

Consider using an intra-domain topology for the best performance.

An embedded topology creates a container server within the process space of each application. OpenJPA and Hibernate read the in-memory copy of the cache directly and write to all of the other copies. We can improve the write performance by using asynchronous replication. This default topology performs best when the amount of cached data is small enough to fit in a single process. With an embedded topology, create a single partition for the data.

Related JPA cache configuration properties for the embedded topology:

ObjectGridName=objectgrid_name,ObjectGridType=EMBEDDED,MaxNumberOfReplicas=num_replicas,ReplicaMode=SYNC | ASYNC | NONE

Advantages:

- All cache reads are fast, local accesses.

- Simple to configure.

Limitations:

- Amount of data is limited to the size of the process.

- All cache updates are sent through one primary shard, which creates a bottleneck.

Embedded, partitioned topology

Consider using an intra-domain topology for the best performance.

Do not use the JPA query cache with an embedded partitioned topology. The query cache stores query results that are a collection of entity keys. The query cache fetches all entity data from the data cache. Because the data cache is divided up between multiple processes, these additional calls can negate the benefits of the L2 cache.

When the cached data is too large to fit in a single process, we can use the embedded, partitioned topology. This topology divides the data over multiple processes. The data is divided between the primary shards, so each primary shard contains a subset of the data. We can still use this option when database latency is high.

Related JPA cache configuration properties for the embedded, partitioned topology:

ObjectGridName=objectgrid_name,ObjectGridType=EMBEDDED_PARTITION,ReplicaMode=SYNC | ASYNC | NONE, NumberOfPartitions=num_partitions,ReplicaReadEnabled=TRUE | FALSE

Advantages:

- Stores large amounts of data.

- Simple to configure.

- Cache updates are spread over multiple processes.

Limitation:

- Most cache reads and updates are remote.

For example, to cache 10 GB of data with a maximum of 1 GB per JVM, 10 JVMs are required, so the number of partitions must be set to 10 or more. Ideally, set the number of partitions to a prime number such that each shard stores a reasonable amount of data. Usually, the numberOfPartitions setting is equal to the number of JVMs, so that each JVM stores one partition. If you enable replication, you must increase the number of JVMs in the system. Otherwise, each JVM also stores one replica partition, which consumes as much memory as a primary partition.
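The sizing arithmetic in this paragraph can be sketched as a few helper functions. This is a minimal sketch, assuming that each replica needs as much memory as its primary and reading the prime-number advice as "the smallest prime not below the required JVM count"; the `PartitionSizing` class is hypothetical.

```java
// Hypothetical sizing helpers for the worked example above.
final class PartitionSizing {
    // JVMs needed to hold dataGb of primaries plus 'replicas' full copies, at perJvmGb each.
    static int jvmsNeeded(double dataGb, double perJvmGb, int replicas) {
        return (int) Math.ceil(dataGb * (1 + replicas) / perJvmGb);
    }

    static boolean isPrime(int n) {
        if (n < 2) {
            return false;
        }
        for (int d = 2; (long) d * d <= n; d++) {
            if (n % d == 0) {
                return false;
            }
        }
        return true;
    }

    // Smallest prime partition count that is at least minPartitions.
    static int primePartitions(int minPartitions) {
        int n = Math.max(2, minPartitions);
        while (!isPrime(n)) {
            n++;
        }
        return n;
    }

    public static void main(String[] args) {
        int jvms = jvmsNeeded(10, 1, 0);            // 10 GB at 1 GB per JVM
        System.out.println(jvms);                   // 10
        System.out.println(primePartitions(jvms));  // 11
        System.out.println(jvmsNeeded(10, 1, 1));   // 20 with one replica
    }
}
```

With one replica the memory requirement doubles, which is why enabling replication without adding JVMs leaves each JVM holding a replica partition as large as its primary.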

Read about Sizing memory and partition count calculation to maximize the performance of your chosen configuration.

For example, in a system with four JVMs and a numberOfPartitions setting of 4, each JVM hosts a primary partition. A read operation has a 25 percent chance of fetching data from a locally available partition, which is much faster than getting data from a remote JVM. If a read operation, such as running a query, needs to fetch a collection of data that involves the four partitions evenly, 75 percent of the calls are remote and 25 percent are local.

If the ReplicaMode setting is SYNC or ASYNC and the ReplicaReadEnabled setting is true, four replica partitions are created and spread across the four JVMs, so each JVM hosts one primary partition and one replica partition. The chance that a read operation runs locally increases to 50 percent, and a read operation that fetches a collection of data involving the four partitions evenly makes 50 percent remote calls and 50 percent local calls. Local calls are much faster than remote calls, so performance drops whenever remote calls occur.
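The percentages in the four-JVM example follow from a simple ratio. This is a minimal sketch, assuming uniformly distributed reads, one primary partition per JVM, and, when replica reads are enabled, exactly one readable replica per partition on a different JVM, so each partition is readable locally on one or two of the N JVMs; `LocalReadEstimate` is a hypothetical helper.

```java
// Hypothetical estimate of the fraction of reads served by a local partition.
final class LocalReadEstimate {
    // With one primary per JVM, a uniformly distributed read is local with
    // probability 1/N; a readable replica on another JVM doubles that to 2/N.
    static double localFraction(int jvms, boolean replicaReads) {
        int copiesPerPartition = replicaReads ? 2 : 1;
        return Math.min(1.0, (double) copiesPerPartition / jvms);
    }

    public static void main(String[] args) {
        System.out.println(localFraction(4, false)); // 0.25
        System.out.println(localFraction(4, true));  // 0.5
    }
}
```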

Remote topology

CAUTION:

Do not use the JPA query cache with a remote topology. The query cache stores query results that are a collection of entity keys. The query cache fetches all entity data from the data cache. Because the data cache is remote, these additional calls can negate the benefits of the L2 cache.

Consider using an intra-domain topology for the best performance.

A remote topology stores all of the cached data in one or more separate processes, reducing memory use of the application processes. We can distribute the data over separate processes by deploying a partitioned, replicated eXtreme Scale data grid. Unlike the embedded and embedded, partitioned configurations described in the previous sections, the remote data grid must be managed independently of the application and the JPA provider.

Related JPA cache configuration properties for the remote topology:

ObjectGridName=objectgrid_name,ObjectGridType=REMOTE

The REMOTE ObjectGrid type does not require any property settings because the ObjectGrid and deployment policy are defined separately from the JPA application. The JPA cache plug-in connects remotely to an existing remote ObjectGrid.

Because all interaction with the ObjectGrid is remote, this topology has the slowest performance among all ObjectGrid types.

Advantages:

- Stores large amounts of data.

- Application process is free of cached data.

- Cache updates are spread over multiple processes.

- Flexible configuration options.

Limitation:

- All cache reads and updates are remote.

HTTP session management

The session replication manager that is shipped with WXS can work with the default session manager in WebSphere Application Server. Session data is replicated from one process to another process to support user session data high availability.

Features

The session manager has been designed so that it can run in any Java EE 6 or later container. Because the session manager does not have any dependencies on WebSphere APIs, it can support various versions of WebSphere Application Server, as well as application server environments from other vendors.

The HTTP session manager provides session replication capabilities for an associated application. The session replication manager works with the session manager of the web container. Together, the session manager and the web container create HTTP sessions and manage the life cycles of the HTTP sessions that are associated with the application. These life cycle management activities include invalidating sessions, either after a timeout or through an explicit servlet or JavaServer Pages (JSP) call, and invoking the session listeners that are associated with the session or the web application.

The session manager persists its sessions in a fully replicated, clustered, and partitioned data grid. Using the WXS session manager therefore provides HTTP session failover support when application servers are shut down or end unexpectedly. The session manager can also work in environments that do not support affinity, such as when a load balancer tier that sprays requests to the application server tier does not enforce affinity.

Usage scenarios

The session manager can be used in the following scenarios:

- In environments that use application servers running different versions of WebSphere Application Server, such as in a migration scenario.

- In deployments that use application servers from different vendors. For example, an application might be developed on open source application servers and hosted on WebSphere Application Server, or it might be promoted from staging to production. Seamless migration between these application server versions is possible while all HTTP sessions are live and being serviced.

- In environments that require sessions to be persisted with higher quality of service (QoS) levels. Session availability during server failover is better guaranteed than with the default WebSphere Application Server QoS levels.

- In an environment where session affinity cannot be guaranteed, or environments in which affinity is maintained by a vendor load balancer. With a vendor load balancer, the affinity mechanism must be customized to that load balancer.

- In any environment where the processing required for session management and storage should be offloaded to an external Java process.

- In multiple cells to enable session failover between cells.

- In multiple data centers or multiple zones.

How the session manager works

The session replication manager uses a session listener to listen for changes to session data. The session replication manager persists the session data into an ObjectGrid instance, either locally or remotely. We can add the session listener and servlet filter to every web module in the application with tooling that ships with WXS. We can also manually add these listeners and filters to the web deployment descriptor of the application.
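The listener-based flow can be sketched in plain Java. This is a minimal illustration under stated assumptions, not the WXS implementation. `SessionGrid` is a hypothetical stand-in for the ObjectGrid map that holds session data, and `ReplicatingSessionListener` borrows the method names of `javax.servlet.http.HttpSessionAttributeListener` (`attributeAdded`, `attributeReplaced`, `attributeRemoved`) but takes plain arguments instead of an `HttpSessionBindingEvent`.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical stand-in for the grid map that holds session data, shared by all servers. */
final class SessionGrid {
    // session ID -> (attribute name -> attribute value)
    private final Map<String, Map<String, Serializable>> sessions = new ConcurrentHashMap<>();

    void putAttribute(String sessionId, String name, Serializable value) {
        sessions.computeIfAbsent(sessionId, id -> new ConcurrentHashMap<>()).put(name, value);
    }

    void removeAttribute(String sessionId, String name) {
        Map<String, Serializable> attributes = sessions.get(sessionId);
        if (attributes != null) {
            attributes.remove(name);
        }
    }

    /** Returns a copy of the session's attributes, for example on a server taking over after a failure. */
    Map<String, Serializable> load(String sessionId) {
        return new HashMap<>(sessions.getOrDefault(sessionId, Map.of()));
    }
}

/** Hypothetical listener that mirrors every attribute change of a session into the grid. */
final class ReplicatingSessionListener {
    private final SessionGrid grid;

    ReplicatingSessionListener(SessionGrid grid) { this.grid = grid; }

    void attributeAdded(String sessionId, String name, Serializable value) {
        grid.putAttribute(sessionId, name, value);
    }

    void attributeReplaced(String sessionId, String name, Serializable value) {
        grid.putAttribute(sessionId, name, value);
    }

    void attributeRemoved(String sessionId, String name) {
        grid.removeAttribute(sessionId, name);
    }
}
```

Because every server writes to the same grid, another server can rebuild the session from the grid after the original server fails.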

This session replication manager works with the session manager of each vendor's web container to replicate session data across JVMs. When the original server fails, users can retrieve their session data from other servers.

Deployment topologies

The session manager can be configured using two different dynamic deployment scenarios:

- Embedded, network attached eXtreme Scale container servers

  In this scenario, the WXS servers are collocated in the same processes as the servlets. The session manager can communicate directly with the local ObjectGrid instance, avoiding costly network delays. This scenario is preferable when the application runs with session affinity and performance is critical.

- Remote, network attached eXtreme Scale container servers

  In this scenario, the WXS servers run in processes that are separate from the processes in which the servlets run. The session manager communicates with a remote eXtreme Scale server grid. This scenario is preferable when the web container tier does not have the memory to store the session data. The session data is offloaded to a separate tier, which lowers memory usage on the web container tier at the cost of higher latency, because the data is in a remote location.

Generic embedded container startup

eXtreme Scale automatically starts an embedded ObjectGrid container inside any application-server process when the web container initializes the session listener or servlet filter, if the objectGridType property is set to EMBEDDED.

You are not required to package an ObjectGrid.xml file and objectGridDeployment.xml file into your web application WAR or EAR file. The default ObjectGrid.xml and objectGridDeployment.xml files are packaged in the product JAR file. Dynamic maps are created for various web application contexts by default. Static eXtreme Scale maps continue to be supported.

This approach for starting embedded ObjectGrid containers applies to any type of application server. The approaches involving a WebSphere Application Server component or WebSphere Application Server Community Edition GBean are deprecated.

Dynamic cache provider overview

The WebSphere Application Server provides a Dynamic Cache service that is available to deployed Java EE applications. This service is used to cache data such as output from servlets, JSPs, or commands, as well as object data programmatically specified within an enterprise application using the DistributedMap APIs.

Initially, the only service provider for the Dynamic Cache service was the default dynamic cache engine that is built into WebSphere Application Server. Today customers can also specify WXS as the cache provider for any given cache instance. By setting up this capability, we can enable applications that use the Dynamic Cache service to use the features and performance capabilities of WXS.

We can install and configure the dynamic cache provider

Deciding how to use WXS

The available features in WXS significantly increase the distributed capabilities of the Dynamic Cache service beyond what is offered by the default dynamic cache provider and the data replication service (DRS). With eXtreme Scale, we can create caches that are truly distributed between multiple servers, rather than just replicated and synchronized between the servers. Also, eXtreme Scale caches are transactional and highly available, ensuring that each server sees the same contents for the dynamic cache service. WXS also offers a higher quality of service for cache replication than the replication that DRS provides.

However, these advantages do not mean that the WXS dynamic cache provider is the right choice for every application. Use the decision trees and feature comparison matrix below to determine what technology fits the application best.

Decision tree for migrating existing dynamic cache applications

Decision tree for choosing a cache provider for new applications

Feature comparison

| Feature | Default dynamic cache (DynaCache) | WXS dynamic cache provider | WXS APIs |
|---|---|---|---|
| Local, in-memory caching | Yes | Via near cache | Via near cache |
| Distributed caching | Via DRS | Yes | Yes |
| Linearly scalable | No | Yes | Yes |
| Reliable replication (synchronous) | No | Yes | Yes |
| Disk overflow | Yes | N/A | N/A |
| Eviction | LRU/TTL/heap-based | LRU/TTL (per partition) | LRU/TTL (per partition) |
| Invalidation | Yes | Yes | Yes |
| Relationships | Dependency ID and template ID relationships | Yes | No (other relationships are possible) |
| Non-key lookups | No | No | Via query and index |
| Back-end integration | No | No | Via Loaders |
| Transactional | No | Yes | Yes |
| Key-based storage | Yes | Yes | Yes |
| Events and listeners | Yes | No | Yes |
| WebSphere Application Server integration | Single cell only | Multiple cells | Cell independent |
| Java Standard Edition support | No | Yes | Yes |
| Monitoring and statistics | Yes | Yes | Yes |
| Security | Yes | Yes | Yes |

An eXtreme Scale distributed cache can store only entries whose key and value both implement the java.io.Serializable interface.
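A quick way to catch entries that cannot be stored is to check for the interface and then attempt a serialization round trip, because implementing `Serializable` is not sufficient when a non-transient field holds an object that is not serializable. The `CacheEntryCheck` class below is a hypothetical helper, not part of the WXS API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

/** Hypothetical helper for checking cache entries before they are stored in a distributed cache. */
final class CacheEntryCheck {

    private CacheEntryCheck() {}

    /** True only when both the key and the value implement java.io.Serializable. */
    static boolean implementsSerializable(Object key, Object value) {
        return key instanceof Serializable && value instanceof Serializable;
    }

    /**
     * Serializes and deserializes an object. This fails, for example, when a
     * non-transient field holds an object that is not serializable.
     */
    static Object roundTrip(Object object) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(object);
            }
            try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalArgumentException("Object cannot be serialized: " + object, e);
        }
    }
}
```

For example, an `ArrayList` implements `Serializable`, so it passes the interface check, but the round trip fails if the list contains a plain `Object`.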

Topology types

Deprecated: The local, embedded, and embedded-partitioned topology types are deprecated.

A dynamic cache service created with WXS as the provider can be deployed in a remote topology.

Remote topology

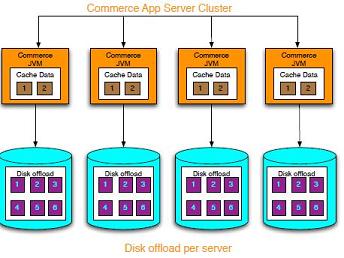

The remote topology eliminates the need for a disk cache. All of the cache data is stored outside of WebSphere Application Server processes.

WXS supports standalone container processes for cache data. These container processes have a lower overhead than a WebSphere Application Server process and are also not limited to using a particular JVM. For example, the data for a dynamic cache service being accessed by a 32-bit WebSphere Application Server process could be located in a WXS container process running on a 64-bit JVM. This allows users to use the increased memory capacity of 64-bit processes for caching, without incurring the additional overhead of 64-bit for application server processes. The remote topology is shown in the following image:

Dynamic cache engine and eXtreme Scale functional differences

Users should not notice a functional difference between the two caches, except that WXS-backed caches do not support disk offload or the statistics and operations related to the size of the cache in memory.

There is no appreciable difference in the results returned by most Dynamic Cache API calls, whether the customer uses the default dynamic cache provider or the WXS cache provider. However, for some operations, the behavior of the dynamic cache engine cannot be emulated with eXtreme Scale.

Dynamic cache statistics

Statistical data for a WXS dynamic cache can be retrieved using the WXS monitoring tooling.

MBean calls

The WXS dynamic cache provider does not support disk caching. Any MBean calls relating to disk caching will not work.

Dynamic cache replication policy mapping

The remote topology of the WXS dynamic cache provider supports a replication policy that most closely matches the SHARED_PULL and SHARED_PUSH_PULL policies (using the terminology of the default WebSphere Application Server dynamic cache provider). In a WXS dynamic cache, the distributed state of the cache is completely consistent between all the servers.

Global index invalidation

Use a global index to improve invalidation efficiency in large partitioned environments, for example, environments with more than 40 partitions. Without the global index feature, dynamic cache template and dependency invalidation processing must send remote agent requests to all partitions, which results in slower performance. When a global index is configured, invalidation agents are sent only to the partitions that contain cache entries related to the template ID or dependency ID. The potential performance improvement is greater in environments with larger numbers of partitions. We can configure a global index by using the Dependency ID and Template ID indexes, which are available in the example dynamic cache objectGrid descriptor XML files.
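The idea behind the global index can be sketched as a map from each dependency or template ID to the set of partitions that hold related entries. The following Java code is a minimal sketch; `GlobalInvalidationIndex` and its methods are hypothetical and do not reflect the index definitions in the example descriptor files.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical global index: dependency or template ID -> partitions that hold related entries. */
final class GlobalInvalidationIndex {
    private final int partitionCount;
    private final Map<String, Set<Integer>> partitionsById = new HashMap<>();

    GlobalInvalidationIndex(int partitionCount) { this.partitionCount = partitionCount; }

    /** Records that an entry tagged with the ID was stored in the given partition. */
    void recordEntry(String id, int partition) {
        if (partition < 0 || partition >= partitionCount) {
            throw new IllegalArgumentException("No such partition: " + partition);
        }
        partitionsById.computeIfAbsent(id, key -> new TreeSet<>()).add(partition);
    }

    /** With the index: only partitions that hold entries for the ID receive an invalidation agent. */
    Set<Integer> invalidationTargets(String id) {
        return new TreeSet<>(partitionsById.getOrDefault(id, Set.of()));
    }

    /** Without the index: every partition must receive an invalidation agent. */
    Set<Integer> invalidationTargetsWithoutIndex() {
        Set<Integer> all = new TreeSet<>();
        for (int p = 0; p < partitionCount; p++) {
            all.add(p);
        }
        return all;
    }
}
```

With 50 partitions, an ID recorded only in partitions 3 and 17 needs two invalidation agents instead of 50.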

Security

When a cache is running in a remote topology, a standalone eXtreme Scale client can connect to the cache and affect the contents of the dynamic cache instance. It is therefore important that the WXS servers containing the dynamic cache instances reside in an internal network, behind what is typically known as the network DMZ.

Near cache

A dynamic cache instance can be configured to create and maintain a near cache, which resides locally within the application server JVM and contains a subset of the entries in the remote dynamic cache instance. We can configure a near cache instance by using the dynacache-nearCache-ObjectGrid.xml file.
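The near cache can be pictured as a bounded local map in front of the remote cache. The following is a minimal Java sketch, assuming a least recently used (LRU) eviction policy for the local subset. `NearCache` is hypothetical, the remote cache is simulated by an ordinary `Map`, and the sketch does not keep local copies consistent with later updates to the remote instance.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical near cache: a bounded LRU subset of a remote cache, held in the application JVM. */
final class NearCache<K, V> {
    private final Map<K, V> remote; // stand-in for the remote dynamic cache instance
    private final Map<K, V> local;  // the near cache inside the application server JVM
    private int remoteReads = 0;

    NearCache(Map<K, V> remote, int maxLocalEntries) {
        this.remote = remote;
        // Access-ordered LinkedHashMap: once full, the least recently used entry is evicted.
        this.local = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > maxLocalEntries;
            }
        };
    }

    V get(K key) {
        V value = local.get(key);
        if (value == null) {
            remoteReads++; // local miss: fetch from the remote cache
            value = remote.get(key);
            if (value != null) {
                local.put(key, value);
            }
        }
        return value;
    }

    int remoteReads() { return remoteReads; }

    int localSize() { return local.size(); }
}
```

Repeated reads of a hot key are served locally, while the size bound keeps the near cache a subset of the remote instance.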

Database integration

WXS can be used to front a traditional database and eliminate read activity that is normally pushed to the database. A coherent cache can be used with an application directly, or indirectly through an object relational mapper. The coherent cache can then offload reads from the database or back end. In a slightly more complex scenario, such as transactional access to a data set where only some of the data requires traditional persistence guarantees, filtering can be used to offload even write transactions.
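The read-offloading pattern can be sketched as a read-through cache: a read is served from the cache when possible and reaches the database only on a miss. This minimal Java sketch is an illustration only. `ReadThroughCache` is hypothetical and the database is simulated by a function. In WXS itself, back-end integration is done through Loader plug-ins, which this sketch does not use.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical read-through cache that fronts a database lookup. */
final class ReadThroughCache<K, V> {
    private final Map<K, V> cache = new HashMap<>();
    private final Function<K, V> database; // stand-in for a database query or an ORM lookup
    private int databaseReads = 0;

    ReadThroughCache(Function<K, V> database) { this.database = database; }

    V get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached; // served from the cache: no database read
        }
        databaseReads++; // miss: one read reaches the database
        V loaded = database.apply(key);
        if (loaded != null) {
            cache.put(key, loaded);
        }
        return loaded;
    }

    int databaseReads() { return databaseReads; }
}
```

After the first read of each key, later reads of that key no longer reach the database.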