6.7.1 Primary and backup servers

When the plugin-cfg.xml file is generated, all servers are initially listed under the PrimaryServers tag, an ordered list of servers to which the plug-in can send requests.

The optional BackupServers tag is an ordered list of servers to which requests are sent only if all servers in the PrimaryServers list are unavailable.

Within the PrimaryServers list, the plug-in routes traffic according to server weight, session affinity, or both. When all servers in the PrimaryServers list are unavailable, the plug-in routes traffic to the first available server in the BackupServers list.

If the first server in the BackupServers list is not available, the plug-in tries the next server in that list, and so on, until no servers are left in the list or until a request is sent successfully and a response is received from an appserver. Weighted round robin routing is not performed for the servers in the BackupServers list.
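The routing order described above can be sketched as follows. This is a minimal illustration, not the plug-in's implementation: the function name `pick_server` and the `is_up` callback are hypothetical, and the sketch simplifies primary selection to "first available in list order", ignoring server weight and session affinity.

```python
from typing import Callable, Optional, Sequence


def pick_server(
    primaries: Sequence[str],
    backups: Sequence[str],
    is_up: Callable[[str], bool],
) -> Optional[str]:
    """Return the server to try, or None if every server is unavailable.

    Hypothetical helper: backups are consulted only when no primary is
    available, and always strictly in list order.
    """
    # Simplification (assumption): the real plug-in applies weights and
    # session affinity among primaries; here the first available one wins.
    for name in primaries:
        if is_up(name):
            return name
    # No primary is available: walk the backup list in order, no weighting.
    for name in backups:
        if is_up(name):
            return name
    return None


if __name__ == "__main__":
    down = {"app2Node_Web2a", "app1Node_Web1"}  # both primaries unavailable
    chosen = pick_server(
        ["app2Node_Web2a", "app1Node_Web1"],
        ["app2Node_Web2b"],
        lambda name: name not in down,
    )
    print(chosen)
```

With both primaries unavailable, the only candidate left is the single backup server; if the backup were down too, the function would return `None`.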

Note that the primary and backup server lists are used only when partition ID logic is not in use. When partition ID comes into play, primary/backup server logic no longer applies. Partition ID logic is used when the session manager instances in all the domains are set to a peer-to-peer cluster topology.

To learn about partition ID, please see Session affinity and Partition ID.

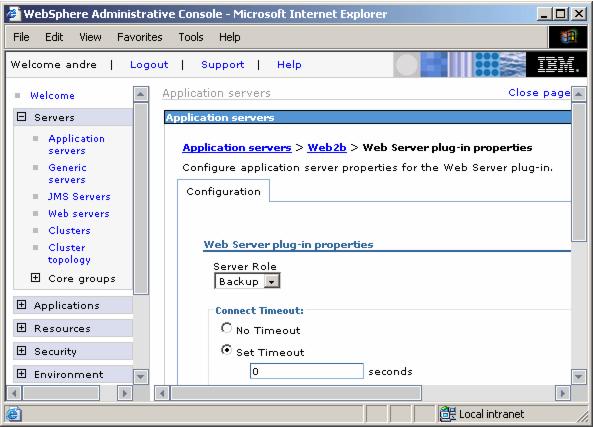

To change an appserver's role in a cluster, select...

Servers | Application servers | <AppServer_Name> | Web Server plug-in properties

...and select the appropriate value from the Server Role pull-down menu. For example, change from Primary to Backup.

Figure 6-26 Setting up a backup server

All appserver details in the plugin-cfg.xml file are listed under the ServerCluster tag. This includes the PrimaryServers and BackupServers tags, as illustrated in Example 6-5.

Example 6-5 ServerCluster element depicting primary and backup servers

...

    <ServerCluster CloneSeparatorChange="false"
                   LoadBalance="Round Robin"
                   Name="WEBcluster" PostSizeLimit="-1"
                   RemoveSpecialHeaders="true"
                   RetryInterval="120">
        <Server CloneID="vve2m4fh"
                ConnectTimeout="5"
                ExtendedHandshake="false"
                LoadBalanceWeight="2"
                MaxConnections="-1"
                Name="app1Node_Web1"
                WaitForContinue="false">
            <Transport Hostname="app1.itso.ibm.com"
                       Port="9080"
                       Protocol="http"/>
        </Server>
        <Server CloneID="vv8kelbq"
                ConnectTimeout="5"
                ExtendedHandshake="false"
                LoadBalanceWeight="2"
                MaxConnections="-1"
                Name="app2Node_Web2a"
                WaitForContinue="false">
            <Transport Hostname="app2.itso.ibm.com"
                       Port="9080"
                       Protocol="http"/>
        </Server>
        <Server CloneID="vv8keohs"
                ConnectTimeout="5"
                ExtendedHandshake="false"
                LoadBalanceWeight="2"
                MaxConnections="-1"
                Name="app2Node_Web2b"
                WaitForContinue="false">
            <Transport Hostname="app2.itso.ibm.com"
                       Port="9081"
                       Protocol="http"/>
        </Server>
        <PrimaryServers>
            <Server Name="app2Node_Web2a"/>
            <Server Name="app1Node_Web1"/>
        </PrimaryServers>
        <BackupServers>
            <Server Name="app2Node_Web2b"/>
        </BackupServers>
    </ServerCluster>

...
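To check which role each server has without reading the file by eye, the PrimaryServers and BackupServers lists can be extracted with a short script. The following is a sketch only: the helper name `server_roles` is hypothetical, and `CLUSTER_XML` is an abbreviated copy of Example 6-5 (most attributes and the Transport elements are omitted) rather than a complete plugin-cfg.xml.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the ServerCluster element from Example 6-5.
CLUSTER_XML = """
<ServerCluster LoadBalance="Round Robin" Name="WEBcluster" RetryInterval="120">
  <Server Name="app1Node_Web1" LoadBalanceWeight="2"/>
  <Server Name="app2Node_Web2a" LoadBalanceWeight="2"/>
  <Server Name="app2Node_Web2b" LoadBalanceWeight="2"/>
  <PrimaryServers>
    <Server Name="app2Node_Web2a"/>
    <Server Name="app1Node_Web1"/>
  </PrimaryServers>
  <BackupServers>
    <Server Name="app2Node_Web2b"/>
  </BackupServers>
</ServerCluster>
"""


def server_roles(xml_text):
    """Return (primaries, backups) as lists of server names, in file order."""
    cluster = ET.fromstring(xml_text.strip())
    primaries = [s.get("Name") for s in cluster.findall("./PrimaryServers/Server")]
    # BackupServers is optional, so this list may legitimately be empty.
    backups = [s.get("Name") for s in cluster.findall("./BackupServers/Server")]
    return primaries, backups


if __name__ == "__main__":
    primaries, backups = server_roles(CLUSTER_XML)
    print("Primary:", primaries)
    print("Backup: ", backups)
```

Because both lists are ordered, the script preserves document order rather than sorting the names.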

As mentioned before, the backup server list is only used when all primary servers are down. Figure 6-27 shows this process in detail.

Figure 6-27 Primary and Backup server selection process

1. The request comes in and is sent to the plug-in.

2. The plug-in chooses the next primary server and checks whether the cluster member has been marked as down and, if so, the state of its retry timer. If the member has been marked as down and its retry timer is not 0, the plug-in continues to the next cluster member.

3. A stream is opened to the appserver (if not already open) and a connection is attempted.

4. The connection to the cluster member fails. When a connection to a cluster member has failed, the process begins again.

5. The plug-in repeats steps 2, 3, and 4 until all primary servers are marked as down.

6. When all primary servers are marked as down, the plug-in repeats steps 2 and 3 with the backup server list.

7. Steps 2 and 3 complete with a successful connection to a backup server. Data is sent to the Web container and the response is returned to the user. If the connection is unsuccessful, the plug-in goes through all the primary servers again and then through the servers marked as down in the backup server list until it reaches a backup server that is not marked as down.

8. If another request comes in and the retry timer of one of the primary servers is now at 0, the plug-in tries to connect to it.
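The interaction between the marked-down state and the retry timer in the steps above can be sketched in a few lines. This is an illustrative model under stated assumptions, not the plug-in's code: the `Member` class, the `route` function, and the `connect` callback are hypothetical; the retry interval is taken to be in seconds, with 120 borrowed from the RetryInterval value in Example 6-5; and primaries are tried in list order, ignoring weights and session affinity.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Member:
    name: str
    marked_down_at: Optional[float] = None  # None means not marked as down

    def eligible(self, now: float, retry_interval: float) -> bool:
        # Skip a member while it is marked down and its retry timer
        # has not yet run down to 0.
        if self.marked_down_at is None:
            return True
        return now - self.marked_down_at >= retry_interval


def route(
    primaries: List[Member],
    backups: List[Member],
    connect: Callable[[str], bool],
    now: float,
    retry_interval: float = 120.0,  # assumed to be seconds
) -> Optional[str]:
    """Try primaries first, then backups; return the server that answered."""
    for group in (primaries, backups):
        for member in group:
            if not member.eligible(now, retry_interval):
                continue
            if connect(member.name):
                member.marked_down_at = None  # healthy again
                return member.name
            member.marked_down_at = now  # failed: mark down, restart timer
    return None


if __name__ == "__main__":
    primaries = [Member("app2Node_Web2a"), Member("app1Node_Web1")]
    backups = [Member("app2Node_Web2b")]
    alive = {"app2Node_Web2b"}

    def connect(name: str) -> bool:
        return name in alive

    print(route(primaries, backups, connect, now=0.0))    # both primaries fail
    alive.add("app1Node_Web1")                            # a primary recovers
    print(route(primaries, backups, connect, now=60.0))   # timers still running
    print(route(primaries, backups, connect, now=120.0))  # timers expired
```

In this model a recovered primary is not used again until its retry timer expires, which corresponds to step 8: the backup keeps serving requests at 60 seconds even though a primary is already back.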