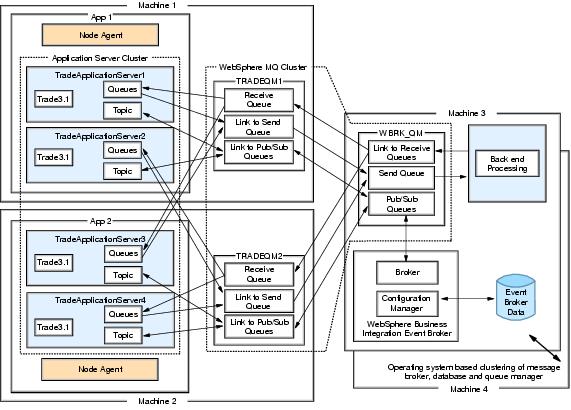

The chosen WebSphere MQ clustering topology

Figure 15-16 Example topology for WebSphere MQ and Event Broker for Trade3 scenario

This topology has been chosen because:

- It provides workload management.

There are four servers in the appserver cluster that have workload distributed to them, both from the Web-facing side and from the messaging side. The queue managers also have their workload managed when requests are returned from the back-end system. Finally, although not shown on the diagram, the broker could be workload managed as well.

- It has been designed for high availability and reliability of WAS.

Four appservers are used instead of two to guard against the failure of an application server. In the previous example (Figure 15-14), when an application server failed, messages were no longer processed on its JMS server. This problem becomes even more important in this topology. In Figure 15-14, Trade3Server1 is the only server placing messages on its JMS server. Should Trade3Server1 fail, no more messages are delivered to app1's JMS server, so the number of messages waiting to be processed depends on what Trade3Server1 was doing when it stopped.

In this topology, messages arriving from the back end are workload managed across all available queue managers. If TRADEQM1 and TRADEQM2 are both up, response messages continue to arrive at both. If Trade3Server1 were stopped and Trade3Server2 did not exist, messages would continue to arrive at TRADEQM1 but would not get processed.

This is overcome in this example by having one appserver on each node pointing at the remote queue manager. Should Trade3Server1 stop, any arriving messages will be picked up by the MDB running on Trade3Server2.

There is still the issue that, should TRADEQM1 or TRADEQM2 stop, two of the four appservers are unable to process the order requests that continue to come in from the front end. This failure to send messages can be avoided by changing the Trade3 code to try two queue connection factories (QCFs), a primary and a backup. If the first QCF fails to respond when a message is sent, the second can be used. This code change is not made for this example.
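The primary-and-backup idea can be illustrated with a minimal sketch. It is not Trade3 code and does not use the JMS API directly: `MessageSender` and `FailoverSender` are hypothetical names introduced here, and each `MessageSender` is assumed to wrap the work of obtaining a connection from one QCF and sending one message through it.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical abstraction: send one message through one QCF. */
interface MessageSender {
    void send(String message) throws Exception;
}

/** Tries each sender in order (primary first) until one accepts the message. */
class FailoverSender {
    private final List<MessageSender> senders;

    FailoverSender(List<MessageSender> senders) {
        if (senders.isEmpty()) {
            throw new IllegalArgumentException("at least one sender is required");
        }
        this.senders = new ArrayList<>(senders);
    }

    /**
     * Sends the message and returns the index of the sender that accepted it
     * (0 = primary, 1 = backup). Throws IllegalStateException if all fail.
     */
    int send(String message) {
        Exception last = null;
        for (int i = 0; i < senders.size(); i++) {
            try {
                senders.get(i).send(message);
                return i;
            } catch (Exception e) {
                // Remember the failure and fall through to the next sender.
                last = e;
            }
        }
        throw new IllegalStateException("all queue connection factories failed", last);
    }
}
```

In a real application each sender would typically be built around a QCF looked up from JNDI, one pointing at TRADEQM1 and one at TRADEQM2. Whether retrying on a different queue manager is acceptable also depends on the transactional requirements of the application, which this sketch does not address.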

- It has been designed for high availability and reliability of WebSphere MQ.

In this topology, the WebSphere MQ infrastructure uses clustering to provide failover and workload management. This prevents the queue managers from becoming a single point of failure for messages arriving from the back-end system. See Initial WebSphere MQ configuration.

- It has been designed for high availability and reliability of WebSphere Business Integration Event Broker.

WebSphere Business Integration Event Broker and its supporting software, a WebSphere MQ queue manager and DB2, are made highly available through the use of operating system level clustering software, for example HACMP for AIX. The setup of these products on various types of clustering software is discussed in Chapter 11, WebSphere Embedded JMS server and WebSphere MQ high availability, and is therefore not covered here.

In this topology there is a reliance on the queue managers TRADEQM1 and TRADEQM2 being available for pub/sub messages to be delivered. The appservers use the two local queue managers as routing mechanisms to reach the message broker.

- Through use of durable subscriptions, published messages are delivered regardless of the state of the client at publish time.

If the MDB in Trade3 continues to use a non-durable subscription, as it is currently set, then the subscription to the broker is tied to the connection the MDB listener has acquired to its local queue manager. If that connection is broken, the MDB loses its subscription. As this system needs to be reliable and recoverable from failure, a durable subscription needs to be used and the infrastructure set up accordingly. A durable subscription outlives any failure to communicate with the client, storing undeliverable messages until the client comes back online.
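The difference between the two subscription types can be shown with a toy in-memory model. This is only a sketch of the semantics described above: `ToyBroker` and its methods are hypothetical and are not the Event Broker or JMS API.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of a pub/sub broker; illustrates durable vs non-durable only. */
class ToyBroker {
    /** State the broker keeps for one named subscription. */
    private static final class Subscription {
        final boolean durable;
        final Deque<String> pending = new ArrayDeque<>();

        Subscription(boolean durable) {
            this.durable = durable;
        }
    }

    private final Map<String, Subscription> subscriptions = new HashMap<>();

    void subscribe(String name, boolean durable) {
        subscriptions.put(name, new Subscription(durable));
    }

    /** Connection lost: a non-durable subscription is dropped, a durable one is kept. */
    void disconnect(String name) {
        Subscription s = subscriptions.get(name);
        if (s != null && !s.durable) {
            subscriptions.remove(name);
        }
    }

    /** Stores the message for every subscription the broker still knows about. */
    void publish(String message) {
        for (Subscription s : subscriptions.values()) {
            s.pending.add(message);
        }
    }

    /** Client is back online: returns and clears whatever was stored for it. */
    List<String> receive(String name) {
        Subscription s = subscriptions.get(name);
        if (s == null) {
            return new ArrayList<>();
        }
        List<String> out = new ArrayList<>(s.pending);
        s.pending.clear();
        return out;
    }
}
```

With this model, a message published while both clients are disconnected is still returned to the durable subscriber when it calls `receive`, whereas the non-durable subscriber gets nothing and would have to subscribe again.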

- It provides a topology that fits the functional requirements and allows communication with other WebSphere MQ queue managers outside of the WAS cell.

As with the first example, this is not the perfect topology for all applications in all cases.

The back-end application is included in the additional materials repository of this redbook. It is a very simple EAR file called TradeRedirector.ear that contains one MDB. This MDB listens for messages arriving on one queue, picks them up, and puts them back on the response queue. Basic instructions for installing it are included in the EAR file package.

This topology involves more products than the first example. The following steps for WebSphere MQ and WebSphere Business Integration Event Broker have been designed to be the easiest way of getting a WAS-skilled person up and running. However, they might not be the most efficient way to complete the tasks needed.
